Stories on the Late 20th-Century Rise of Game Theory

Recommended publications

Uniqueness and Symmetry in Bargaining Theories of Justice

Philos Stud DOI 10.1007/s11098-013-0121-y Uniqueness and symmetry in bargaining theories of justice John Thrasher © Springer Science+Business Media Dordrecht 2013 Abstract For contractarians, justice is the result of a rational bargain. The goal is to show that the rules of justice are consistent with rationality. The two most important bargaining theories of justice are David Gauthier’s and those that use Nash’s bargaining solution. I argue that both of these approaches are fatally undermined by their reliance on a symmetry condition. Symmetry is a substantive constraint, not an implication of rationality. I argue that using symmetry to generate uniqueness undermines the goal of bargaining theories of justice. Keywords David Gauthier · John Nash · John Harsanyi · Thomas Schelling · Bargaining · Symmetry Throughout the last century and into this one, many philosophers modeled justice as a bargaining problem between rational agents. Even those who did not explicitly use a bargaining problem as their model, most notably Rawls, incorporated many of the concepts and techniques from bargaining theories into their understanding of what a theory of justice should look like. This allowed them to use the powerful tools of game theory to justify their various theories of distributive justice. The debates between partisans of different theories of distributive justice have tended to be over the respective benefits of each particular bargaining solution and whether or not the solution to the bargaining problem matches our pre-theoretical intuitions about justice. There is, however, a more serious problem that has effectively been ignored since economists originally J. -

1947–1967 a Conversation with Howard Raiffa

Statistical Science 2008, Vol. 23, No. 1, 136–149 DOI: 10.1214/088342307000000104 © Institute of Mathematical Statistics, 2008 The Early Statistical Years: 1947–1967 A Conversation with Howard Raiffa Stephen E. Fienberg Abstract. Howard Raiffa earned his bachelor’s degree in mathematics, his master’s degree in statistics and his Ph.D. in mathematics at the University of Michigan. Since 1957, Raiffa has been a member of the faculty at Harvard University, where he is now the Frank P. Ramsey Chair in Managerial Economics (Emeritus) in the Graduate School of Business Administration and the Kennedy School of Government. A pioneer in the creation of the field known as decision analysis, his research interests span statistical decision theory, game theory, behavioral decision theory, risk analysis and negotiation analysis. Raiffa has supervised more than 90 doctoral dissertations and written 11 books. His new book is Negotiation Analysis: The Science and Art of Collaborative Decision Making. Another book, Smart Choices, co-authored with his former doctoral students John Hammond and Ralph Keeney, was the CPR (formerly known as the Center for Public Resources) Institute for Dispute Resolution Book of the Year in 1998. Raiffa helped to create the International Institute for Applied Systems Analysis and he later became its first Director, serving in that capacity from 1972 to 1975. His many honors and awards include the Distinguished Contribution Award from the Society of Risk Analysis; the Frank P. Ramsey Medal for outstanding contributions to the field of decision analysis from the Operations Research Society of America; and the Melamed Prize from the University of Chicago Business School for The Art and Science of Negotiation. -

Matchings and Games on Networks

Matchings and games on networks by Linda Farczadi A thesis presented to the University of Waterloo in fulfilment of the thesis requirement for the degree of Doctor of Philosophy in Combinatorics and Optimization Waterloo, Ontario, Canada, 2015 © Linda Farczadi 2015 Author's Declaration I hereby declare that I am the sole author of this thesis. This is a true copy of the thesis, including any required final revisions, as accepted by my examiners. I understand that my thesis may be made electronically available to the public. Abstract We investigate computational aspects of popular solution concepts for different models of network games. In chapter 3 we study balanced solutions for network bargaining games with general capacities, where agents can participate in a fixed but arbitrary number of contracts. We fully characterize the existence of balanced solutions and provide the first polynomial time algorithm for their computation. Our methods use a new idea of reducing an instance with general capacities to an instance with unit capacities defined on an auxiliary graph. This chapter is an extended version of the conference paper [32]. In chapter 4 we propose a generalization of the classical stable marriage problem. In our model the preferences on one side of the partition are given in terms of arbitrary binary relations, that need not be transitive nor acyclic. This generalization is practically well-motivated, and as we show, encompasses the well studied hard variant of stable marriage where preferences are allowed to have ties and to be incomplete. Our main result shows that deciding the existence of a stable matching in our model is NP-complete. -

A Brief Appraisal of Behavioral Economists' Plea for Light Paternalism

Brazilian Journal of Political Economy, vol 32, nº 3 (128), pp 445-458, July-September/2012 Freedom of choice and bounded rationality: a brief appraisal of behavioral economists’ plea for light paternalism ROBERTA MURAMATSU PATRÍCIA FONSECA* Behavioral economics has addressed interesting positive and normative questions underlying the standard rational choice theory. More recently, it suggests that, in a real world of boundedly rational agents, economists could help people to improve the quality of their choices without any harm to autonomy and freedom of choice. This paper aims to scrutinize available arguments for and against current proposals of light paternalistic interventions mainly in the domain of intertemporal choice. It argues that incorporating the notion of bounded rationality in economic analysis and empirical findings of cognitive biases and self-control problems cannot make an indisputable case for paternalism. Keywords: freedom; choice; bounded rationality; paternalism; behavioral economics. JEL Classification: B40; B41; D11; D91. So the immediate problem in Libertarian Paternalism is the fatuity of its declared motivation. Very few libertarians have maintained what Thaler and Sunstein suggest they maintain, and indeed many of the leading theorists have worked with ideas in line with what Thaler and Sunstein have to say about man’s nature. Thaler and Sunstein are forcing an open door. Daniel Klein, Statist Quo Bias, Economic Journal Watch, 2004 * Professor (PhD) at Universidade Presbiteriana Mackenzie and Insper, e-mail: rmuramatsu@uol.com.br; independent researcher in Behavioral Economics and Economic Psychology, e-mail [email protected]. Submitted: 23 February 2011. Approved: 12 March 2011.
INTRODUCTION There is a long-standing methodological tradition stating that economics is a positive science that remains silent about policy issues and the complex determinants of human ends, values and motives. -

John Von Neumann Between Physics and Economics: a Methodological Note

Review of Economic Analysis 5 (2013) 177–189 1973-3909/2013177 John von Neumann between Physics and Economics: A methodological note LUCA LAMBERTINI∗† University of Bologna A methodological discussion is proposed, aiming at illustrating an analogy between game theory in particular (and mathematical economics in general) and quantum mechanics. This analogy relies on the equivalence of the two fundamental operators employed in the two fields, namely, the expected value in economics and the density matrix in quantum physics. I conjecture that this coincidence can be traced back to the contributions of von Neumann in both disciplines. Keywords: expected value, density matrix, uncertainty, quantum games JEL Classifications: B25, B41, C70 1 Introduction Over the last twenty years, a growing amount of attention has been devoted to the history of game theory. Among other reasons, this interest can largely be justified on the basis of the Nobel prize to John Nash, John Harsanyi and Reinhard Selten in 1994, to Robert Aumann and Thomas Schelling in 2005 and to Leonid Hurwicz, Eric Maskin and Roger Myerson in 2007 (for mechanism design).1 However, the literature dealing with the history of game theory mainly adopts an inner perspective, i.e., an angle that allows us to reconstruct the developments of this sub-discipline under the general headings of economics. My aim is different, to the extent that I intend to propose an interpretation of the formal relationships between game theory (and economics) and the hard sciences. Strictly speaking, this view is not new, as the idea that von Neumann’s interest in mathematics, logic and quantum mechanics is critical to our understanding of the genesis of ∗I would like to thank Jurek Konieczny (Editor), an anonymous referee, Corrado Benassi, Ennio Cavazzuti, George Leitmann, Massimo Marinacci, Stephen Martin, Manuela Mosca and Arsen Palestini for insightful comments and discussion. -

Introduction to Game Theory

Economic game theory: a learner centred introduction Author: Dr Peter Kührt Introduction to game theory Game theory is now an integral part of business and politics, showing how decisively it has shaped modern notions of strategy. Consultants use it for projects; managers swot up on it to make smarter decisions. The crucial aim of mathematical game theory is to characterise and establish rational decisions for conflict and cooperation situations. The problem with it is that none of the actors know the plans of the other “players” or how they will make the appropriate decisions. This makes it unclear to each player how their own specific decisions will impact on the plan of action (strategy). However, you can think about the situation from the perspective of the other players to build an expectation of what they are going to do. Game theory therefore makes it possible to illustrate social conflict situations, which are called “games” in game theory, and solve them mathematically on the basis of probabilities. It is assumed that all participants behave “rationally”. Then you can assign a value and a probability to each decision option, which ultimately allows a computational solution in terms of “benefit or profit optimisation”. However, people are not always strictly rational because seemingly “irrational” results also play a big role for them, e.g. gain in prestige, loss of face, traditions, preference for certain colours, etc. However, these “irrational” goals can also be measured with useful values so that “restricted rational behaviour” can result in computational results, even with these kinds of models. -
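
The idea of assigning a value and a probability to each decision option can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and does not come from the excerpt: the option names, the payoff numbers, and the helper names `expected_value` and `best_option` are hypothetical. It simply picks the option with the highest probability-weighted payoff, which is one simple reading of "benefit or profit optimisation".

```python
from typing import Dict, List, Tuple

# Each decision option is a list of (probability, payoff) pairs.
# All names and numbers here are hypothetical, chosen only for illustration.
Option = List[Tuple[float, float]]


def expected_value(outcomes: Option) -> float:
    """Return the probability-weighted sum of the payoffs of one option."""
    return sum(p * v for p, v in outcomes)


def best_option(options: Dict[str, Option]) -> str:
    """Return the name of the option with the highest expected value."""
    return max(options, key=lambda name: expected_value(options[name]))


options: Dict[str, Option] = {
    # Risky choice: equal chance of a gain of 100 or a loss of 20.
    "launch": [(0.5, 100.0), (0.5, -20.0)],
    # Safe choice: a certain gain of 30.
    "wait": [(1.0, 30.0)],
}

if __name__ == "__main__":
    for name, outcomes in options.items():
        print(name, expected_value(outcomes))
    print("best:", best_option(options))
```

A goal such as prestige or loss of face could be folded into the same sketch by adding its assumed value to the payoff of the relevant outcomes.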

A Network of Excellence in Economic Theory Prepared By

Foundations of Game Theoretic Understanding of Socio-Economic Phenomena; A Network of Excellence in Economic Theory Prepared by Myrna Wooders, Department of Economics, University of Warwick Gibbet Hill Road, Coventry, CV4 7AL, UK [email protected] Applicable Instrument: Network of Excellence Sub-Thematic Priority most relevant to the topic: 1.1.7 Citizens and Governance in a Knowledge-based society Other relevant Sub-Thematic Priorities: 1.1.7.i Knowledge-based society and social cohesion 1.1.7.ii Citizenship, democracy and new forms of governance Summary: This EoI is for a network in game theory and economic theory, including evolutionary approaches. While the proposal relates to applications of theory, the main motivation and thrust is in a network of excellence in pure theory. Like research in theoretical physics or on the human genome, fundamental game theory and economic theory are not primarily motivated by immediate applications or by current policy issues. Instead, they are motivated by scientific intellectual curiosity. Because this curiosity arises from a quest towards a deeper understanding of the world around us, it is anticipated, however, that there will be applications of great significance – recall that the multi-billion dollar outcomes of auctions of spectrum in the US were guided by game theory. In addition, fundamental scientific theory, because of its search for understanding that will have broad consequences and transcend national boundaries, is appropriately funded at the European Union level. The network aims to embrace the leading researchers in economic theory and game theory and strengthen Europe’s position as a leader in economic research. -

Study of the Evolution of Symbiosis at the Metabolic Level Using Models from Game Theory and Economics Martin Wannagat

Study of the evolution of symbiosis at the metabolic level using models from game theory and economics Martin Wannagat To cite this version: Martin Wannagat. Study of the evolution of symbiosis at the metabolic level using models from game theory and economics. Quantitative Methods [q-bio.QM]. Université de Lyon, 2016. English. NNT : 2016LYSE1094. tel-01394107v2 HAL Id: tel-01394107 https://hal.inria.fr/tel-01394107v2 Submitted on 22 Nov 2016 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Order no. NNT : xxx DOCTORAL THESIS OF THE UNIVERSITÉ DE LYON prepared at the Université Claude Bernard Lyon 1 École Doctorale ED01 E2M2 Doctoral specialty: Bioinformatics Defended publicly/in closed session on 04/07/2016 by: Martin Wannagat Study of the evolution of symbiosis at the metabolic level using models from game theory and economics Before the jury composed of: Last name First name, rank/title, institution/company, President; Bockmayr Alexander, Prof. Dr., Freie Universität Berlin, Reviewer; Jourdan Fabien, CR1, INRA Toulouse, Reviewer; Neves Ana Rute, Dr., Chr. Hansen A/S, Reviewer; Charles Hubert, Prof., INSA Lyon, Examiner; Andrade Ricardo, Dr., INRIA Rhône-Alpes, Examiner; Sagot Marie-France, DR, LBBE, INRIA Rhône-Alpes, Thesis supervisor; Stougie Leen, Prof., Vrije Universiteit, CWI Amsterdam, Thesis co-supervisor; Marchetti-Spaccamela Alberto, Prof., Sapienza Univ. -

Koopmans in the Soviet Union

Koopmans in the Soviet Union A travel report of the summer of 1965 Till Düppe1 December 2013 Abstract: Travelling is one of the oldest forms of knowledge production combining both discovery and contemplation. Tjalling C. Koopmans, research director of the Cowles Foundation of Research in Economics, the leading U.S. center for mathematical economics, was the first U.S. economist after World War II who, in the summer of 1965, travelled to the Soviet Union for an official visit of the Central Economics and Mathematics Institute of the Soviet Academy of Sciences. Koopmans left with the hope to learn from the experiences of Soviet economists in applying linear programming to economic planning. Would his own theories, as discovered independently by Leonid V. Kantorovich, help increase allocative efficiency in a socialist economy? Koopmans even might have envisioned a research community across the iron curtain. Yet he came home with the discovery that learning about Soviet mathematical economists might be more interesting than learning from them. On top of that, he found the Soviet scene trapped in the same deplorable situation he knew all too well from home: that mathematicians are the better economists. Key-Words: mathematical economics, linear programming, Soviet economic planning, Cold War, Central Economics and Mathematics Institute, Tjalling C. Koopmans, Leonid V. Kantorovich. Word-Count: 11.000 1 Assistant Professor, Department of Economics, Université du Québec à Montréal, Pavillon des Sciences de la gestion, 315, Rue Sainte-Catherine Est, Montréal (Québec), H2X 3X2, Canada, e-mail: [email protected]. A former version has been presented at the conference “Social and human sciences on both sides of the ‘iron curtain’”, October 17-19, 2013, in Moscow. -

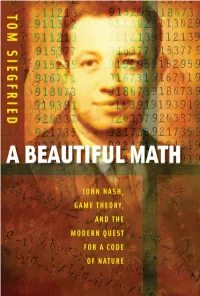

A Beautiful Math : John Nash, Game Theory, and the Modern Quest for a Code of Nature / Tom Siegfried

A BEAUTIFUL MATH JOHN NASH, GAME THEORY, AND THE MODERN QUEST FOR A CODE OF NATURE TOM SIEGFRIED JOSEPH HENRY PRESS Washington, D.C. Joseph Henry Press • 500 Fifth Street, NW • Washington, DC 20001 The Joseph Henry Press, an imprint of the National Academies Press, was created with the goal of making books on science, technology, and health more widely available to professionals and the public. Joseph Henry was one of the founders of the National Academy of Sciences and a leader in early American science. Any opinions, findings, conclusions, or recommendations expressed in this volume are those of the author and do not necessarily reflect the views of the National Academy of Sciences or its affiliated institutions. Library of Congress Cataloging-in-Publication Data Siegfried, Tom, 1950- A beautiful math : John Nash, game theory, and the modern quest for a code of nature / Tom Siegfried. — 1st ed. p. cm. Includes bibliographical references and index. ISBN 0-309-10192-1 (hardback) — ISBN 0-309-65928-0 (pdfs) 1. Game theory. I. Title. QA269.S574 2006 519.3—dc22 2006012394 Copyright 2006 by Tom Siegfried. All rights reserved. Printed in the United States of America. Preface Shortly after 9/11, a Russian scientist named Dmitri Gusev proposed an explanation for the origin of the name Al Qaeda. He suggested that the terrorist organization took its name from Isaac Asimov’s famous 1950s science fiction novels known as the Foundation Trilogy. After all, he reasoned, the Arabic word “qaeda” means something like “base” or “foundation.” And the first novel in Asimov’s trilogy, Foundation, apparently was titled “al-Qaida” in an Arabic translation. -

Nine Takes on Indeterminacy, with Special Emphasis on the Criminal Law

University of Pennsylvania Carey Law School Penn Law: Legal Scholarship Repository Faculty Scholarship at Penn Law 2015 Nine Takes on Indeterminacy, with Special Emphasis on the Criminal Law Leo Katz University of Pennsylvania Carey Law School Follow this and additional works at: https://scholarship.law.upenn.edu/faculty_scholarship Part of the Criminal Law Commons, Law and Philosophy Commons, and the Public Law and Legal Theory Commons Repository Citation Katz, Leo, "Nine Takes on Indeterminacy, with Special Emphasis on the Criminal Law" (2015). Faculty Scholarship at Penn Law. 1580. https://scholarship.law.upenn.edu/faculty_scholarship/1580 This Article is brought to you for free and open access by Penn Law: Legal Scholarship Repository. It has been accepted for inclusion in Faculty Scholarship at Penn Law by an authorized administrator of Penn Law: Legal Scholarship Repository. For more information, please contact [email protected]. ARTICLE NINE TAKES ON INDETERMINACY, WITH SPECIAL EMPHASIS ON THE CRIMINAL LAW LEO KATZ† Contents: INTRODUCTION; I. TAKE 1: THE COGNITIVE THERAPY PERSPECTIVE; II. TAKE 2: THE MORAL INSTINCT PERSPECTIVE; III. TAKE 3: THE CORE–PENUMBRA PERSPECTIVE; IV. TAKE 4: THE SOCIAL CHOICE PERSPECTIVE; V. TAKE 5: THE ANALOGY PERSPECTIVE; VI. TAKE 6: THE INCOMMENSURABILITY PERSPECTIVE; VII. TAKE 7: THE IRRATIONALITY-OF-DISAGREEMENT PERSPECTIVE; VIII. TAKE 8: THE SMALL WORLD/LARGE WORLD PERSPECTIVE; IX. TAKE 9: THE RESIDUALIST PERSPECTIVE; CONCLUSION. INTRODUCTION The claim that legal disputes have no determinate answer is an old one. The worry is one that assails every first-year law student at some point. -

Newsletter 56 November 1, 2012 1 Department of Economics

Department of Economics Newsletter 56 November 1, 2012 Autumn in the beautiful Swiss mountains Table of Contents: 1 Spotlight (1.1 Gottlieb Duttweiler Prize for Ernst Fehr; 1.2 Walras-Bowley Lecture by Ernst Fehr; 1.3 Fabrizio Zilibotti has been elected member of the Academia Europaea; 1.4 Zurich Graduate School of Economics); 2 Events (2.1 Departmental Research Seminar in Economics; 2.2 Guest Presentations; 2.3 Alumni Events); 3 Publications (3.1 In Economics; 3.2 Others; 3.3 Working Papers; 3.4 Mainstream Publications & Appearances); 4 People (4.1 Degrees; 4.2 Awards); 5 Miscellaneous (5.1 Congresses, Conferences & Selected Presentations; 5.2 Grants). 1 Spotlight 1.1 Gottlieb Duttweiler Prize for Ernst Fehr The 2013 Gottlieb Duttweiler Prize will be awarded to Ernst Fehr for his research on the role of fairness in markets, organizations, and individual decision making. The Gottlieb Duttweiler Prize is politically independent and is conferred at irregular intervals to persons who have served the common good with exceptional accomplishments. Previous holders of the prize include Kofi Annan and Václav Havel. The prize will be awarded on April 9, 2013; behavioral economist Dan Ariely will deliver the laudation. 1.2 Walras-Bowley Lecture by Ernst Fehr Ernst Fehr was invited to present the Walras-Bowley Lecture at the North American summer meeting of the Econometric Society in Evanston, Illinois, in June 2012. 1.3 Fabrizio Zilibotti has been elected member of the Academia Europaea Fabrizio Zilibotti has been elected member of the Academia Europaea.