Matchings and Games on Networks

Recommended publications

On Likely Solutions of the Stable Matching Problem with Unequal

ON LIKELY SOLUTIONS OF THE STABLE MATCHING PROBLEM WITH UNEQUAL NUMBERS OF MEN AND WOMEN

BORIS PITTEL

arXiv:1701.08900v2 [math.CO] 13 Feb 2017

Abstract. Following up a recent work by Ashlagi, Kanoria and Leshno, we study a stable matching problem with unequal numbers of men and women, and independent uniform preferences. The asymptotic formulas for the expected number of stable matchings, and for the probabilities of one point–concentration for the range of husbands’ total ranks and for the range of wives’ total ranks are obtained.

1. Introduction and main results

Consider the set of n1 men and n2 women facing a problem of selecting a marriage partner. For n1 = n2 = n, a marriage M is a matching (bijection) between the two sets. It is assumed that each man and each woman has his/her preferences for a marriage partner, with no ties allowed. That is, there are given n permutations σj of the men set and n permutations ρj of the women set, each σj (ρj resp.) ordering the women set (the men set resp.) according to the desirability degree of a woman (a man) as a marriage partner for man j (woman j). A marriage is called stable if there is no unmarried pair (a man, a woman) who prefer each other to their respective partners in the marriage. A classic theorem, due to Gale and Shapley [4], asserts that, given any system of preferences {ρj,σj}j∈[n], there exists at least one stable marriage M.

The proof of this theorem is algorithmic. A bijection is constructed in steps such that at each step every man not currently on hold makes a proposal to his best choice among women who haven’t rejected him before, and the chosen woman either provisionally puts the man on hold or rejects him, based on comparison of him to her current suitor if she has one already.
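The proposal procedure described in this excerpt can be sketched in a few lines of Python. This is a minimal illustration, not code from the paper: the function name `gale_shapley`, the dictionary-based preference format, and the variable names are all assumptions made here. The excerpt describes simultaneous rounds of proposals; the sketch processes one free man at a time, a common sequential variant that is known to produce the same men-optimal stable matching.

```python
from collections import deque


def gale_shapley(men_prefs, women_prefs):
    """Men-proposing deferred acceptance (hypothetical helper, for illustration).

    men_prefs[m]   -- list of women, most preferred first
    women_prefs[w] -- list of men, most preferred first
    Returns a dict mapping each matched woman to the man she holds.
    """
    # rank[w][m] is the position of man m in woman w's list (lower is better).
    rank = {w: {m: i for i, m in enumerate(prefs)}
            for w, prefs in women_prefs.items()}
    next_choice = {m: 0 for m in men_prefs}  # index of the next woman to try
    on_hold = {}                             # woman -> man currently on hold
    free = deque(men_prefs)                  # men not currently on hold

    while free:
        m = free.popleft()
        if next_choice[m] >= len(men_prefs[m]):
            continue  # rejected by every woman on his list: stays unmatched
        w = men_prefs[m][next_choice[m]]     # best woman who has not rejected m
        next_choice[m] += 1
        current = on_hold.get(w)
        if current is None:
            on_hold[w] = m                   # w had no suitor: hold m
        elif rank[w][m] < rank[w][current]:
            on_hold[w] = m                   # w prefers m: release her old suitor
            free.append(current)
        else:
            free.append(m)                   # w rejects m
    return on_hold


men = {"a": ["x", "y"], "b": ["x", "y"]}
women = {"x": ["b", "a"], "y": ["a", "b"]}
print(gale_shapley(men, women))  # {'x': 'b', 'y': 'a'}
```

With unequal numbers of men and women, the same loop simply leaves some men unmatched once they have exhausted their lists.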

On the Efficiency of Stable Matchings in Large Markets Sangmok Lee

On the Efficiency of Stable Matchings in Large Markets

SangMok Lee and Leeat Yariv

August 22, 2018

Abstract. Stability is often the goal for matching clearinghouses, such as those matching residents to hospitals, students to schools, etc. We study the wedge between stability and utilitarian efficiency in large one-to-one matching markets. We distinguish between stable matchings’ average efficiency (or, efficiency per-person), which is maximal asymptotically for a rich preference class, and their aggregate efficiency, which is not. The speed at which average efficiency of stable matchings converges to its optimum depends on the underlying preferences. Furthermore, for severely imbalanced markets governed by idiosyncratic preferences, or when preferences are sub-modular, stable outcomes may be average inefficient asymptotically. Our results can guide market designers who care about efficiency as to when standard stable mechanisms are desirable and when new mechanisms, or the availability of transfers, might be useful.

Keywords: Matching, Stability, Efficiency, Market Design.

SangMok Lee: Department of Economics, Washington University in St. Louis, http://www.sangmoklee.com

Leeat Yariv: Department of Economics, Princeton University, http://www.princeton.edu/yariv

We thank Federico Echenique, Matthew Elliott, Aytek Erdil, Mallesh Pai, Rakesh Vohra, and Dan Walton for very useful comments and suggestions. Euncheol Shin provided us with superb research assistance. Financial support from the National Science Foundation (SES 0963583) and the Gordon and Betty Moore Foundation (through grant 1158) is gratefully acknowledged.

1. Introduction

1.1. Overview. The design of most matching markets has focused predominantly on mechanisms in which only ordinal preferences are specified: the National Resident Matching Program (NRMP), clearinghouses for matching schools and students in New York City and Boston, and many others utilize algorithms that implement a stable matching corresponding to reported rank preferences.

Three Puzzles on Mathematics, Computation, and Games

P. I. C. M. – 2018 Rio de Janeiro, Vol. 1 (551–606)

THREE PUZZLES ON MATHEMATICS, COMPUTATION, AND GAMES

GIL KALAI

Abstract. In this lecture I will talk about three mathematical puzzles involving mathematics and computation that have preoccupied me over the years. The first puzzle is to understand the amazing success of the simplex algorithm for linear programming. The second puzzle is about errors made when votes are counted during elections. The third puzzle is: are quantum computers possible?

1 Introduction

The theory of computing and computer science as a whole are precious resources for mathematicians. They bring up new questions, profound new ideas, and new perspectives on classical mathematical objects, and serve as new areas for applications of mathematics and mathematical reasoning. In my lecture I will talk about three mathematical puzzles involving mathematics and computation (and, at times, other fields) that have preoccupied me over the years. The connection between mathematics and computing is especially strong in my field of combinatorics, and I believe that being able to personally experience the scientific developments described here over the past three decades may give my description some added value. For all three puzzles I will try to describe in some detail both the large picture at hand, and zoom in on topics related to my own work.

Puzzle 1: What can explain the success of the simplex algorithm? Linear programming is the problem of maximizing a linear function subject to a system of linear inequalities. The set of solutions to the linear inequalities is a convex polyhedron P.
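The definition of linear programming at the end of this excerpt can be written out in the usual matrix form. The symbols $A$, $b$, $c$, $x$, $d$ and $m$ are notation assumed here for illustration; only the polyhedron $P$ is named in the excerpt.

```latex
\[
\begin{aligned}
\text{maximize}\quad   & c^{\mathsf T} x \\
\text{subject to}\quad & A x \le b ,
\end{aligned}
\qquad
P = \{\, x \in \mathbb{R}^{d} : A x \le b \,\},
\]
where $A \in \mathbb{R}^{m \times d}$, $b \in \mathbb{R}^{m}$ and $c \in \mathbb{R}^{d}$.
```

Each row of $Ax \le b$ is one linear inequality, so $P$ is an intersection of $m$ half-spaces, which is why it is a convex polyhedron.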

John Von Neumann Between Physics and Economics: a Methodological Note

Review of Economic Analysis 5 (2013) 177–189, 1973-3909/2013177

John von Neumann between Physics and Economics: A methodological note

LUCA LAMBERTINI∗, University of Bologna

A methodological discussion is proposed, aiming at illustrating an analogy between game theory in particular (and mathematical economics in general) and quantum mechanics. This analogy relies on the equivalence of the two fundamental operators employed in the two fields, namely, the expected value in economics and the density matrix in quantum physics. I conjecture that this coincidence can be traced back to the contributions of von Neumann in both disciplines.

Keywords: expected value, density matrix, uncertainty, quantum games

JEL Classifications: B25, B41, C70

1 Introduction

Over the last twenty years, a growing amount of attention has been devoted to the history of game theory. Among other reasons, this interest can largely be justified on the basis of the Nobel prize to John Nash, John Harsanyi and Reinhard Selten in 1994, to Robert Aumann and Thomas Schelling in 2005 and to Leonid Hurwicz, Eric Maskin and Roger Myerson in 2007 (for mechanism design).

However, the literature dealing with the history of game theory mainly adopts an inner perspective, i.e., an angle that allows us to reconstruct the developments of this sub-discipline under the general headings of economics. My aim is different, to the extent that I intend to propose an interpretation of the formal relationships between game theory (and economics) and the hard sciences. Strictly speaking, this view is not new, as the idea that von Neumann’s interest in mathematics, logic and quantum mechanics is critical to our understanding of the genesis of

∗ I would like to thank Jurek Konieczny (Editor), an anonymous referee, Corrado Benassi, Ennio Cavazzuti, George Leitmann, Massimo Marinacci, Stephen Martin, Manuela Mosca and Arsen Palestini for insightful comments and discussion.

Koopmans in the Soviet Union

Koopmans in the Soviet Union

A travel report of the summer of 1965

Till Düppe [1]

December 2013

Abstract: Travelling is one of the oldest forms of knowledge production combining both discovery and contemplation. Tjalling C. Koopmans, research director of the Cowles Foundation of Research in Economics, the leading U.S. center for mathematical economics, was the first U.S. economist after World War II who, in the summer of 1965, travelled to the Soviet Union for an official visit of the Central Economics and Mathematics Institute of the Soviet Academy of Sciences. Koopmans left with the hope to learn from the experiences of Soviet economists in applying linear programming to economic planning. Would his own theories, as discovered independently by Leonid V. Kantorovich, help increasing allocative efficiency in a socialist economy? Koopmans even might have envisioned a research community across the iron curtain. Yet he came home with the discovery that learning about Soviet mathematical economists might be more interesting than learning from them. On top of that, he found the Soviet scene trapped in the same deplorable situation he knew all too well from home: that mathematicians are the better economists.

Key-Words: mathematical economics, linear programming, Soviet economic planning, Cold War, Central Economics and Mathematics Institute, Tjalling C. Koopmans, Leonid V. Kantorovich.

Word-Count: 11.000

[1] Assistant Professor, Department of economics, Université du Québec à Montréal, Pavillon des Sciences de la gestion, 315, Rue Sainte-Catherine Est, Montréal (Québec), H2X 3X2, Canada, e-mail: [email protected]. A former version has been presented at the conference “Social and human sciences on both sides of the ‘iron curtain’”, October 17-19, 2013, in Moscow.

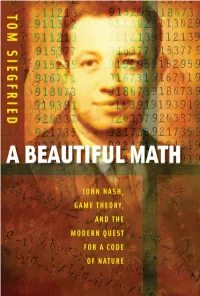

A Beautiful Math : John Nash, Game Theory, and the Modern Quest for a Code of Nature / Tom Siegfried

A BEAUTIFUL MATH

JOHN NASH, GAME THEORY, AND THE MODERN QUEST FOR A CODE OF NATURE

TOM SIEGFRIED

JOSEPH HENRY PRESS, Washington, D.C.

Joseph Henry Press • 500 Fifth Street, NW • Washington, DC 20001

The Joseph Henry Press, an imprint of the National Academies Press, was created with the goal of making books on science, technology, and health more widely available to professionals and the public. Joseph Henry was one of the founders of the National Academy of Sciences and a leader in early American science. Any opinions, findings, conclusions, or recommendations expressed in this volume are those of the author and do not necessarily reflect the views of the National Academy of Sciences or its affiliated institutions.

Library of Congress Cataloging-in-Publication Data: Siegfried, Tom, 1950- A beautiful math : John Nash, game theory, and the modern quest for a code of nature / Tom Siegfried. 1st ed. p. cm. Includes bibliographical references and index. ISBN 0-309-10192-1 (hardback), ISBN 0-309-65928-0 (pdfs) 1. Game theory. I. Title. QA269.S574 2006 519.3—dc22 2006012394

Copyright 2006 by Tom Siegfried. All rights reserved. Printed in the United States of America.

Preface

Shortly after 9/11, a Russian scientist named Dmitri Gusev proposed an explanation for the origin of the name Al Qaeda. He suggested that the terrorist organization took its name from Isaac Asimov’s famous 1950s science fiction novels known as the Foundation Trilogy. After all, he reasoned, the Arabic word “qaeda” means something like “base” or “foundation.” And the first novel in Asimov’s trilogy, Foundation, apparently was titled “al-Qaida” in an Arabic translation.

Nine Takes on Indeterminacy, with Special Emphasis on the Criminal Law

University of Pennsylvania Carey Law School, Penn Law: Legal Scholarship Repository, Faculty Scholarship at Penn Law, 2015

Nine Takes on Indeterminacy, with Special Emphasis on the Criminal Law

Leo Katz, University of Pennsylvania Carey Law School

Follow this and additional works at: https://scholarship.law.upenn.edu/faculty_scholarship

Part of the Criminal Law Commons, Law and Philosophy Commons, and the Public Law and Legal Theory Commons

Repository Citation: Katz, Leo, "Nine Takes on Indeterminacy, with Special Emphasis on the Criminal Law" (2015). Faculty Scholarship at Penn Law. 1580. https://scholarship.law.upenn.edu/faculty_scholarship/1580

This Article is brought to you for free and open access by Penn Law: Legal Scholarship Repository. It has been accepted for inclusion in Faculty Scholarship at Penn Law by an authorized administrator of Penn Law: Legal Scholarship Repository. For more information, please contact [email protected].

ARTICLE

NINE TAKES ON INDETERMINACY, WITH SPECIAL EMPHASIS ON THE CRIMINAL LAW

LEO KATZ†

Contents: INTRODUCTION; I. TAKE 1: THE COGNITIVE THERAPY PERSPECTIVE; II. TAKE 2: THE MORAL INSTINCT PERSPECTIVE; III. TAKE 3: THE CORE–PENUMBRA PERSPECTIVE; IV. TAKE 4: THE SOCIAL CHOICE PERSPECTIVE; V. TAKE 5: THE ANALOGY PERSPECTIVE; VI. TAKE 6: THE INCOMMENSURABILITY PERSPECTIVE; VII. TAKE 7: THE IRRATIONALITY-OF-DISAGREEMENT PERSPECTIVE; VIII. TAKE 8: THE SMALL WORLD/LARGE WORLD PERSPECTIVE; IX. TAKE 9: THE RESIDUALIST PERSPECTIVE; CONCLUSION

INTRODUCTION

The claim that legal disputes have no determinate answer is an old one. The worry is one that assails every first-year law student at some point.

Class Descriptions—Week 5, Mathcamp 2016

CLASS DESCRIPTIONS, WEEK 5, MATHCAMP 2016

Contents

9:10 Classes: Building Groups out of Other Groups; More Problem Solving: Polynomials; The Hairy and Still Lonely Torus; Turning Points in the History of Mathematics; Why Aren't We Learning This: Fun Stuff in Statistics

10:10 Classes: A Very Difficult Definite Integral; Homotopy (Co)limits; Scandalous Curves; Special Relativity; String Theory; The Magic of Determinants; The Mathematical ABCs; The Stable Marriage Problem; Quadratic Reciprocity

11:10 Classes: Benford's Law; Egyptian Fractions; Gambling with Nathan Ramesh; Hyper-Dimensional Geometry; IOL 2016; Many Cells Separating Points; Period Three Implies Chaos; Problem Solving: Tetrahedra; The Brauer Group; The BSD conjecture; The Cayley–Hamilton Theorem; The “Free Will” Theorem; The Yoneda Lemma; Twisty Puzzles are Easy; Wedderburn's Theorem; Wythoff's Game

1:10 Classes: Big Numbers; Graph Polynomials; Hard Problems That Are Almost Easy; Hyperbolic Geometry; Latin Squares and Finite Geometries; Multiplicative Functions; Permuting Conditionally Convergent Series; The Cap Set Problem

2:10 Classes: Computer-Aided Design; Factoring with Elliptic Curves; Math and Literature; More Group Theory!; Paradoxes in Probability; The Hales–Jewett Theorem

9:10 Classes

Building Groups out of Other Groups. (Don, Wednesday–Friday) In group theory, everybody learns how to take a group, and shrink it down by taking a quotient by a normal subgroup, but what they don't tell you is that you can totally go the other direction.

Handbook of Game Theory, Vol. 3 Edited by Robert Aumann and Sergiu Hart, Elsevier, New York, 2002

Games and Economic Behavior 46 (2004) 215–218, www.elsevier.com/locate/geb

Handbook of Game Theory, Vol. 3. Edited by Robert Aumann and Sergiu Hart, Elsevier, New York, 2002.

This is the final volume of three that organize and summarize the game theory literature, a task that becomes more necessary as the field grows. In the 1960s the main actors could have met in a large room, but today there are several national conferences with concurrent sessions, and the triennial World Congress. MathSciNet lists almost 700 pieces per year with game theory as their primary or secondary classification. The problem is not quite that the yearly output is growing (this number is only a bit higher than two decades ago) but simply that it is accumulating. No one can keep up with the whole literature, so we have come to recognize these volumes as immensely valuable. They are on almost everyone’s shelf.

The initial four chapters discuss the basics of the Nash equilibrium. Van Damme focuses on the mathematical properties of refinements, and Hillas and Kohlberg work up to the Nash equilibrium from below, discussing its rationale with respect to dominance concepts, rationalizability, and correlated equilibria. Aumann and Heifetz treat the foundations of incomplete information, and Raghavan looks at the properties of two-person games that do not generalize. There is less overlap among the four chapters than one would expect, and it is valuable to get these parties’ views on the basic questions. It strikes me as lively and healthy that after many years the field continues to question and sometimes modify its foundations.

WEEK 5 CLASS PROPOSALS, MATHCAMP 2018 Contents

WEEK 5 CLASS PROPOSALS, MATHCAMP 2018

Abstract. We have a lot of fun class proposals for Week 5! Take a read through these proposals, and then let us know which ones you would like us to schedule for Week 5 by submitting your preferences on the appsys appsys.mathcamp.org. We'll keep voting open until sign-in on Wednesday evening. Please only vote for classes that you would attend if offered. Thanks for helping us create the schedule!

Contents

Aaron's Classes: Braid groups and the fundamental group of configuration space; Counting points over finite fields; Dimensional Analysis; Finite Fields; Math is Applied Physics; Riemann-Roching out on the projective line; The Art of Live-TeXing; The dimension formula for modular forms via the moduli stack of elliptic curves

Aaron, Vivian's Classes: Galois Theory and Number Theory

Ania, Jessica's Classes: Cycles in permutations

Apurva's Classes: Galois Correspondence of Covering Spaces; MP3 ≠ JPEG; The Quantum Spring; There isn't enough Homological Algebra in the world; Would I ever Lie Group to you?

Ben Dees's Classes: Bairely Sensible; Finite Field Fourier Fun; What is a “Sylow” anyways?; Yes, you can trisect angles

Brian Reinhart's Classes: A Slice of PIE

Dyusha, Michelle's Classes: Curse of Dimensionality

J-Lo's Classes: Axiomatic Music Theory part 2: Rhythm; Lattices; Minus Choice, Still Paradoxes; The Borsuk Problem; The Music of Zeta; Yes, you can solve the Quintic

Jeff's Classes: Combinatorial Topology; Jeff's Favorite Pictures

Devising a Framework for Efficient and Accurate Matchmaking and Real-Time Web Communication

Dartmouth College, Dartmouth Digital Commons, Dartmouth College Undergraduate Theses, Theses and Dissertations, 6-1-2016

Devising a Framework for Efficient and Accurate Matchmaking and Real-time Web Communication

Charles Li, Dartmouth College

Follow this and additional works at: https://digitalcommons.dartmouth.edu/senior_theses

Part of the Computer Sciences Commons

Recommended Citation: Li, Charles, "Devising a Framework for Efficient and Accurate Matchmaking and Real-time Web Communication" (2016). Dartmouth College Undergraduate Theses. 106. https://digitalcommons.dartmouth.edu/senior_theses/106

This Thesis (Undergraduate) is brought to you for free and open access by the Theses and Dissertations at Dartmouth Digital Commons. It has been accepted for inclusion in Dartmouth College Undergraduate Theses by an authorized administrator of Dartmouth Digital Commons. For more information, please contact [email protected].

Devising a framework for efficient and accurate matchmaking and real-time web communication

Charles Li, Department of Computer Science, Dartmouth College, Hanover, NH, [email protected]

Abstract: Many modern applications have a great need for matchmaking and real-time web communication. This paper first explores and details the specifics of original algorithms for effective matchmaking, and then proceeds to dive into implementations of real-time communication between different clients across the web. Finally, it discusses how to apply the techniques discussed in the paper in practice, and provides samples based on the framework.

Keywords: matchmaking; real-time; web

I. ... propose and evaluate original matchmaking algorithms, and also discuss and evaluate implementations of real-time communication using existing web protocols.

II. PRIOR WORK AND RELEVANT TECHNOLOGIES

A. Matchmaking: No Stable Matching Exists

Consider a pool of users. What does matchmaking entail? It could be the task of finding, for each user, the best compatriot or opponent in the pool to match with, given the user’s set of