Assisting World Wide Web Navigation

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Microsoft's Internet Exploration: Predatory Or Competitive?

Cornell Journal of Law and Public Policy Volume 9 Article 3 Issue 1 Fall 1999 Microsoft’s Internet Exploration: Predatory or Competitive Thomas W. Hazlett Follow this and additional works at: http://scholarship.law.cornell.edu/cjlpp Part of the Law Commons Recommended Citation Hazlett, Thomas W. (1999) "Microsoft’s Internet Exploration: Predatory or Competitive," Cornell Journal of Law and Public Policy: Vol. 9: Iss. 1, Article 3. Available at: http://scholarship.law.cornell.edu/cjlpp/vol9/iss1/3 This Article is brought to you for free and open access by the Journals at Scholarship@Cornell Law: A Digital Repository. It has been accepted for inclusion in Cornell Journal of Law and Public Policy by an authorized administrator of Scholarship@Cornell Law: A Digital Repository. For more information, please contact [email protected]. MICROSOFT'S INTERNET EXPLORATION: PREDATORY OR COMPETITIVE? Thomas W. Hazlett In May 1998 the U.S. Department of Justice ("DOJ") accused Microsoft of violating the Sherman Antitrust Act by vigorously competing against Netscape's Navigator software with Microsoft's rival browser, Internet Explorer. The substance of the allegation revolves around defensive actions taken by Microsoft to protect the dominant position enjoyed by Microsoft's Windows operating system. The DOJ's theory is that, were it not for Microsoft's overly aggressive reaction to Netscape, Navigator software would have been more broadly distributed, thus enabling competition to Windows. This competition would have come directly from Java, a computer language developed by Sun Microsystems and embedded in Netscape software, allowing applications to run on any underlying operating system. -

Netscape 6.2.3 Software for Solaris Operating Environment

What’s New in Netscape 6.2 Netscape 6.2 builds on the successful release of Netscape 6.1 and allows you to do more online with power, efficiency and safety. New in this release are: Support for the latest operating systems • BETTER INTEGRATION WITH WINDOWS XP: Netscape 6.2 is now only one click away within the Windows XP Start menu if you choose Netscape as your default browser and mail application. Also, you can view the number of incoming email messages you have from your Windows XP login screen. • FULL SUPPORT FOR MACINTOSH OS X Other enhancements Netscape 6.2 offers a more seamless experience between Netscape Mail and other applications on the Windows platform. For example, you can now easily send documents from within Microsoft Word, Excel or PowerPoint without leaving that application. Simply choose File, “Send To” to invoke the Netscape Mail client to send the document. What follows is a more comprehensive list of the enhancements delivered in Netscape 6.1. CONFIDENTIAL UNTIL AUGUST 8, 2001 Netscape 6.1 Highlights PR Contact: Catherine Corre – (650) 937-4046 Netscape Communications Corporation ("Netscape") and its licensors retain all ownership rights to this document (the "Document"). Use of the Document is governed by applicable copyright law. Netscape may revise this Document from time to time without notice. THIS DOCUMENT IS PROVIDED "AS IS" WITHOUT WARRANTY OF ANY KIND. IN NO EVENT SHALL NETSCAPE BE LIABLE FOR INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES OF ANY KIND ARISING FROM ANY ERROR IN THIS DOCUMENT, INCLUDING WITHOUT LIMITATION ANY LOSS OR INTERRUPTION OF BUSINESS, PROFITS, USE OR DATA. -

Web Browsers

WEB BROWSERS INTRODUCTION • A Web browser acts as an interface between the user and the Web server • It is a software application that resides on a computer and is used to locate and display Web pages • Web users access information from Web servers through a client program called a browser • A Web browser is a software application for retrieving, presenting, and traversing information resources on the World Wide Web FEATURES • All major Web browsers allow the user to open multiple information resources at the same time, either in different browser windows or in different tabs of the same window • Refresh and stop buttons for reloading and stopping the loading of the current document • A home button that takes you to your home page • Major browsers also include pop-up blockers to prevent unwanted windows from "popping up" without the user's consent COMPONENTS OF WEB BROWSER 1. User Interface • This includes the address bar, back/forward buttons, bookmarking menu, etc. 2. Rendering Engine • Rendering is the display of the requested content on the browser screen • By default the rendering engine can display HTML and XML documents and images HISTORY • The history of the Web browser dates back to the late 1980s, when a variety of technologies laid the foundation for the first Web browser, WorldWideWeb, by Tim Berners-Lee in 1991 • Microsoft responded with its browser Internet Explorer in 1995, initiating the industry's first browser war • Opera first appeared in 1996; although it has only 2% browser usage share as of April 2010, it has a substantial share of the fast-growing mobile phone Web browser market, being preinstalled on over 40 million phones. -

Why Websites Can Change Without Warning

Why Websites Can Change Without Warning WHY WOULD MY WEBSITE LOOK DIFFERENT WITHOUT NOTICE? HISTORY: Your website is a series of files & databases. Websites used to be “static” because there were only a few ways to view them. Now we have a complex system, and telling your webmaster which device, operating system and browser you use is crucial. Here’s why: TERMINOLOGY: You have a desktop or mobile “device”. Desktop computers and mobile devices have “operating systems”, which are software. To see your website, you’ll pull up a “browser”, which is also software, to surf the Internet. Your website is a series of files that needs to be 100% compatible with all devices, operating systems and browsers. Your website is built on WordPress and gets a weekly checkup (sometimes more often) to see if any changes have occurred. Your site could also be attacked with bad files, links, spam, comments and other annoying internet pests! Or other components will suddenly need updating, which is nothing out of the ordinary. WHAT DOES IT LOOK LIKE IF SOMETHING HAS CHANGED? Any update to the following can make your website look different: There are 85 operating systems (OS) that can update (without warning). Any of the roughly 7 most popular browsers also updates regularly, which can affect your site visually and in other ways. (Lists below) Now, with an OS or browser update, your site’s 18 website components will likely need updating too. Once website updates are implemented, there are currently about 21 mobile devices and 141 desktop devices on which the site needs to be viewed for compatibility. -

Netscape Application Server 4.0 Installation Guide

Installation Guide Netscape Application Server Version 4.0 Netscape Communications Corporation ("Netscape") and its licensors retain all ownership rights to the software programs offered by Netscape (referred to herein as "Software") and related documentation. Use of the Software and related documentation is governed by the license agreement accompanying the Software and applicable copyright law. Your right to copy this documentation is limited by copyright law. Making unauthorized copies, adaptations, or compilation works is prohibited and constitutes a punishable violation of the law. Netscape may revise this documentation from time to time without notice. THIS DOCUMENTATION IS PROVIDED "AS IS" WITHOUT WARRANTY OF ANY KIND. IN NO EVENT SHALL NETSCAPE BE LIABLE FOR INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES OF ANY KIND ARISING FROM ANY ERROR IN THIS DOCUMENTATION, INCLUDING WITHOUT LIMITATION ANY LOSS OR INTERRUPTION OF BUSINESS, PROFITS, USE, OR DATA. The Software and documentation are copyright ©1999 Netscape Communications Corporation. All rights reserved. Netscape, Netscape Navigator, Netscape Certificate Server, Netscape DevEdge, Netscape FastTrack Server, Netscape ONE, SuiteSpot, and the Netscape N and Ship’s Wheel logos are registered trademarks of Netscape Communications Corporation in the United States and other countries. Other Netscape logos, product names, and service names are also trademarks of Netscape Communications Corporation, which may be registered in other countries. Other product and brand names are trademarks of their respective owners. -

Web Browser Web Browser Is an Application Software That Allows Us to View and Explore Information on the Web

WEB BROWSER http://www.tutorialspoint.com/internet_technologies/web_browsers.htm Copyright © tutorialspoint.com Web Browser A web browser is application software that allows us to view and explore information on the web. A user can request any web page just by entering a URL into the address bar. A web browser can show text, audio, video, animation and more. It is the responsibility of the web browser to interpret the text and commands contained in the web page. Earlier web browsers were text-based, while nowadays graphical and voice-based web browsers are also available. The following are the most common web browsers available today (browser: vendor): Internet Explorer: Microsoft; Google Chrome: Google; Mozilla Firefox: Mozilla; Netscape Navigator: Netscape Communications Corp.; Opera: Opera Software; Safari: Apple; SeaMonkey: Mozilla Foundation; K-Meleon: K-meleon. Architecture There are many web browsers available in the market. All of them interpret and display information on the screen; however, their capabilities and structure vary depending upon implementation. The most basic components that every web browser must exhibit are listed below: Controller/Dispatcher, Interpreter, Client Programs. The controller works like the control unit in a CPU. It takes input from the keyboard or mouse, interprets it, and makes other services work on the basis of the input it receives. The interpreter receives information from the controller and executes the instructions line by line. Some interpreters are mandatory while others are optional. For example, the HTML interpreter program is mandatory and the Java interpreter is optional. The client program describes the specific protocol that will be used to access a particular service. -

Microsoft's Internet Exploration: Predatory Or Competitive?

MICROSOFT'S INTERNET EXPLORATION: PREDATORY OR COMPETITIVE? Thomas W. Hazlett In May 1998 the U.S. Department of Justice ("DOJ") accused Microsoft of violating the Sherman Antitrust Act by vigorously competing against Netscape's Navigator software with Microsoft's rival browser, Internet Explorer. The substance of the allegation revolves around defensive actions taken by Microsoft to protect the dominant position enjoyed by Microsoft's Windows operating system. The DOJ's theory is that, were it not for Microsoft's overly aggressive reaction to Netscape, Navigator software would have been more broadly distributed, thus enabling competition to Windows. This competition would have come directly from Java, a computer language developed by Sun Microsystems and embedded in Netscape software, allowing applications to run on any underlying operating system. Fearing the spread of Java would render a Windows monopoly moot, the DOJ argues, Microsoft engaged in predatory behavior to pre-empt Netscape and, therefore, Java. This set of allegations can most usefully be analyzed under a four-part test for predation examining opportunity, intent, conduct, and effect. The analysis hereunder shows that while Microsoft's aggressive actions have likely injured specific competitors, the "browser jihad" featured as Exhibit A in the DOJ's antitrust case is a dramatic illustration of the pro-consumer consequences of robust market rivalry. I. THE GOVERNMENT'S CASE: PREDATION "To protect its valuable Windows monopoly against such potential competitive threats [from Netscape and other new software products], and to extend its operating system monopoly into other software markets, Microsoft has engaged in a series of anticompetitive activities. -

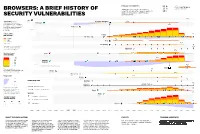

Browsers: A Brief History of Security Vulnerabilities

BROWSERS: A brief history of security vulnerabilities. Project by Group 5: Sneha Baliga / Julia Chlastacz / Dang Trung Nguyen / Marija Nikolic / Bogdan Novakovic / Preeti Puri / Chenyun Zeng. Vulnerabilities refer to the Common Vulnerabilities and Exposures (CVE), a system that provides a reference method for publicly known information-security vulnerabilities and exposures. Risk refers to the potential that the threat will be realized for a particular vulnerability; risk severity is rated Critical, High, Medium, or Low. Open vulnerabilities refer to the number of vulnerabilities open and unresolved in that year. Usage share refers to the percentage of user share in the browser market; only 7 browsers accommodate usage share of more than 1%. Relationship lines mark a successor browser or a browser forked from another browser. Netscape created the Mozilla Organization in 1998 to co-ordinate the development of the Mozilla Application Suite. Netscape was acquired by AOL in 1999 and went on to create multiple browsers. Microsoft created Edge in 2015 in an attempt to phase out Internet Explorer. [Infographic: browsers charted include Lynx, Netscape Navigator, K-Meleon, Firefox, Phoenix, SeaMonkey, Pale Moon, Opera, Internet Explorer, Edge, Amaya, Konqueror, ELinks, Maxthon, GNOME Web (Epiphany), and Safari.] -

Design Issues for World Wide Web Navigation Visualisation Tools

Design Issues for World Wide Web Navigation Visualisation Tools. Andy Cockburn (Department of Computer Science, University of Canterbury, Christchurch, New Zealand; [email protected]; +64 3 364 2987 ext 7768) and Steve Jones (Department of Computer Science, University of Waikato, Hamilton, New Zealand; [email protected]; +64 7 838 4490). Abstract: The World Wide Web (WWW) is a successful hypermedia information space used by millions of people, yet it suffers from many deficiencies and problems in support for navigation around its vast information space. In this paper we identify the origins of these navigation problems, namely WWW browser design, WWW page design, and WWW page description languages. Regardless of their origins, these problems are eventually represented to the user at the browser’s user interface. To help overcome these problems, many tools are being developed which allow users to visualise WWW subspaces. We identify five key issues in the design and functionality of these visualisation systems: characteristics of the visual representation, the scope of the subspace representation, the mechanisms for generating the visualisation, the degree of browser independence, and the navigation support facilities. We provide a critical review of the diverse range of WWW visualisation tools with respect to these issues. Keywords: World Wide Web; hypermedia navigation; design issues; visualisation. 1 Introduction: With millions of users searching, browsing, and surfing the WWW, economies of scale are clearly relevant. Even a small inefficiency in user navigation within the WWW will result in enormous productivity losses if it is common to a fraction of WWW users. Our previous research has noted that users commonly have incorrect mental models of even the most fundamental methods of WWW navigation, and has suggested interface mechanisms to ease the problems [CJ96]. -

Chapter 14: Background Properties

Chapter 14: BACKGROUND PROPERTIES Topics in This Chapter • background-color • background-image • background-repeat • background-attachment • background-position • background • background-position-x • background-position-y • background-clip • background-origin • background-size • background-quantity • background-spacing The background family of properties enables Web authors to tile background images with precision. Prior to the advent of the CSS3 specification, various color-related properties used to be part of the same family as the background properties, but as both have been expanded and grown more specialized, they have now been separated into families of properties. 14.1 background-color Property The background-color property sets the background color of an HTML element, and for the most part functions in exactly the same way as the bgcolor attribute associated with the body element under the HTML 4.0 specification. What makes this property so versatile is that it can be added to virtually any Web element. It can take on any type of CSS color value, ranging from standard name values (such as “green” and “aqua”) to standard hexadecimals (“#ff0000”, which equals red) to rgb color values — in short, any type of color value that the color property can take (see Chapter 13, “Color”). It can also take on a transparent value, which is designed to let the immediate “parent” color show through. It can also take on an inherit value, which adopts whatever parent value may be available. The code depicted in Listing 14.1 shows some examples of how it can be added to many different Web page elements, and is shown in Figure 14–1. -
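
As a rough illustration of how the color value forms mentioned in this excerpt relate to one another, the following Python sketch resolves a name or hexadecimal value to its rgb components. It is not taken from the book: the function name `css_color_to_rgb` and the three-entry name table are assumptions made for the example, and only a tiny subset of CSS color syntax is handled.

```python
from typing import Tuple

# Hypothetical, deliberately tiny table of standard CSS color names.
NAMED_COLORS = {
    "red": "#ff0000",
    "green": "#008000",
    "aqua": "#00ffff",
}


def css_color_to_rgb(value: str) -> Tuple[int, int, int]:
    """Resolve a color name or a #rgb / #rrggbb value to an (r, g, b) tuple."""
    text = value.strip().lower()
    # Names are looked up first; anything else is treated as hexadecimal.
    text = NAMED_COLORS.get(text, text)
    digits = text[1:] if text.startswith("#") else text
    if len(digits) == 3:
        # Short form: each digit is doubled, so "f00" becomes "ff0000".
        digits = "".join(ch * 2 for ch in digits)
    if len(digits) != 6:
        raise ValueError(f"unsupported color value: {value!r}")
    # int(..., 16) raises ValueError on non-hexadecimal characters.
    return (int(digits[0:2], 16), int(digits[2:4], 16), int(digits[4:6], 16))


if __name__ == "__main__":
    for sample in ("#ff0000", "green", "aqua", "#0f0"):
        print(sample, css_color_to_rgb(sample))
```

The sketch only shows that a name, a hexadecimal value, and an rgb triple are three spellings of the same color; the transparent and inherit values described in the excerpt are not modeled.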

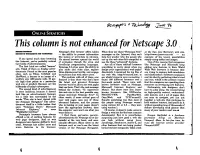

This Column Is Not Enhanced for Netscape 3.0

ONLINE STRATEGIES This column is not enhanced for Netscape 3.0 If you spend much time browsing the Internet, you've probably noticed two kinds of advertisements. The first kind are called "banner" ads. Think of them as display advertisements. Found on the most popular sites, such as Yahoo, InfoSeek, and HotWired, a banner is an image of a [...] Netscape's first browser offers tables - the ability to present information like menus or price-lists in columns. Its second browser opened the world of animation through the Java and JavaScript programming languages. Netscape 3.0 gives users the ability to play audio and video clips, explore three-dimensional worlds, and engage in real-time chat with other users. The problem with all of these new [...] When they see these "Netscape Now!" messages on the Internet, they can't help but wonder why the people who set up the web sites felt compelled to use these "advanced" features. Are all of those odd-ball browsers something to worry about when you design your organization's web pages? Absolutely. I examined the log files of my web site, http://vineyard.net/, to see which browsers were connecting. [...] at the San Jose Mercury's web site, http://www.sjmercury.com/ an example of the layout possibilities simply using tables and images. One of the reasons that companies like Netscape and Microsoft keep adding new features to their World Wide Web browsers is because they are trying to capture both mindshare and marketshare. Software companies -

The Making of Netscape

The Making of Netscape This case was written by Professor Rajeev Kohli, Graduate School of Business, Columbia University, for class discussion rather than to illustrate either effective or ineffective handling of an administrative situation. Marc Andreessen started working on the Mosaic web browser in 1992. He was a computer science student at the University of Illinois, but he spent a lot more time at the NCSA, the University’s supercomputing center, than he did in the classroom. “Working at NCSA was fascinating,” says Andreessen. “The supercomputing program in the mid-1980s originated because the government thought it was crucial that a powerful computing infrastructure be provided for scientists. An interesting thing happened around 1990: all the supercomputing centers essentially stopped running supercomputers. They shut down the Crays. They could no longer afford to keep them running, especially with the advent of more cost-efficient microprocessors. Since it no longer made sense to run a supercomputer, what do you do? And so networking quickly became a very big part of NCSA, the theory being that the next stage would be to link together everything -- especially scientists and educators who up until that point had been using the Crays. Networking had arrived so fast, and everything was happening so rapidly, that people just hadn't yet gotten around to making it accessible.” The Internet phenomenon had been gaining momentum for a decade, but its use was still limited to a small group of skilled programmers. “People had also been talking about hypertext for a long time, and it finally happened at NCSA,” says Andreessen.