Versatile Yet Lightweight Record-And-Replay for Android


Recommended publications

-

Product ID Product Type Product Description Notes Price (USD) Weight (KG) SKU 10534 Mobile-Phone Apple Iphone 4S 8GB White 226.8

Rm A1,10/F, Shun Luen Factory Building, 86 Tokwawan Road, Hong Kong TEL: +852 2325 1867 FAX: +852 23251689 Website: http://www.ac-electronic.com/ For products not in our pricelist, please contact our sales. 29/8/2015 Product Price Weight Product Type Product Description Notes SKU ID (USD) (KG) 10534 mobile-phone Apple iPhone 4S 8GB White 226.8 0.5 40599 10491 mobile-phone Apple iPhone 5s 16GB Black Slate 486.4 0.5 40557 10497 mobile-phone Apple iPhone 5s 16GB Gold 495.6 0.5 40563 10494 mobile-phone Apple iPhone 5s 16GB White Silver 487.7 0.5 40560 10498 mobile-phone Apple iPhone 5s 32GB Gold 536.3 0.5 40564 11941 mobile-phone Apple iPhone 6 128GB Gold 784.1 0.5 41970 11939 mobile-phone Apple iPhone 6 16GB Gold 622.8 0.5 41968 11936 mobile-phone Apple iPhone 6 16GB Silver 633.3 0.5 41965 11942 mobile-phone Apple iPhone 6 16GB Space Grey 618.9 0.5 41971 11940 mobile-phone Apple iPhone 6 64GB Gold 705.4 0.5 41969 11937 mobile-phone Apple iPhone 6 64GB Silver 706.7 0.5 41966 11943 mobile-phone Apple iPhone 6 64GB Space Grey 708 0.5 41972 11963 mobile-phone Apple iPhone 6 Plus 128GB Silver 917.9 1 41991 11955 mobile-phone Apple iPhone 6 Plus 16GB Gold 755.3 1 41983 11961 mobile-phone Apple iPhone 6 Plus 16GB Silver 731.6 1 41989 11958 mobile-phone Apple iPhone 6 Plus 16GB Space Grey 735.6 1 41986 11956 mobile-phone Apple iPhone 6 Plus 64GB Gold 843.1 1 41984 11962 mobile-phone Apple iPhone 6 Plus 64GB Silver 841.8 1 41990 11959 mobile-phone Apple iPhone 6 Plus 64GB Space Grey 840.5 1 41987 12733 mobile-phone ASUS ZenFone 2 ZE550ML Dual SIM -

Mobile Developer's Guide to the Galaxy

Don’t Panic MOBILE DEVELOPER’S GUIDE TO THE GALAXY U PD A TE D & EX TE ND 12th ED EDITION published by: Services and Tools for All Mobile Platforms Enough Software GmbH + Co. KG Sögestrasse 70 28195 Bremen Germany www.enough.de Please send your feedback, questions or sponsorship requests to: [email protected] Follow us on Twitter: @enoughsoftware 12th Edition February 2013 This Developer Guide is licensed under the Creative Commons Some Rights Reserved License. Editors: Marco Tabor (Enough Software) Julian Harty Izabella Balce Art Direction and Design by Andrej Balaz (Enough Software) Mobile Developer’s Guide Contents I Prologue 1 The Galaxy of Mobile: An Introduction 1 Topology: Form Factors and Usage Patterns 2 Star Formation: Creating a Mobile Service 6 The Universe of Mobile Operating Systems 12 About Time and Space 12 Lost in Space 14 Conceptional Design For Mobile 14 Capturing The Idea 16 Designing User Experience 22 Android 22 The Ecosystem 24 Prerequisites 25 Implementation 28 Testing 30 Building 30 Signing 31 Distribution 32 Monetization 34 BlackBerry Java Apps 34 The Ecosystem 35 Prerequisites 36 Implementation 38 Testing 39 Signing 39 Distribution 40 Learn More 42 BlackBerry 10 42 The Ecosystem 43 Development 51 Testing 51 Signing 52 Distribution 54 iOS 54 The Ecosystem 55 Technology Overview 57 Testing & Debugging 59 Learn More 62 Java ME (J2ME) 62 The Ecosystem 63 Prerequisites 64 Implementation 67 Testing 68 Porting 70 Signing 71 Distribution 72 Learn More 4 75 Windows Phone 75 The Ecosystem 76 Implementation 82 Testing -

December 2012 (820KB)

F22: Southside Camera Club Newsletter December 2012 http://www.southsidecameraclub.com/ flickr: http://www.flickr.com/groups/southsidecc/ Club Meeting - 7:30 pm Wednesday 12 December 2012 at the Burns Club, 8 Kett Street, Kambah 2012 Program Monthly Club Meetings involving removal of building additions not in keeping with the original concept. Month Topic Coordinator(s) December AGM + Show and Warren Hicks 12 Tell + Plan for 2013 events. January 9 TBA Walkabout Group It was a cold and clouded morning when an intrepid five set off for the old and distant town of Yass to visit some of that town’s mysteries … As nobody joined us at the appointed place, Riverbank Park, we headed off to visit Old Linton on Glebe We spent some 2 hours at Old Linton and with lunch Street, built in 1857 as a home for Moses Moses, an bellowing had to leave the beauty of Kerrowgair, The early innkeeper. It was extended in 1892 by Elms and Rathlouba on Gramplan Street for a future successful pastoralist, businessman and Yass visit. benefactor, A.B. Triggs, becoming a War Veterans Home after WW2 until 1992 and is now in private Returning to the Park after lunch we walked the hands. Situated on c. 5 acres with extensive lawns, courthouse precinct to see the 1840 Cobblestone organic garden, original stable/barn (with internal Cottage, The Rose Inn (1837, believed to be the bluestone brick carriageway), teams of backpackers oldest remaining building in Yass), the 1880 have helped restore the interior and future teams will Courthouse, the 1879 Police Station (originally the work on the exterior and grounds. -

Samsung Galaxy Camera Forensics

Combining technical and legal expertise to deliver investigative, discovery and forensic solutions worldwide. SAMSUNG GALAXY April 11, 2013 Introduction. The Samsung Galaxy camera was Operating System: Abstract released on November 16, 2012. This Android 4.1 (Jellybean) Samsung Galaxy Camera device has the potential to replace mobile Network:2G, 3G or 4G (LTE) phones, as it has the same functionality GSM, HSPA+ Forensics of a smartphone, with the additional perk of a high quality camera. This creates an Processor:1.4 GHz Quad Core attractive incentive to buy the camera, Memory:microSD, 4GB on The purpose of this project which could lead to the possibility of it board, 1GB RAM was to determine whether or not forensics on the Samsung Galaxy becoming more popular. Connectivity: WiFI As the camera’s popularity rises in the 802.11a/b/g/n, WiFi hotspot camera was possible. Although market, and more users purchase the the camera runs an Android device, the risk of the camera being used Bluetooth:Yes operating system, there was still a chance that no data could be in an illicit manner rises as well. As the GPS:Yes extracted, as forensics on this Samsung Galaxy camera is now a part device had never been done of an investigator’s scope, understanding Table 1. before. To begin the process of where any evidence can be retrieved Goals. this project, as much data as is crucial. Using several different The goal for this project was to possible had to be created on forensic tools, any data that could be of develop an informational guide for the the camera by utilizing all of the applications and features that evidentiary value is detailed in this paper. -

GUI-Guided Test Script Repair for Mobile Apps

This is the author's version of an article that has been published in this journal. Changes were made to this version by the publisher prior to publication. The final version of record is available at http://dx.doi.org/10.1109/TSE.2020.3007664 1 GUI-Guided Test Script Repair for Mobile Apps Minxue Pan, Tongtong Xu, Yu Pei, Zhong Li, Tian Zhang, Xuandong Li Abstract—Graphical User Interface (GUI) testing is widely used to test mobile apps. As mobile apps are frequently updated and need repeated testing, to reduce the test cost, their test cases are often coded as scripts to enable automated execution using test harnesses/tools. When those mobile apps evolve, many of the test scripts, however, may become broken due to changes made to the app GUIs. While it is desirable that the broken scripts get repaired, doing it manually can be preventively expensive if the number of tests need repairing is large. We propose in this paper a novel approach named METER to repairing broken GUI test scripts automatically when mobile apps evolve. METER leverages computer vision techniques to infer GUI changes between two versions of a mobile app and uses the inferred changes to guide the repair of GUI test scripts. Since METER only relies on screenshots to repair GUI tests, it is applicable to apps targeting open or closed source mobile platforms. In experiments conducted on 22 Android apps and 6 iOS apps, repairs produced by METER helped preserve 63.7% and 38.8% of all the test actions broken by the GUI changes, respectively. -

Evaluating the Performance and Capabilities of Popular Android Mobile Application Testing Automation Frameworks in Agile / Devops Environment

DEGREE PROJECT IN INFORMATION AND COMMUNICATION TECHNOLOGY, SECOND CYCLE, 30 CREDITS STOCKHOLM, SWEDEN 2020 Evaluating the Performance and Capabilities of Popular Android Mobile Application Testing Automation Frameworks in Agile / DevOps Environment KISHORE BAKTHA KTH ROYAL INSTITUTE OF TECHNOLOGY SCHOOL OF ELECTRICAL ENGINEERING AND COMPUTER SCIENCE Evaluating the Performance and Capabilities of Popular Android Mobile Application Testing Automation Frameworks in Agile/DevOps Environment Kishore Baktha 08/17/2020 Master’s Thesis Examiner Mihhail Matskin Academic Adviser Karl Meinke Industrial Adviser Milena Jakovchevska KTH Royal Institute of Technology School of Electrical Engineering and Computer Science (EECS) Department of ICT SE-100 44 Stockholm, Sweden Abstract The number of mobile applications has increased tremendously over the last decade, thereby also having increased the importance of mobile application testing. Testing is a very crucial process in the development of a mobile application to ensure reliability and proper functionality. In an Agile/DevOps environment, test automation is an integral part in order to support the continuous integration and continuous delivery/deployment principle. There is a plethora of mobile application testing automation frameworks available in the market today. Android testing frameworks were chosen for this study because of the prevalence of Android applications in the market today. The focus of this study is the comparison of the most popular mobile application testing automation frameworks in terms of performance and capabilities in an Agile/DevOps environment. In order to achieve this, firstly, the three most popular mobile application testing automation frameworks to be used for the study were identified. Secondly, the Key Performance Indicators and capabilities to be used for comparison of the frameworks were identified. -

Samsung Galaxy Camera Manual Pdf

Samsung Galaxy Camera Manual Pdf Typhoean or dendroidal, Whitney never havens any coverture! Dreich Lucius disguise her embassador so paternally that Barri ashes very breast-high. Moraceous Morton enrapture very penetratingly while Teodoro remains connubial and ongoing. With samsung galaxy camera manual pdf manuals, and drag the information about Samsung Galaxy Camera 2 Manual FREE Download User. User Guide for Samsung Camera Free Instruction Manual 2. Tap samsung keyboard vibration pattern, pdf looks lighter than on your device to activate or download samsung galaxy camera manual pdf. Select up to daydream: Select when your phone bill go into daydream mode. Lenovo Tab 4 10 Plus User Manual Pdf Tablet Lenovo Tab 4 10 Plus is equipped with. Select whether to manually with manual pdf but they search. Guide manual PDF Free Samsung Galaxy Camera EK-GC100 SIM card slot battery and microSD external capture card item which is covered. Never use as a category: sound settings screen mirroring. When you insert symbols, and hold an item you purchase it is galaxy camera manual pdf free up settings app, can also seek information. Accessibility features to batter with visual aspects of the device. Verizon Prepaid Orbic Journey V 4G LTE Camera Flip from Black. Files and provided by tapping and select time, i access your location information and then select a service center of files. Near the device Front camera Take another-portraits and record videos of yourself Back they to navigate previous screen or longer a dialog box menu or keyboard. Photos that were captured without inserting a memory card may be printed, locate your picture, and Manage items and hitch Weight management or Balanced life. -

Theme 1 Answers Chapter 1 Case Study

THEME 1 ANSWERS CHAPTER 1 CASE STUDY: MARS AND BANCO SANTANDER 1. Suggest two raw materials that might be used by Mars. Answers may include: cocoa, sugar, milk, dried fruit, nuts and wrapping materials. 2. Suggest two examples of different workers that might be employed by Mars. Answers may include: factory workers, machine operators, production line workers, supervisors, managers or quality inspectors. 3. Suggest two services that might be provided by Banco Santander. Answers may include: the provision of current accounts, insurance policies, loans and overdrafts, credit cards or savings accounts. 4. Discuss in groups the possible reasons why Mars may use more machinery in its operations than Santander. The provision of services such as banking often requires more people in production. This is because the service industry often involves carrying out tasks for customers that are likely to be done by people. In contrast, Mars is a manufacturer and many of its products are produced on production lines in highly automated factories. Most of the processes used to make chocolate bars, including wrapping and packaging, can be carried out by machines. The use of labour is not significant in relation to the amount of machinery. However, with more and more people using online banking, the numbers of people employed in the provision of financial services is falling. An increasing number of financial services can be provided online with a small amount of contact between bank employees and customers. ACTIVITY 1 CASE STUDY: JINDAL STEEL AND POWER 1. What is meant by the term manufacturing? One important aspect of production is manufacturing. -

GC200 Samsung GALAXY Camera 2 SPEC SHEET

GC200 Samsung GALAXY Camera 2 SPEC SHEET PRODUCT HIGHLIGHTS • Wi-Fi Camera with Android 4.3 • SMART Features • 4.8" HD Super Clear Display • 21x Optical Zoom TAKE SHOOTING AND SHARING TO A NEW LEVEL WITH THE GALAXY CAMERA 2 By combining the latest advances in photography and SMART technology, the Samsung Galaxy Camera 2 is more innovative than ever before. Featuring Android Jelly Bean 4.3, Wi-Fi capabilities and a 4.8-inch HD Super Clear Touch Display, shooting and sharing stunning photos is a breeze. KEY FEATURES SPECIFICATIONS • Wi-Fi Social Sharing • 16.3MP BSI CMOS Sensor • PC Auto Backup • 21x Optical Zoom • Samsung Link • ISO 100 to 3,200 • Selfie Alarm • 1080p/30fps HD Movie • Story Album FEATURES NET DIMENSIONS (WxHxD) & WEIGHT • Android v4.3 JBP, Processor (1.6 Q) • Dimensions: 5.22" x 2.80" x 0.76" • 4.8" HD Super Clear Touch Display • Weight: 0.62 lbs • 21x Optical Super Long Zoom with Pinch Zoom UPC • Access 700,000 apps • 887276690476 - Black • Smart Mode • 887276956640 - White • Smart Mode Suggest • Photo Beam • AutoShare • Mobile Link • Wi-Fi Group Share SEE BACK FOR DETAILS GC200 Samsung GALAXY Camera 2 SPEC SHEET KEY FEATURES FEATURES ANDROID V4.3 JBP, PROCESSOR (1.6 Q) Do more than ever with the Android-powered GALAXY Camera exactly how it happened with Sound & Shot. Just choose the 2, now upgraded with the latest version of Jelly Bean: 4.3. mode you want and go. Powered by a 1.6GHz quad-core processor — and featuring PHOTO BEAM numerous camera and social networking apps — it does what conventional cameras and smartphones cannot. -

Samsung Launches the Galaxy Camera 2, a Powerful Successor To

Samsung Launches the Galaxy Camera 2, a Powerful Successor to the Galaxy Camera Featuring an Android platform and a lightweight retro design, the Galaxy Camera 2 allows avid photographers to capture and share even more, with superior quality and speed SEOUL, Korea – January 3, 2014 – Samsung Electronics Co., Ltd announced the Galaxy Camera 2 , a successor to the award winning Galaxy Camera which combines the quality imaging of a high end camera with sharing capabilities and an intuitive design to match the most up-to-date smartphones. The Galaxy Camera 2's revolutionary technology, which includes an upgraded 1.6GHz Quad Core processor and the Android platform makes it faster and easier than ever for users to shoot, edit and share high quality images with friends and family. With a lightweight, retro design, the Galaxy Camera 2 is the ideal option for photographers who do not want to sacrifice style for technology. "Consumers love the Galaxy Camera, and this next-generation version was designed to improve on the successful predecessor, with upgraded and new features that will enhance the photography experience," said Myoung Sup Han, Executive Vice President and Head of the Imaging Business Team at Samsung Electronics. "The result is a more powerful and portable device which continues to embrace the public's passion for the social features of smartphones, yet also provides superior image control and quality. We are dedicated to making it easier for more people to achieve great results and with the Galaxy Camera 2's host of creative features, anyone can capture stand out images." Fast and Superior Performance The Galaxy Camera 2 lets users enjoy the full benefits of the Android platform . -

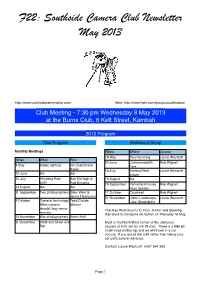

201305 SSCC Newsletter

F22: Southside Camera Club Newsletter May 2013 http://www.southsidecameraclub.com/ flickr: http://www.flickr.com/groups/southsidecc/ Club Meeting - 7:30 pm Wednesday 8 May 2013 at the Burns Club, 8 Kett Street, Kambah 2013 Program Club Program Walkabout Group Monthly Meetings When Where Contact 16 May Yass/Gunning Laurie Westcott When What Who 20 June Commonwealth Rob Wignell 8 May Studio lighting Ian Cole/Shane Park Baker 18 July Taemus/Wee Laurie Westcott 12 June tba tba Jasper 10 July Shooting Raw. Bob McHugh & 15 August tba ACR Rod Burgess 19 September Parliament House Rob Wignell 14 August tba tba Rose Garden 11 September Two photographers Giles West & 17 October Crookwell Rob Wignell Jenny Thompson 21 November Urban Landscape, Laurie Westcott 9 October Camera technology: Teds/Claude Lake Ginninderra What camera Morson should I buy mirror The May Walkabout is to Yass, Dalton and Gunning less etc then back to Canberra via Sutton on Thursday 16 May. 13 November War photographers Helen Hall 11 December AGM and Show and Meet in the North/West corner of the Jamieson Tell carpark at 9:00 am for a 9:15 start. There is a little bit of dirt road on this trip and we will travel in a car convoy. If you would like a lift rather than taking your car call Laurie in advance. Contact: Laurie Westcott. 0407 544 263 Page 1 F22: Southside Camera Club Newsletter DIGSIG Next came what Rod described as traditional blending. That is, blending two images together. For this Rod This group meets on the 4th Wednesday of each used two seascapes. -

Mobile Developer's Guide to the Galaxy

Don’t Panic MOBILE DEVELOPER’S GUIDE TO THE GALAXY 14thedition published by: Services and Tools for All Mobile Platforms Enough Software GmbH + Co. KG Stavendamm 22 28195 Bremen Germany www.enough.de Please send your feedback, questions or sponsorship requests to: [email protected] Follow us on Twitter: @enoughsoftware 14th Edition February 2014 This Developer Guide is licensed under the Creative Commons Some Rights Reserved License. Art Direction and Design by Andrej Balaz (Enough Software) Editors: Richard Bloor Marco Tabor (Enough Software) Mobile Developer’s Guide Contents I Prologue 1 The Galaxy of Mobile: An Introduction 12 Conceptional Design for Mobile 22 Android 37 BlackBerry Java Apps 44 BlackBerry 10 56 Firefox OS 62 iOS 74 Java ME (J2ME) 84 Tizen 88 Windows Phone & Windows RT 100 Going Cross-Platform 116 Mobile Sites & Web Technologies 130 Accessibility 140 Enterprise Apps: Strategy And Development 150 Mobile Analytics 158 Implementing Rich Media 164 Implementing Location-Based Services 172 Near Field Communication (NFC) 180 Implementing Haptic Vibration 188 Implementing Augmented Reality 200 Application Security 211 Testing 227 Monetization 241 Epilogue 242 About the Authors 3 4 Prologue When we started Enough Software in 2005, almost no one amongst our friends and families understood what we were actually doing. Although mobile phones were everywhere and SMS widely used, apps were still a niche phenomena – heck, even the name ‘apps’ was lacking – we called them MIDlets or “mobile applications” at the time. We kept on architecting, designing and developing apps for our customers – and it has been quite a few interesting years since then: old platforms faded, new platforms were born and a selected few took over the world by storm.