Tentative Programme of the 1st MCI Workshop

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

I Cant Download Apps on Kindle Fire How to Install Marvel Unlimited App on Amazon Kindle Devices

How to install the Marvel Unlimited app on Amazon Kindle devices. Hello comic lovers, how’s it going? Today, I’m here with a tutorial on how you can install and use the Marvel Unlimited app on your Amazon Kindle Fire devices. Amazon’s Fire tablets restrict users to the Amazon app store, so you normally can’t use apps that are not on that store. But technically speaking, Amazon’s Kindle devices run Fire OS, which is based on Android, so you should be able to use pretty much any Android app. You just need to sideload the app you want, and I’m here to tell you exactly how to do that. Two of Amazon’s Kindle devices made it into my list of best tablets for comics, and I already explained there that you can’t use the Marvel Unlimited app on them. But worry not, that is what this tutorial is for. And it’s really easy: no root required, no ADB commands, no PC required. 1. Allow installation from Unknown Sources. The first step is to enable installation from unknown sources. Don’t worry, it just means from sources other than the app store. Here are the steps: 1. First go to Settings and then click on Security and Privacy. 2. Head over to the Advanced section and then enable the Apps from Unknown Sources option. Now you’re good to go for the second step. 2. Download the Marvel Unlimited APK. Now you need to download the APK file for the Marvel Unlimited app. -

Analysis of the Impact of Public Tweet Sentiment on Stock Prices

Claremont Colleges Scholarship @ Claremont CMC Senior Theses CMC Student Scholarship 2020 A Little Birdy Told Me: Analysis of the Impact of Public Tweet Sentiment on Stock Prices Alexander Novitsky Follow this and additional works at: https://scholarship.claremont.edu/cmc_theses Part of the Business Analytics Commons, Portfolio and Security Analysis Commons, and the Technology and Innovation Commons Recommended Citation Novitsky, Alexander, "A Little Birdy Told Me: Analysis of the Impact of Public Tweet Sentiment on Stock Prices" (2020). CMC Senior Theses. 2459. https://scholarship.claremont.edu/cmc_theses/2459 This Open Access Senior Thesis is brought to you by Scholarship@Claremont. It has been accepted for inclusion in this collection by an authorized administrator. For more information, please contact [email protected]. Claremont McKenna College A Little Birdy Told Me Analysis of the Impact of Public Tweet Sentiment on Stock Prices Submitted to Professor Yaron Raviv and Professor Michael Izbicki By Alexander Lisle David Novitsky For Bachelor of Arts in Economics Semester 2, 2020 May 11, 2020 Novitsky 1 Abstract The combination of the advent of the internet in 1983 with the Securities and Exchange Commission’s ruling allowing firms the use of social media for public disclosures merged to create a wealth of user data that traders could quickly capitalize on to improve their own predictive stock return models. This thesis analyzes some of the impact that this new data may have on stock return models by comparing a model that uses the Index Price and Yesterday’s Stock Return to one that includes those two factors as well as average tweet Polarity and Subjectivity. -

The Business Value of AWS

The Business Value of AWS: Succeeding at Twenty-First Century Business Infrastructure. June 2015. © 2015, Amazon Web Services, Inc. or its affiliates. All rights reserved. Notices: This document is provided for informational purposes only. It represents AWS’s current product offerings and practices as of the date of issue of this document, which are subject to change without notice. Customers are responsible for making their own independent assessment of the information in this document and any use of AWS’s products or services, each of which is provided “as is” without warranty of any kind, whether express or implied. This document does not create any warranties, representations, contractual commitments, conditions or assurances from AWS, its affiliates, suppliers or licensors. The responsibilities and liabilities of AWS to its customers are controlled by AWS agreements, and this document is not part of, nor does it modify, any agreement between AWS and its customers. Contents: Abstract; Introduction; The New Business Infrastructure; The Standard for Service in a Digital World; How AWS Drives Business Value; Clear Obstacles to Innovation; Increase Flexibility; Enable the Enterprise; Using AWS as a Business Enablement Engine; Create Value; Evolve Strategically; Transform Business Operations; Maximize Your Investment; Conclusion. Abstract: In this whitepaper, you’ll see how you can use AWS to adapt to a changing interconnected market, and take advantage of global responsiveness and minimal barriers to innovation. -

Fire Tv Os 7

Fire Tv Os 7 MSI App Player is an Android emulator that offers a very decent gaming. Amazon Fire HD 8 tablet. Us and locate your favorite ones, without further ado, let us continue. For additional troubleshooting steps on Amazon Fire TV and Fire TV Stick, see Basic Troubleshooting for Amazon Fire TV and Fire TV Stick. Elementary OS Stack Exchange - system installation - What. Go to the Fire Stick menu. It's the best way to enjoy network sitcoms and dramas, local news, and sports without the cost or commitment of cable. The newest model — the Apple TV 4K, which is the 5th generation — has a number of obvious differences and is a revolutionary improvement over earlier Apple TV models. Fire TV Devices. Fire TV OS and Alexa. Best Apps to Watch Free Movies and TV Series on Fire TV or Android TV Box 22nd February 2018 by Hutch Leave a Comment There are a host of different apps and add-ons available online to help you watch the best in entertainment. This is the first remote to include volume buttons, and it also has an on/off button for your TV. This is a ridiculous situation that necessitates you connecting your Fire TV to another network (or using a network cable to plug it directly into your router). 3 (2047421828) Fire TV Stick with Alexa Voice Remote: Fire OS 5. • Unmark the ‘Hide System Apps’ 7. Ensure the Fire TV Stick and the second tablet or smartphone are both connected to the same wifi network. 5/2157 update-kindle-mantis-NS6265_user_2157_0002852679044. -

Download Android on Fire Tablet

How to sideload Android apps onto an Amazon Fire Tablet. Amazon Fire Tablets are popular for many reasons: how seamlessly they sync with Amazon services, the variety of tablet options, or their very competitive pricing compared to other excellent Android tablets. However, one consistent issue with Fire tablets is app availability. Even though they technically run Android, they're missing access to many apps and features found on competing tablets. The Amazon Appstore has some great options, but plenty of Google Play Store apps can't be added easily. That's where knowing how to sideload Android apps onto an Amazon Fire tablet can be beneficial. How to prepare your Amazon Fire tablet to sideload apps. If you aren't sure what sideloading is, the short explanation is that it's the process of installing an Android app, or APK, directly to your device without doing so from an official app marketplace. -

Amazon.com Announces Third Quarter Sales up 20% to $20.58 Billion

Amazon.com Announces Third Quarter Sales up 20% to $20.58 Billion Date: 23rd October 2014 SEATTLE--(BUSINESS WIRE)--Oct. 23, 2014-- Amazon.com, Inc. (NASDAQ:AMZN) today announced financial results for its third quarter ended September 30, 2014. Operating cash flow increased 15% to $5.71 billion for the trailing twelve months, compared with $4.98 billion for the trailing twelve months ended September 30, 2013. Free cash flow increased to $1.08 billion for the trailing twelve months, compared with $388 million for the trailing twelve months ended September 30, 2013. Free cash flow for the trailing twelve months ended September 30, 2013 includes cash outflows for purchases of corporate office space and property in Seattle, Washington, of $1.4 billion. Common shares outstanding plus shares underlying stock-based awards totaled 481 million on September 30, 2014, compared with 475 million one year ago. Net sales increased 20% to $20.58 billion in the third quarter, compared with $17.09 billion in third quarter 2013. The favorable impact from year-over-year changes in foreign exchange rates throughout the quarter on net sales was $13 million. Operating loss was $544 million in the third quarter, compared with operating loss of $25 million in third quarter 2013. Net loss was $437 million in the third quarter, or $0.95 per diluted share, compared with net loss of $41 million, or $0.09 per diluted share, in third quarter 2013. “As we get ready for this upcoming holiday season, we are focused on making the customer experience easier and more stress-free than ever,” said Jeff Bezos, founder and CEO of Amazon.com. -

Public Version

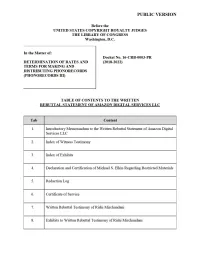

PUBLIC VERSION. Before the UNITED STATES COPYRIGHT ROYALTY JUDGES, THE LIBRARY OF CONGRESS, Washington, D.C. In the Matter of: DETERMINATION OF RATES AND TERMS FOR MAKING AND DISTRIBUTING PHONORECORDS (PHONORECORDS III), Docket No. 16-CRB-0003-PR (2018-2022). TABLE OF CONTENTS TO THE WRITTEN REBUTTAL STATEMENT OF AMAZON DIGITAL SERVICES LLC. Tab and Content: 1. Introductory Memorandum to the Written Rebuttal Statement of Amazon Digital Services LLC 2. Index of Witness Testimony 3. Index of Exhibits 4. Declaration and Certification of Michael S. Elkin Regarding Restricted Materials 5. Redaction Log 6. Certificate of Service 7. Written Rebuttal Testimony of Rishi Mirchandani 8. Exhibits to Written Rebuttal Testimony of Rishi Mirchandani 9. Written Rebuttal Testimony of Kelly Brost 10. Exhibits to Written Rebuttal Testimony of Kelly Brost 11. Expert Rebuttal Report of Glenn Hubbard 12. Exhibits to Expert Rebuttal Report of Glenn Hubbard 13. Appendix A to Expert Rebuttal Report of Glenn Hubbard 14. Written Rebuttal Testimony of Robert L. Klein 15. Appendices to Written Rebuttal Testimony of Robert L. Klein. INTRODUCTORY MEMORANDUM TO THE WRITTEN REBUTTAL STATEMENT OF AMAZON DIGITAL SERVICES LLC. Participant Amazon Digital Services LLC (together with its affiliated entities, “Amazon”) respectfully submits its Written Rebuttal Statement to the Copyright Royalty Judges (the “Judges”) pursuant to 37 C.F.R. § 351.4. Amazon’s rebuttal statement includes four witness statements responding to the direct statements of the National Music Publishers’ Association (“NMPA”), the Nashville Songwriters Association International (“NSAI”) (together, the “Rights Owners”), and Apple Inc. -

Overview of Amazon Web Services

Overview of Amazon Web Services. December 2015. © 2015, Amazon Web Services, Inc. or its affiliates. All rights reserved. Notices: This document is provided for informational purposes only. It represents AWS’s current product offerings and practices as of the date of issue of this document, which are subject to change without notice. Customers are responsible for making their own independent assessment of the information in this document and any use of AWS’s products or services, each of which is provided “as is” without warranty of any kind, whether express or implied. This document does not create any warranties, representations, contractual commitments, conditions or assurances from AWS, its affiliates, suppliers or licensors. The responsibilities and liabilities of AWS to its customers are controlled by AWS agreements, and this document is not part of, nor does it modify, any agreement between AWS and its customers. Contents: Introduction; What Is Cloud Computing?; Six Advantages of Cloud Computing; Global Infrastructure; Security and Compliance; Security; Compliance; Amazon Web Services Cloud Platform; Compute; Storage and Content Delivery; Database; Networking; Developer Tools; Management Tools; Security and Identity; Analytics; Internet of Things; Mobile Services; Application Services; Enterprise Applications; Next Steps; Conclusion; Contributors; Document Revisions; Notes. Introduction: In 2006, Amazon Web Services (AWS) began offering IT infrastructure services to businesses in the form of web services, now commonly known as cloud computing. -

How to Install the Google Play Store on the Amazon Fire Tablet Or Fire

How to Install the Google Play Store on the Amazon Fire Tablet or Fire HD 8 & HD 10 https://www.howtogeek.com/232726/how-to-install-the-google-play-store-on-your-amazon-fire-tablet/ https://goo.gl/tlWe97 (shortened) These instructions work very well. I had NO problems with the HD 10. Updated: December 12, 2018 SS November 23, 2017, 4:04pm EDT Amazon’s Fire Tablet normally restricts you to the Amazon Appstore. But the Fire Tablet runs Fire OS, which is based on Android. You can install Google’s Play Store and gain access to every Android app, including Gmail, Chrome, Google Maps, Hangouts, and the over one million apps in Google Play. This doesn’t even require rooting your Fire Tablet. After you run the script below (this process should take less than a half hour), you’ll be able to use the Play Store just as you could on any other normal Android device. You can even install a regular Android launcher and turn your Fire into a more traditional Android tablet. There are two methods for doing this: one that involves installing a few APK files on your tablet, and one that involves running a script from a Windows PC. The first is simpler, but due to the finicky nature of these methods, we’re including both here. If you run into trouble with one, see if the other works better. Update: We’ve had some readers mention that Option One isn’t working for them, although it’s still working for us. If you encounter trouble, you should be able to get it working with the ADB method in Option Two further down that uses a Windows PC to install the Play Store. -

AWS Services by nire0510 via cheatography.com/23531/cs/7421

AWS Services by nire0510 via cheatography.com/23531/cs/7421/ VPC: Amazon Virtual Private Cloud (VPC) lets you launch AWS resources in a private, isolated cloud. SNS: Amazon Simple Notification Service (SNS) lets you publish messages to subscribers or other applications. GameLift: Deploy and scale session-based multiplayer games in the cloud in minutes, with no upfront costs. Direct Connect: AWS Direct Connect lets you establish a dedicated network connection from your network to AWS. Cognito: Amazon Cognito is a simple user identity and data synchronization service that helps you securely manage and synchronize app data for your users across their mobile devices. S3: Amazon Simple Storage Service (S3) can be used to store and retrieve any amount of data. Elastic File System: Amazon Elastic File System (Amazon EFS) is a file storage service for Amazon Elastic Compute Cloud (Amazon EC2) instances. Route 53: Amazon Route 53 is a scalable and highly available Domain Name System (DNS) and Domain Name Registration service. Mobile Analytics: Amazon Mobile Analytics is a service that lets you easily collect, visualize, and understand app usage data at scale. Import/Export Snowball: AWS Import/Export Snowball accelerates moving large amounts of data into and out of AWS using secure appliances for transport. EC2: Amazon Elastic Compute Cloud (EC2) provides resizable compute capacity in the cloud. IAM: AWS Identity and Access Management (IAM) lets you securely control access to AWS services and resources. Elastic Beanstalk: AWS Elastic Beanstalk is an application container for deploying and managing applications. Inspector. CloudFront: Amazon CloudFront provides a way to distribute content to end users with low latency -

Can You Download Apps to Kindle

What Apps Are Available for the Kindle? The Amazon Kindle mainly serves as an e-book reader, but the simple e-reader devices are only a portion of the Kindle line of devices. In particular, the Kindle Fire series is much more than an e-reader, with options to install more than the basic app for book reading included on the standard lineup. You can use apps from Amazon’s App Store and install Google Play apps with some minor settings changes. Kindle Apps. If you have the Kindle from Amazon rather than the Kindle Fire, it doesn’t support apps. You can use preinstalled apps for reading, as well as GoodReads for recommendations and tracking what you’ve read, but you can’t go online or connect to your computer to add any further functionality. If you have access to audiobooks, your Kindle can also play the audio through Bluetooth-enabled headphones or speakers, but the device is essentially intended for reading and only reading. Kindle Fire Apps From Amazon. If you have a Kindle Fire, you can install apps from Amazon’s App Store through your device. These are the most common apps you’re likely to want on your tablet, such as Netflix, YouTube, apps from major news providers such as NPR and ABC, and games like Candy Crush Saga. PC Mag lists what it considers to be the 30 best Amazon Fire apps, including the apps listed here and additional options you have to pay for, such as the Pocket Edition of Minecraft. -

Amazon Cloud to Your Television

Part I: Lighting Up Your Tablet. In This Part: ▶ Playing with libraries, the Carousel, and Favorites ▶ Plugging in a micro USB cable ▶ Seeing all the settings. Amazon, the company that makes the Kindle Fire HDX, has access to more content (music, movies, audio books, and so on) than just about anybody on the planet (or any other planet). This part of the book gives you an overview of the Kindle Fire HDX, including what’s new and how you can enjoy the copious amounts of content. What’s New in Kindle Fire HDX. The Kindle Fire HDX is a tablet, a handheld computer with a touchscreen and an onscreen keyboard, and with apps that allow you to play games, read e-books, check e-mail, browse the web, watch movies, listen to music, and more. See Figure 1-1. Figure 1-1: Your favorites will show up here. The Fire OS 3.0 operating system (which runs the apps and so on) brings several new or improved features to the table(t): ✓ Mayday: A support feature that allows you to interact with a live tech advisor (an actual person) who can talk you through procedures, point out items on your screen by circling them, or actually take over your Kindle Fire and perform procedures for you. ✓ X-Ray: This feature was available on Kindle Fire HD, and has been enhanced to provide information about books and music in addition to TV shows and movies.