Flexible Data Extraction for Analysis Using Multidimensional Databases and OLAP Cubes

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications

-

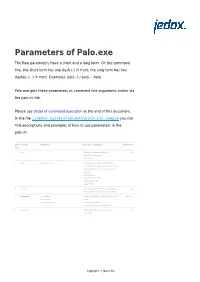

Parameters of Palo.Exe

The Palo parameters have a short and a long form. On the command line, the short form has one dash (-) in front; the long form has two dashes (--) in front. Examples: palo -? / palo --help. Palo.exe gets these parameters as command line arguments and/or via the palo.ini file. Please see "Order of command execution" at the end of this document. In the file …\Jedox Suite\olap\data\palo.ini.sample you can find descriptions and examples of how to use parameters in the palo.ini.

| Short form | Long form | Argument(s) | Description / Example(s) | Default value |
|---|---|---|---|---|
| ? | help | | Displays the parameters of palo.exe. Only for the command line. On/off switch. | False |
| a | admin | `<address> <port>` | Http interface with server browser and online documentation. An address can be a server name, an internet address or “” for all server internet addresses. Port is a number. Examples: `admin 192.168.1.2 7777`; `admin localhost 7770`; `admin “” 7778` | |
| A | auto-load | | Loads all databases on server start into memory which are defined in the palo.csv. On/off switch. | True |
| b | cache-barrier | `<max number of cells to store in each cube cache>` | Sets the max number of cells to store in each cube cache. Examples: `cache-barrier 150000000`; `cache-barrier 0` (sets cache-barrier to 0). | 100000000 |
| B | auto-commit | | Commits all changes on server shutdown. On/off switch. | True |
| c | crypt | | Turns on encrypting of the database files. Newly saved files are encrypted if this is set using the Blowfish algorithm. On/off switch. | False |
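The relationship between palo.ini entries and long-form command-line arguments described above can be illustrated with a minimal Python sketch. It assumes that each palo.ini line is a long-form parameter name followed by its arguments (as in the `admin` and `cache-barrier` examples), and that lines starting with `#` are comments; both points are assumptions, not taken from the excerpt. The helper names `parse_palo_ini` and `to_command_line` are hypothetical and not part of Palo. On/off switches are left out because the excerpt does not show how they are written.

```python
import shlex


def parse_palo_ini(text):
    """Split palo.ini-style text into (long_name, [arguments]) pairs.

    Assumption: one parameter per line, long form first, then arguments.
    Assumption: blank lines and lines starting with '#' are ignored.
    """
    entries = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        # shlex keeps an empty quoted string ("") as an empty argument.
        parts = shlex.split(line)
        entries.append((parts[0], parts[1:]))
    return entries


def to_command_line(entries, executable="palo"):
    """Build an argument list using the long form (two dashes in front)."""
    argv = [executable]
    for name, args in entries:
        argv.append("--" + name)
        argv.extend(args)
    return argv


# Example values copied from the parameter descriptions in the excerpt.
sample = '''
admin "" 7778
cache-barrier 150000000
'''

entries = parse_palo_ini(sample)
argv = to_command_line(entries)
print(argv)
```

The sketch only rearranges text; it does not start or configure a Palo server, and the real palo.ini.sample file remains the authoritative reference for syntax.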

A Platform for Networked Business Analytics BUSINESS INTELLIGENCE

BROCHURE A platform for networked business analytics BUSINESS INTELLIGENCE Infor® Birst's unique networked business analytics technology enables centralized and decentralized teams to work collaboratively by unifying IT-managed enterprise data with user-owned data. Birst automates the process of preparing data and adds an adaptive user experience for business users that works across any device. This white paper will explain: ■ Birst’s primary design principles ■ How Infor Birst® provides a complete unified data and analytics platform ■ The key elements of Birst’s cloud architecture ■ An overview of Birst security and reliability. Leveraging Birst with existing enterprise BI platforms: Birst networked business analytics technology also enables customers to leverage and extend their investment in existing legacy business intelligence (BI) solutions. With the ability to directly connect to Oracle Business Intelligence Enterprise Edition (OBIEE) semantic layer, via ODBC, Birst can map the existing logical schema directly into Birst’s logical model, enabling Birst to join this Enterprise Data Tier with other data in the analytics fabric. Birst can also map to existing Business Objects Universes via web services and Microsoft Analysis Services Cubes and Hyperion Essbase cubes via MDX and extend those schemas, enabling true self-service for all users in the enterprise. 61% of Birst’s surveyed reference customers use Birst as their only analytics and BI standard.1 Contents Agile, governed analytics Birst high-performance in the era of

The Role of Multidimensional Databases in Modern Organizations

Vol. IV (LXVII) 95 - 102 Economic Insights – Trends and Challenges No. 2/2015 The Role of Multidimensional Databases in Modern Organizations Ana Tănăsescu Faculty of Economic Sciences, Petroleum-Gas University of Ploieşti, Bd. Bucureşti 39, 100680, Ploieşti, Romania Abstract The relational databases represent an ineffective data storage method when organizations manipulate a large amount of heterogeneous data and must realize complex analyses on these data. Thus, a new concept is required, the multidimensional database concept. In this paper the multidimensional database concept will be presented, its characteristics will be described, a comparative analysis between multidimensional databases and relational databases will be realized and a multidimensional database will be presented. This database was designed using Palo for Excel tool and can be used in order to analyse the academic staff research activity from a faculty. Keywords: multidimensional databases; multidimensional modelling; relational databases JEL Classification: C63; C81 Introduction The large amount of heterogeneous data accumulated by organizations represents one of the reasons why organizations need a technology that allows them to quickly achieve complex analyses on these data. Because the relational databases are ineffective from this point of view, a new concept is required, the multidimensional database concept. Annually, a large volume of information regarding the teaching and research activity of the academic staff from faculty departments is accumulated. The departments’ managers as well as the teaching process and research activity responsible persons must, periodically, elaborate reports, synthesizing the information accumulated in different time periods. A multidimensional database where this information will be stored is required to be designed in order to streamline their activity.

Data Extraction Techniques for Spreadsheet Records

Data Extraction Techniques for Spreadsheet Records Volume 2, Number 1 2007 page 119 – 129 Mark G. Simkin University of Nevada Reno, (775) 784-4840 Abstract Many accounting applications use spreadsheets as repositories of accounting records, and a common requirement is the need to extract specific information from them. This paper describes a number of techniques that accountants can use to perform such tasks directly using common spreadsheet tools. These techniques include (1) simple and advanced filtering techniques, (2) database functions, (3) methods for both simple and stratified sampling, and (4) tools for finding duplicate or unmatched records. Keywords Data extraction techniques, spreadsheet databases, spreadsheet filtering methods, spreadsheet database functions, sampling. INTRODUCTION A common use of spreadsheets is as a repository of accounting records (Rose 2007). Examples include employee records, payroll records, inventory records, and customer records. In all such applications, the format is typically the same: the rows of the worksheet contain the information for any one record while the columns contain the data fields for each record. Spreadsheet formatting capabilities encourage such applications inasmuch as they also allow the user to create readable and professional-looking outputs. A common user requirement of records-based spreadsheets is the need to extract subsets of information from them (Severson 2007). In fact, a survey by the IIA in the U.S. found that “auditor use of data extraction tools has progressively increased over the last 10 years” (Holmes 2002).

Beyond Relational Databases

EXPERT ANALYSIS BY MARCOS ALBE, SUPPORT ENGINEER, PERCONA Beyond Relational Databases: A Focus on Redis, MongoDB, and ClickHouse Many of us use and love relational databases… until we try and use them for purposes which aren’t their strong point. Queues, caches, catalogs, unstructured data, counters, and many other use cases, can be solved with relational databases, but are better served by alternative options. In this expert analysis, we examine the goals, pros and cons, and the good and bad use cases of the most popular alternatives on the market, and look into some modern open source implementations. Beyond Relational Databases Developers frequently choose the backend store for the applications they produce. Amidst dozens of options, buzzwords, industry preferences, and vendor offers, it’s not always easy to make the right choice… Even with a map!

Jedox – Seamless Enterprise Performance Management

Jedox – Seamless Enterprise Performance Management Jedox Enterprise Performance Management software streamlines budgeting, planning, and forecasting across the entire organization. Jedox simplifies planning for business users in all departments and provides controlled, role-based access to a single source of truth. Integrated financial and operational planning and collaboration between Finance, Sales, Human Resources, Operations, Procurement, and other functions can boost data quality and analysis, slash planning cycles in half, and speed-up company reporting. Easily integrate data from multiple source systems such as ERP, CRM, and BI tools without the need to involve your IT department. The unique Jedox ExcelPLUS approach lets you work directly in the flexible Microsoft Excel environment. Alternatively, Jedox offers intuitive web and mobile applications for Excel-like planning, analytics, and reporting anywhere, anytime. Add the lightning fast Jedox in-memory database, data governance, and security, workflows, and audit capabilities and you’ve got a powerful enterprise-grade solution that optimizes business processes and quickly adjusts to changing requirements in a modern, fast-paced, digital company. Shorter Budget Cycles – Efficient Planning – Up-to-Date Forecasts [Diagram: planning use cases grouped by function (Procurement, HR, Supply Chain Management, Finance, Operations, Sales, Other Functions) …and many more…] Three Ways to Design Your Planning, Analytics & Reporting Solution with Jedox: Embrace Your Spreadsheets, Create Custom Applications or Configure Pre-Built Software Content for Your Needs

Tip for Data Extraction for Meta-Analysis - 11

Tip for data extraction for meta-analysis - 11 How can you make data extraction more efficient? Kathy Taylor Data extraction that’s not well organised will add delays to the analysis of data. There are a number of ways in which you can improve the efficiency of the data extraction process and also help in the process of analysing the extracted data. The following list of recommendations is derived from a number of different sources and my experiences of working on systematic reviews and meta-analysis. 1. Highlight the extracted data on the pdfs of your included studies. This will help if the other data extractor disagrees with you, as you’ll be able to quickly find the data that you extracted. You obviously need to do this on your own copies of the pdfs. 2. Keep a record of your data sources. If a study involves multiple publications, record what you found in each publication, so if the other data extractor disagrees with you, you can quickly find the source of your extracted data. 3. Create folders to group sources together. This is useful when studies involve multiple publications. 4. Provide informative names of sources. When studies involve multiple publications, it is useful to identify, through names of files, which is the protocol publication (source of data for quality assessment) and which includes the main results (sources of data for meta-analysis). 5. Document all your calculations and estimates. Using calculators does not leave a record of what you did. It’s better to do your calculations in EXCEL, or better still, in a computer program.

The Cedar Programming Environment: a Midterm Report and Examination

The Cedar Programming Environment: A Midterm Report and Examination Warren Teitelman† CSL-83-11 June 1984 [P83-00012] © Copyright 1984 Xerox Corporation. All rights reserved. CR Categories and Subject Descriptors: D.2.6 [Software Engineering]: Programming environments. Additional Keywords and Phrases: integrated programming environment, experimental programming, display oriented user interface, strongly typed programming language environment, personal computing. † The author's present address is: Sun Microsystems, Inc., 2550 Garcia Avenue, Mountain View, Ca. 94043. The work described here was performed while employed by Xerox Corporation. Xerox Corporation Palo Alto Research Center 3333 Coyote Hill Road Palo Alto, California 94304 Abstract: This collection of papers comprises a report on Cedar, a state-of-the-art programming system. Cedar combines in a single integrated environment: high-quality graphics, a sophisticated editor and document preparation facility, and a variety of tools for the programmer to use in the construction and debugging of his programs. The Cedar Programming Language is a strongly-typed, compiler-oriented language of the Pascal family. What is especially interesting about the Cedar project is that it is one of the few examples where an interactive, experimental programming environment has been built for this kind of language. In the past, such environments have been confined to dynamically typed languages like Lisp and Smalltalk. The first paper, "The Roots of Cedar," describes the conditions in 1978 in the Xerox Palo Alto Research Center's Computer Science Laboratory that led us to embark on the Cedar project and helped to define its objectives and goals.

AIMMX: Artificial Intelligence Model Metadata Extractor

AIMMX: Artificial Intelligence Model Metadata Extractor Jason Tsay, Alan Braz, Martin Hirzel, Avraham Shinnar, Todd Mummert. IBM Research, Yorktown Heights, New York, USA; IBM Research, São Paulo, Brazil. ABSTRACT Despite all of the power that machine learning and artificial intelligence (AI) models bring to applications, much of AI development is currently a fairly ad hoc process. Software engineering and AI development share many of the same languages and tools, but AI development as an engineering practice is still in early stages. Mining software repositories of AI models enables insight into the current state of AI development. However, much of the relevant metadata around models are not easily extractable directly from repositories and require deduction or domain knowledge. This paper presents a library called AIMMX that enables simplified AI Model Metadata eXtraction from software repositories. International Conference on Mining Software Repositories (MSR ’20), October 5–6, 2020, Seoul, Republic of Korea. ACM, New York, NY, USA, 12 pages. https://doi.org/10.1145/3379597.3387448 1 INTRODUCTION The combination of sufficient hardware resources, the availability of large amounts of data, and innovations in artificial intelligence (AI) models has brought about a renaissance in AI research and practice. For this paper, we define an AI model as all the software and data artifacts needed to define the statistical model for a given task, train the weights of the statistical model, and/or deploy the …

BI SEARCH and TEXT ANALYTICS New Additions to the BI Technology Stack

SECOND QUARTER 2007 TDWI BEST PRACTICES REPORT BI SEARCH AND TEXT ANALYTICS New Additions to the BI Technology Stack By Philip Russom Research Sponsors: Business Objects, Cognos, Endeca, FAST, Hyperion Solutions Corporation, Sybase, Inc. Table of Contents: Research Methodology and Demographics; Introduction to BI Search and Text Analytics; Defining BI Search; Defining Text Analytics; The State of BI Search and Text Analytics; Quantifying the Data Continuum; New Data Warehouse Sources from the Data Continuum; Ramifications of Increasing Unstructured Data Sources; Best Practices in BI Search; Potential Benefits of BI Search; Concerns over BI Search; The Scope of BI Search; Use Cases for BI Search; Searching for Reports in a Single BI Platform; Searching for Reports in Multiple BI Platforms; Searching Report Metadata versus Other Report Content; Searching for Report Sections; Searching non-BI Content along with Reports; BI Search as a Subset of Enterprise Search; Searching for Structured Data; BI Search and the Future of BI; Best Practices in Text Analytics; Potential Benefits of Text Analytics; Entity Extraction; Use Cases for Text Analytics; Entity Extraction as the Foundation of Text Analytics; Entity Clustering and Taxonomy Generation as Advanced Text Analytics; Text Analytics Coupled with Predictive Analytics; Text Analytics Applied to Semi-structured Data; Processing Unstructured Data in a DBMS; Text Analytics and the Future of BI

Open Source As an Alternative to Commercial Software

OPEN-SOURCE AS AN ALTERNATIVE TO COMMERCIAL SOFTWARE Final Report 583 Prepared by: Sean Coleman 2401 E Rio Salado Pkwy. #1179 Tempe, Arizona 85281 March 2009 Prepared for: Arizona Department of Transportation 206 South 17th Avenue Phoenix, Arizona 85007 in cooperation with U.S. Department of Transportation Federal Highway Administration The contents of this report reflect the views of the authors who are responsible for the facts and the accuracy of the data presented herein. The contents do not necessarily reflect the official views or policies of the Arizona Department of Transportation or the Federal Highway Administration. This report does not constitute a standard, specification, or regulation. Trade or manufacturers’ names which may appear herein are cited only because they are considered essential to the objectives of the report. The U.S. Government and the State of Arizona do not endorse products or manufacturers. TECHNICAL REPORT DOCUMENTATION PAGE 1. Report No.: FHWA-AZ-09-583 2. Government Accession No. 3. Recipient’s Catalog No. 4. Title and Subtitle: Open-Source as an Alternative to Commercial Software 5. Report Date: March, 2009 6. Performing Organization Code 7. Authors: Sean Coleman 8. Performing Organization Report No. 9. Performing Organization Name and Address: Sean Coleman, 2401 E Rio Salado Pkwy, #1179, Tempe, AZ 85281 10. Work Unit No. 11. Contract or Grant No.: SPR-583 12. Sponsoring Agency Name and Address: Arizona Department of Transportation, 206 S. 17th Ave., Phoenix, AZ 85007; Project Managers: Frank DiBugnara, John Semmens, and Steve Rost 13. Type of Report & Period Covered 14. Sponsoring Agency Code 15. Supplementary Notes 16.

Benchmarking Distributed Data Warehouse Solutions for Storing Genomic Variant Information

Research Collection Journal Article Benchmarking distributed data warehouse solutions for storing genomic variant information Author(s): Wiewiórka, Marek S.; Wysakowicz, David P.; Okoniewski, Michał J.; Gambin, Tomasz Publication Date: 2017-07-11 Permanent Link: https://doi.org/10.3929/ethz-b-000237893 Originally published in: Database 2017, http://doi.org/10.1093/database/bax049 Rights / License: Creative Commons Attribution 4.0 International Database, 2017, 1–16 doi: 10.1093/database/bax049 Original article Marek S. Wiewiorka1, Dawid P. Wysakowicz1, Michał J. Okoniewski2 and Tomasz Gambin1,3,* 1Institute of Computer Science, Warsaw University of Technology, Nowowiejska 15/19, Warsaw 00-665, Poland, 2Scientific IT Services, ETH Zurich, Weinbergstrasse 11, Zurich 8092, Switzerland and 3Department of Medical Genetics, Institute of Mother and Child, Kasprzaka 17a, Warsaw 01-211, Poland *Corresponding author: Tel.: +48693175804; Fax: +48222346091 Citation details: Wiewiorka,M.S., Wysakowicz,D.P., Okoniewski,M.J. et al. Benchmarking distributed data warehouse solutions for storing genomic variant information. Database (2017) Vol. 2017: article ID bax049; doi:10.1093/database/bax049 Received 15 September 2016; Revised 4 April 2017; Accepted 29 May 2017 Abstract Genomic-based personalized medicine encompasses storing, analysing and interpreting genomic variants as its central issues. At a time when thousands of patients' sequenced exomes and genomes are becoming available, there is a growing need for efficient database storage and querying.