Open Source As an Alternative to Commercial Software

Recommended publications

Parameters of Palo.Exe

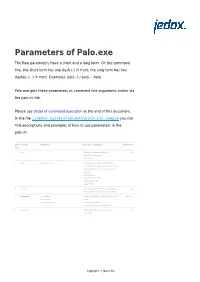

The Palo parameters have a short and a long form. On the command line, the short form has one dash (-) in front; the long form has two dashes (--) in front. Examples: palo -? / palo --help. Palo.exe gets these parameters as command line arguments and/or via the palo.ini file. Please see Order of command execution at the end of this document. In the file …\Jedox Suite\olap\data\palo.ini.sample you can find descriptions and examples of how to use parameters in the palo.ini.

| Short form | Long form | Argument(s) | Description / Example(s) | Default value |
|---|---|---|---|---|
| ? | help | | Displays the parameters of palo.exe. Only for the command line. On/off switch. | False |
| a | admin | `<address> <port>` | Http interface with server browser and online documentation. An address can be a server name, an internet address or “” for all server internet addresses. Port is a number. Examples: `admin 192.168.1.2 7777`, `admin localhost 7770`, `admin “” 7778` | |
| A | auto-load | | Loads all databases on server start into memory which are defined in the palo.csv. On/off switch. | True |
| b | cache-barrier | `<max number of cells to store in each cube cache>` | Sets the max number of cells to store in each cube cache. Examples: `cache-barrier 150000000`, `cache-barrier 0` (sets cache-barrier to 0). | 100000000 |
| B | auto-commit | | Commits all changes on server shutdown. On/off switch. | True |
| c | crypt | | Turns on encrypting of the database files. Newly saved files are encrypted if this is set using the Blowfish algorithm. On/off switch. | False |

Copyright © Jedox AG
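The dash convention described for palo.exe (one dash before the short form, two before the long form) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper `palo_args` and the tuple layout are hypothetical, not part of Palo, and the sketch only builds an argument list without launching the server. The parameter names and example values are taken from the excerpt.

```python
def palo_args(params, long_form=True):
    """Build a palo.exe argument list from (short, long, values) tuples.

    Hypothetical helper for illustration; it is not shipped with Palo.
    """
    args = []
    for short, long_name, values in params:
        # Rule from the excerpt: "-" precedes the short form, "--" the long form.
        args.append(f"--{long_name}" if long_form else f"-{short}")
        # Arguments follow the parameter name, separated by spaces.
        args.extend(str(v) for v in values)
    return args


# Example values copied from the excerpt's parameter descriptions.
params = [
    ("a", "admin", ["localhost", 7770]),
    ("b", "cache-barrier", [150000000]),
]

print(" ".join(["palo"] + palo_args(params)))
print(" ".join(["palo"] + palo_args(params, long_form=False)))
```

Keeping the short and long names side by side in one tuple makes it easy to emit either form from the same data. How on/off switches are written on the command line is not stated in the excerpt, so the sketch leaves them out.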

The Role of Multidimensional Databases in Modern Organizations

Economic Insights – Trends and Challenges, Vol. IV (LXVII), No. 2/2015, pp. 95 - 102

Ana Tănăsescu
Faculty of Economic Sciences, Petroleum-Gas University of Ploieşti, Bd. Bucureşti 39, 100680, Ploieşti, Romania
e-mail: [email protected]

Abstract

The relational databases represent an ineffective data storage method when organizations manipulate a large amount of heterogeneous data and must realize complex analyses on these data. Thus, a new concept is required, the multidimensional database concept. In this paper the multidimensional database concept will be presented, its characteristics will be described, a comparative analysis between multidimensional databases and relational databases will be realized and a multidimensional database will be presented. This database was designed using Palo for Excel tool and can be used in order to analyse the academic staff research activity from a faculty.

Keywords: multidimensional databases; multidimensional modelling; relational databases

JEL Classification: C63; C81

Introduction

The large amount of heterogeneous data accumulated by organizations represents one of the reasons why organizations need a technology that allows them to quickly achieve complex analyses on these data. Because the relational databases are ineffective from this point of view, a new concept is required, the multidimensional database concept. Annually, a large volume of information regarding the teaching and research activity of the academic staff from faculty departments is accumulated. The departments’ managers as well as the teaching process and research activity responsible persons must, periodically, elaborate reports, synthesizing the information accumulated in different time periods. A multidimensional database where this information will be stored is required to be designed in order to streamline their activity.

Rhetorical Code Studies Revised Pages

Revised Pages

Rhetorical Code Studies

Sweetland Digital Rhetoric Collaborative
Series Editors: Anne Ruggles Gere, University of Michigan; Naomi Silver, University of Michigan

The Sweetland Digital Rhetoric Collaborative Book Series publishes texts that investigate the multiliteracies of digitally mediated spaces both within academia as well as other contexts.

Rhetorical Code Studies: Discovering Arguments in and around Code. Kevin Brock
Developing Writers in Higher Education: A Longitudinal Study. Anne Ruggles Gere, Editor
Sites of Translation: What Multilinguals Can Teach Us about Digital Writing and Rhetoric. Laura Gonzales
Rhizcomics: Rhetoric, Technology, and New Media Composition. Jason Helms
Making Space: Writing, Instruction, Infrastructure, and Multiliteracies. James P. Purdy and Dànielle Nicole DeVoss, Editors
Digital Samaritans: Rhetorical Delivery and Engagement in the Digital Humanities. Jim Ridolfo

digitalculturebooks, an imprint of the University of Michigan Press, is dedicated to publishing work in new media studies and the emerging field of digital humanities.

Rhetorical Code Studies: Discovering Arguments in and around Code
Kevin Brock
University of Michigan Press, Ann Arbor

Copyright © 2019 by Kevin Brock. Some rights reserved.

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. Note to users: A Creative Commons license is only valid when it is applied by the person or entity that holds rights to the licensed work. Works may contain components (e.g., photographs, illustrations, or quotations) to which the rightsholder in the work cannot apply the license. It is ultimately your responsibility to independently evaluate the copyright status of any work or component part of a work you use, in light of your intended use.

Travstar1 V10.01.02-XXXX XXX

Travstar1 v10.01.02-XXXX_XXX Fiscal Systems, Inc. 4946 Research Drive Huntsville, AL 35805 Office: (256) 772-8920 Help Desk: (800) 838-4549, Option 3 www.fis-cal.com Document Version History Version Description Approved Date 1.0 Initial Release for Version 9.00 KDS 15 JUL 09 1.1 Changed Patch and Update Instructions KDS 11 NOV 09 Annual Review 1.2 KDS 22 APR 10 Corrected Typographical Errors 1.3 Annual Review KDS 12 JUL 11 Annual Review Verified reference web links are valid 1.4 KDS 10 AUG 12 Updated web link on qualified QSAs Updated web link for PCI wireless guidelines Annual Review 12 JUN 13 1.5 Verified reference web links are valid KDS Added Log Review Instructions Annual Review 1.6 KDS 16 JUN 14 Verified reference web links are valid Annual Review Verified reference web links are valid 1.7 Updated Business Address NBJ 30 MAR 15 Updated Secure Access Control Added detailed Log Review Setup Instructions 1.8 Added Software Versioning Methodology NBJ 14 OCT 15 Added Required Ports/Services 1.9 Modified Log review Setup Instructions NBJ 19 OCT 15 Added Centralized Logging Updated Required Ports/Services 2.0 Updated Secure Remote Updates NBJ 02 FEB 16 Updated Auditing and Centralized Logging 2.1 Updated Software Versioning Methodology NBJ 17 Feb 16 Full Certification 2.2 BTH 5 May 17 Updated Software Versioning Methodology Updated Introduction Updated Remote Access to “Multi-factor” 2.3 Revised Version number BTH 22 JUN 17 Updated “Travstar1 POS” to just “Travstar1 ” for clarity. Minor wording changes throughout for clarity Adjusted Wording of PCI-DSS requirements to match PCI-DSS 3.2 Updated network connections to clearly define 2.4 BTH 02 AUG 17 every connection Updated references to stored cardholder data to state that it is only stored during preauthorization. -

50109195.Pdf

Universidad de El Salvador, Facultad Multidisciplinaria Oriental (Eastern Multidisciplinary Faculty), Department of Engineering and Architecture

Degree thesis: Impact of free software on the secondary education institutions of the municipality of San Miguel during 2019, and creation of a virtual platform for recording that information.

Submitted for the degree of: Computer Systems Engineer (Ingeniero de Sistemas Informáticos)

Presented by: Ever Fernando Argueta Contreras, Roberto Carlos Cárdenas Ramírez, Gerson Alexander Sandoval Guerrero

Thesis advisor: Ingeniero Luis Jovanni Aguilar

Ciudad Universitaria Oriental, 11 September 2020, San Miguel, El Salvador, Central America

Universidad de El Salvador, authorities:
MSc. Roger Armando Arias, Rector
PhD. Raúl Ernesto Azcúnaga López, Academic Vice-Rector
Ingeniero Juan Rosa Quintanilla, Administrative Vice-Rector
Ingeniero Francisco Alarcón, Secretary General
Licenciado Rafael Humberto Peña Marín, Attorney General (Fiscal General)
Licenciado Luis Antonio Mejía Lipe, Defender of University Rights

Facultad Multidisciplinaria Oriental, authorities:
Licenciado Cristóbal Hernán Ríos Benítez, Dean
Licenciado Oscar Villalobos, Vice-Dean
Licenciado Isrrael López Miranda, Interim Secretary
Licenciado Jorge Pastor Fuentes Cabrera, Director General of Graduation Processes

Department of Engineering and Architecture, authorities:
Ingeniero Juan Antonio Granillo Coreas, Head of Department
Ingeniera Ligia Astrid Hernandez Bonilla, Coordinator of the Computer Systems Engineering program
Ingeniera Milagro de María Romero de García, Coordinator of Graduation Processes

Evaluation committee:
Ingeniero Luis Jovanni Aguilar, advising juror
Ingeniero Ludwin Alduví Hernández Vásquez, grading juror
Ingeniera Ligia Astrid Hernandez Bonilla, grading juror

Acknowledgments

To God: For giving me the opportunity to live, for giving me the wisdom to complete my studies, for strengthening my heart and enlightening my mind, and for placing in my path those people who have been my support and company throughout the whole period of study.

Book of Abstracts

The Association for Literary and Linguistic Computing
The Association for Computers and the Humanities
Society for Digital Humanities — Société pour l’étude des médias interactifs

Digital Humanities 2008

The 20th Joint International Conference of the Association for Literary and Linguistic Computing, and the Association for Computers and the Humanities
and
The 1st Joint International Conference of the Association for Literary and Linguistic Computing, the Association for Computers and the Humanities, and the Society for Digital Humanities — Société pour l’étude des médias interactifs

University of Oulu, Finland
24 – 29 June, 2008

Conference Abstracts

International Programme Committee
• Espen Ore, National Library of Norway, Chair
• Jean Anderson, University of Glasgow, UK
• John Nerbonne, University of Groningen, The Netherlands
• Stephen Ramsay, University of Nebraska, USA
• Thomas Rommel, International Univ. Bremen, Germany
• Susan Schreibman, University of Maryland, USA
• Paul Spence, King’s College London, UK
• Melissa Terras, University College London, UK
• Claire Warwick, University College London, UK, Vice Chair

Local organizers
• Lisa Lena Opas-Hänninen, English Philology
• Riikka Mikkola, English Philology
• Mikko Jokelainen, English Philology
• Ilkka Juuso, Electrical and Information Engineering
• Toni Saranpää, English Philology
• Tapio Seppänen, Electrical and Information Engineering
• Raili Saarela, Congress Services

Edited by
• Lisa Lena Opas-Hänninen
• Mikko Jokelainen
• Ilkka Juuso
• Tapio Seppänen

ISBN: 978-951-42-8838-8
Published by English Philology, University of Oulu
Cover design: Ilkka Juuso, University of Oulu
© 2008 University of Oulu and the authors.

Introduction

On behalf of the local organizers I am delighted to welcome you to the 25th Joint International Conference of the Association for Literary and Linguistic Computing (ALLC) and the Association for Computers and the Humanities (ACH) at the University of Oulu.

The Cedar Programming Environment: a Midterm Report and Examination

The Cedar Programming Environment: A Midterm Report and Examination
Warren Teitelman†

CSL-83-11, June 1984 [P83-00012]

© Copyright 1984 Xerox Corporation. All rights reserved.

CR Categories and Subject Descriptors: D.2.6 [Software Engineering]: Programming environments.

Additional Keywords and Phrases: integrated programming environment, experimental programming, display oriented user interface, strongly typed programming language environment, personal computing.

† The author's present address is: Sun Microsystems, Inc., 2550 Garcia Avenue, Mountain View, Ca. 94043. The work described here was performed while employed by Xerox Corporation.

Xerox Corporation, Palo Alto Research Center, 3333 Coyote Hill Road, Palo Alto, California 94304

Abstract: This collection of papers comprises a report on Cedar, a state-of-the-art programming system. Cedar combines in a single integrated environment: high-quality graphics, a sophisticated editor and document preparation facility, and a variety of tools for the programmer to use in the construction and debugging of his programs. The Cedar Programming Language is a strongly-typed, compiler-oriented language of the Pascal family. What is especially interesting about the Cedar project is that it is one of the few examples where an interactive, experimental programming environment has been built for this kind of language. In the past, such environments have been confined to dynamically typed languages like Lisp and Smalltalk.

The first paper, "The Roots of Cedar," describes the conditions in 1978 in the Xerox Palo Alto Research Center's Computer Science Laboratory that led us to embark on the Cedar project and helped to define its objectives and goals.

Component Evolution and Versioning State of the Art

ACM SIGSOFT Software Engineering Notes, January 2005, Volume 30, Number 1

Component Evolution and Versioning State of the Art

Alexander Stuckenholz
FernUniversität in Hagen, Lehrgebiet für Datenverarbeitungstechnik, Universitätsstrasse 27, 58084 Hagen, Germany
[email protected]
October 26, 2004

Abstract

Emerging component-based software development architectures promise better re-use of software components, greater flexibility, scalability and higher quality of services. But like any other piece of software too, software components are hardly perfect, when being created. Problems and bugs have to be fixed and new features need to be added.

This paper analyzes the problem of component evolution and the incompatibilities which result during component upgrades. We present the state of the art in component versioning and compare the different methods in component models, frameworks and programming languages. Special attention is put on the automation of processes and tool support in this area.

… the kind of information about the running system as well as the lifecycle of the component in which the mechanisms are applied. The most frequently used technology in this area is versioning. Versioning mechanisms are typically used to distinguish evolving software artifacts over time. As we will show in section 4 on page 4, these mechanisms play an important role in component based software development too.

This paper presents an overview of current versioning mechanisms and substitutability checks in the area of component based software development. In a comparison, these systems will be evaluated against their usability in the case of component upgrades. Here we come to the conclusions that the available solutions are not sufficient to reduce version conflicts in component …

Chrome, Edge, and Firefox Integration

Blue Prism 7.0
Chrome, Edge, and Firefox Integration
Document Revision: 1.0

Trademarks and Copyright

The information contained in this document is the proprietary and confidential information of Blue Prism Limited and should not be disclosed to a third-party without the written consent of an authorized Blue Prism representative. No part of this document may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying without the written permission of Blue Prism Limited.

© Blue Prism Limited, 2001 – 2021

© “Blue Prism”, the “Blue Prism” logo and Prism device are either trademarks or registered trademarks of Blue Prism Limited and its affiliates. All Rights Reserved. All trademarks are hereby acknowledged and are used to the benefit of their respective owners. Blue Prism is not responsible for the content of external websites referenced by this document.

Blue Prism Limited, 2 Cinnamon Park, Crab Lane, Warrington, WA2 0XP, United Kingdom. Registered in England: Reg. No. 4260035. Tel: +44 370 879 3000. Web: www.blueprism.com

Commercial in Confidence

Contents

Trademarks and Copyright
Contents
Chrome, Edge, and Firefox integration
Browser extension compatibility
Chrome browser extension
Prerequisites
Install the Chrome browser extension using the Blue Prism installer
Install from the Blue Prism installer
Install from the command line
Install the

(GNU Mailman) Mailing Lists

Usability and efficiency improvements of the (GNU Mailman) mailing lists

Rudy Borgstede ([email protected])
System and Network Engineering, University of Amsterdam
July 5, 2008

Versions

| Version | Date | Changes |
|---|---|---|
| 0.0.1 | 18 May 2008 | First setup of the report |
| 0.0.2 | 9 June 2008 | Release Candidate 1 of the project proposal |
| 0.1.1 | 17 June 2008 | A rewrite of the document because of the change of project result. The project will deliver an advice rather than a product like a patch or add-on for GNU Mailman. This means that the report becomes a consultancy report instead of a project proposal. |
| 1.0.0 | 30 June 2008 | Final version 1 of the report. |
| 1.0.1 | 1 July 2008 | A spelling check of the report. |
| 1.0.2 | 5 July 2008 | Extending the conclusion and future work chapters. |

Participants

| Name | Contact Information |
|---|---|
| University of Amsterdam | |
| Rudy Borgstede (Student) | [email protected] |
| Cees de Laat (Supervisor) | [email protected] |
| NLnet | |
| Michiel Leenaars (Supervisor) | [email protected] |

Abstract

This report is the result of a research project of four weeks at the NLnet Foundation in Amsterdam. The NLnet Foundation is a foundation that financially supports the open-source community and its projects. The purpose of the project is to improve the usability and the administration of the mailing lists (of the foundation) and to give a clearer view of mailing list server software to anyone who is interested in using mailing list server software or developing new mail or mailing list server software. The report describes the research into the usability of several open-source mailing list server software packages for scalable environments with several well known mail servers.

Analysis and Performance Optimization of E-Mail Server

Analysis and Performance Optimization of E-mail Server

Dissertation presented to the Instituto Politécnico de Bragança in fulfilment of the requirements for the degree of Master in Information Systems, under the supervision of Prof. Dr. Rui Pedro Lopes.

Eduardo Manuel Mendes Costa
October 2010

Preface

The e-mail service is increasingly important for organizations and their employees. As such, it requires constant monitoring to solve any performance issues and to maintain an adequate level of service. To cope with the increase in traffic as well as the size of organizations, several architectures have been evolving, such as cluster or cloud computing, promising new paradigms of service delivery, which can possibly solve many current problems such as scalability, increased storage and processing capacity, greater rationalization of resources, cost reduction, and increase in performance. However, it is necessary to check whether they are suitable to host e-mail servers, and as such this dissertation concentrates on evaluating the performance of e-mail servers in different hosting architectures. Beyond computing platforms, different server applications were also analyzed. They are tested to determine which combinations of computing platforms and applications obtain the best performance for the SMTP, POP3 and IMAP services. The tests are performed by measuring the number of sessions per amount of time, in several test scenarios. This dissertation should be of interest to all system administrators of public and private organizations that are considering implementing enterprise-wide e-mail services.

Acknowledgments

This work would not be complete without thanking all who helped me directly or indirectly to complete it.

Taxonomies of Visual Programming and Program Visualization

Taxonomies of Visual Programming and Program Visualization

Brad A. Myers
September 20, 1989

School of Computer Science, Carnegie Mellon University, Pittsburgh, PA 15213-3890
[email protected]
(412) 268-5150

The research described in this paper was partially funded by the National Science and Engineering Research Council (NSERC) of Canada while I was at the Computer Systems Research Institute, University of Toronto, and partially by the Defense Advanced Research Projects Agency (DOD), ARPA Order No. 4976 under contract F33615-87-C-1499 and monitored by the Avionics Laboratory, Air Force Wright Aeronautical Laboratories, Aeronautical Systems Division (AFSC), Wright-Patterson AFB, OH 45433-6543. The views and conclusions contained in this document are those of the author and should not be interpreted as representing the official policies, either expressed or implied, of the Defense Advanced Research Projects Agency of the US Government.

This paper is an updated version of [4] and [5]. Part of the work for this article was performed while the author was at the University of Toronto in Toronto, Ontario, Canada.

ABSTRACT

There has been a great interest recently in systems that use graphics to aid in the programming, debugging, and understanding of computer systems. The terms “Visual Programming” and “Program Visualization” have been applied to these systems. This paper attempts to provide more meaning to these terms by giving precise definitions, and then surveys a number of systems that can be classified as providing Visual Programming or Program Visualization. These systems are organized by classifying them into three different taxonomies.