Machine Annotation of Traditional Irish Dance Music

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The KNIGHT REVISION of HORNBOSTEL-SACHS: a New Look at Musical Instrument Classification

The KNIGHT REVISION of HORNBOSTEL-SACHS: a new look at musical instrument classification by Roderic C. Knight, Professor of Ethnomusicology, Oberlin College Conservatory of Music, © 2015, Rev. 2017

Introduction

The year 2015 marks the beginning of the second century for Hornbostel-Sachs, the venerable classification system for musical instruments, created by Erich M. von Hornbostel and Curt Sachs as Systematik der Musikinstrumente in 1914. In addition to pursuing their own interest in the subject, the authors were answering a need for museum scientists and musicologists to accurately identify musical instruments that were being brought to museums from around the globe. As a guiding principle for their classification, they focused on the mechanism by which an instrument sets the air in motion. The idea was not new. The Indian sage Bharata, working nearly 2000 years earlier, in compiling the knowledge of his era on dance, drama and music in the treatise Natyashastra (ca. 200 C.E.), grouped musical instruments into four great classes, or vadya, based on this very idea: sushira, instruments you blow into; tata, instruments with strings to set the air in motion; avanaddha, instruments with membranes (i.e. drums); and ghana, instruments, usually of metal, that you strike. (This itemization and Bharata’s further discussion of the instruments is in Chapter 28 of the Natyashastra, first translated into English in 1961 by Manomohan Ghosh (Calcutta: The Asiatic Society, v.2).) The immediate predecessor of the Systematik was a catalog for a newly-acquired collection at the Royal Conservatory of Music in Brussels. The collection included a large number of instruments from India, and the curator, Victor-Charles Mahillon, familiar with the Indian four-part system, decided to apply it in preparing his catalog, published in 1880 (this is best documented by Nazir Jairazbhoy in Selected Reports in Ethnomusicology – see 1990 in the timeline below).

Savannah Scottish Games & Highland Gathering

47th ANNUAL CHARLESTON SCOTTISH GAMES-USSE-0424
November 2, 2019
Boone Hall Plantation, Mount Pleasant, SC
Judge: Fiona Connell
Piper: Josh Adams

1. Highland Dance Competition will conform to SOBHD standards. The adjudicator’s decision is final.
2. Pre-Premier will be divided according to entries received. Medals and trophies will be awarded. Medals only in Primary.
3. Premier age groups will be divided according to entries received. Cash prizes will be awarded as follows:
Group 1 Awards: 1st $25.00, 2nd $15.00, 3rd $10.00, 4th $5.00
Group 2 Awards: 1st $30.00, 2nd $20.00, 3rd $15.00, 4th $8.00
Group 3 Awards: 1st $50.00, 2nd $40.00, 3rd $30.00, 4th $15.00
4. Trophies will be awarded in each group. Most Promising Beginner and Most Promising Novice
5. Dancer of the Day: 4 step Fling will be danced by Premier dancer who placed 1st in any dance.

Primary, Beginner and Novice
Registration: Saturday, November 2, 2019, 9:00 AM. Competition to begin at 9:30 AM.

Beginner Steps
1. Highland Fling 4
2. Sword Dance 2&1
3. Seann Triubhas 3&1
4. 1/2 Tulloch

Novice Steps
5. Highland Fling 4
6. Sword Dance 2&1
7. Seann Triubhas 3&1
8. 1/2 Tulloch

Primary Steps
9. 16 Pas de Basque
10. 6 Pas de Basque & 4 High Cuts
11. Highland Fling 4
12. Sword Dance 2&1

Intermediate and Premier
Registration: Saturday, November 2, 2019, 12:30 PM. Competition to begin at 1:00 PM.

Intermediate
15. Jig 3&1
16. Hornpipe 4
17. Highland Fling 4

Premier
20. Hornpipe 4
21. Jig 3&1
22.

The Chieftains 3 Album Download MQS Albums Download

MQS Albums Download. Mastering Quality Sound, Hi-Res Audio Download, high-resolution music, high-quality-sound music.

Van Morrison & The Chieftains – Irish Heartbeat (Remastered) (1988/2020) [FLAC 24bit/96kHz]
FLAC (tracks) 24-bit/96 kHz | Time – 38:56 minutes | 815 MB | Genre: Rock
Studio Master, Official Digital Download | Front Cover | © Legacy Recordings

“Irish Heartbeat”, a collaboration album with Van Morrison, was Van’s first attempt to reconcile his Celtic roots with his blues and soul music. It was also The Chieftains’ first full-blown collaboration album. Although there were worries about working with a major rock star, the recording was a success, introducing elements of Van’s unique soul and jazz style of singing to traditional songs and music. This was the beginning of an ongoing successful musical relationship between Van and The Chieftains. “Irish Heartbeat” was nominated for a Grammy Award in 1989, and there followed a tour of Europe and the UK.

“On their wide musical journeys in the ’80s, the Chieftains decided to collaborate with Van Morrison, who had an artistic peak at the end of the decade. The result was a highlight in both of their ’80s productions: the traditional Irish Heartbeat, with Morrison on lead vocals and a guest appearance from Mary Black. Morrison and Moloney’s production puts the vocals up front with a sparse background, sometimes with a backdrop of intertwining strings and flutes, the same way Morrison would later use the Chieftains on his Hymns to the Silence. The arrangement and the artist’s engaged singing lead to a brilliant result, and these Irish classics are made very accessible without being transformed into pop songs.

Physical Education Dance (PEDNC) 1

PHYSICAL EDUCATION DANCE (PEDNC)

Ballet-Beginning
PEDNC 130, 1 Credit/Unit, 2 hours of lab
Beginning ballet technique including barre and centre work. [PE, SE]

Ballroom Dance: Mixed
PEDNC 131, 1-3 Credits/Units, 6 hours of lab
Fundamentals, forms and pattern of ballroom dance. Develop confidence through practice with a variety of partners in both smooth and latin style dances to include: waltz, tango, fox trot, quick step and Viennese waltz, mambo, cha cha, rhumba, samba, salsa.

Ballroom Dance: Smooth
PEDNC 132, 1 Credit/Unit, 2 hours of lab
Fundamentals, forms and pattern of ballroom dance. Develop confidence through practice with a variety of partners. Smooth style dances include waltz, tango, fox trot, quick step and Viennese waltz. [PE,SE,GE]

Ballroom Dance: Latin
PEDNC 133, 1 Credit/Unit, 2 hours of lab
Fundamentals, forms and pattern of ballroom dance.

Zumba
PEDNC 140, 1 Credit/Unit, 2 hours of lab
A fusion of Latin and international music-dance themes, featuring aerobic/fitness interval training with a combination of fast and slow rhythms that tone and sculpt the body.

Hula
PEDNC 141, 1 Credit/Unit, 2 hours of lab
Focus on Hawaiian traditional dance forms. [PE,SE,GE]

African Dance
PEDNC 142, 1 Credit/Unit, 2 hours of lab
Introduction to African dance, which focuses on drumming, rhythm, and music predominantly of West Africa. [PE,SE,GE]

Bollywood
PEDNC 143, 1 Credit/Unit, 2 hours of lab
Introduction to dances of India, sometimes referred to as Indian Fusion. Dance styles focus on semi-classical, regional, folk, bhangra, and everything in between--up to westernized contemporary bollywood dance. [PE,SE,GE]

Irish Dance
PEDNC 144, 1 Credit/Unit, 2 hours of lab

The Chieftains Boil the Breakfast Early Mp3, Flac, Wma

The Chieftains Boil The Breakfast Early mp3, flac, wma

Genre: Folk, World, & Country
Album: Boil The Breakfast Early
Country: US
Style: Folk, Celtic
MP3 version RAR size: 1255 mb
FLAC version RAR size: 1271 mb
WMA version RAR size: 1718 mb
Rating: 4.2
Votes: 401
Other Formats: MP2 AHX DXD TTA MP1 APE VOX

Tracklist
1 Boil The Breakfast Early 3:56
2 Mrs. Judge 3:43
3 March From Oscar And Malvina 4:16
4 When A Man's In Love 3:40
5 Bealach An Doirín 3:46
6 Ag Taisteal Na Blárnan 3:21
7 Carolan's Welcome 2:53
8 Up Against The Buachalawns 4:04
9 Gol Na Mban San Ár 4:20
10 Chase Around The Windmill (Medley) (5:01)
10.a Toss The Feathers
10.b Ballinasloe Fair
10.c Cailleach an Airgid
10.d Cuil Aodha Slide
10.e The Pretty Girl

Companies, etc.
Copyright (c) – CBS Records Inc.
Phonographic Copyright (p) – Claddagh Records Ltd.
Manufactured By – Columbia Records
Recorded At – Windmill Lane Studios
Mixed At – Windmill Lane Studios

Credits
Bagpipes [Uilleann Pipes], Tin Whistle – Paddy Moloney
Bodhrán, Vocals – Kevin Conneff
Cello – Jolyon Jackson
Drums – The Rathcoole Pipe Band Drum Corps*
Engineer – Brian Masterson
Fiddle – Seán Keane*
Fiddle, Bones – Martin Fay
Flute, Tin Whistle – Matt Molloy
Harp [Neo Irish Harp, Mediaeval Harp], Dulcimer [Tiompan] – Derek Bell
Liner Notes – Ciarán Mac Mathúna
Producer – Paddy Moloney

Notes
Originally recorded in 1979.

Barcode and Other Identifiers
Barcode: 0 7464 36401 2
Other (DADC Code): DIPD 071877

Other versions
Category Artist Title (Format) Label Category Country Year
Boil The Breakfast

Bizourka and Freestyle Flemish Mazurka

Bizourka and Freestyle Flemish Mazurka (Flanders, Belgium, Northern France)

These are easy-going Flemish mazurka (also spelled mazourka) variations which Richard learned while attending a Carnaval ball near Dunkerque, northern France, in 2009. Participants at the ball were mostly locals, but also included dancers from Belgium and various parts of France. Older Flemish mazurka usually consists of combinations of the Mazurka Step and Waltz, in various simple patterns (see Freestyle Flemish Mazurka below). Like most folk mazurkas, their style descended from the Polka Mazurka once done in European ballrooms, over 150 years ago.

This folk process is sometimes compared to a bridge. French or English dance masters picked up an ethnic dance step, in this case a mazurka step from the Polish Mazur, and standardized it for ballroom use. It was then spread throughout the world by itinerant dance teachers, visiting social dancers, and thousands of easily available dance manuals. From this propagation, the most popular steps (the Polka Mazurka was indeed one of the favorites) then “sank” through many simplified forms in villages throughout Europe, the Americas, Australia and elsewhere. In other words, towns in rural France or England didn’t get their mazurka step directly from Poland, or their polka directly from Bohemia, but rather through the “bridge” of dance teachers, traveling social dancers and books that spread the ballroom versions of the original ethnic steps throughout the world. Once the steps left the formal setting of the ballroom, they quickly simplified.

Then folk dances evolve. Typically steps and styles are borrowed from other dance forms that the dancers also happen to know, adding a twist to a traditional form.

Irish Music If You Are a Student, Faculty Member Or Graduate of Wake and Would Like to Play Irish Music This May Be for You

Irish Music

If you are a student, faculty member or graduate of Wake and would like to play Irish music, this may be for you. Do you know the difference between a slip jig and a slide? What was the historic connection between Captain O’Neill of Chicago and Irish music? Who was Patsy Touhey and was he American or Irish? What are Uilleann pipes? What is sean-nós singing and is there a connection with Irish poetry? For the answers, join this Irish Music association.

GOALS: to have a load of fun playing Irish music and in the process:
Create a forum for a dynamic musical interaction recognizing and promoting Irish music
Provide an opportunity for musicians to study, learn, and play together in the vibrant Irish folk tradition
Promote co-operation between outside music/fine arts departments in Winston-Salem
Enhance a community awareness of Irish music, song and culture
Expose interested music, and other fine arts, students to an international dimension of folk music based on the Irish tradition as a model
Explore the vocal and song traditions in Ireland in the English and Gaelic languages
Learn about the history of Irish folk music in America

HOW THIS WILL BE DONE: Meet every two weeks and play with a Gaelic-speaking piper and whistler from Ireland, define goals, share pieces, develop a repertoire; organize educational and academic demonstrations/projects; hear historic shellac, vinyl and cylinder recordings of famous pipers, fiddlers, and singers. Play more music.

WHAT THIS IS: Irish, pure 100%: to learn not only common dance music forms including double jigs, reels, hornpipes and polkas, but also less common tune forms such as sets, mazurkas, song airs and lullabies.

WHAT THIS IS NOT: Respecting our Celtic brethren, this is NOT Celtic, Scottish, Welsh, Breton, Galician or Canadian/Cape Breton music, which is already very well represented by some fine players here in North Carolina.

Rhythm Bones Player a Newsletter of the Rhythm Bones Society Volume 10, No

Rhythm Bones Player
A Newsletter of the Rhythm Bones Society
Volume 10, No. 3 2008

In this Issue
The Chieftains and Rhythm Bones
National Traditional Country Music Festival Rhythm Bones Update
Dennis Riedesel Reports on NTCMA
History of Bones in the US, Part 4
Russ Myers’ Memorial Update
Norris Frazier Obituary

Executive Director’s Column

As Bones Fest XII approaches we are again faced with the glorious possibilities of a new Bones Fest in a new location. In the thriving metropolis of St. Louis, in the ‘Show Me’ state of Missouri, Bones Fest XII promises to be a great celebration of our nation's history, with the bones firmly in the center of attention. We have an opportunity like no other, to ride the great Mississippi in a River Boat, and play the bones under the spectacular arch of St. Louis.

But this bones Fest represents more than just another great party, with lots of bones playing opportunity, it's a chance for us bone players to relive a part of our own history, and show the people of Missouri that rhythm bones continue to live and thrive in our modern world. It's a chance too, for rhythm bone players in the central part of our country to experience the camaraderie of brother and sisterhood we have all come to expect of Bones Fests.

But it also represents a bit of a gamble. We're betting that we can step outside the comfort zones where we have held bones fests in the past, into a completely new area and environment, and you the membership will respond with the fervor we have come to know from Bones Fests. We're betting that the rhythm bone players who live out in Missouri, Kansas, Illinois, Oklahoma, and Nebraska will be as excited about our foray into their (Continued on page 2)

Bones and the Chieftains: a Musical Partnership

What must surely be the most recognizable Paddy Maloney.

Traditional Irish Music Presentation

Traditional Irish Music

Topics Covered:
1. Traditional Irish Music Instruments
2. Traditional Irish tunes
3. Music notation & Theory Related to Traditional Irish Music

Trad Irish Instruments
● Fiddle
● Irish Flute
● Tin/Penny Whistle
● Uilleann Pipes
● Harp
● Bodhrán
● Button Accordion
● Guitar
● Mandolin
● Bouzouki

Fiddle
● A fiddle is the same as a violin. For Irish music, it is tuned the same, low to high string: G, D, A, E.
● The term “fiddle” is used when referring to traditional or folk music.
● The fiddle is one of the primarily used instruments for traditional Irish music and has been used for over 200 years in Ireland.
● The medieval fiddle, which originated in Europe in the 10th century, was relatively square shaped and held in the arms.

Fiddle (cont.)
● The violin in its current form was first created in the early 16th century (early 1500s) in Northern Italy.
● When fiddlers play traditional Irish music, they ornament the music with slides, cuts (upper grace note), taps (lower grace note), rolls, drones (also known as a double stop), accents, staccato and sometimes trills.
● Irish fiddlers tend to make little use of vibrato, except for slow airs and waltzes, where it is also used sparingly.

Irish Flute
● Flutes have been played in Ireland for over a thousand years.
● There are two types of flutes: Irish flute and classical flute.
● Irish flute is typically used when playing Irish music.
● Irish flutes are made of wood and have a conical bore, giving them an airy tone that is softer than classical flute and tin whistle.
● This flute originated in England by flautist Charles Nicholson for concert players, but was adapted by Irish flautists as

A Sampling of Twenty-First-Century American Baroque Flute Pedagogy" (2018)

University of Nebraska - Lincoln
DigitalCommons@University of Nebraska - Lincoln
Student Research, Creative Activity, and Performance - School of Music
Music, School of
4-2018

State of the Art: A Sampling of Twenty-First-Century American Baroque Flute Pedagogy
Tamara Tanner, University of Nebraska-Lincoln, [email protected]

Follow this and additional works at: https://digitalcommons.unl.edu/musicstudent
Part of the Music Pedagogy Commons, and the Music Performance Commons

Tanner, Tamara, "State of the Art: A Sampling of Twenty-First-Century American Baroque Flute Pedagogy" (2018). Student Research, Creative Activity, and Performance - School of Music. 115. https://digitalcommons.unl.edu/musicstudent/115

This Article is brought to you for free and open access by the Music, School of at DigitalCommons@University of Nebraska - Lincoln. It has been accepted for inclusion in Student Research, Creative Activity, and Performance - School of Music by an authorized administrator of DigitalCommons@University of Nebraska - Lincoln.

STATE OF THE ART: A SAMPLING OF TWENTY-FIRST-CENTURY AMERICAN BAROQUE FLUTE PEDAGOGY
by Tamara J. Tanner
A Doctoral Document Presented to the Faculty of The Graduate College at the University of Nebraska In Partial Fulfillment of Requirements For the Degree of Doctor of Musical Arts
Major: Flute Performance
Under the Supervision of Professor John R. Bailey
Lincoln, Nebraska
April, 2018

STATE OF THE ART: A SAMPLING OF TWENTY-FIRST-CENTURY AMERICAN BAROQUE FLUTE PEDAGOGY
Tamara J. Tanner, D.M.A.
University of Nebraska, 2018
Advisor: John R. Bailey

During the Baroque flute revival in 1970s Europe, American modern flute instructors who were interested in studying Baroque flute traveled to Europe to work with professional instructors.

The Haybaler's

The Haybaler’s Jig

An 11 x 32 bar jig for 4 couples in a square. Composed 4 June 2013 by Stanford Ceili. Mixer variant of Haymaker’s Jig.

(24) Ring Into The Center, Pass Through, Set.
    (4) Ring Into The Center.
    (4) Pass through (pass opposite by Right shoulders). [1]
    (4) Set twice.
    (12) Repeat. [2]

(32) Corners Dance.
    (4) 1st corners turn by Right elbow.
    (4) 2nd corners repeat.
    (4) 1st corners turn by Left elbow.
    (4) 2nd corners repeat.
    (8) 1st corners swing (“long swing”).
    (8) 2nd corners repeat.

(16) Reel The Set. Note: dancers always turn people of the opposite gender in this figure.
    (4) #1 couple turn once and a half by Right elbow.
    (2) Turn top side-couple dancers by Left elbow once around.
    (2) Turn partner in center by Right elbow once and a half.
    (2) Repeat with next side-couple dancer.
    (2) Repeat with partner.
    (2) Repeat with #2 couple dancer.
    (2) Repeat with partner.

(2) #1 Couple To The Top.

(14) Cast Off, Arch, Turn.
    (12) Cast off and arch. #1 couple casts off at the top, each on own side.

[1] Based on the “Drawers” figure from the Russian Mazurka Quadrille. All pass their opposites by Right shoulder to opposite’s position. Ladies dance quickly at first, to pass their corners; then men dance quickly, to pass their contra-corners.
[2] Pass again by Right shoulders, with ladies passing the first man and then allowing the second man to pass her. This time, ladies pass their contra-corners, and are passed by their corners.

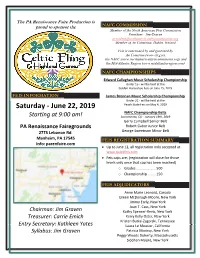

June 22, 2019

The PA Renaissance Faire Production is proud to sponsor the NAFC COMMISSION Member of the North American Feis Commission President: Jim Graven [email protected] Member of An Coimisiun, Dublin, Ireland Feis is sanctioned by and governed by An Comisiun (www.clrg.ie), the NAFC (www.northamericanfeiscommission.org) and the Mid-Atlantic Region (www.midatlanticregion.com) NAFC CHAMPIONSHIPS Edward Callaghan Music Scholarship Championship Under 15 - will be held at the Golden Horseshoe Feis on June 15, 2019 FEIS INFORMATION James Brennan Music Scholarship Championship Under 21 - will be held at the Peach State Feis on May 4, 2019 Saturday - June 22, 2019 NAFC Championship Belts Starting at 9:00 am! Sacramento, CA - January 19th, 2019 Gerry Campbell Senior Belt PA Renaissance Fairegrounds Robert Gabor Junior Belt 2775 Lebanon Rd. George Sweetnam Minor Belt Manheim, PA 17545 FEIS REGISTRATION SUMMARY Info: parenfaire.com Up to June 12, all registration only accepted at www.quickfeis.com Feis caps are: (registration will close for those levels only once that cap has been reached) o Grades ..................... 500 o Championship ......... 150 FEIS ADJUDICATORS Anne Marie Leonard, Canada Eileen McDonagh-Moore, New York Jimmy Early, New York Joan T. Cass, New York Chairman: Jim Graven Kathy Spencer-Revis, New York Treasurer: Carrie Emich Kerry Kelly Oster, New York Kristen Butke-Zagorski, Tennessee Entry Secretary: Kathleen Yates Laura Le Meusier, California Syllabus: Jim Graven Patricia Morissy, New York Peggy Woods Doherty, Massachusetts Siobhan Moore, New York COMPETITION FEES CELTIC FLING FEIS FESTIVAL Feis Admission includes ONE day ticket to the festival Competition Fees FEES (you can purchase a second day ticket at registration) Solo Dancing / TR / Sets / Non-Dance Kick-off concert on Friday featuring great performers! 
$ 10 Bring your appetite and enjoy delicious foods and (per competitor and per dance) refreshing wines, ales & ciders while listening to the live Preliminary Championship music! Gates open at 4PM.