A Primer on Pretrained Multilingual Language Models

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

Begräbnistexte Im Sozialen Wandel Der Han-Zeit: Eine Typologische Untersuchung Der Jenseitsvorstellung, Heidelberg: Crossasia-Ebooks, 2021

Literaturverzeichnis Akizuki 1987: Akizuki Kan’ei 秋月觀暎, Hrsg. Dokyō to shūkyō bunka 道教と宗教文化 [Taoism and Religious Culture]. Tokyo: Hirakawa Shuppansha 平河出版社, 1987. Allan & Xing 2004: Allan, Sarah 艾蘭, und Xing Wen 邢文. Xinchu jianbo yanjiu 新出簡帛研究. Beijing: Wenwu chubanshe 文物出版社, 2004. An Jinhuai et al 1993: An Jinhuai 安金槐, Wang Yugang 王與剛, Xu Shunzhan 許順湛, Liu Jianz- hou 劉建洲 et al, und Henan sheng wenwu yanjiusuo 河南省文物研究所. Mixian dahuting hanmu 密縣打虎亭漢墓. Beijing: Wenwu chubanshe 文物出版社, 1993. An Zhimin 1973: An Zhimin 安志敏. „Changsha xin faxian de xihan bohua shitan 長沙新發現的西 漢帛畫試探“. Kaogu 考古, Nr. 1 (1973): 43-53. An Zhongyi 2012: An Zhongyi 安忠義. „Qinhan jiandu zhong de zhishu yu zhiji kaobian 秦漢簡牘 中的“致書”與“致籍”考辨“. Jianghan kaogu 江漢考古, Nr. 1 (2012): 111-16. Ao Chenglong 1959: Ao Chenglong 敖承隆, und Hebei sheng wenhuaju wenwu gongzuodui 河北 省文化局文物工作隊. Wangdu erhao hanmu 望都二號漢墓. Beijing: Wenwu chubanshe 文 物出版社, 1959. Ao Chenglong 1964: Ao Chenglong 敖承隆, und Hebei sheng wenhuaju wenwu gongzuodui 河北 省文化局文物工作隊. „Hebei dingxian beizhuang hanmu fajue baogao 河北定縣北莊漢墓 發掘報告“. Kaogu xuebao 考古學報, Nr. 2 (1964): 127-194/243-254. Assmann & Hölscher 1988: Assmann, Jan, und Tonio Hölscher. Kultur und Gedächtnis. Frankfurt am Main: Suhrkamp, 1988. Assmann 1992: Assmann, Jan. Das kulturelle Gedächtnis: Schrift, Erinnerung und politische Identität in frühen Hochkulturen. München: Beck, 1992. Bai Qianshen 2006: Bai Qianshen 白謙慎. Übersetzt von Sun Jingru 孫靜如, und Zhang Jiajie 張 佳傑. Fu Shan de shijie: shiqi shiji zhongguo shufa de shanbian 傅山的世界: 十七世紀中國 書法的嬗變 [Fu Shan‘s World: the Transformation of Chinese Calligraphy in the Seventeenth Century]. Beijing: Sanlian shudian 三聯书店, 2006. -

救苦(X2)天尊 Shi Fang Jiu Ku (X2) Tian Zun

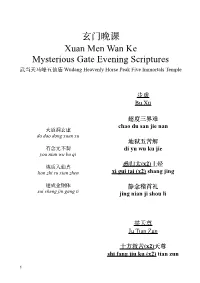

玄门晚课 Xuan Men Wan Ke Mysterious Gate Evening Scriptures 武当天马峰五仙庙 Wudang Heavenly Horse Peak Five Immortals Temple 步虚 Bu Xü 超度三界难 chao du san jie nan ⼤道洞玄虚 da dao dong xuan xu 地狱五苦解 有念无不契 di yu wu ku jie you nian wu bu qi (x2) 炼质入仙真 悉归太 上经 lian zhi ru xian zhen xi gui tai (x2) shang jing 遂成⾦钢体 静念稽⾸礼 sui cheng jin gang ti jing nian ji shou li 举天尊 Ju Tian Zun ⼗⽅救苦(x2)天尊 shi fang jiu ku (x2) tian zun !1 吊挂 便是升天(x2)得道⼈ Diao Gua bian shi sheng tian (x2) de dao ren 种种无名(x2)是苦(x2)根 zhong zhong wu ming (x2) shi ku (x2)gen ⾹供养 苦根除尽(x2)善根存 Xiang Gong Yang ku gen chu jin (x2) shan gen (only on 1st and 15th) cun ⾹供养太⼄(x2)救苦天尊 但凭慧剑(x2)威神(x2)⼒ xiang gong yang tai yi (x2) jiu dan ping hui jian (x2)wei ku tian zun shen (x2) li 跳出轮回(x2)无苦门 提纲 tiao chu lun hui(x2) wu ku Ti Gang men (only on 1st and 15th) 道以无⼼(x2)度有(x2)情 ⼀柱真⾹烈⽕焚 dao yi wu xin (x2) du you (x2) yi zhu zhen xiang lie huo fen qing ⾦童⽟女上遥闻 ⼀切⽅便(x2)是修真 jin tong yu nü shang yao wen yi qie fang bian (x2) shi xiu zhen 此⾹径达青华府 ci xiang jing da qing hua fu 若皈圣智(x2)圆通(x2)地 ruo gui sheng zhi (x2) yuan 奏启寻声救苦尊 tong(x2) di zou qi xun sheng jiu ku zun !2 反八天 提纲 Fan Ba Tian Ti Gang (only on 1st and 15th) 道场众等 dao chang zhong deng 救苦天尊妙难量 jiu ku tian zun miao nan liang ⼈各恭敬 ren ge gong jing 开化⼈天度众⽣ kai hua ren tian du zhong 恭对道前 sheng gong dui dao qian 存亡两途皆利济 诵经如法 cun wang liang tu jie li ji song jing ru fa 众等讽诵太上经 zhong deng feng song tai shang jing !3 玄蕴咒 真⼈无上德 Xuan Yun Zhou zhen ren wu shang de 寂寂至无宗 世世为仙家 ji ji zhi wu zong shi shi wei xian jia 虚峙劫仞阿 xu zhi jie ren e 豁落洞玄⽂ huo luo dong xuan wen 谁测此幽遐 -

Pre-Train, Prompt, and Predict: a Systematic Survey of Prompting Methods in Natural Language Processing

Pre-train, Prompt, and Predict: A Systematic Survey of Prompting Methods in Natural Language Processing Pengfei Liu Weizhe Yuan Jinlan Fu Carnegie Mellon University Carnegie Mellon University National University of Singapore [email protected] [email protected] [email protected] Zhengbao Jiang Hiroaki Hayashi Graham Neubig Carnegie Mellon University Carnegie Mellon University Carnegie Mellon University [email protected] [email protected] [email protected] Abstract This paper surveys and organizes research works in a new paradigm in natural language processing, which we dub “prompt-based learning”. Unlike traditional supervised learning, which trains a model to take in an input x and predict an output y as P (y x), prompt-based learning is based on language models that model the probability of text directly. To usej these models to perform prediction tasks, the original input x is modified using a template into a textual string prompt x0 that has some unfilled slots, and then the language model is used to probabilistically fill the unfilled information to obtain a final string x^, from which the final output y can be derived. This framework is powerful and attractive for a number of reasons: it allows the language model to be pre-trained on massive amounts of raw text, and by defining a new prompting function the model is able to perform few-shot or even zero-shot learning, adapting to new scenarios with few or no labeled data. In this paper we introduce the basics of this promising paradigm, describe a unified set of mathematical notations that can cover a wide variety of existing work, and organize existing work along several dimensions, e.g. -

Acupuncture Heals Erectile Dysfunction Finding Published by Healthcmi on 02 May 2018

Acupuncture Heals Erectile Dysfunction Finding Published by HealthCMI on 02 May 2018. Acupuncture and herbs are effective for the treatment of erectile dysfunction. In research conducted at the Yunnan Provincial Hospital of Traditional Chinese Medicine, acupuncture plus herbs produced a 46.56% total effective rate. Using only herbal medicine produced a 16.17% total effective rate and using only acupuncture produced an 13.24% total effective rate. [1] The researchers conclude, “Acupuncture combined with herbal medicine has a synergistic therapeutic effect on erectile dysfunction.” Erectile dysfunction (ED) is a sexual disorder characterized by the persistent inability to achieve or maintain an erection sufficient for satisfactory sexual intercourse (>3 months). [2] Recently, ED has become a global health problem and is estimated to affect 322 million males by 2025. [3] In Traditional Chinese medicine (TCM), ED is in the scope of Jin Wei (translated as tendon wilting) and is related to aging, excessive masturbation or sex, emotional conditions (e.g., depression), congenital deficiencies, or improper diet. The main TCM diagnostic patterns are liver qi stagnation, blood stasis, and kidney essence deficiency. In this study, the researchers note, “Emotion conditions can harm the liver and cause liver qi stagnation. According to TCM principles, the liver governs the tendons and the genitals are the gathering place of the ancestral tendon. The liver failing to course freely causes obstruction of the liver governed meridians and malnourishment of the ancestral tendon, leading to ED. On the other hand, insufficiency of kidney essence may result in debilitation of the life gate fire. In other words, essence from the kidney fails to be transported into the ancestral tendon, making the genitalia become limp and wilted.” The treatment principle is to smooth the flow of liver qi, free the meridians for invigorating blood circulation, and tonifying kidney essence. -

Chinese Folk Religion

Chinese folk religion: The '''Chinese folk religion''' or '''Chinese traditional religion''' ( or |s=中国民间宗教 or 中国民间信 仰|p=Zhōngguó mínjiān zōngjiào or Zhōngguó mínjiān xìnyăng), sometimes called '''Shenism''' (pinyin+: ''Shénjiào'', 神教),refn|group=note| * The term '''Shenism''' (神教, ''Shénjiào'') was first used in 1955 by anthropologist+ Allan J. A. Elliott, in his work ''Chinese Spirit-Medium Cults in Singapore''. * During the history of China+ it was named '''Shendao''' (神道, ''Shéndào'', the "way of the gods"), apparently since the time of the spread of Buddhism+ to the area in the Han period+ (206 BCE–220 CE), in order to distinguish it from the new religion. The term was subsequently adopted in Japan+ as ''Shindo'', later ''Shinto+'', with the same purpose of identification of the Japanese indigenous religion. The oldest recorded usage of ''Shindo'' is from the second half of the 6th century. is the collection of grassroots+ ethnic+ religious+ traditions of the Han Chinese+, or the indigenous religion of China+.Lizhu, Na. 2013. p. 4. Chinese folk religion primarily consists in the worship of the ''shen+'' (神 "gods+", "spirit+s", "awarenesses", "consciousnesses", "archetype+s"; literally "expressions", the energies that generate things and make them thrive) which can be nature deities+, city deities or tutelary deities+ of other human agglomerations, national deities+, cultural+ hero+es and demigods, ancestor+s and progenitor+s, deities of the kinship. Holy narratives+ regarding some of these gods are codified into the body of Chinese mythology+. Another name of this complex of religions is '''Chinese Universism''', especially referring to its intrinsic metaphysical+ perspective. The Chinese folk religion has a variety of sources, localised worship forms, ritual and philosophical traditions. -

Herbal Formulas Chinamed® Capsules

Sun Herbal Professional Seminar Supporting you to achieve outstanding results for your patients Herbal formulas ChinaMed® capsules • MODERATE: 2-3 capsules, 2-3 times/day - chronic conditions, weak and sensitive patients • MEDIUM: 3-4 capsules, 3 times/day - general use in the initial stages of treatment • HIGH: 6 capsules, 3-4 times/day - acute infections • VERY HIGH: 10-12 capsules, 3 times/day for 3-4 days: severe and acute infection in a robust patient Black Pearl® pills • MODERATE: 8 pills, 3 times/day - chronic conditions, weak and sensitive patients • MEDIUM: 12 pills, 3 times/day - general use in the initial stages of treatment • HIGH: 12-15 pills, 3-4 times/day - acute infections • VERY HIGH: 18-20 pills, 3-4 times/day for 3-4 days: severe and acute infection in a robust patient • develop your own experiences • contraindications in manual Move the blood MENSTRUAL RELIEF (CM127) TCM ACTIONS & SYNDROMES TONG JING FANG/ Dang-gui & Notoginseng (BP026) Activates the blood and breaks up stasis. Alleviates pain. INGREDIENTS • dang-gui/angelica root, bai shao/paeonia lactiflora root, chi Blood stasis in the Chong & Ren shao/paeonia obovata root, chuan xiong/ligusticum root, dan shen/salvia root: nourish the blood, promote movement of channels (uterus) blood • dan shen/salvia root, chi shao/paeonia obovata root, yan hu suo/corydalis rhizome, pu huang/typha pollen, tao ren/prunus seed, e zhu/curcuma rhizome, tian qi/notoginseng, hong hua/ carthamus flower: activate the blood, breaks stasis and alleviates pain • xiang fu/cyperus rhizome, wu -

Herbs That Tonify Yang

HERBS THAT TONIFY YANG Revised: 9/7/2019 Sources: • Bensky, D. (2004). Chinese Herbal Medicine: Materia Medica. Seattle, WA: Eastland Press. pp. 743-766 • Chen, J. and Chen, T. (2004). Chinese Medical Herbology and Pharmacology. Art of Medicine Press. pp. 918-938 Herbs that Tonify Yang Signs and Symptoms of Kidney yang deficiency: • Low back and knee pain • Urination problems • Tinnitus • Sexual problems and infertility problems • OB/GYN and menstrual problems • Watery, thin, early-morning diarrhea with undigested food • Deep pulse • Pale (blue) tongue, swollen, wet, teeth marks These herbs are commonly combined with Herbs that Warm the Interior. Many of these herbs tonify Kidney Yin as well. Some of these herbs can treat respiratory problems by strengthening the Kidney so that it can grasp descending Lung qi. Caution: These herbs are warm and acrid, and may cause heat signs if used longterm. Herbs that Tonify Yang Taste: • sweet Temperature: • warm Channels: • KI, SP, HT Cautions & • These herbs are sweet and cloying; overuse can cause Contraindications: digestive problems Main Action: • Tonify blood and nourish yin Combine with herbs the move or regulate qi to prevent Other: • stagnation Herbs that Tonify Yang • lù róng • gǒu jǐ • gé jiè • hé táo rén • dōng chóng xià cǎo • yì zhì rén • ròu cōng róng • xiān máo • suǒ yáng • dù zhòng • yín yáng huò • xù duàn • bā jǐ tiān • gǔ suì bǔ • hú lú bā • tù sī zǐ • bǔ gǔ zhī • zǐ hé chē lù róng cervi cornu pantotrichum ⿅ Temp: warm Taste: sweet, salty Channels: KI, LV 茸 Dosage: 1-2 grams (pill or powder) 1. -

An Integrated Approach for Studying Exposure, Metabolism and Disposition of Multiple Component Herbal Medicines Using High Resolution

DMD Fast Forward. Published on March 24, 2016 as DOI: 10.1124/dmd.115.068189 This article has not been copyedited and formatted. The final version may differ from this version. DMD # 68189 An integrated approach for studying exposure, metabolism and disposition of multiple component herbal medicines using high resolution mass spectrometry and multiple data processing tools Caisheng Wu, Haiying Zhang, Caihong Wang, Hailin Qin, Mingshe Zhu and Jinlan Zhang Downloaded from State Key Laboratory of Bioactive Substance and Function of Natural Medicines, Institute of Materia Medica, Chinese Academy of Medical Sciences& Peking Union Medical College, Beijing, China (C.W., C.W., H.Q., J.Z.), and Department of Biotransformation, Bristol-Myers Squibb Company, Princeton, USA (H.Z., M.Z.) dmd.aspetjournals.org at ASPET Journals on September 27, 2021 1 DMD Fast Forward. Published on March 24, 2016 as DOI: 10.1124/dmd.115.068189 This article has not been copyedited and formatted. The final version may differ from this version. DMD # 68189 Running Title Study ADME of multiple TCM components using HRMS technology Corresponding author: Mingshe Zhu Department of Biotransformation, Bristol-Myers Squibb Company, Princeton, NJ 08540, USA; Tel (609) 252-3324; E-mail: [email protected] Jinlan Zhang State Key Laboratory of Bioactive Substances and Functions of Natural Medicines, Institute of Downloaded from Materia Medica, Chinese Academy of Medical Sciences& Peking Union Medical College, 2 Nanwei Road,Beijing 100050, China; Tel & Fax: 086 10 83154880; E-mail: -

Two Immortals Formula Er Xian Tang

Far East Summit ®® Two Immortals Formula Er Xian Tang Biomedical Presentations Essential hypertension, urinary tract infection, chronic glomerulonephritis, chronic pyelonephritis, polycystic kidneys, hypofunction of the anterior pituitary, hyperthyroidism, and menopausal syndrome . Clinical Presentation Hot flashes , cold lower extremities , night sweats , insomnia, headache, tinnitus, dizziness, low back and knee soreness and weakness , irritability , frequent urination , nocturia , decreased libido, impotence and irregular menstruation. Tongue Pale tongue with scant coat. Pulse Fine, wiry, rapid, and possibly floating pulse. Energetic Functions Enriches and supplements the liver and kidneys, warms yang while draining fire, regulates and harmonizes chong and ren. Chinese Medical Indications Liver blood and kidney yin and yang vacuity. Loss of harmony of the chong and ren. Herbal Ingredients and Analysis Pharmaceutical Latin Binomial Pinyin English Rhizoma Curculiginis Orchoidis Curculigo orchioides xian mao Curculigo rhizome Herba Epimedii Epimedium xian ling pi Epimedium herb grandiflorum (yin yang huo ) Radix Morindae Officinalis Morinda officinalis ba ji tian Morinda root Cortex Phellodendri Phellodendron huang bai Phellodendron bark chinense or P. amurense Rhizoma Anemarrhenae Asphodeloidis Anemarrhena zhi mu Anemarrhena rhizome asphodeloides Radix Angelicae Sinensis Angelica sinensis dang gui Dong Quai root Two Immortals (cont.) Herbal Ingredients and Analysis (cont.) Cuculigo, Epimedium and Morinda warm and supplement the kidney. Epimedium supplements both kidney yin and yang and harnesses ascendant liver yang. Phellodendron and Anemarrhena nourish kidney yin and drain fire. Dong Quai root moistens, nourishes and quickens the blood and regulates the penetrating conception vessels . Formula Differentiation Anem-Phello & Rehmannia Formula and Two Immortals Formula both treat menopausal syndrome. 
While Anem-Phello & Rehmannia Formula treats yin vacuity-fire without yang vacuity, Two Immortals Formula treats dual yin and yang vacuity with vacuity-fire. -

Simultaneous Quantification of Multiple Representative

molecules Article Simultaneous Quantification of Multiple Representative Components in the Xian-Ling-Gu-Bao Capsule by Ultra-Performance Liquid Chromatography Coupled with Quadrupole Time-of-Flight Tandem Mass Spectrometry Zhi-Hong Yao 1,2,†,*, Zi-Fei Qin 1,2,3,†, Hong Cheng 1, Xiao-Meng Wu 1, Yi Dai 1,2,*, Xin-Luan Wang 4, Ling Qin 4, Wen-Cai Ye 1,2,3 and Xin-Sheng Yao 1,2,3 1 College of Pharmacy, Jinan University, Guangzhou 510632, China; [email protected] (Z.-F.Q.); [email protected] (H.C.); [email protected] (X.-M.W.); [email protected] (W.-C.Y.); [email protected] (X.-S.Y.) 2 Guangdong Provincial Key Laboratory of Pharmacodynamic Constituents of TCM and New Drugs Research, College of Pharmacy, Jinan University, Guangzhou 510632, China 3 G Integrated Chinese and Western Medicine Postdoctoral Research Station, Jinan University, Guangzhou 510632, China 4 Musculoskeletal Research Laboratory, Department of Orthopedics and Traumatology, the Chinese University of Hong Kong, Satin, N.T. Hong Kong SAR, China; [email protected] (X.-L.W.); [email protected] (L.Q.) * Correspondence: [email protected] or [email protected] (Z.-H.Y.); [email protected] (Y.D.); Tel.: + 86-20-85221767(Z.-H.Y.); +86-20-85220785 (Y.D.); Fax: +86-20-85221559 (Z.-H.Y. & Y.D.) † These authors contributed equally to this work. Academic Editor: Derek J. McPhee Received: 19 April 2017; Accepted: 1 June 2017; Published: 2 June 2017 Abstract: Xian-Ling-Gu-Bao capsule (XLGB), a famous traditional Chinese medicine prescription, is extensively used for the treatment of osteoporosis in China. -

Download Formula Appendix

Appendix 4 PRESCRIPTIONS AI FU NUAN GONG WAN AN YING NIU HUANG WAN Artemisia-Cyperus Warming the Uterus Pill Calming the Nutritive-Qi [Level] Calculus Bovis Pill Ai Ye Folium Artemisiae argyi 9 g Niu Huang Calculus Bovis 3 g Wu Zhu Yu Fructus Evodiae 4.5 g Yu Jin Radix Curcumae 9 g Rou Gui Cortex Cinnamomi 4.5 g Shui Niu Jiao Cornu Bubali 6 g Xiang Fu Rhizoma Cyperi 9 g Huang Lian Rhizoma Coptidis 6 g Dang Gui Radix Angelicae sinensis 9 g Zhu Sha Cinnabaris 1.5 g Chuan Xiong Rhizoma Chuanxiong 6 g Shan Zhi Zi Fructus Gardeniae 6 g Bai Shao Radix Paeoniae alba 6 g Xiong Huang Realgar 0.15 g Huang Qi Radix Astragali 6 g Huang Qin Radix Scutellariae 9 g Sheng Di Huang Radix Rehmanniae 9 g Zhen Zhu Mu Concha Margatiriferae usta 12 g Xu Duan Radix Dipsaci 6 g Bing Pian Borneolum 3 g She Xiang Moschus 1 g AN SHEN DING ZHI WAN Calming the Mind and Settling the Spirit Pill Ren Shen Radix Ginseng 9 g Fu Ling Poria 12 g Chang Pu Rhizoma Acori tatarinowii. Fu Shen Sclerotium Poriae pararadicis 9 g Long Chi Fossilia Dentis Mastodi 15 g BA XIAN CHANG SHOU WAN Eight Immortals Longevity Pill Yuan Zhi Radix Polygalae 6 g Shi Chang Pu Rhizoma Acori tatarinowii 8 g Shu Di Huang Radix Rehmanniae preparata 24 g Shan Zhu Yu Fructus Corni 12 g AN SHEN DING ZHI WAN Calming the Mind and Settling the Spirit Pill Variation Shan Yao Rhizoma Dioscoreae 12 g Ze Xie Rhizoma Alismatis 9 g Mu Dan Pi Cortex Moutan 9 g Ren Shen Radix Ginseng 9 g Fu Ling Poria 9 g Mai Men Dong Radix Ophiopogonis 9 g Fu Shen Sclerotium Poriae pararadicis 9 g Wu Wei Zi Fructus Schisandrae -

White Button Mushroom Extracts Modulate Hepatic Fibrosis Progression, Inflammation, and Oxidative Stress in Vitro and in LDLR-/- Mice

foods Article White Button Mushroom Extracts Modulate Hepatic Fibrosis Progression, Inflammation, and Oxidative Stress In Vitro and in LDLR-/- Mice Paloma Gallego 1,†, Amparo Luque-Sierra 2,† , Gonzalo Falcon 3, Pilar Carbonero 3, Lourdes Grande 1, Juan D. Bautista 3, Franz Martín 2,4,* and José A. Del Campo 1,5,* 1 Unit for Clinical Management of Digestive Diseases and CIBERehd, Valme University Hospital, 41014 Seville, Spain; [email protected] (P.G.); [email protected] (L.G.) 2 Andalusian Center for Molecular Biology and Regenerative Medicine (CABIMER), University of Pablo de Olavide-University of Sevilla-CSIC, 41013 Seville, Spain; [email protected] 3 Department of Biochemistry and Molecular Biology, Faculty of Pharmacy, University of Sevilla, 41012 Seville, Spain; [email protected] (G.F.); [email protected] (P.C.); [email protected] (J.D.B.) 4 Biomedical Research Network on Diabetes and Related Metabolic Diseases-CIBERDEM, 28029 Madrid, Spain 5 ALGAENERGY S.A. Avda. de Europa 19, Alcobendas, 28018 Madrid, Spain * Correspondence: [email protected] (F.M.); [email protected] (J.A.D.C.); Tel.: +34-954-467-427 (F.M.); +34-955-015-485 (J.A.D.C.) † These authors contributed equally to this work. Citation: Gallego, P.; Luque-Sierra, Abstract: Liver fibrosis can be caused by non-alcoholic steatohepatitis (NASH), among other condi- A.; Falcon, G.; Carbonero, P.; Grande, tions. We performed a study to analyze the effects of a nontoxic, water-soluble extract of the edible L.; Bautista, J.D.; Martín, F.; Del mushroom Agaricus bisporus (AB) as a potential inhibitor of fibrosis progression in vitro using human Campo, J.A.