Neutrality-Based Symmetric Cryptanalysis

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Increasing Cryptography Security Using Hash-Based Message

ISSN (Print) : 2319-8613 ISSN (Online) : 0975-4024 Seyyed Mehdi Mousavi et al. / International Journal of Engineering and Technology (IJET) Increasing Cryptography Security using Hash-based Message Authentication Code Seyyed Mehdi Mousavi*1, Dr.Mohammad Hossein Shakour 2 1-Department of Computer Engineering, Shiraz Branch, Islamic AzadUniversity, Shiraz, Iran . Email : [email protected] 2-Assistant Professor, Department of Computer Engineering, Shiraz Branch, Islamic Azad University ,Shiraz ,Iran Abstract Nowadays, with the fast growth of information and communication technologies (ICTs) and the vulnerabilities threatening human societies, protecting and maintaining information is critical, and much attention should be paid to it. In the cryptography using hash-based message authentication code (HMAC), one can ensure the authenticity of a message. Using a cryptography key and a hash function, HMAC creates the message authentication code and adds it to the end of the message supposed to be sent to the recipient. If the recipient of the message code is the same as message authentication code, the packet will be confirmed. The study introduced a complementary function called X-HMAC by examining HMAC structure. This function uses two cryptography keys derived from the dedicated cryptography key of each packet and the dedicated cryptography key of each packet derived from the main X-HMAC cryptography key. In two phases, it hashes message bits and HMAC using bit Swapp and rotation to left. The results show that X-HMAC function can be a strong barrier against data identification and HMAC against the attacker, so that it cannot attack it easily by identifying the blocks and using HMAC weakness. -

CHAPTER 9: ANALYSIS of the SHA and SHA-L HASH ALGORITHMS

CHAPTER 9: ANALYSIS OF THE SHA AND SHA-l HASH ALGORITHMS In this chapter the SHA and SHA-l hash functions are analysed. First the SHA and SHA-l hash functions are described along with the relevant notation used in this chapter. This is followed by describing the algebraic structure of the message expansion algorithm used by SHA. We then proceed to exploit this algebraic structure of the message expansion algorithm by applying the generalised analysis framework presented in Chapter 8. We show that it is possible to construct collisions for each of the individual rounds of the SHA hash function. The source code that implements the attack is attached in Appendix F. The same techniques are then applied to SHA-l. SHA is an acronym for Secure Hash Algorithm. SHA and SHA-l are dedicated hash func- tions based on the iterative Damgard-Merkle construction [22] [23]. Both of the round func- tions utilised by these algorithms take a 512 bit input (or a multiple of 512) and produce a 160 bit hash value. SHA was first published as Federal Information Processing Standard 180 (FIPS 180). The secure hash algorithm is based on principles similar to those used in the design of MD4 [10]. SHA-l is a technical revision of SHA and was published as FIPS 180-1 [13]. It is believed that this revision makes SHA-l more secure than SHA [13] [50] [59]. SHA and SHA-l differ from MD4 with regard to the number of rounds used, the size of the hash result and the definition of a single step. -

Hash Functions from Sigma Protocols and Improvements to VSH

Hash Functions from Sigma Protocols and Improvements to VSH Mihir Bellare and Todor Ristov Department of Computer Science and Engineering, University of California San Diego, 9500 Gilman Drive, La Jolla, CA 92093-0404, USA. URL: www-cse.ucsd.edu/users/mihir, www-cse.ucsd.edu/users/tristov Abstract. We present a general way to get a provably collision-resistant hash function from any (suitable) Σ-protocol. This enables us to both get new designs and to unify and improve previous work. In the first category, we obtain, via a modified version of the Fiat-Shamir proto- col, the fastest known hash function that is provably collision-resistant based on the standard factoring assumption. In the second category, we provide a modified version VSH* of VSH which is faster when hash- ing short messages. (Most Internet packets are short.) We also show that Σ-hash functions are chameleon, thereby obtaining several new and efficient chameleon hash functions with applications to on-line/off-line signing, chameleon signatures and designated-verifier signatures. 1 Introduction The failure of popular hash functions MD5 and SHA-1 [42, 43] lends an impetus to the search for new ones. The contention of our paper is that there will be a \niche" market for proven-secure even if not-so-fast hash functions. Towards this we provide a general paradigm that yields hash functions provably secure under number-theoretic assumptions, and also unifies, clarifies and improves previous constructs. Our hash functions have extra features such as being chameleon [25]. Let us now look at all this in more detail. -

Ray Bradbury Creative Contest Literary Journal

32nd Annual Ray Bradbury Creative Contest Literary Journal 2016 Val Mayerik Val Ray Bradbury Creative Contest A contest of writing and art by the Waukegan Public Library. This year’s literary journal is edited, designed, and produced by the Waukegan Public Library. Table of Contents Elementary School Written page 1 Middle School Written page 23 High School Written page 52 Adult Written page 98 Jennifer Herrick – Designer Rose Courtney – Staff Judge Diana Wence – Staff Judge Isaac Salgado – Staff Judge Yareli Facundo – Staff Judge Elementary School Written The Haunted School Alexis J. In one wonderful day there was a school-named “Hyde Park”. One day when, a kid named Logan and his friend Mindy went to school they saw something new. Hyde Park is hotel now! Logan and Mindy Went inside to see what was going on. So they could not believe what they say. “Hyde Park is also now haunted! When Logan took one step they saw Slender Man. Then they both walk and there was a scary mask. Then mummies started coming out of the grown and zombies started coming from the grown and they were so stinky yuck! Ghost came out all over the school and all the doors were locked. Now Mindy had a plan to scare all the monsters away. She said “we should put all the monsters we saw all together. So they make Hyde Park normal again. And they live happy ever after and now it is back as normal. THE END The Haunted House Angel A. One day it was night. And it was so dark a lot of people went on a house called “dead”. -

MD5 Collisions the Effect on Computer Forensics April 2006

Paper MD5 Collisions The Effect on Computer Forensics April 2006 ACCESS DATA , ON YOUR RADAR MD5 Collisions: The Impact on Computer Forensics Hash functions are one of the basic building blocks of modern cryptography. They are used for everything from password verification to digital signatures. A hash function has three fundamental properties: • It must be able to easily convert digital information (i.e. a message) into a fixed length hash value. • It must be computationally impossible to derive any information about the input message from just the hash. • It must be computationally impossible to find two files to have the same hash. A collision is when you find two files to have the same hash. The research published by Wang, Feng, Lai and Yu demonstrated that MD5 fails this third requirement since they were able to generate two different messages that have the same hash. In computer forensics hash functions are important because they provide a means of identifying and classifying electronic evidence. Because hash functions play a critical role in evidence authentication, a judge and jury must be able trust the hash values to uniquely identify electronic evidence. A hash function is unreliable when you can find any two messages that have the same hash. Birthday Paradox The easiest method explaining a hash collision is through what is frequently referred to as the Birthday Paradox. How many people one the street would you have to ask before there is greater than 50% probability that one of those people will share your birthday (same day not the same year)? The answer is 183 (i.e. -

Design Principles for Hash Functions Revisited

Design Principles for Hash Functions Revisited Bart Preneel Katholieke Universiteit Leuven, COSIC bartDOTpreneel(AT)esatDOTkuleuvenDOTbe http://homes.esat.kuleuven.be/ preneel � October 2005 Outline Definitions • Generic Attacks • Constructions • A Comment on HMAC • Conclusions • are secure; they can be reduced to @ @ 2 classes based on linear transfor @ @ mations of variables. The properties @ of these 12 schemes with respect to @ @ weaknesses of the underlying block @ @ cipher are studied. The same ap proach can be extended to study keyed hash functions (MACs) based - -63102392168 on block ciphers and hash functions h based on modular arithmetic. Fi nally a new attack is presented on a scheme suggested by R. Merkle. This slide is now shown at the VI Spanish meeting on Information Se curity and Cryptology in a presenta tion on the state of hash functions. 2 Informal definitions (1) no secret parameters • x arbitrary length fixed length n • ) computation “easy” • One Way Hash Function (OWHF): preimage resistant: ! h(x) x with h(x) = h(x ) • 6) 0 0 2nd preimage resistant: • ! x; h(x) x (= x) with h(x ) = h(x) 6) 0 6 0 Collision Resistant Hash Function (CRHF) = OWHF + collision resistant: • x, x (x = x) with h(x) = h(x ). 6) 0 0 6 0 3 Informal definitions (2) preimage resistant 2nd preimage resistant 6) take a preimage resistant hash function; add an input bit b and • replace one input bit by the sum modulo 2 of this input bit and b 2nd preimage resistant preimage resistant 6) if h is OWHF, h is 2nd preimage resistant but not preimage • resistant 0 X if X n h(X) = 1kh(X) otherwise.j j < ( k collision resistant 2nd preimage resistant ) [Simon 98] one cannot derive collision resistance from ‘general’ preimage resistance 4 Formal definitions: (2nd) preimage resistance Notation: L = 0; 1 , l(n) > n f g A one-way hash function H is a function with domain D = Ll(n) and range R = Ln that satisfies the following conditions: preimage resistance: let x be selected uniformly in D and let M • be an adversary that on input h(x) uses time t and outputs < M(h(x)) D. -

Cryptanalysis of Stream Ciphers Based on Arrays and Modular Addition

KATHOLIEKE UNIVERSITEIT LEUVEN FACULTEIT INGENIEURSWETENSCHAPPEN DEPARTEMENT ELEKTROTECHNIEK{ESAT Kasteelpark Arenberg 10, 3001 Leuven-Heverlee Cryptanalysis of Stream Ciphers Based on Arrays and Modular Addition Promotor: Proefschrift voorgedragen tot Prof. Dr. ir. Bart Preneel het behalen van het doctoraat in de ingenieurswetenschappen door Souradyuti Paul November 2006 KATHOLIEKE UNIVERSITEIT LEUVEN FACULTEIT INGENIEURSWETENSCHAPPEN DEPARTEMENT ELEKTROTECHNIEK{ESAT Kasteelpark Arenberg 10, 3001 Leuven-Heverlee Cryptanalysis of Stream Ciphers Based on Arrays and Modular Addition Jury: Proefschrift voorgedragen tot Prof. Dr. ir. Etienne Aernoudt, voorzitter het behalen van het doctoraat Prof. Dr. ir. Bart Preneel, promotor in de ingenieurswetenschappen Prof. Dr. ir. Andr´eBarb´e door Prof. Dr. ir. Marc Van Barel Prof. Dr. ir. Joos Vandewalle Souradyuti Paul Prof. Dr. Lars Knudsen (Technical University, Denmark) U.D.C. 681.3*D46 November 2006 ⃝c Katholieke Universiteit Leuven { Faculteit Ingenieurswetenschappen Arenbergkasteel, B-3001 Heverlee (Belgium) Alle rechten voorbehouden. Niets uit deze uitgave mag vermenigvuldigd en/of openbaar gemaakt worden door middel van druk, fotocopie, microfilm, elektron- isch of op welke andere wijze ook zonder voorafgaande schriftelijke toestemming van de uitgever. All rights reserved. No part of the publication may be reproduced in any form by print, photoprint, microfilm or any other means without written permission from the publisher. D/2006/7515/88 ISBN 978-90-5682-754-0 To my parents for their unyielding ambition to see me educated and Prof. Bimal Roy for making cryptology possible in my life ... My Gratitude It feels awkward to claim the thesis to be singularly mine as a great number of people, directly or indirectly, participated in the process to make it see the light of day. -

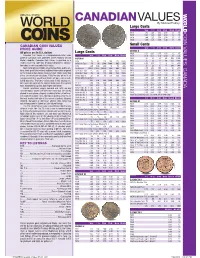

*UPDATED Canadian Values 07-04 201 7/26/2016 4:42:21 PM *UPDATED Canadian Values 07-04 202 COIN VALUES: CANADA 02 .0 .0 12

CANADIAN VALUES By Michael Findlay Large Cents VG-8 F-12 VF-20 EF-40 MS-60 MS-63R 1917 1.00 1.25 1.50 2.50 13. 45. CANADA COIN VALUES: 1918 1.00 1.25 1.50 2.50 13. 45. 1919 1.00 1.25 1.50 2.50 13. 45. 1920 1.00 1.25 1.50 3.00 18. 70. CANADIAN COIN VALUES Small Cents PRICE GUIDE VG-8 F-12 VF-20 EF-40 MS-60 MS-63R GEORGE V All prices are in U.S. dollars LargeL Cents C t 1920 0.20 0.35 0.75 1.50 12. 45. Canadian Coin Values is a comprehensive retail value VG-8 F-12 VF-20 EF-40 MS-60 MS-63R 1921 0.50 0.75 1.50 4.00 30. 250. guide of Canadian coins published online regularly at Coin VICTORIA 1922 20. 23. 28. 40. 200. 1200. World’s website. Canadian Coin Values is provided as a 1858 70. 90. 120. 200. 475. 1800. 1923 30. 33. 42. 55. 250. 2000. reader service to collectors desiring independent informa- 1858 Coin Turn NI NI 2500. 5000. BNE BNE 1924 6.00 8.00 11. 16. 120. 800. tion about a coin’s potential retail value. 1859 4.00 5.00 6.00 10. 50. 200. 1925 25. 28. 35. 45. 200. 900. Sources for pricing include actual transactions, public auc- 1859 Brass 16000. 22000. 30000. BNE BNE BNE 1926 3.50 4.50 7.00 12. 90. 650. tions, fi xed-price lists and any additional information acquired 1859 Dbl P 9 #1 225. -

Cs 255 (Introduction to Cryptography)

CS 255 (INTRODUCTION TO CRYPTOGRAPHY) DAVID WU Abstract. Notes taken in Professor Boneh’s Introduction to Cryptography course (CS 255) in Winter, 2012. There may be errors! Be warned! Contents 1. 1/11: Introduction and Stream Ciphers 2 1.1. Introduction 2 1.2. History of Cryptography 3 1.3. Stream Ciphers 4 1.4. Pseudorandom Generators (PRGs) 5 1.5. Attacks on Stream Ciphers and OTP 6 1.6. Stream Ciphers in Practice 6 2. 1/18: PRGs and Semantic Security 7 2.1. Secure PRGs 7 2.2. Semantic Security 8 2.3. Generating Random Bits in Practice 9 2.4. Block Ciphers 9 3. 1/23: Block Ciphers 9 3.1. Pseudorandom Functions (PRF) 9 3.2. Data Encryption Standard (DES) 10 3.3. Advanced Encryption Standard (AES) 12 3.4. Exhaustive Search Attacks 12 3.5. More Attacks on Block Ciphers 13 3.6. Block Cipher Modes of Operation 13 4. 1/25: Message Integrity 15 4.1. Message Integrity 15 5. 1/27: Proofs in Cryptography 17 5.1. Time/Space Tradeoff 17 5.2. Proofs in Cryptography 17 6. 1/30: MAC Functions 18 6.1. Message Integrity 18 6.2. MAC Padding 18 6.3. Parallel MAC (PMAC) 19 6.4. One-time MAC 20 6.5. Collision Resistance 21 7. 2/1: Collision Resistance 21 7.1. Collision Resistant Hash Functions 21 7.2. Construction of Collision Resistant Hash Functions 22 7.3. Provably Secure Compression Functions 23 8. 2/6: HMAC And Timing Attacks 23 8.1. HMAC 23 8.2. -

Stream Ciphers

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by HAL-CEA Stream ciphers: A Practical Solution for Efficient Homomorphic-Ciphertext Compression Anne Canteaut, Sergiu Carpov, Caroline Fontaine, Tancr`edeLepoint, Mar´ıa Naya-Plasencia, Pascal Paillier, Renaud Sirdey To cite this version: Anne Canteaut, Sergiu Carpov, Caroline Fontaine, Tancr`edeLepoint, Mar´ıaNaya-Plasencia, et al.. Stream ciphers: A Practical Solution for Efficient Homomorphic-Ciphertext Compression. FSE 2016 : 23rd International Conference on Fast Software Encryption, Mar 2016, Bochum, Germany. Springer, 9783 - LNCS (Lecture Notes in Computer Science), pp.313-333, Fast Software Encryption 23rd International Conference, FSE 2016, Bochum, Germany, March 20- 23, 2016, <http://fse.rub.de/>. <10.1007/978-3-662-52993-5 16>. <hal-01280479> HAL Id: hal-01280479 https://hal.archives-ouvertes.fr/hal-01280479 Submitted on 28 Nov 2016 HAL is a multi-disciplinary open access L'archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destin´eeau d´ep^otet `ala diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publi´esou non, lished or not. The documents may come from ´emanant des ´etablissements d'enseignement et de teaching and research institutions in France or recherche fran¸caisou ´etrangers,des laboratoires abroad, or from public or private research centers. publics ou priv´es. Stream ciphers: A Practical Solution for Efficient Homomorphic-Ciphertext Compression? -

Generic Attacks on Stream Ciphers

Generic Attacks on Stream Ciphers John Mattsson Generic Attacks on Stream Ciphers 2/22 Overview What is a stream cipher? Classification of attacks Different Attacks Exhaustive Key Search Time Memory Tradeoffs Distinguishing Attacks Guess-and-Determine attacks Correlation Attacks Algebraic Attacks Sidechannel Attacks Summary Generic Attacks on Stream Ciphers 3/22 What is a stream cipher? Input: Secret key (k bits) Public IV (v bits). Output: Sequence z1, z2, … (keystream) The state (s bits) can informally be defined as the values of the set of variables that describes the current status of the cipher. For each new state, the cipher outputs some bits and then jumps to the next state where the process is repeated. The ciphertext is a function (usually XOR) of the keysteam and the plaintext. Generic Attacks on Stream Ciphers 4/22 Classification of attacks Assumed that the attacker has knowledge of the cryptographic algorithm but not the key. The aim of the attack Key recovery Prediction Distinguishing The information available to the attacker. Ciphertext-only Known-plaintext Chosen-plaintext Chosen-chipertext Generic Attacks on Stream Ciphers 5/22 Exhaustive Key Search Can be used against any stream cipher. Given a keystream the attacker tries all different keys until the right one is found. If the key is k bits the attacker has to try 2k keys in the worst case and 2k−1 keys on average. An attack with a higher computational complexity than exhaustive key search is not considered an attack at all. Generic Attacks on Stream Ciphers 6/22 Time Memory Tradeoffs (state) Large amounts of precomputed data is used to lower the computational complexity. -

Stream Cipher Designs: a Review

SCIENCE CHINA Information Sciences March 2020, Vol. 63 131101:1–131101:25 . REVIEW . https://doi.org/10.1007/s11432-018-9929-x Stream cipher designs: a review Lin JIAO1*, Yonglin HAO1 & Dengguo FENG1,2* 1 State Key Laboratory of Cryptology, Beijing 100878, China; 2 State Key Laboratory of Computer Science, Institute of Software, Chinese Academy of Sciences, Beijing 100190, China Received 13 August 2018/Accepted 30 June 2019/Published online 10 February 2020 Abstract Stream cipher is an important branch of symmetric cryptosystems, which takes obvious advan- tages in speed and scale of hardware implementation. It is suitable for using in the cases of massive data transfer or resource constraints, and has always been a hot and central research topic in cryptography. With the rapid development of network and communication technology, cipher algorithms play more and more crucial role in information security. Simultaneously, the application environment of cipher algorithms is in- creasingly complex, which challenges the existing cipher algorithms and calls for novel suitable designs. To accommodate new strict requirements and provide systematic scientific basis for future designs, this paper reviews the development history of stream ciphers, classifies and summarizes the design principles of typical stream ciphers in groups, briefly discusses the advantages and weakness of various stream ciphers in terms of security and implementation. Finally, it tries to foresee the prospective design directions of stream ciphers. Keywords stream cipher, survey, lightweight, authenticated encryption, homomorphic encryption Citation Jiao L, Hao Y L, Feng D G. Stream cipher designs: a review. Sci China Inf Sci, 2020, 63(3): 131101, https://doi.org/10.1007/s11432-018-9929-x 1 Introduction The widely applied e-commerce, e-government, along with the fast developing cloud computing, big data, have triggered high demands in both efficiency and security of information processing.