Using MRAM in an Intelligent Memory Hierarchy (IMH)



Recommended publications


Data Storage the CPU-Memory

Data Storage (Sean Barker) • Disks: hard disk (HDD), solid state drive (SSD) • Random Access Memory: dynamic RAM (DRAM), static RAM (SRAM) • Registers: %rax, %rbx, ... The CPU-Memory Gap [Figure: time in ns (log scale) versus year, 1985 to 2015, plotting disk seek time, SSD access time, DRAM access time, SRAM access time, CPU cycle time, and effective CPU cycle time.] Caching: a smaller, faster, more expensive memory (the cache) holds a subset of the blocks, here blocks 8, 9, 14, and 3. Data is copied in block-sized transfer units. The larger, slower, cheaper memory is viewed as partitioned into “blocks”, numbered 0 to 15 in the example. Cache Hit: Request: 14. The cache holds 8, 9, 14, 3, so block 14 is served from the cache. Cache Miss: Request: 12. Block 12 is not in the cache, so the request goes to memory and block 12 is placed in the cache (the figure shows 12 alongside 8, 9, 14, 3). Locality • Temporal locality: • Spatial locality: Locality Example (1) sum = 0; for (i = 0; i < n; i++) sum += a[i]; return sum; Locality Example (2) int sum_array_rows(int a[M][N]) { int i, j, sum = 0; for (i = 0; i < M; i++) for (j = 0; j < N; j++) sum += a[i][j]; return sum; } Locality Example (3) int sum_array_cols(int a[M][N]) { int i, j, sum = 0; for (j = 0; j < N; j++) for (i = 0; i < M; i++) sum += a[i][j]; return sum; } The Memory Hierarchy, from top (smaller, faster, costlier per byte) to bottom (larger, slower, cheaper per byte): registers on the CPU chip, 1 cycle to access, the storage that instructions can directly access; L1, L2 cache(s) (SRAM), ~10’s of cycles to access; main memory (DRAM), ~100 cycles to access; flash SSD / local network, local secondary storage (disk), ~100 M cycles to access; remote secondary storage (tapes, Web servers / Internet), slower than local disk to access. -
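The cache hit and cache miss slides above can be replayed in a few lines of C. This is only a sketch: the slides do not state a placement policy, so the code assumes a direct-mapped cache in which block b may live only in line b % 4, and the names `tiny_cache`, `cache_access`, and `NUM_LINES` are hypothetical. Block numbers are assumed to be non-negative.

```c
#include <stdbool.h>

#define NUM_LINES 4  /* assumed: the slide's cache has four one-block lines */

/* Hypothetical direct-mapped cache: block b can only occupy line b % NUM_LINES. */
typedef struct {
    int  block[NUM_LINES];  /* block number currently stored in each line */
    bool valid[NUM_LINES];  /* false until the line has been filled once  */
} tiny_cache;

/* Returns true on a hit. On a miss, the block is fetched into its line,
 * evicting whatever block was there, and false is returned. */
bool cache_access(tiny_cache *c, int block)
{
    int line = block % NUM_LINES;

    if (c->valid[line] && c->block[line] == block) {
        return true;               /* hit: served from the cache */
    }
    c->block[line] = block;        /* miss: copy the block in from memory */
    c->valid[line] = true;
    return false;
}
```

Starting from a zero-initialized `tiny_cache` and touching blocks 8, 9, 14, and 3 reproduces the slide's cache contents; a request for 14 then hits, while a request for 12 misses and, under the assumed policy, takes over the line that held block 8.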

Managing the Memory Hierarchy

Managing the Memory Hierarchy Jeffrey S. Vetter, Sparsh Mittal, Joel Denny, Seyong Lee. Presented to SOS20, Asheville, 24 Mar 2016. ORNL is managed by UT-Battelle for the US Department of Energy. http://ft.ornl.gov Exascale architecture targets circa 2009: 2009 Exascale Challenges Workshop in San Diego. Attendees envisioned two possible architectural swim lanes: 1. Homogeneous many-core thin-node system 2. Heterogeneous (accelerator + CPU) fat-node system

| System attributes | 2009 | “Pre-Exascale” | “Exascale” |
| --- | --- | --- | --- |
| System peak | 2 PF | 100-200 PF/s | 1 Exaflop/s |
| Power | 6 MW | 15 MW | 20 MW |
| System memory | 0.3 PB | 5 PB | 32–64 PB |
| Storage | 15 PB | 150 PB | 500 PB |
| Node performance | 125 GF | 0.5 TF / 7 TF | 1 TF / 10 TF |
| Node memory BW | 25 GB/s | 0.1 TB/s / 1 TB/s | 0.4 TB/s / 4 TB/s |
| Node concurrency | 12 | O(100) / O(1,000) | O(1,000) / O(10,000) |
| System size (nodes) | 18,700 | 500,000 / 50,000 | 1,000,000 / 100,000 |
| Node interconnect BW | 1.5 GB/s | 150 GB/s / 1 TB/s | 250 GB/s / 2 TB/s |
| IO Bandwidth | 0.2 TB/s | 10 TB/s | 30-60 TB/s |
| MTTI | day | O(1 day) | O(0.1 day) |

Memory Systems • Multimode memories – Fused, shared memory – Scratchpads – Write through, write back, etc – Virtual v. Physical, paging strategies – Consistency and coherence protocols • 2.5D, 3D Stacking • HMC, HBM/2/3, LPDDR4, GDDR5, WIDEIO2, etc https://www.micron.com/~/media/track-2-images/content-images/content_image_hmc.jpg?la=en • New devices (ReRAM, PCRAM, Xpoint) J.S. -

What Is Cloud-Based Backup and Recovery?

White paper: What is cloud-based backup and recovery? Q120-20057 Executive Summary Companies struggle with the challenges of effective backup and recovery. Small businesses lack dedicated IT resources to achieve and manage a comprehensive data protection platform, and enterprise firms often lack the budget and resources for implementing truly comprehensive data protection. Cloud-based backup and recovery lets companies lower their data protection cost or expand their capabilities without raising costs or administrative overhead. Firms of all sizes can benefit from cloud-based backup and recovery by eliminating on-premises hardware and software infrastructure for data protection, and simplifying their backup administration, making it something every company should consider. Partial or total cloud backup is a good fit for most companies given not only its cost-effectiveness, but also its utility. Many cloud-based backup vendors offer continuous snapshots of virtual machines, applications, and changed data. Some offer recovery capabilities for business-critical applications such as Microsoft Office 365. Others also offer data management features such as analytics, eDiscovery and regulatory compliance. This report describes the history of cloud-based backup and recovery, its features and capabilities, and recommendations for companies considering a cloud-based data protection solution. What does “backup and recovery” mean? There’s a difference between “backup and recovery” and “disaster recovery.” Backup and recovery refers to automated, regular file storage that enables data recovery and restoration following a loss. -

Embedded DRAM

Embedded DRAM Raviprasad Kuloor, Semiconductor Research and Development Centre, Bangalore, IBM Systems and Technology Group. Topics: Introduction to memory; DRAM basics and bitcell array; eDRAM operational details (case study); Noise concerns; Wordline driver (WLDRV) and level translators (LT); Challenges in eDRAM; Understanding Timing diagram – An example; References. Acknowledgement: John Barth, IBM SRDC, for most of the slides content; Madabusi Govindarajan; Subramanian S. Iyer; many others. Memory Classification revisited. Motivation for a memory hierarchy – infinite memory: a processor attached to an infinitely fast, infinitely large memory store. Cycles per Instruction (CPI) = number of processor clock cycles required per instruction; in this ideal case CPI = CPI[∞ cache]. Finite memory speed: a processor attached to a memory store of finite speed and infinite size, for which CPI = CPI[∞ cache] + FCP, where FCP is the finite cache penalty. Locality of reference – spatial and temporal. Temporal: if you access something now you’ll need it again soon, e.g. loops. Spatial: if you accessed something you’ll also need its neighbor, e.g. arrays. Exploit this to divide memory into a hierarchy. [Figure: processor, register, L1 and L2 levels, labeled fast and slow, with hit and miss paths.] Cache size impacts cycles-per-instruction: access rate reduces, slower memory is sufficient. For a 5GHz -
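The relation CPI = CPI[∞ cache] + FCP quoted in the excerpt above can be made concrete with a worked example. The decomposition of FCP into misses per instruction m times miss penalty p (in cycles) is a common textbook assumption, not something the excerpt states, and the numbers are purely illustrative.

```latex
\[
\mathrm{CPI} = \mathrm{CPI}_{\infty\,\text{cache}} + \mathrm{FCP},
\qquad
\mathrm{FCP} \approx m \cdot p
\]
% Illustrative values (assumed): CPI with an infinite cache = 1.0,
% m = 0.02 misses per instruction, p = 100 cycles per miss.
\[
\mathrm{CPI} = 1.0 + 0.02 \times 100 = 3.0
\]
```

Under these assumed values, two misses per hundred instructions are enough to triple the cycles per instruction, which is why cache size has such a visible effect on CPI.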

PROTECTING DATA from RANSOMWARE and OTHER DATA LOSS EVENTS a Guide for Managed Service Providers to Conduct, Maintain and Test Backup Files

PROTECTING DATA FROM RANSOMWARE AND OTHER DATA LOSS EVENTS A Guide for Managed Service Providers to Conduct, Maintain and Test Backup Files OVERVIEW The National Cybersecurity Center of Excellence (NCCoE) at the National Institute of Standards and Technology (NIST) developed this publication to help managed service providers (MSPs) improve their cybersecurity and the cybersecurity of their customers. MSPs have become an attractive target for cyber criminals. When an MSP is vulnerable its customers are vulnerable as well. Often, attacks take the form of ransomware. Data loss incidents (whether a ransomware attack, hardware failure, or accidental or intentional data destruction) can have catastrophic effects on MSPs and their customers. This document provides recommendations to help MSPs conduct, maintain, and test backup files in order to reduce the impact of these data loss incidents. A backup file is a copy of files and programs made to facilitate recovery. The recommendations support practical, effective, and efficient back-up plans that address the NIST Cybersecurity Framework Subcategory PR.IP-4: Backups of information are conducted, maintained, and tested. An organization does not need to adopt all of the recommendations, only those applicable to its unique needs. This document provides a broad set of recommendations to help an MSP determine: • items to consider when planning backups and buying a backup service/product • issues to consider to maximize the chance that the backup files are useful and available when needed • issues to consider regarding business disaster recovery CHALLENGE Backup systems implemented and not tested or planned increase operational risk for MSPs. APPROACH NIST Interagency Report 7621 Rev. 1, Small Business -

Backup Reminders Once You Have Created Your Backup, Take a Moment 1

External Hard Drives: There are a number of external hard drives that can be connected to your computer through the Firewire or USB port. Many of these hard drives can be configured to automatically synchronize folders on your desktop with the folders on the external drive. Although this somewhat automates the backup process, these drives can also be easily stolen! How to Backup: Backing up your data can be as easy as copying and pasting the files to the backup drive or you can use specialized programs to help create your backup. Most ITS Windows computers have Roxio Easy CD Creator installed. Instructions for using Roxio are located on our website at smu.edu/help/resources/backup/backup.asp. For Macs, simply drag the files to the CDRW drive and click Burn. Backup Reminders: ... easily accessible, but if your laptop was stolen you’d be without a machine and without your backup! Treat your backups with extreme care! Destroy your old backups. As you conduct your backups more frequently, you’ll end up with a pile of old backup media. Be sure to dispose of old media properly. CDs and DVDs can be destroyed simply by using a pair of scissors and scratching the back of the CD several times. There are also media shredders which work just like a paper shredder, leaving your backups in hundreds of pieces. Just be sure that the data is no longer readable before you toss the backups in the trash. Keep a copy of your backup off site. You may wish to store a backup copy at home in the event of theft -

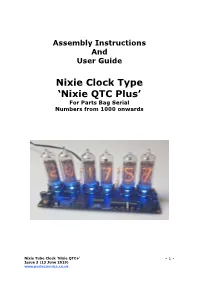

Nixie Clock Type 'Nixie QTC Plus'

Assembly Instructions And User Guide Nixie Clock Type ‘Nixie QTC Plus’ For Parts Bag Serial Numbers from 1000 onwards. Nixie Tube Clock ‘Nixie QTC+’ Issue 3 (13 June 2019) www.pvelectronics.co.uk REVISION HISTORY Issue 3, 13 June 2019: Added support for Dekatron Sync Pulse. Issue 2, 01 October 2018: C5 changed to 15pF. Draft 1, 29 August 2018: New document. 1. INTRODUCTION 1.1 Nixie QTC Plus - Features: Hours, Minutes and Seconds display; Drives a wide range of medium sized solder-in tubes; Uses a Quartz Crystal Oscillator as the timebase; 12 or 24 hour modes; Programmable leading zero blanking; Date display in either DD.MM.YY or MM.DD.YY or YY.MM.DD format; Programmable date display each minute; Scrolling display of date or standard display; Alarm, with programmable snooze period; Optional GPS / WiFi / XTERNA synchronisation with status indicator LED; Dedicated DST button to switch between DST and standard time; Supercapacitor backup, keeps time during short power outages; Simple time setting using two buttons; Configurable for leading zero blanking; Double dot colon neon lamps; 11 colon neon modes including AM / PM indication (top / bottom or left / right), railroad (slow or fast) etc.; Seconds can be reset to zero to precisely set the time; Programmable night mode, blanked or dimmed display to save tubes or prevent sleep disturbance; Rear Indicator LEDs dim at night to prevent sleep disturbance; Weekday aware ‘Master Blank’ function to turn off tubes and LEDs on weekends or during working hours; Separate modes for colon neons during night mode; Standard, fading, or crossfading with scrollback display modes; ‘Slot Machine’ Cathode poisoning prevention routine; Programmable RGB tube lighting – select your favourite colour palette, 729 colours possible. -

Cloudberry Backup

CloudBerry Backup Installation and Configuration Guide Getting Started with CloudBerry Backup The CloudBerry Backup (CBB) solution was designed to facilitate PC and server data backup operations to multiple remote locations. It is integrated with top Cloud storage providers, allowing you to access each of your storage accounts or sign up for a Cloud platform directly from CBB. It also works with network destinations like NAS (Network Attached Storage) or directly connected drives. This document is the complete guide to CloudBerry Backup deployment, configuration, and usage. Product Editions & Licensing CBB can be downloaded directly from the CloudBerry website in several editions. ● Windows Desktop (including FREE Edition) / Server. ● Microsoft SQL Server. ● Microsoft Exchange Server. ● Oracle Database. ● Ultimate (former Enterprise). ● CloudBerry Backup for NAS (QNAP and Synology). They differ in functionality, storage limits and the availability of individual solutions. The chart below compares the basic editions.

| CBB Edition | Desktop Free | Desktop Pro | Server | MS SQL | MS Exchange | Ultimate |
| --- | --- | --- | --- | --- | --- | --- |
| File-level Backup | + | + | + | + | + | + |
| Image Based Backup | - | - | + | + | + | + |
| MS SQL Server Backup | - | - | - | + | - | + |
| MS Exchange Server Backup | - | - | - | - | + | + |
| Encryption and Compression | - | - | + | + | + | + |
| Storage Limits (for one account) | 200GB | 1TB | 1TB | 1TB | 1TB | Unlimited |
| Network Shares for Backup | 1 | 1 | 5 | 5 | 5 | Unlimited |
| Support Type | Superuser.com forum only | Email, 48 hours response | Email, 48 hours response | Email, 48 hours response | Email, 48 hours response | Email, 48 hours response |

On the download page, there are also links for Mac and Linux Editions. For Windows, CBB is distributed within Universal Installer so that you can choose the desired edition after the download. -

The Memory Hierarchy

Chapter 6 The Memory Hierarchy To this point in our study of systems, we have relied on a simple model of a computer system as a CPU that executes instructions and a memory system that holds instructions and data for the CPU. In our simple model, the memory system is a linear array of bytes, and the CPU can access each memory location in a constant amount of time. While this is an effective model as far as it goes, it does not reflect the way that modern systems really work. In practice, a memory system is a hierarchy of storage devices with different capacities, costs, and access times. CPU registers hold the most frequently used data. Small, fast cache memories nearby the CPU act as staging areas for a subset of the data and instructions stored in the relatively slow main memory. The main memory stages data stored on large, slow disks, which in turn often serve as staging areas for data stored on the disks or tapes of other machines connected by networks. Memory hierarchies work because well-written programs tend to access the storage at any particular level more frequently than they access the storage at the next lower level. So the storage at the next level can be slower, and thus larger and cheaper per bit. The overall effect is a large pool of memory that costs as much as the cheap storage near the bottom of the hierarchy, but that serves data to programs at the rate of the fast storage near the top of the hierarchy. -
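The claim above, that a hierarchy can serve data at close to the speed of its fastest level, can be checked with a little arithmetic. The sketch below assumes the usual two-level model in which every access pays the fast level's hit time and a fraction of accesses also pays a miss penalty; the function name `average_access_ns` and all numbers in the usage note are hypothetical, not taken from the chapter.

```c
/* Average access time for a two-level hierarchy, in nanoseconds.
 *
 * hit_ns          time to access the fast level (paid on every access)
 * miss_rate       fraction of accesses not found in the fast level (0.0 to 1.0)
 * miss_penalty_ns extra time to fetch from the slower level on a miss
 */
double average_access_ns(double hit_ns, double miss_rate, double miss_penalty_ns)
{
    return hit_ns + miss_rate * miss_penalty_ns;
}
```

With an assumed 1 ns fast level, a 100 ns miss penalty, and a 1% miss rate, `average_access_ns(1.0, 0.01, 100.0)` evaluates to about 2 ns, much closer to the fast level than to the slow one. That gap is what good locality buys.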

Lecture 10: Memory Hierarchy -- Memory Technology and Principal of Locality

Lecture 10: Memory Hierarchy -- Memory Technology and Principal of Locality CSCE 513 Computer Architecture, Department of Computer Science and Engineering, Yonghong Yan https://passlab.github.io/CSCE513 Topics for Memory Hierarchy • Memory Technology and Principal of Locality – CAQA: 2.1, 2.2, B.1 – COD: 5.1, 5.2 • Cache Organization and Performance – CAQA: B.1, B.2 – COD: 5.2, 5.3 • Cache Optimization – 6 Basic Cache Optimization Techniques • CAQA: B.3 – 10 Advanced Optimization Techniques • CAQA: 2.3 • Virtual Memory and Virtual Machine – CAQA: B.4, 2.4; COD: 5.6, 5.7 – Skip for this course The Big Picture: Where are We Now? [Figure: processor (control, datapath), memory, input, output.] • Memory system – Supplying data on time for computation (speed) – Large enough to hold everything needed (capacity) Overview • Programmers want unlimited amounts of memory with low latency • Fast memory technology is more expensive per bit than slower memory • Solution: organize memory system into a hierarchy – Entire addressable memory space available in largest, slowest memory – Incrementally smaller and faster memories, each containing a subset of the memory below it, proceed in steps up toward the processor • Temporal and spatial locality ensures that nearly all references can be found in smaller memories – Gives the illusion of a large, fast memory being presented to the processor Memory Hierarchy Memory Technology • Random Access: access time is the same for all locations • DRAM: Dynamic Random Access Memory – High density, low power, cheap, slow – Dynamic: needs to be “refreshed” regularly – 50ns – 70ns, $20 – $75 per GB • SRAM: Static Random Access Memory – Low density, high power, expensive, fast – Static: content will last “forever” (until power is lost) – 0.5ns – 2.5ns, $2000 – $5000 per GB • Magnetic disk – 5ms – 20ms, $0.20 – $2 per GB Ideal memory: • Access time of SRAM • Capacity and cost/GB of disk Static RAM (SRAM) 6-Transistor Cell – 1 Bit [Figure: 6-transistor SRAM cell with a word (row select) line and bit lines.] • Write: 1. -

Data Backup Options Paul Ruggiero and Matthew A

Data Backup Options Paul Ruggiero and Matthew A. Heckathorn All computer users, from home users to professional information security officers, should back up the critical data they have on their desktops, laptops, servers, and even mobile devices to protect it from loss or corruption. Saving just one backup file may not be enough to safeguard your information. To increase your chances of recovering lost or corrupted data, follow the 3-2-1 rule [1]: 3 – Keep 3 copies of any important file: 1 primary and 2 backups. 2 – Keep the files on 2 different media types to protect against different types of hazards. 1 – Store 1 copy offsite (e.g., outside your home or business facility). This paper summarizes the pros, cons, and security considerations of backup options for critical personal and business data. Remote Backup – Cloud Storage Recent expansions of broadband internet service have made cloud storage available to a wide range of computer users. Cloud service customers use the internet to access a shared pool of computing resources (e.g., networks, servers, storage, applications, and services) owned by a cloud service provider. Pros Remote backup services can help protect your data against some of the worst-case scenarios, such as natural disasters or critical failures of local devices due to malware. Additionally, cloud services give you anytime access to data and applications anywhere you have an internet connection, with no need for you to invest in networks, servers, and other hardware. You can purchase more or less cloud service as needed, and the service provider transparently manages [1] Krogh, Peter. -
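The 3-2-1 rule described above is simple enough to check mechanically. The following is a minimal sketch, assuming each copy of a file is described by the kind of medium it sits on and whether it is stored offsite; the `media_kind` categories, the `file_copy` type, and the function name are all hypothetical and only illustrate the three conditions.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical media categories; MEDIA_KINDS is the number of categories. */
typedef enum {
    MEDIA_INTERNAL_DISK,
    MEDIA_EXTERNAL_DISK,
    MEDIA_OPTICAL,
    MEDIA_CLOUD,
    MEDIA_KINDS
} media_kind;

/* One copy of an important file (the primary or a backup). */
typedef struct {
    media_kind media;
    bool       offsite;
} file_copy;

/* True if the copies satisfy 3-2-1: at least 3 copies,
 * on at least 2 different media types, with at least 1 offsite. */
bool satisfies_3_2_1(const file_copy *copies, size_t n)
{
    bool   seen[MEDIA_KINDS] = { false };
    size_t kinds = 0;
    size_t offsite = 0;

    for (size_t i = 0; i < n; i++) {
        if (!seen[copies[i].media]) {
            seen[copies[i].media] = true;  /* first copy on this medium */
            kinds++;
        }
        if (copies[i].offsite) {
            offsite++;
        }
    }
    return n >= 3 && kinds >= 2 && offsite >= 1;
}
```

For example, a primary on an internal disk, one backup on an external drive, and one backup in cloud storage passes the check, while three copies that all sit on the same internal disk fail it even if one of them is offsite.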

Chapter 2: Memory Hierarchy Design

Computer Architecture: A Quantitative Approach, Fifth Edition. Chapter 2 Memory Hierarchy Design. Copyright © 2012, Elsevier Inc. All rights reserved. Contents: 1. Memory hierarchy (basic concepts; design techniques) 2. Caches (types of caches: fully associative, direct mapped, set associative; ten optimization techniques) 3. Main memory (memory technology; memory optimization; power consumption) 4. Memory hierarchy case studies: Opteron, Pentium, i7 5. Virtual memory 6. Problem solving. Introduction: Programmers want very large memory with low latency. Fast memory technology is more expensive per bit than slower memory. Solution: organize memory system into a hierarchy. Entire addressable memory space available in largest, slowest memory. Incrementally smaller and faster memories, each containing a subset of the memory below it, proceed in steps up toward the processor. Temporal and spatial locality ensures that nearly all references can be found in smaller memories. Gives the illusion of a large, fast memory being presented to the processor. Memory hierarchy: Processor, L1 Cache, L2 Cache, L3 Cache, Main Memory, Hard Drive or Flash, with latency and capacity (KB, MB, GB, TB) varying from level to level. Processor; L1: I-Cache and D-Cache; L2: U-Cache; L3: U-Cache; Main: Main Memory. I-Cache: instruction cache; D-Cache: data cache; U-Cache: unified cache. Different functional units fetch information from I-cache and D-cache: decoder and scheduler operate with I-cache, but integer execution unit and floating-point unit communicate with D-cache.
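The three cache types named in the contents above differ mainly in how many places a given block is allowed to occupy. One way to see this is to split an address into a tag, a set index, and a block offset. The sketch below is an illustration under stated assumptions: the names `addr_parts` and `split_address` are hypothetical, and `block_bytes` and `num_sets` are assumed to be nonzero (in practice, powers of two).

```c
#include <stdint.h>

/* The three fields a cache derives from a byte address. */
typedef struct {
    uint64_t tag;     /* identifies which block occupies a line in the set */
    uint64_t set;     /* which set the block must be placed in             */
    uint64_t offset;  /* byte position inside the block                    */
} addr_parts;

/* Split an address for a cache with num_sets sets of block_bytes-byte blocks.
 * Direct mapped:     num_sets equals the number of lines (one line per set).
 * Fully associative: num_sets is 1, so the set index is always 0.
 * Set associative:   anything in between. */
addr_parts split_address(uint64_t addr, uint64_t block_bytes, uint64_t num_sets)
{
    addr_parts p;
    uint64_t block_number = addr / block_bytes;

    p.offset = addr % block_bytes;
    p.set    = block_number % num_sets;
    p.tag    = block_number / num_sets;
    return p;
}
```

As an example with assumed parameters, address 0x1234 with 64-byte blocks and 16 sets falls in block 72, giving offset 52, set 8, and tag 4; with a single set (fully associative) the same address gives set 0 and tag 72.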