Grimoirelab: a Toolset for Software Development Analytics

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

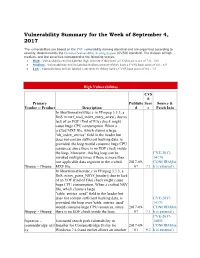

Vulnerability Summary for the Week of September 4, 2017

Vulnerability Summary for the Week of September 4, 2017 The vulnerabilities are based on the CVE vulnerability naming standard and are organized according to severity, determined by the Common Vulnerability Scoring System (CVSS) standard. The division of high, medium, and low severities correspond to the following scores: High - Vulnerabilities will be labeled High severity if they have a CVSS base score of 7.0 - 10.0 Medium - Vulnerabilities will be labeled Medium severity if they have a CVSS base score of 4.0 - 6.9 Low - Vulnerabilities will be labeled Low severity if they have a CVSS base score of 0.0 - 3.9 High Vulnerabilities CVS S Primary Publishe Scor Source & Vendor -- Product Description d e Patch Info In libavformat/mxfdec.c in FFmpeg 3.3.3, a DoS in mxf_read_index_entry_array() due to lack of an EOF (End of File) check might cause huge CPU consumption. When a crafted MXF file, which claims a large "nb_index_entries" field in the header but does not contain sufficient backing data, is provided, the loop would consume huge CPU resources, since there is no EOF check inside the loop. Moreover, this big loop can be CVE-2017- invoked multiple times if there is more than 14170 one applicable data segment in the crafted 2017-09- CONFIRM(lin ffmpeg -- ffmpeg MXF file. 07 7.1 k is external) In libavformat/nsvdec.c in FFmpeg 3.3.3, a DoS in nsv_parse_NSVf_header() due to lack of an EOF (End of File) check might cause huge CPU consumption. When a crafted NSV file, which claims a large "table_entries_used" field in the header but does not contain sufficient backing data, is CVE-2017- provided, the loop over 'table_entries_used' 14171 would consume huge CPU resources, since 2017-09- CONFIRM(lin ffmpeg -- ffmpeg there is no EOF check inside the loop. -

Asynchronous Intrusion Recovery for Interconnected Web Services

Asynchronous intrusion recovery for interconnected web services The MIT Faculty has made this article openly available. Please share how this access benefits you. Your story matters. Citation Chandra, Ramesh, Taesoo Kim, and Nickolai Zeldovich. “Asynchronous Intrusion Recovery for Interconnected Web Services.” Proceedings of the Twenty-Fourth ACM Symposium on Operating Systems Principles - SOSP ’13 (2013), Nov. 3-6, 2013, Farminton, Pennsylvania, USA. ACM. As Published http://dx.doi.org/10.1145/2517349.2522725 Publisher Association for Computing Machinery Version Author's final manuscript Citable link http://hdl.handle.net/1721.1/91473 Terms of Use Creative Commons Attribution-Noncommercial-Share Alike Detailed Terms http://creativecommons.org/licenses/by-nc-sa/4.0/ Asynchronous intrusion recovery for interconnected web services Ramesh Chandra, Taesoo Kim, and Nickolai Zeldovich MIT CSAIL Abstract in the access control service, she could give herself write access to the employee management service, use these Recovering from attacks in an interconnected system is new-found privileges to make unauthorized changes to difficult, because an adversary that gains access to one employee data, and corrupt other services. Manually re- part of the system may propagate to many others, and covering from such an intrusion requires significant effort tracking down and recovering from such an attack re- to track down what services were affected by the attack quires significant manual effort. Web services are an and what changes were made by the attacker. important example of an interconnected system, as they are increasingly using protocols such as OAuth and REST Many web services interact with one another using pro- APIs to integrate with one another. -

Annual Report

The Document Founda��on 2016 Annual Report Document Liberation Own your content Welcome This annual report is the sixth chapter in the story of a long journey, started by a group of people that were sharing the common goal of create something new – finally made by the community, for the community. Today, public administraons, enterprises and individual users worldwide can reap the benefits of the hard work made by a constantly growing community of volunteers and supporters. The report is a showcase of the acvies of the foundaon. Looking back, we have accomplished a large number of objecves in 2016 and we are on track for 2017. We have funded improvements to the organizaon and the product, and supported local acvies carried out by nave language projects. Behind the scenes, the foundaon is growing thanks to the commitment of an amazing group of people, with dozens of volunteers in every geography, and a Photo: Maeo G.P. Flora, CC BY-ND 2.5 few paid staff - led by Florian Effenberger - who take care of daily acvies related to documentaon, localizaon, markeng, design, development, QA, websites and system administraon. The management of a foundaon is somemes complicated; o5en you are called to take important decisions achieved only a5er longer debates. Thanks to the diverse approaches and aptudes the Directors are also focusing on new goals for keeping TDF in the right direcon. I would personally thank Thorsten Behrens, Osvaldo Gervasi, Jan Holesovsky, Andreas Mantke, Michael Meeks, Björn Michaelsen, Simon Phipps, Eike Rathke and Norbert Thiebaud for their big commitment to guide the foundaon where is it today. -

Virtual Coffee Room

Virtual Coffee Room https://cafe.elixir.ut.ee Ivan Kuzmin, Hedi Peterson Contents 1 Motivation3 2 Choice of technical platform3 2.1 Technology stack.............................4 2.2 ELIXIR AAI integration.........................4 2.3 Groups, privacy levels..........................4 3 Use cases4 3.1 Basic usage by an ELIXIR member at cafe.elixir.ut.ee.........5 3.2 VCR as a Node support service.....................5 3.3 VCR as a bioinformatics service support................5 4 Shortcomings and future directions6 2 1 Motivation Large virtual organisations acting in international space need convenient technological platforms to ease communication among its members located in various countries. Communication that in local organisations might happen during specialised meetings or as ad hoc get togethers cannot take place the same way when members of the community meet face to face only a few times per year. Therefore, convenient virtual environments are needed to enhance knowledge exchange and seemingless sharing of best practices. StackOverflow and Biostars are Question & Answer platforms well received and used among the life science and bioinformatics community. However, these platforms are meant for more general questions about programming and bioin- formatics. So these existing platforms would not suit as a platform to discuss more specific topics about European Life Science Infrastructure ELIXIR. Thus, we de- cided to dedicate part of the ELIXIR-Excelerate project to provide a communication platform to enhance knowledge exchange among the hundreds of people working for ELIXIR across its 23 member countries. 2 Choice of technical platform There are several technological platforms developed by companies or open software enthusiasts that had the key features that we were looking for. -

Development Tools Release 2017.11.14

Development tools Release 2017.11.14 Patrick Vergain 2017-12-9 Contents 1 Documentation 3 1.1 Documentation news...........................................3 1.1.1 Documentation news 2017...................................3 1.1.2 Documentation news 2016...................................3 1.2 Documentation Advices.........................................4 1.2.1 You are what you document (Monday, May 5, 2014).....................4 1.2.2 13 Things People Hate about Your Open Source Docs.....................5 1.2.3 Beautiful docs..........................................5 1.2.4 Designing Great API Docs (11 Jan 2012)...........................5 1.2.5 Docness.............................................5 1.2.6 Hacking distributed (february 2013)..............................6 1.2.7 Jacob Kaplan-Moss (November 10, 2009)...........................6 1.2.8 Agile documentation best practices...............................6 1.2.9 Best Practices for Documenting Technical Procedures Melanie Seibert............7 1.2.10 Plone..............................................7 1.2.11 Twilio..............................................7 1.2.12 Other advices..........................................7 1.3 Documentation generators........................................ 10 1.3.1 Sphinx.............................................. 10 1.3.2 Authorea............................................ 144 1.3.3 Doxygen............................................ 144 1.3.4 Javadoc............................................. 159 1.3.5 Jekyll............................................. -

How to Run a Successful Free Software Project

Producing Open Source Software How to Run a Successful Free Software Project Karl Fogel Producing Open Source Software: How to Run a Successful Free Software Project by Karl Fogel Copyright © 2005-2021 Karl Fogel, under the CreativeCommons Attribution-ShareAlike (4.0) license. Version: 2.3214 Home site: https://producingoss.com/ Dedication This book is dedicated to two dear friends without whom it would not have been possible: Karen Underhill and Jim Blandy. i Table of Contents Preface ........................................................................................................................................................... vi Why Write This Book? ............................................................................................................................. vi Who Should Read This Book? ................................................................................................................... vi Sources .................................................................................................................................................. vii Acknowledgements ................................................................................................................................. viii For the first edition (2005) .............................................................................................................. viii For the second edition (2021) ............................................................................................................ ix Disclaimer ............................................................................................................................................. -

Producing Open Source Software How to Run a Successful Free Software Project

Producing Open Source Software How to Run a Successful Free Software Project Karl Fogel Producing Open Source Software: How to Run a Successful Free Software Project by Karl Fogel Copyright © 2005-2017 Karl Fogel, under the CreativeCommons Attribution-ShareAlike (4.0) license. Version: 2.3088 Home site: http://producingoss.com/ Dedication This book is dedicated to two dear friends without whom it would not have been possible: Karen Under- hill and Jim Blandy. i Table of Contents Preface ............................................................................................................................. vi Why Write This Book? ............................................................................................... vi Who Should Read This Book? .................................................................................... vii Sources ................................................................................................................... vii Acknowledgements ................................................................................................... viii For the first edition (2005) ................................................................................ viii For the second edition (2017) ............................................................................... x Disclaimer .............................................................................................................. xiii 1. Introduction ................................................................................................................... -

Fedora Infrastructure Best Practices Documentation Release 1.0.0

Fedora Infrastructure Best Practices Documentation Release 1.0.0 The Fedora Infrastructure Team Sep 09, 2021 Full Table of Contents: 1 Getting Started 3 1.1 Create a Fedora Account.........................................3 1.2 Subscribe to the Mailing List......................................3 1.3 Join IRC.................................................3 1.4 Next Steps................................................4 2 Full Table of Contents 5 2.1 Developer Guide.............................................5 2.2 System Administrator Guide....................................... 28 2.3 (Old) System Administrator Guides................................... 317 3 Indices and tables 335 i ii Fedora Infrastructure Best Practices Documentation, Release 1.0.0 This contains a development and system administration guide for the Fedora Infrastructure team. The development guide covers how to get started with application development as well as application best practices. You will also find several sample projects that serve as demonstrations of these best practices and as an excellent starting point for new projects. The system administration guide covers how to get involved in the system administration side of Fedora Infrastructure as well as the standard operating procedures (SOPs) we use. The source repository for this documentation is maintained here: https://pagure.io/infra-docs Full Table of Contents: 1 Fedora Infrastructure Best Practices Documentation, Release 1.0.0 2 Full Table of Contents: CHAPTER 1 Getting Started Fedora Infrastructure is full of projects that need help. In fact, there is so much work to do, it can be a little over- whelming. This document is intended to help you get ready to contribute to the Fedora Infrastructure. 1.1 Create a Fedora Account The first thing you should do is create a Fedora account. -

Progress Report About 2013 Fiscal Year Table of Content Progress Report About 2013 Fiscal Year

The Document Foundation Kurfürstendamm 188 10707 Berlin Telefon: 030 5557992-0 Telefax: 030 5557992-99 E-Mail: [email protected] Web: http://www.documentfoundation.org Progress report about 2013 fiscal year Table of content Progress report about 2013 fiscal year.................................................................................................1 Introduction..........................................................................................................................................4 Statement.........................................................................................................................................4 Explanation of Terms.......................................................................................................................4 Development of the LibreOffice Software...........................................................................................5 With interoperability and stability to success..................................................................................5 LibreOffice 4.0.................................................................................................................................6 LibreOffice 4.1.................................................................................................................................7 The way to LibreOffice 4.2..............................................................................................................8 Statistics for the program development...........................................................................................8 -

The Infrastructure of the Libreoffice Project

TheThe infrastructureinfrastructure ofof thethe LibreOffceLibreOffce projectproject Alexander Werner The Document Foundation LibreOffce Conference Bern September 2#1 1 About Alex A lon$ lon$ time of activity for free software 'ember of The Document Foundation responsible for the project's infrastructure as freelancer )ython enthusiast " Looking into the engine room * Controlled chaos O,n dedicated servers Debian 2 Dedicated servers rented by others 1buntu 1"3# -osted services Amavisd .ginx 'ailman Apache 'lmmj '&!/L !altstack )ostgre!/L O,ncloud Deployment on bare metal 'ediawi+i 0's Dovecot 1FW 'irmon !hore,all 4therpad )ostfx )lanet 'irrorbrain 4tc. pp. Askbot )lone !ilverstripe 6ump to the next level 5 Taming the chaos Goals -igh a%ailability of services Fe,er services ,ith the same purpose Better maintainability Better use of resources Easier scalability 7 Taming the chaos Reaching the goals -igh a%ailability 1se current %irtualization and stora$e technolo$ies Build a cloud9like infrastructure :et better suited hard,are Fe,er services ,ith the same purpose Find duplicate services Choose the one that ,orks best Better maintainability ;educe the number of different speced servers ;educe conf$uration complexity 2 Taming the chaos Reaching the goals Better use of resources !oftware that needs less C)1=RAM Looking for simpler conf$uration Easier scalability :et hard,are that is suffcient for quite a number of VMs 'ake use of an infrastructure=cloud pro%ider < Our new engine 74 cores "57:B RA' x2TB Enterprise Level SATA HDDs -ardware ;AID -

Desarrollo Para Hacer Llegar Perceval a Las Masas ______

Participación en el proyecto de código abierto Perceval: Desarrollo para hacer llegar Perceval a las masas __________________________________________ Desarrollo de aplicaciones Autor: José Miguel Cañibano Iglesias Consultor: Gregorio Robles Martínez Tutor externo: Santiago Dueñas Domínguez 8 de Enero de 2.017 LICENCIA La licencia de todo el contenido del proyecto, tanto de la memoria como del código, así como de cualquier otro contenido, está ligada a todos los efectos a la misma que la del proyecto Perceval [1], y que en el momento de hacer el presente trabajo está basada en GPU v3 (5.4 Licencia GNU v3, 29 de Junio de 2007, página. 65) RESUMEN DEL PROYECTO El proyecto gira en torno a la aplicación de recuperación y recolección de datos de repositorios Perceval [2]. Perceval puede manejar distintos tipos de repositorios como pueden ser: Bugzilla, Gerrit, Git, Jenkins, ReMo, etc. Debido a que Perceval es un proyecto colaborativo que evoluciona constantemente, adolece de determinadas características, y en este proyecto se ha intentado realizar la colaboración aportando al mismo esas características que lo harán una herramienta mucho más completa. La idea básica ha sido intentar llegar a un más amplio grupo de usuarios, haciendo el hincapié en dos puntos: por un lado el sistema operativo que use; por otro en qué formato de salida dé el resultado. Una de las bases ha sido usar herramientas de software libre en detrimento de las propietarias, haciendo un estudio en cada caso de las distintas posibilidades. En cualquier caso, en la realización del trabajo no sólo se basa en la realización de un documento o de unas líneas de código, sino en el trabajo continuo en una comunidad de Open Source, colaborando y participando con ella, pues, al fin y al cabo, parte esencial del Software Libre son las distintas comunidades que existen y que dan una razón de ser a este máster. -

D5.11 Second Portal Release

D5.11 - SECOND PORTAL RELEASE Grant Agreement 676547 Project Acronym CoeGSS Project Title Centre of Excellence for Global Systems Science Topic EINFRA-5-2015 Project website http://www.coegss-project.eu Start Date of project October 1st, 2015 Duration 36 months Due date May 31 st , 2017 Dissemination level Public Nature Report Version 1.1 Work Package WP5 Leading Partner ATOS (F. Javier Nieto) F. Javier Nieto, Burak Karaboğa, Michael Authors Gienger, Yossandra Sandoval, Michal Palka, Marcin Lawenda, Paweł Wolniewicz Internal Reviewers Andreas Geiges, Paweł Wolniewicz Keywords Portal, Tools, CoE Services Total number of pages: 30 D5.11 - SECOND PORTAL RELEASE Copyright (c) 2016 Members of the CoeGSS Project. The CoeGSS (“Centre of Excellence for Global Systems Science”) project is funded by the European Union. For more information on the project please see the website http:// http://coegss-project.eu/ The information contained in this document represents the views of the CoeGSS as of the date they are published. The CoeGSS does not guarantee that any information contained herein is error-free, or up to date. THE CoeGSS MAKES NO WARRANTIES, EXPRESS, IMPLIED, OR STATUTORY, BY PUBLISHING THIS DOCUMENT. Version History Version Name Partner Date From F. Javier Nieto ATOS 04.05.2017 Initial Template Burak Karaboga ATOS 18.05.2017 Burak Karaboga, Marcin Lawenda, ATOS, 23.05.2017 First Draft Michael Gienger, Michal Palka, Pawel CHALMERS, Wolniewicz, Yosandra Sandoval HLRS, PSNC First Version Burak Karaboga ATOS 27.05.2017 Reviewed Burak Karaboga ATOS 31.05.2017 version Approved by ECM Board ALL 31.05.2017 2 D5.11 - SECOND PORTAL RELEASE Abstract The second release of the CoeGSS Portal introduces several changes to the existing system and adds some new features and components.