Fedora Infrastructure Best Practices Documentation Release 1.0.0

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Red Hat GFS 6.0 Administrator's Guide

Red Hat GFS 6.0 Administrator's Guide Red Hat GFS 6.0: Administrator's Guide Copyright © 2004 and 2005 Red Hat, Inc. Red Hat, Inc. 1801 Varsity Drive Raleigh NC 27606-2072 USA Phone: +1 919 754 3700 Phone: 888 733 4281 Fax: +1 919 754 3701 PO Box 13588 Research Triangle Park NC 27709 USA rh-gfsg(EN)-6.0-Print-RHI (2005-08-02T11:07-0400) Copyright © 2005 by Red Hat, Inc. This material may be distributed only subject to the terms and conditions set forth in the Open Publication License, V1.0 or later (the latest version is presently available at http://www.opencontent.org/openpub/). Distribution of substantively modified versions of this document is prohibited without the explicit permission of the copyright holder. Distribution of the work or derivative of the work in any standard (paper) book form for commercial purposes is prohibited unless prior permission is obtained from the copyright holder. Red Hat and the Red Hat "Shadow Man" logo are registered trademarks of Red Hat, Inc. in the United States and other countries. All other trademarks referenced herein are the property of their respective owners. The GPG fingerprint of the [email protected] key is: CA 20 86 86 2B D6 9D FC 65 F6 EC C4 21 91 80 CD DB 42 A6 0E Table of Contents Introduction.......................................................................................................................................... i 1. Audience ................................................................................................................................ i 2. Document -

Vpro-1085-R Course for RHV/Ovirt/OLVM Implementation & Administration Lab Exercises

vPro-1085-R - Storware vProtect - Implementation & Administration Lab Exercises - v7.md 2/24/2021 vPro-1085-R Course for RHV/oVirt/OLVM Implementation & Administration Lab Exercises Credentials and access details Attribute Value Download URL http://10.40.0.253/lab-materials/vprotect/vpro-1085 vProtect host 10.41.0.4 vProtect username root vProtect password St0rL@bs vProtect Web UI https://10.41.0.4 vProtect Web UI username admin vProtect Web UI password vPr0tect RHV manager UI https://rhv-m.storware.lab/ovirt-engine RHV user admin@internal in vProtect in UI, and admin in RHV manager UI RHV password St0rL@bs Lab 1 - Demo of all-in-one installation In this section we'll show you how to install vProtect components quickly using all-in-one setup scripts. Before installation steps please update and then reboot system dnf -y update Remote repository (option 1) 1. Export VPROTECT_REPO variable to point to the repository URL export VPROTECT_REPO=http://10.40.0.253/vprotect/current/el8 2. Execute script: bash < <(curl -s http://repo.storware.eu/vprotect/vprotect-local-install.sh) 1 / 31 vPro-1085-R - Storware vProtect - Implementation & Administration Lab Exercises - v7.md 2/24/2021 Lab 2 - Installation with RPMs In this section you're going to install vProtect using RPMs - so that all necessary steps are done Prerequisites 1. Access vlab.vpro.proxy.v3 2. Open putty on your vlab.vpro.proxy.v3 3. Connect to vProtect machine with a root access 4. Use your CentOS 8 minimal 5. Make sure your OS is up to date: dnf -y update If kernel is updated, then You need to reboot your operating system. -

DETECTING BOTS in INTERNET CHAT by SRITI KUMAR Under The

DETECTING BOTS IN INTERNET CHAT by SRITI KUMAR (Under the Direction of Kang Li) ABSTRACT Internet chat is a real-time communication tool that allows on-line users to communicate via text in virtual spaces, called chat rooms or channels. The abuse of Internet chat by bots also known as chat bots/chatterbots poses a serious threat to the users and quality of service. Chat bots target popular chat networks to distribute spam and malware. We first collect data from a large commercial chat network and then conduct a series of analysis. While analyzing the data, different patterns were detected which represented different bot behaviors. Based on the analysis on the dataset, we proposed a classification system with three main components (1) content- based classifiers (2) machine learning classifier (3) communicator. All three components of the system complement each other in detecting bots. Evaluation of the system has shown some measured success in detecting bots in both log-based dataset and in live chat rooms. INDEX WORDS: Yahoo! Chat room, Chat Bots, ChatterBots, SPAM, YMSG DETECTING BOTS IN INTERNET CHAT by SRITI KUMAR B.E., Visveswariah Technological University, India, 2006 A Thesis Submitted to the Graduate Faculty of The University of Georgia in Partial Fulfillment of the Requirements for the Degree MASTER OF SCIENCE ATHENS, GEORGIA 2010 © 2010 Sriti Kumar All Rights Reserved DETECTING BOTS IN INTERNET CHAT by SRITI KUMAR Major Professor: Kang Li Committee: Lakshmish Ramaxwamy Prashant Doshi Electronic Version Approved: Maureen Grasso Dean of the Graduate School The University of Georgia December 2010 DEDICATION I would like to dedicate my work to my mother to be patient with me, my father for never questioning me, my brother for his constant guidance and above all for their unconditional love. -

Red Hat Enterprise Linux 8 Installing, Managing, and Removing User-Space Components

Red Hat Enterprise Linux 8 Installing, managing, and removing user-space components An introduction to AppStream and BaseOS in Red Hat Enterprise Linux 8 Last Updated: 2021-06-25 Red Hat Enterprise Linux 8 Installing, managing, and removing user-space components An introduction to AppStream and BaseOS in Red Hat Enterprise Linux 8 Legal Notice Copyright © 2021 Red Hat, Inc. The text of and illustrations in this document are licensed by Red Hat under a Creative Commons Attribution–Share Alike 3.0 Unported license ("CC-BY-SA"). An explanation of CC-BY-SA is available at http://creativecommons.org/licenses/by-sa/3.0/ . In accordance with CC-BY-SA, if you distribute this document or an adaptation of it, you must provide the URL for the original version. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, the Red Hat logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. Linux ® is the registered trademark of Linus Torvalds in the United States and other countries. Java ® is a registered trademark of Oracle and/or its affiliates. XFS ® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL ® is a registered trademark of MySQL AB in the United States, the European Union and other countries. -

Automating Identity Management with Ansible Automation

Automating Identity Management with Ansible Automation Brad Krumme Solutions Architect 1 INTRODUCTION SysAdmin Background RHCE and Ansible Specialist Love Sports/Performance Cars Also love Craft Beer and Bourbon Brad Krumme Solutions Architect 2 Agenda What we’ll ▸ Overview of Red Hat Identity Management ▸ Overview of Ansible Automation Platform discuss today ▸ Identity Management Automation Use Case ▸ Ansible Setup Considerations ▸ Automation In Practice ▸ Extra Resources 3 Red Hat Identity Red Hat Identity Management provides a centralized and clear Management Overview method for managing identities for users, machines, and services within large Linux/Unix enterprise environments. 4 IdM Server - responsibilities Identity Store ● Users, Hosts, Services ● Groups (User and Host) Authentication ● Passwords, 2FA (Smart Cards, OTP soft/hard tokens) ● SSO ● What is expected from the Client/Server certificates (PKI) service? Authorization ● Access rules per host ● Privileged operations ● IdM itself - RBAC - user roles and admin delegations Security-related service management ● Secrets (passwords) ● Linux - SUDO, SELinux, etc. 5 Auditing and reporting IdM Server - standard Infrastructure ● LDAP: old & proven protocol for sharing data, interfaces sometimes authentication too (v3 from *1997) ● Kerberos: old & proven protocol for authentication (*1993, revised 2005) ● Deprecated: NIS, NTLM How Identity Servers interact Applications with the outer world ● LDAP: user details, often authentication too ● Kerberos: authentication (SSO), mostly for internal -

Product Appendix 1 Software and Support Subscriptions 产品附录1 软件和支持订阅

PRODUCT APPENDIX 1 产品附录 1 SOFTWARE AND SUPPORT 软件和支持订阅 SUBSCRIPTIONS This Product Appendix (which includes Exhibits applicable to specific 本产品附录(包括具体红帽产品所适用的附件)包含参数描述条款及 Red Hat Products) contains terms that describe the parameters and govern your use of Software Subscriptions and Support 贵方使用软件订阅和支持订阅所适用的条款。红帽托管或在线订阅商 Subscriptions. This Product Appendix does not apply to Red Hat 品/服务不适用本产品附录。我们在本产品附录中使用但未在本产品 hosted or on-line subscription offerings. When we use a capitalized term in this Product Appendix without defining it in this Product 附 录 中 定 义 的 术 语 , 具有本产 品 附 录 适 用 的 协 议 ( 如 Appendix, the term has the meaning defined in the Agreement to http://www.redhat.com/agreements 上规定的红帽企业协议,或客户 which this Product Appendix applies, either the Red Hat Enterprise Agreement set forth at http://www.redhat.com/agreements or, if 与红帽之间共同签署的协议(如适用))中定义的含义。如果本产品 applicable, a mutually signed agreement between Client and Red 附录与本产品附录的附件有冲突、不一致或差异,以附件的条款 Hat. In the event of a conflict, inconsistency or difference between this Product Appendix and an Exhibit to this Product Appendix, the 为准。 terms of the Exhibit control. Red Hat may modify or update this Product Appendix either by 红帽可通过在 http://www.redhat.com/agreements 发布本产品附录 posting a revised version of this Product Appendix at http://www.redhat.com/agreements, or by providing notice using 的修订版或以其他合理的方式提供通知,对本产品附录进行修改或 other reasonable means. If you do not agree to the revised version 更新。如果贵方不同意经修改的版本,则 (a) 截至更新日期贵方已购 then, (a) the existing Product Appendix will continue to apply to Red Hat Products you have purchased as of the date of the update for the 买的红帽产品,将在当时的订阅期的剩余期限内继续适用现有的产 remainder of the then-current Subscription term(s); and (b) the 品附录;且 (b) 在经修改的版本的生效日期之后对红帽产品的任何新 revised version will apply to any new purchases or renewals of Red Hat Products made after the effective date of the revised version. -

IBM Qradar : Installation Guide Chapter 1

IBM QRadar 7.4 Installation Guide IBM Note Before you use this information and the product that it supports, read the information in “Notices” on page 69. Product information This document applies to IBM® QRadar® Security Intelligence Platform 7.4.2 and subsequent releases unless superseded by an updated version of this document. © Copyright International Business Machines Corporation 2004, 2020. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents Introduction to QRadar installations ......................................................................v Chapter 1. QRadar deployment overview................................................................1 License keys................................................................................................................................................. 1 Integrated Management Module.................................................................................................................1 Management controller................................................................................................................................2 Prerequisite hardware accessories for QRadar installations......................................................................2 Environmental restrictions...........................................................................................................................2 Supported web browsers ............................................................................................................................3 -

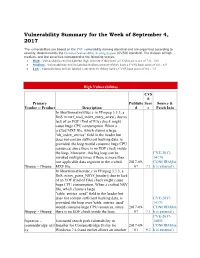

Vulnerability Summary for the Week of September 4, 2017

Vulnerability Summary for the Week of September 4, 2017 The vulnerabilities are based on the CVE vulnerability naming standard and are organized according to severity, determined by the Common Vulnerability Scoring System (CVSS) standard. The division of high, medium, and low severities correspond to the following scores: High - Vulnerabilities will be labeled High severity if they have a CVSS base score of 7.0 - 10.0 Medium - Vulnerabilities will be labeled Medium severity if they have a CVSS base score of 4.0 - 6.9 Low - Vulnerabilities will be labeled Low severity if they have a CVSS base score of 0.0 - 3.9 High Vulnerabilities CVS S Primary Publishe Scor Source & Vendor -- Product Description d e Patch Info In libavformat/mxfdec.c in FFmpeg 3.3.3, a DoS in mxf_read_index_entry_array() due to lack of an EOF (End of File) check might cause huge CPU consumption. When a crafted MXF file, which claims a large "nb_index_entries" field in the header but does not contain sufficient backing data, is provided, the loop would consume huge CPU resources, since there is no EOF check inside the loop. Moreover, this big loop can be CVE-2017- invoked multiple times if there is more than 14170 one applicable data segment in the crafted 2017-09- CONFIRM(lin ffmpeg -- ffmpeg MXF file. 07 7.1 k is external) In libavformat/nsvdec.c in FFmpeg 3.3.3, a DoS in nsv_parse_NSVf_header() due to lack of an EOF (End of File) check might cause huge CPU consumption. When a crafted NSV file, which claims a large "table_entries_used" field in the header but does not contain sufficient backing data, is CVE-2017- provided, the loop over 'table_entries_used' 14171 would consume huge CPU resources, since 2017-09- CONFIRM(lin ffmpeg -- ffmpeg there is no EOF check inside the loop. -

Release Notes for Fedora 20

Fedora 20 Release Notes Release Notes for Fedora 20 Edited by The Fedora Docs Team Copyright © 2013 Fedora Project Contributors. The text of and illustrations in this document are licensed by Red Hat under a Creative Commons Attribution–Share Alike 3.0 Unported license ("CC-BY-SA"). An explanation of CC-BY-SA is available at http://creativecommons.org/licenses/by-sa/3.0/. The original authors of this document, and Red Hat, designate the Fedora Project as the "Attribution Party" for purposes of CC-BY-SA. In accordance with CC-BY-SA, if you distribute this document or an adaptation of it, you must provide the URL for the original version. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, JBoss, MetaMatrix, Fedora, the Infinity Logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. For guidelines on the permitted uses of the Fedora trademarks, refer to https:// fedoraproject.org/wiki/Legal:Trademark_guidelines. Linux® is the registered trademark of Linus Torvalds in the United States and other countries. Java® is a registered trademark of Oracle and/or its affiliates. XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL® is a registered trademark of MySQL AB in the United States, the European Union and other countries. All other trademarks are the property of their respective owners. -

'Building' Architects and Use of Open-Source Tools Towards Achievement of Millennium Development Goals

'Building' Architects and Use of Open-source Tools Towards Achievement of Millennium Development Goals. Oku, Onyeibo Chidozie Department of Architecture Faculty of Environmental Sciences Enugu State University of Science and Technology Email: [email protected] ABSTRACT Millennium Development Goals (MDGs) were established by the United Nations to improve the well-being of humans and their habitat. Whether they are the target beneficiaries or amongst the parties administering services for achieving the MDGs, humans must carry out these activities in a physical environment. Hence, the Seventh Goal of the MDG has an indirect and far-reaching relationship with the others because it deals with the sustainable development of the built environment. Architects deliver consultancy services that span the design, documentation and construction supervision of the built environment. This study sought to determine the extent to which these professionals can do this, with respect to the Seventh Millennium Development Goal, using mainly open-source tools. The study draws from literature reviews, end-user feedback or reports, interviews with developers of applicable open-source products, and statistics from a survey, launched in 2011, for capturing how architects use ICT in their businesses. Analysis of popular open-source technologies for the Architecture, Engineering and Construction (AEC) industry show a concentration of resources in favour of the later stages of the Architect's role, rather than the design and contract-drawing stages. Some of the better-implemented tools are either too cryptic for professionals who communicate in graphical terms, or heavily biased towards software engineering practices. The products that promise Building Information Modelling (BIM) capabilities are still at an early developmental stage. -

Attacker Chatbots for Randomised and Interactive Security Labs, Using Secgen and Ovirt

Hackerbot: Attacker Chatbots for Randomised and Interactive Security Labs, Using SecGen and oVirt Z. Cliffe Schreuders, Thomas Shaw, Aimée Mac Muireadhaigh, Paul Staniforth, Leeds Beckett University Abstract challenges, rewarding correct solutions with flags. We deployed an oVirt infrastructure to host the VMs, and Capture the flag (CTF) has been applied with success in leveraged the SecGen framework [6] to generate lab cybersecurity education, and works particularly well sheets, provision VMs, and provide randomisation when learning offensive techniques. However, between students. defensive security and incident response do not always naturally fit the existing approaches to CTF. We present 2. Related Literature Hackerbot, a unique approach for teaching computer Capture the flag (CTF) is a type of cyber security game security: students interact with a malicious attacker which involves collecting flags by solving security chatbot, who challenges them to complete a variety of challenges. CTF events give professionals, students, security tasks, including defensive and investigatory and enthusiasts an opportunity to test their security challenges. Challenges are randomised using SecGen, skills in competition. CTFs emerged out of the and deployed onto an oVirt infrastructure. DEFCON hacker conference [7] and remain common Evaluation data included system performance, mixed activities at cybersecurity conferences and online [8]. methods questionnaires (including the Instructional Some events target students with the goal of Materials Motivation Survey (IMMS) and the System encouraging interest in the field: for example, PicoCTF Usability Scale (SUS)), and group interviews/focus is an annual high school competition [9], and CSAW groups. Results were encouraging, finding the approach CTF is an annual competition for students in Higher convenient, engaging, fun, and interactive; while Education (HE) [10]. -

Tao-Of-Tmux Documentation 发布 V1.0.2

tao-of-tmux Documentation 发布 v1.0.2 Tony Narlock 2020 年 04 月 18 日 Contents 1 前言 3 1.1 关于本书 ............................................... 3 1.2 代码等风格说明 ........................................... 4 1.3 本书主要内容 ............................................. 4 1.4 打赏 .................................................. 5 1.5 书籍形式(Formats) ........................................ 5 1.6 勘误说明(Errata){#errata} ................................... 5 1.7 感谢 .................................................. 6 1.8 本书跟新和 tmux 的变动 ...................................... 6 2 tmux 初识 {#thinking-tmux} 7 2.1 terminal 的窗口管理器 ....................................... 8 2.2 多任务处理 .............................................. 9 2.3 在后台运行程序 ........................................... 10 2.4 Powerful combos ........................................... 11 2.5 小节 .................................................. 12 3 Terminal 基础知识(fundamentals){#terminal-fundamentals} 13 3.1 POSIX 标准 ............................................. 13 3.2 Terminal interface .......................................... 14 3.3 Terminal emulators ......................................... 15 3.4 Shell languages {#shell-languages} ................................ 15 3.5 Shell interpreters (Shells) {#shells} ................................ 15 3.6 小节 .................................................. 16 4 开始使用(Practical usage){#practical-usage} 17 4.1 前缀组合快捷键(prefix key ){#prefix-key} ........................... 17 4.2 Session persistence and the server model ............................. 19