NSS File System Administration Guide for Linux Is Available on the OES Documentation Website

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

-

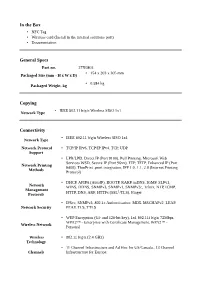

General Specs Copying Connectivity

General Specs. Part no.: 27X0128; Packaged Size (mm - H x W x D): 24 x 178 x 191 mm; Packaged Weight, kg: 0.099 kg. Copying. Network Type: IEEE 802.11 b/g/n Wireless SISO 1x1. Connectivity. Network Type: IEEE 802.11 b/g/n Wireless SISO 1x1; Connectors: One USB port (Type B) for Configuration; Network Protocol Support: TCP/IP IPv6, TCP/IP IPv4, AppleTalk™, TCP, UDP; Network Printing Methods: LPR/LPD, Direct IP (Port 9100), HTTP, Telnet, FTP, TFTP, Enhanced IP (Port 9400), ThinPrint .print integration, IPP 1.0, 1.1, 2.0 (Internet Printing Protocol) (function may vary by printer or MFP); Network Management Protocols: DHCP, APIPA (AutoIP), BOOTP, RARP, mDNS, IGMP, SLPv1, Bonjour, WINS, DDNS, SNMPv3, SNMPv2c, Telnet, NTP, ICMP, DNS, ARP, HTTPs (SSL/TLS), Finger (function may vary by printer or MFP); Network Security: IPSec, SNMPv3, 802.1x Authentication: MD5, MSCHAPv2, LEAP, PEAP, TLS, TTLS; Wireless Network: WEP Encryption (64- and 128-bit key), 1x1 802.11i b/g/n 72Mbps, WPA2™ - Enterprise with Certificate Management, WPA2™ - Personal. Supported Operating Systems. Microsoft Windows Operating Systems Supported: Windows Server 2008 x64 running Terminal Services, Windows Server 2008 x64, Windows Server 2008 running Terminal Services, Windows 8.1, Windows Server 2008 R2 running Terminal Services, Windows 8, Windows Server 2008, Windows Server 2008 R2, Windows 8 x64, Windows 7 x64, Windows Server 2012, Windows RT 8.1, Windows XP x64, Windows Server 2003 x64, Windows RT, Windows Server 2003, Windows Server 2012 R2, Windows XP, Windows Server 2003 x64 running

Micro Focus File Reporter 3.6 Installation Guide

Micro Focus File Reporter 3.6 Installation Guide January 6, 2020 Legal Notices Condrey Corporation makes no representations or warranties with respect to the contents or use of this documentation, and specifically disclaims any express or implied warranties of merchantability or fitness for any particular purpose. Further, Condrey Corporation reserves the right to revise this publication and to make changes to its content, at any time, without obligation to notify any person or entity of such revisions or changes. Further, Condrey Corporation makes no representations or warranties with respect to any software, and specifically disclaims any express or implied warranties of merchantability or fitness for any particular purpose. Further, Condrey Corporation reserves the right to make changes to any and all parts of the software at any time, without obligation to notify any person or entity of such revisions or changes. See the Software EULA for full license and warranty information with regard to the Software. Any products or technical information provided under this Agreement may be subject to U.S. export controls and the trade laws of other countries. You agree to comply with all export control regulations and to obtain any required licenses or classification to export, re-export, or import deliverables. You agree not to export or re-export to entities on the current U.S. export exclusion lists or to any embargoed or terrorist countries as specified in the U.S. export laws. You agree to not use deliverables for prohibited nuclear, missile, or chemical biological weaponry end uses. Condrey Corporation assumes no responsibility for your failure to obtain any necessary export approvals. -

NetBackup™ Enterprise Server and Server 8.0 - 8.x.x OS Software Compatibility List, Created on September 08, 2021

Veritas NetBackup ™ Enterprise Server and Server 8.0 - 8.x.x OS Software Compatibility List Created on September 08, 2021 Click here for the HTML version of this document. <https://download.veritas.com/resources/content/live/OSVC/100046000/100046611/en_US/nbu_80_scl.html> Copyright © 2021 Veritas Technologies LLC. All rights reserved. Veritas, the Veritas Logo, and NetBackup are trademarks or registered trademarks of Veritas Technologies LLC in the U.S. and other countries. Other names may be trademarks of their respective owners. Veritas NetBackup ™ Enterprise Server and Server 8.0 - 8.x.x OS Software Compatibility List 2021-09-08 Introduction This Software Compatibility List (SCL) document contains information for Veritas NetBackup 8.0 through 8.x.x. It covers NetBackup Server (which includes Enterprise Server and Server), Client, Bare Metal Restore (BMR), Clustered Master Server Compatibility and Storage Stacks, Deduplication, File System Compatibility, NetBackup OpsCenter, NetBackup Access Control (NBAC), SAN Media Server/SAN Client/FT Media Server, Virtual System Compatibility and NetBackup Self Service Support. It is divided into bookmarks on the left that can be expanded. IPV6 and Dual Stack environments are supported from NetBackup 8.1.1 onwards with few limitations, refer technote for additional information <http://www.veritas.com/docs/100041420> For information about certain NetBackup features, functionality, 3rd-party product integration, Veritas product integration, applications, databases, and OS platforms that Veritas intends to replace with newer and improved functionality, or in some cases, discontinue without replacement, please see the widget titled "NetBackup Future Platform and Feature Plans" at <https://sort.veritas.com/netbackup> Reference Article <https://www.veritas.com/docs/100040093> for links to all other NetBackup compatibility lists. -

OES 2018 SP2

Open Enterprise Server 2018 SP2 Installation Guide August 2020 Legal Notices Copyright © 2017 - 2020 Micro Focus or one of its affiliates. The only warranties for products and services of Micro Focus and its affiliates and licensors (“Micro Focus”) are as may be set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. Micro Focus shall not be liable for technical or editorial errors or omissions contained herein. The information contained herein is subject to change without notice. Contents: About This Guide; 1 What’s New or Changed in the OES Install; 1.1 What’s New or Changed (OES 2018 SP2); 1.2 What’s New or Changed (OES 2018 SP1); 1.3 What’s New or Changed (Update 6 - OES 2018 - Recommended); 1.4 What’s New or Changed (OES 2018); 2 Preparing to Install OES 2018 SP2; 2.1 Before You Install; 2.2 Meeting All Server Software and Hardware Requirements; 2.2.1 Server Software; 2.2.2 Server Hardware; 2.3 NetIQ eDirectory Rights Needed for Installing OES; 2.3.1 Rights to Install the First OES Server in a Tree; 2.3.2 Rights to Install the First Three Servers in an eDirectory Tree; 2.3.3 Rights to Install the First Three Servers in any eDirectory Partition; 2.4 Installing and Configuring OES as a Subcontainer Administrator; 2.4.1 Rights Required for Subcontainer Administrators; 2.4.2 Providing Required Rights to the Subcontainer Administrator for Installing and Managing Samba

In the Box General Specs Copying Connectivity

In the Box: NFC Tag; Wireless card (Install in the internal solutions port); Documentation. General Specs. Part no.: 27X0803; Packaged Size (mm - H x W x D): 154 x 203 x 305 mm; Packaged Weight, kg: 0.584 kg. Copying. Network Type: IEEE 802.11 b/g/n Wireless SISO 1x1. Connectivity. Network Type: IEEE 802.11 b/g/n Wireless SISO 1x1; Network Protocol Support: TCP/IP IPv6, TCP/IP IPv4, TCP, UDP; Network Printing Methods: LPR/LPD, Direct IP (Port 9100), Pull Printing, Microsoft Web Services WSD, Secure IP (Port 96xx), FTP, TFTP, Enhanced IP (Port 9400), ThinPrint .print integration, IPP 1.0, 1.1, 2.0 (Internet Printing Protocol); Network Management Protocols: DHCP, APIPA (AutoIP), BOOTP, RARP, mDNS, IGMP, SLPv1, WINS, DDNS, SNMPv3, SNMPv1, SNMPv2c, Telnet, NTP, ICMP, HTTP, DNS, ARP, HTTPs (SSL/TLS), Finger; Network Security: IPSec, SNMPv3, 802.1x Authentication: MD5, MSCHAPv2, LEAP, PEAP, TLS, TTLS; Wireless Network: WEP Encryption (64- and 128-bit key), 1x1 802.11i b/g/n 72Mbps, WPA2™ - Enterprise with Certificate Management, WPA2™ - Personal. Wireless. Technology: 802.11 b/g/n (2.4 GHz); Channels: 11 Channel Infrastructure and Ad Hoc for US/Canada, 13 Channel Infrastructure for Europe; Operating Range: The transmission speed and range varies in the surrounding environment. Direct print range up to 15.2 metres (50 feet). Infrastructure mode range up to 45.7 metres (150 feet). Supported Operating Systems: Windows Server 2008 x64 running Terminal Services, Windows Server 2008 x64, Windows Server 2008 running Terminal Services, Windows

Client for Open Enterprise Server Administration Guide

Client for Open Enterprise Server Administration Guide May 2019 Legal Notices For information about legal notices, trademarks, disclaimers, warranties, export and other use restrictions, U.S. Government rights, patent policy, and FIPS compliance, see https://www.microfocus.com/about/legal/. Copyright © 2019 Micro Focus Software, Inc. All Rights Reserved. Contents: About This Guide; 1 Overview of the Client for Open Enterprise Server; 1.1 Features and Benefits; 1.2 Rebranding Changes; 1.3 How the Client for Open Enterprise Server Differs from the Novell Client for Windows XP/2003; 1.3.1 Novell Client for Windows XP/2003 Features Not Included in the Client for Open Enterprise Server; 1.3.2 Service Location Protocol (SLP) Differences; 1.3.3 LDAP Contextless Login Differences; 1.4 Features Not Included in the Client for Open Enterprise Server; 2 Advanced Installation Options; 2.1 Understanding the Basic Client Installation (setup.exe); 2.1.1 Selecting a Language; 2.2 Understanding the Client Install Manager (nciman.exe); 2.2.1 Creating the Client Properties File; 2.3 Using the Install.ini File; 2.4 Understanding Automatic Client Update (acu.exe); 2.4.1 Setting Up the Client Update Agent; 2.5 Selecting a Network Server Distribution Option; 2.5.1 Distributing the Client Using Login Scripts; 2.5.2 Sample Client Installation Login Script; 2.6 Signing Requirements for the Client Installation; 2.6.1 Pre-distributing a Trusted Publisher Certificate for the Client Installation

Lexmark M5255

Lexmark M5255 The Lexmark M5255 combines a first page as fast as 4.5 seconds and impressive output speed with a 4.3-inch touch screen offering productivity-enhancing apps. Part Number: 50G0720; Print Technology: Monochrome Laser; Workgroup Size: Large Workgroup; Touch Screen Display: Yes; Display: Lexmark e-Task 4.3-inch (10.9 cm) color touch screen; Print Speed (Letter, Black): Up to 55 ppm; Print Speed (Letter, Black) Duplex: Up to 33 spm. Novell Operating Systems Supported: Novell NetWare 6.5 Open Enterprise Server, Novell NetWare 5.1(SP 6+), 6.0(SP 3+) with Novell Distributed Print Services (NDPS), Novell Open Enterprise Server on SUSE Linux Enterprise Server, Novell Open Enterprise Server 2 on SUSE Linux Enterprise Server 10 SP4, Novell Open Enterprise Server 11 on SUSE Linux Enterprise Server 11, Novell Open Enterprise Server 2 SP2 on SuSE Linux Enterprise Server 10 SP3. UNIX Operating Systems Supported: Sun Solaris x86 10, 11, Sun Solaris SPARC 10, 11, IBM AIX 6.1, 7.1. Included Paper Handling: 550-Sheet Input, 100-Sheet Multipurpose Feeder, 550-Sheet Output Bin, Integrated Duplex. Standard Ports: One Internal Card Slot, USB 2.0 Specification Hi-Speed Certified (Type B), Gigabit Ethernet (10/100/1000), Front USB 2.0 Specification Hi-Speed Certified port (Type A), Rear USB 2.0 Specification Hi-Speed Certified Port (Type A). Optional Local Ports: Internal RS-232C serial, Internal 1284-B Bidirectional Parallel. Optional Network Ports: Marknet N8370 WiFi Option, MarkNet N8230 Fiber Ethernet Print Server, Marknet N8372 WiFi Option. Direct USB: Yes. Ethernet

Novell GroupWise 2014 Feature Enhancement Summary, March 2014

Novell GroupWise 2014 Feature Enhancement Summary, March 2014. Product Guide, Collaboration. www.novell.com. Novell® GroupWise® 2014, known by its codename Windermere, has now been released. What follows is a summary of the major enhancements available in this release. Novell GroupWise 2014 Platform Details. Platform Support: With this release, GroupWise will run on Windows 2008R2 and Windows 2012, as well as Novell Open Enterprise Server 11 and SUSE Linux Enterprise Server 11. If you’re using Windows 2003, Open Enterprise Server 2, or SUSE Linux Enterprise Server 10 as a post office server, you’ll need to upgrade to a supported platform. Supported browsers for the new admin interface include Firefox and Internet Explorer (IE) 10. On the desktop, GroupWise 2014 will run on Windows XP, Windows 7 and Windows 8. GroupWise 2014 WebAccess also supports the following browser versions or their later counterparts: IE 8 on XP, IE 9/10, the latest versions of Firefox and Chrome, and Safari 6.0. Active Directory Support: Novell continues to support and recommend NetIQ eDirectory for Novell GroupWise customers, but GroupWise 2014 additionally enables support for Active Directory or running without any directory at all. A single GroupWise system can be linked to multiple directories and even allows users from different directories to coexist on the same post office. GroupWise uses the directory to synchronize user, group and resource account information. It no longer writes any GroupWise specific information back into the directory other than email addresses. This means that no schema modifications are necessary to enable GroupWise to work with your directory.

Why Open Enterprise Server Excels at Mixed IT

Top Reasons: Open Enterprise Server. Why Open Enterprise Server Excels at Mixed IT. If you’re thinking of migrating to exclusively Windows, there’s something you should know. As attractive as it sounds to be an “all Microsoft” IT shop, getting there is a grueling process that saps money and productivity in ways you won’t even know about until it’s all said and done. You could be getting more value and spending far less utilizing a mixed IT approach. Nobody got fired for migrating to an all Windows IT environment, right? Wrong! Behind that crowded Windows landscape, you’ll find numbers that have scuttled more than a few careers. Microsoft may be ubiquitous, but ubiquity is not excellence or cost efficiency. And settling for “good enough” for uniformity’s sake doesn’t make good budget sense. So before you adopt an all-Windows approach, take a hard look at the real numbers you’ll be paying. Then explore what you will gain (what only Micro Focus® Open Enterprise Server can give you) against the cost and downtime that come with a uniform Windows environment. Look what you get with Open Enterprise Server: files that follow users anywhere; printing from anywhere without wrestling with drivers; free hypervisor for virtualization; support for mixed IT, including Windows, Mac, Linux, iOS and Android. Here are ten solid reasons for building a mixed IT environment with Open Enterprise Server: 1. No exploding licenses. Beware of the hidden costs in the guise of standardization. If you move to all Microsoft servers, you’re not just buying licenses for processors

NetIQ iManager Installation Guide

NetIQ® iManager Installation Guide December 2014 Legal Notice THIS DOCUMENT AND THE SOFTWARE DESCRIBED IN THIS DOCUMENT ARE FURNISHED UNDER AND ARE SUBJECT TO THE TERMS OF A LICENSE AGREEMENT OR A NON-DISCLOSURE AGREEMENT. EXCEPT AS EXPRESSLY SET FORTH IN SUCH LICENSE AGREEMENT OR NON-DISCLOSURE AGREEMENT, NETIQ CORPORATION PROVIDES THIS DOCUMENT AND THE SOFTWARE DESCRIBED IN THIS DOCUMENT "AS IS" WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. SOME STATES DO NOT ALLOW DISCLAIMERS OF EXPRESS OR IMPLIED WARRANTIES IN CERTAIN TRANSACTIONS; THEREFORE, THIS STATEMENT MAY NOT APPLY TO YOU. For purposes of clarity, any module, adapter or other similar material ("Module") is licensed under the terms and conditions of the End User License Agreement for the applicable version of the NetIQ product or software to which it relates or interoperates with, and by accessing, copying or using a Module you agree to be bound by such terms. If you do not agree to the terms of the End User License Agreement you are not authorized to use, access or copy a Module and you must destroy all copies of the Module and contact NetIQ for further instructions. This document and the software described in this document may not be lent, sold, or given away without the prior written permission of NetIQ Corporation, except as otherwise permitted by law. Except as expressly set forth in such license agreement or non-disclosure agreement, no part of this document or the software described in this document may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, or otherwise, without the prior written consent of NetIQ Corporation. -

Avira Server Security “Freedom to Keep Our Servers Safe and Secure – 24/7”

Avira Server Security “Freedom to keep our servers safe and secure – 24/7” Reliable Protection For Windows And Unix Servers. Your business relies on servers to be running securely at all times. The last thing you want is unexpected downtime due to malware. Life is too short to spend ridding drives of viruses or carrying out time-consuming file system repairs. Free yourself from threats spreading onto your network from the internet by investing in Avira Server Security. Avira Server Security uses minimum memory and processing power, scans files and archives fast in real-time, plus updates itself automatically to guarantee total protection. The powerful Avira Management Console (AMC) is easy to configure, allowing you to troubleshoot, scan or repair any connected server right from your desktop. Whether you need to protect a single central fileserver, or a mix of Windows or Unix servers across multiple sites, Avira takes care of it, reliably and efficiently. Why Choose Avira Server Security? Optimal Safety. One solution for all your Windows and UNIX servers: Truly integrated, cross-platform virus and malware protection. High performance protection: Powerful proprietary antivirus technology that consistently wins top awards for detection and performance. New dangers neutralised fast: Our in-house virus lab works around the clock to prevent the latest threats. Easy To Configure And Manage. Stay in control: Administer your whole network from one place using the Avira Management Console (AMC). Comprehensive notification and reporting functions are built in, integrated scheduler lets you automate routine tasks like updates when it’s most convenient. Simple, intuitive new interface: Start actions like scans and test runs directly from Avira

McAfee VirusScan Enterprise for Linux, v1.6 Installation Guide

McAfee VirusScan Enterprise for Linux, v1.6 Installation Guide COPYRIGHT Copyright © 2010 McAfee, Inc. All Rights Reserved. No part of this publication may be reproduced, transmitted, transcribed, stored in a retrieval system, or translated into any language in any form or by any means without the written permission of McAfee, Inc., or its suppliers or affiliate companies. TRADEMARK ATTRIBUTIONS AVERT, EPO, EPOLICY ORCHESTRATOR, FOUNDSTONE, GROUPSHIELD, INTRUSHIELD, LINUXSHIELD, MAX (MCAFEE SECURITYALLIANCE EXCHANGE), MCAFEE, NETSHIELD, PORTALSHIELD, PREVENTSYS, SECURITYALLIANCE, SITEADVISOR, TOTAL PROTECTION, VIRUSSCAN, WEBSHIELD are registered trademarks or trademarks of McAfee, Inc. and/or its affiliates in the US and/or other countries. McAfee Red in connection with security is distinctive of McAfee brand products. All other registered and unregistered trademarks herein are the sole property of their respective owners. LICENSE INFORMATION License Agreement NOTICE TO ALL USERS: CAREFULLY READ THE APPROPRIATE LEGAL AGREEMENT CORRESPONDING TO THE LICENSE YOU PURCHASED, WHICH SETS FORTH THE GENERAL TERMS AND CONDITIONS FOR THE USE OF THE LICENSED SOFTWARE. IF YOU DO NOT KNOW WHICH TYPE OF LICENSE YOU HAVE ACQUIRED, PLEASE CONSULT THE SALES AND OTHER RELATED LICENSE GRANT OR PURCHASE ORDER DOCUMENTS THAT ACCOMPANY YOUR SOFTWARE PACKAGING OR THAT YOU HAVE RECEIVED SEPARATELY AS PART OF THE PURCHASE (AS A BOOKLET, A FILE ON THE PRODUCT CD, OR A FILE AVAILABLE ON THE WEBSITE FROM WHICH YOU DOWNLOADED THE SOFTWARE PACKAGE). IF YOU DO NOT AGREE TO ALL OF THE TERMS SET FORTH IN THE AGREEMENT, DO NOT INSTALL THE SOFTWARE. IF APPLICABLE, YOU MAY RETURN THE PRODUCT TO MCAFEE OR THE PLACE OF PURCHASE FOR A FULL REFUND.