Intrachip Optical Networks for a Future Supercomputer-On-A-Chip

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

OFC/NFOEC 2011 Program Archive

OFC/NFOEC 2011 Archive. Technical Conference: March 6-10, 2011. Exposition: March 8-10, 2011. Los Angeles Convention Center, Los Angeles, CA, USA. At OFC/NFOEC 2011, the optical communications industry was buzzing with the sounds of a larger exhibit hall, expanded programming, product innovations, cutting-edge research presentations, and increased attendance March 6-10 in Los Angeles. The exhibit hall grew by 20 percent over last year, featuring new programming for service providers and data center operators, and more exhibitors filling a larger space, alongside its core show floor programs and activities. The more than 500 companies in the exhibition hall showcased innovations in areas such as 100G, tunable XFPs, metro networking, Photonic Integrated Circuits, and more. On hand to demonstrate where the industry is headed were network and test equipment vendors, sub-system and component manufacturers, as well as software, fiber cable and specialty fiber manufacturers. Service providers and enterprises were there to get the latest information on building or upgrading networks or datacenters. OFC/NFOEC also featured expanded program offerings in the areas of high-speed data communications, optical internetworking, wireless backhaul and supercomputing for its 2011 conference and exhibition. This new content and more was featured in standing-room-only programs such as the Optical Business Forum, Ethernet Alliance Program, Optical Internetworking Forum Program, Green Touch Panel Session, a special symposium on Meeting the Computercom Challenge and more. Flagship programs Market Watch and the Service Provider Summit also featured topics on data centers, wireless, 100G, and optical networking. Hundreds of educational workshops, short courses, tutorial sessions and invited talks at OFC/NFOEC covered hot topics such as datacom, FTTx/in-home, wireless backhaul, next generation data transfer technology, 100G, coherent, and photonic integration.

Boston University Photonics Center Annual Report | 2015

Boston University Photonics Center Annual Report | 2015. Letter from the Director. This annual report summarizes activities of the Boston University Photonics Center in the 2014–2015 academic year. In it, you will find quantitative and descriptive information regarding our photonics programs in education, interdisciplinary research, business innovation, and technology development. Located at the heart of Boston University’s large urban campus, the Photonics Center is an interdisciplinary hub for education, research, scholarship, innovation, and technology development associated with practical uses of light. Our iconic building houses world-class research facilities and shared laboratories dedicated to photonics research, and sustains the work of 46 faculty members, 10 staff members, and more than 100 graduate students and postdoctoral fellows. This has been a good year for the Photonics Center. In the following pages, you will see that the center’s faculty received prodigious honors and awards, generated more than 100 notable scholarly publications in the leading journals in our field, and attracted $18.6M in new research grants/contracts. Faculty and staff also expanded their efforts in education and training, and were awarded two new National Science Foundation–sponsored sites for Research Experiences for Undergraduates and for Teachers. As a community, we hosted a compelling series of distinguished invited speakers, and emphasized the theme of Advanced Materials by Design for the 21st Century at our annual symposium. We continued to support the National Photonics Initiative, and are a part of a New York–based consortium that won the competition for a new photonics-themed node in the National Network of Manufacturing Institutes.

Nanoelectronics, Nanophotonics, and Nanomagnetics Report of the National Nanotechnology Initiative Workshop February 11–13, 2004, Arlington, VA

National Science and Technology Council, Committee on Technology, Subcommittee on Nanoscale Science, Engineering, and Technology. Nanoelectronics, Nanophotonics, and Nanomagnetics: Report of the National Nanotechnology Initiative Workshop, February 11–13, 2004. National Nanotechnology Coordination Office, 4201 Wilson Blvd., Stafford II, Rm. 405, Arlington, VA 22230; 703-292-8626 phone; 703-292-9312 fax; www.nano.gov. About the Nanoscale Science, Engineering, and Technology Subcommittee: The Nanoscale Science, Engineering, and Technology (NSET) Subcommittee is the interagency body responsible for coordinating, planning, implementing, and reviewing the National Nanotechnology Initiative (NNI). The NSET is a subcommittee of the Committee on Technology of the National Science and Technology Council (NSTC), which is one of the principal means by which the President coordinates science and technology policies across the Federal Government. The National Nanotechnology Coordination Office (NNCO) provides technical and administrative support to the NSET Subcommittee and supports the subcommittee in the preparation of multi-agency planning, budget, and assessment documents, including this report. For more information on NSET, see http://www.nano.gov/html/about/nsetmembers.html. For more information on NSTC, see http://www.ostp.gov/cs/nstc. For more information on NNI, NSET and NNCO, see http://www.nano.gov. About this document: This document is the report of a workshop held under the auspices of the NSET Subcommittee on February 11–13, 2004, in Arlington, Virginia. The primary purpose of the workshop was to examine trends and opportunities in nanoscale science and engineering as applied to electronic, photonic, and magnetic technologies. About the cover: Cover design by Affordable Creative Services, Inc., Kathy Tresnak of Koncept, Inc., and NNCO staff.

CLEO:2012 Program Archive

CLEO: 2012 Laser Science to Photonic Applications. Technical Conference: 6-11 May 2012. Expo: 6-11 May 2012. Short Courses: 6-8 May 2012. Baltimore Convention Center, Baltimore, Maryland, USA. Applications in ultrafast lasers, nanophotonics, biophotonics, sensing among hot topics. SAN JOSE, May 14 - CLEO: 2012 concluded in San Jose last week after six days of technical and business programming highlighting the latest research and developments in the fields of lasers and electro-optics. Attendees heard presentations on ultrafast lasers, OCT, optical sensing, and nanophotonic devices from some of the top scientists, engineers, and business people around the world. High-Quality Technical Programming: The week kicked off with a special tribute symposium to the late laser pioneer Anthony Siegman, which featured talks on unstable laser cavities, speckle, and Siegman’s founding contributions to the field of quantum nonlinear optics. It was one of seven special symposia at the conference, ranging in topics from quantum engineering to space optical systems. The ubiquity of lasers in research and applications was evident in the more than 1,800 technical presentations in three core areas. The CLEO: Applications & Technology track included a presentation on the development of a small, flexible endoscope fitted with a femtosecond laser “scalpel” that can remove diseased or damaged tissue while leaving healthy cells untouched. Under the CLEO: Science & Innovations program, researchers demonstrated a counterintuitive concept: solar cells should be designed to be more like LEDs, able to emit light as well as absorb it. The CLEO: QELS Fundamental Science track featured research from French and Canadian scientists who developed a new method to study electron motion using isolated, precisely timed, and incredibly fast pulses of light.

Ph.D. in Nanoscale Science

The University of North Carolina at Charlotte, 9201 University City Boulevard, Charlotte, NC 28223-0001. Office of the Chancellor. Telephone: 704/687-2201. Facsimile: 704/687-3219. April 28, 2006. Dr. Alan Mabe, Interim Senior Vice President for Academic Affairs and Vice President for Academic Planning, Office of the President, University of North Carolina, Post Office Box 2688, Chapel Hill, North Carolina 27515-2688. Dear Dr. Mabe: Enclosed is UNC Charlotte's request for authorization to establish a Ph.D. program in Nanoscale Science. The proposed Nanoscale Science program emerged from a feasibility study conducted by our Department of Chemistry, College of Arts and Sciences, and Graduate School. The proposed program will build on UNC Charlotte's strengths in precision metrology, optics, biomedical engineering and biotechnology. Thank you for your consideration of this request. Provost Joan Lorden or I would be pleased to respond to any questions that you may have regarding this request. Cordially, Philip L. Dubois, Chancellor. Enclosure (5 copies of the proposal). cc: Provost Joan F. Lorden, Dr. Nancy Gutierrez. The University of North Carolina is composed of the sixteen public senior institutions in North Carolina. An Equal Opportunity/Affirmative Action Employer. The University of North Carolina at Charlotte, Ph.D. in Nanoscale Science. Request for Authorization to Plan. Request for Authorization to Establish Ph.D. in Business Administration. THE UNIVERSITY OF NORTH CAROLINA Request for Authorization to Establish a New Degree Program. INSTRUCTIONS: Please submit five copies of the proposal to the Senior Vice President for Academic Affairs, UNC Office of the President. Each proposal should include a 2-3 page executive summary.

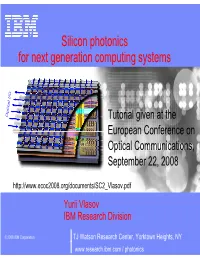

Silicon Photonics for Next Generation Computing Systems

Silicon photonics for next generation computing systems. Tutorial given at the European Conference on Optical Communications, September 22, 2008. http://www.ecoc2008.org/documents/SC2_Vlasov.pdf Yurii Vlasov, IBM Research Division, TJ Watson Research Center, Yorktown Heights, NY. © 2008 IBM Corporation. www.research.ibm.com/photonics Outline: Hierarchy of interconnects in HPC. Active cables for rack-to-rack communications. Board level interconnects. On-chip optical interconnects: CMOS integration challenges; photonic network on a chip. Silicon nanophotonics: WDM; light sources; modulators; switches; detectors. Conclusions. DISCLAIMER: The views expressed in this document are those of the author and do not necessarily represent the views of IBM Corporation. Hierarchy of interconnects in HPC systems. [Figure: server, I/O, and switch cabinets; shelves, blades, and backplanes.] Multiple blades on a shelf are interconnected through an electrical backplane. Optical interconnects between shelves; interconnects moderated by the switch. Many Tb/sec off node card and growing (20-50% CGR). Moore’s law in HPCS. [Figure: performance scale from 100 GB/s to 100 PB/s, with a "Biggest machine" series; after Jack Dongarra, top500.org.] 209 of the top 500 supercomputers have been built by IBM. Sum total performance 14.03596 PF