Data Storage and Memory Technology (Part 1)

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Chapter 3 Semiconductor Memories

Chapter 3: Semiconductor Memories. Jin-Fu Li, Advanced Reliable Systems (ARES) Lab., Department of Electrical Engineering, National Central University, Jungli, Taiwan. Outline: Introduction; Random Access Memories; Content Addressable Memories; Read Only Memories; Flash Memories. Overview of Memory Types: semiconductor memories divide into read/write memory, or random access memory (RAM), and read only memory (ROM). Random access RAM: static RAM (SRAM), dynamic RAM (DRAM), register file. Non-random access RAM: FIFO/LIFO, shift register, content addressable memory (CAM). ROM: mask (fuse) ROM, programmable ROM (PROM), erasable PROM (EPROM), electrically erasable PROM (EEPROM), flash memory, ferroelectric RAM (FRAM), magnetic RAM (MRAM). Memory Elements and Memory Architecture: memory elements may be divided into the following categories: random access memory, serial access memory, and content addressable memory. [Figure: memory architecture. An array of 2^(n-k) words by 2^(m+k) bits with row decoders, a column decoder and column mux driven by k address bits, sense amplifiers, write buffers, an n-bit address, and 2^m-bit data I/Os.] 1-D Memory Architecture: [Figure: n words, Word0 to Word(n-1), each an m-bit storage element with m-bit input/output, selected by n select signals S0 to S(n-1).] With a decoder, the n select signals are reduced to k address signals, A0 to A(k-1). Memory Architecture: [Figure: a row decoder driven by A0 to A(k-1), a column decoder driven by A0 to A(j-1), a sense amplifier, a read/write circuit, and m-bit input/output.] -
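
The excerpt above says a decoder reduces n select signals to k address signals. A minimal Python sketch of that idea follows. It assumes n = 2^k and one-hot select lines, which the excerpt does not state explicitly, and the function name `decode_address` is hypothetical.

```python
def decode_address(address: int, k: int) -> list[int]:
    """Return the n = 2**k select signals S0..S(n-1) for a k-bit address.

    Assumption: exactly one select line is active (1) at a time, the one
    whose index equals the address value.
    """
    n = 1 << k  # number of words reachable with k address bits
    if not 0 <= address < n:
        raise ValueError(f"address {address} does not fit in {k} bits")
    return [1 if i == address else 0 for i in range(n)]


if __name__ == "__main__":
    # Two address bits (A0, A1) select one of four words.
    print(decode_address(2, 2))
```

With k = 2, the address value 2 activates only S2, so four word lines need just two address pins.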

The Law on Optical Discs

THE LAW ON OPTICAL DISCS I. GENERAL PROVISIONS Subject-matter of the Law Article 1 This Law shall regulate the manufacturing, commercial duplication, circulation and exports of optical discs, and imports and exports of production parts, raw materials and equipment used for manufacturing of optical discs. Definitions Article 2 The terms used in this Law, shall have the following meaning: 1) Optical Disc shall mean any medium or device, other than a production part, on which data in digital form, readable by means of an optical scanning mechanism employing a high-density light source (a laser), has been stored or is capable of being stored, including all the formats, notably: - CD (compact disc) - CD-DA (compact disc digital audio) - CD-I (compact disc – interactive) - CD-P (compact disc – photo) - CD-ROM (compact disc read-only memory) - CD-R (compact disc recordable) - CD-RW (compact disc rewritable) - CD-WO (compact disc write once) - DVD (digital versatile disc) - DVD-RAM (digital versatile disc random access memory) - DVD-ROM (digital versatile disc read-only memory) - LD (laserdisc) - MD (mini-disc) - VCD (video compact disc) - CVD (China video disc) - SVCD (super video compact disc) - SACD (Super audio compact disc) 2) Production Part shall mean any component of a mould (a “stamper”), that embodies data in a digital form and is capable of being used to mould optical discs, or any other device embodying data from which a stamper may be produced by means of an electroplating process; 3) Master disc shall mean a disc made of glass or polymer that contains data in digital form used to produce Production Part (a “stamper”);. -

An Overview of the 50 Most Common Web Scraping Tools

An Overview of the 50 Most Common Web Scraping Tools. Web scraping is the process of using bots to extract content and data from a website. Unlike screen scraping, which only copies pixels displayed on screen, web scraping extracts underlying code, and with it stored data, and outputs that information into a designated file format. While legitimate use cases exist for data harvesting, illegal purposes exist as well, including undercutting prices and theft of copyrighted content. Understanding web scraping bots starts with understanding the diverse array of web scraping tools and existing platforms. Following is a high-level overview of the 50 most common web scraping tools and platforms currently available.
50 of the Most Common Web Scraping Tools (name and description):
1. Apache Nutch: Apache Nutch is an extensible and scalable open-source web crawler software project.
2. A-Parser: A-Parser is a multithreaded parser of search engines, site assessment services, keywords and content.
3. Apify: Apify is a Node.js library similar to Scrapy and can be used for scraping libraries in JavaScript.
4. Artoo.js: Artoo.js provides script that can be run from your browser’s bookmark bar to scrape a website and return the data in JSON format.
5. Blockspring: Blockspring lets users build visualizations from the most innovative blocks developed by engineers within your organization.
6. BotScraper: BotScraper is a tool for advanced web scraping and data extraction services that helps organizations from small and medium-sized businesses.
7. Cheerio: Cheerio is a library that parses HTML and XML documents and allows use of jQuery syntax while working with the downloaded data. -

Storage & Accessories Product Catalogue

Storage and Accessories Product Catalogue www.verbatim.com ABOUT VERBATIM Verbatim is a group company of Mitsubishi Chemical, one of the world’s largest chemical companies. For almost 50 years, the Verbatim brand has been at the forefront of the evolution in data storage technology and remains one of the most recognisable and respected names in the industry. Not only is Verbatim the world’s No 1 supplier of optical media (Blu-ray, DVD and CD), it also markets external hard drive storage solutions, SSDs, USB drives, memory cards, and a wide range of related accessories. The company has a strong reputation as a trusted global supplier providing quality products backed up by a fast and reliable service. Verbatim also has a significant presence in the LED lighting market, where it develops and sells a wide range of LED lamps and luminaires for offices, hotels, restaurants and domestic use. Many of the lighting products have been developed by the Mitsubishi Chemical R&D team, resulting in LEDs with world class thermal management, high colour rendering and low glare. Another Verbatim business category to have benefited from this development expertise is 3D Printing, where Verbatim has recently introduced a range of high quality filaments incorporating unique materials from Mitsubishi Chemical. Further information on these two exciting business categories can be found on www.verbatim.com. Contents: OPTICAL MEDIA: MDisc, Blu-ray, DVD, CD, Professional Optical Media, Optical Writers, CD/DVD Cases. FLASH MEMORY MEDIA: USB Drives, Solid State Drives (SSD), Flash Memory Cards. -

Hard Disk Drives

37 Hard Disk Drives. The last chapter introduced the general concept of an I/O device and showed you how the OS might interact with such a beast. In this chapter, we dive into more detail about one device in particular: the hard disk drive. These drives have been the main form of persistent data storage in computer systems for decades and much of the development of file system technology (coming soon) is predicated on their behavior. Thus, it is worth understanding the details of a disk’s operation before building the file system software that manages it. Many of these details are available in excellent papers by Ruemmler and Wilkes [RW92] and Anderson, Dykes, and Riedel [ADR03]. CRUX: HOW TO STORE AND ACCESS DATA ON DISK. How do modern hard-disk drives store data? What is the interface? How is the data actually laid out and accessed? How does disk scheduling improve performance? 37.1 The Interface. Let’s start by understanding the interface to a modern disk drive. The basic interface for all modern drives is straightforward. The drive consists of a large number of sectors (512-byte blocks), each of which can be read or written. The sectors are numbered from 0 to n − 1 on a disk with n sectors. Thus, we can view the disk as an array of sectors; 0 to n − 1 is thus the address space of the drive. Multi-sector operations are possible; indeed, many file systems will read or write 4KB at a time (or more). However, when updating the disk, the only guarantee drive manufacturers make is that a single 512-byte write is atomic (i.e., it will either complete in its entirety or it won’t complete at all); thus, if an untimely power loss occurs, only a portion of a larger write may complete (sometimes called a torn write). -
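
The interface described in this excerpt (an array of 512-byte sectors where only single-sector writes are atomic) can be sketched in Python as below. This is an illustrative toy model, not how a real drive or driver is implemented; the class `SimpleDisk` and the `power_loss_after` parameter are hypothetical names introduced only to simulate a torn write.

```python
SECTOR_SIZE = 512  # bytes per sector, as stated in the excerpt


class SimpleDisk:
    """Toy model: the disk is an array of sectors addressed 0..n-1."""

    def __init__(self, num_sectors: int) -> None:
        self.sectors = [bytes(SECTOR_SIZE) for _ in range(num_sectors)]

    def read(self, sector: int) -> bytes:
        if not 0 <= sector < len(self.sectors):
            raise IndexError("sector outside the drive's address space")
        return self.sectors[sector]

    def write(self, start: int, data: bytes, power_loss_after=None) -> int:
        """Write whole sectors starting at `start`; return sectors written.

        Each sector is replaced in one step (atomic). If `power_loss_after`
        is set, the write stops after that many sectors, which models a
        torn multi-sector write.
        """
        if len(data) % SECTOR_SIZE != 0:
            raise ValueError("data must be a whole number of sectors")
        count = len(data) // SECTOR_SIZE
        if start < 0 or start + count > len(self.sectors):
            raise IndexError("write extends past the end of the disk")
        for i in range(count):
            if power_loss_after is not None and i >= power_loss_after:
                return i  # simulated power loss: later sectors keep old data
            self.sectors[start + i] = data[i * SECTOR_SIZE:(i + 1) * SECTOR_SIZE]
        return count


if __name__ == "__main__":
    disk = SimpleDisk(16)
    # A 4KB write spans 8 sectors; power fails after 3 of them.
    written = disk.write(0, b"\xAA" * 4096, power_loss_after=3)
    print(written, disk.read(2)[:1], disk.read(3)[:1])
```

In the usage example, sectors 0 to 2 hold the new data and sectors 3 to 7 still hold the old contents, which is the torn-write state a file system has to tolerate.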

Data and Computer Communications (Eighth Edition)

DATA AND COMPUTER COMMUNICATIONS, Eighth Edition. William Stallings. Upper Saddle River, New Jersey 07458. Library of Congress Cataloging-in-Publication Data on File. Vice President and Editorial Director, ECS: Marcia J. Horton. Executive Editor: Tracy Dunkelberger. Assistant Editor: Carole Snyder. Editorial Assistant: Christianna Lee. Executive Managing Editor: Vince O’Brien. Managing Editor: Camille Trentacoste. Production Editor: Rose Kernan. Director of Creative Services: Paul Belfanti. Creative Director: Juan Lopez. Cover Designer: Bruce Kenselaar. Managing Editor, AV Management and Production: Patricia Burns. Art Editor: Gregory Dulles. Director, Image Resource Center: Melinda Reo. Manager, Rights and Permissions: Zina Arabia. Manager, Visual Research: Beth Brenzel. Manager, Cover Visual Research and Permissions: Karen Sanatar. Manufacturing Manager, ESM: Alexis Heydt-Long. Manufacturing Buyer: Lisa McDowell. Executive Marketing Manager: Robin O’Brien. Marketing Assistant: Mack Patterson. ©2007 Pearson Education, Inc. Pearson Prentice Hall, Pearson Education, Inc., Upper Saddle River, NJ 07458. All rights reserved. No part of this book may be reproduced in any form or by any means, without permission in writing from the publisher. Pearson Prentice Hall™ is a trademark of Pearson Education, Inc. All other trademarks or product names are the property of their respective owners. The author and publisher of this book have used their best efforts in preparing this book. These efforts include the development, research, and testing of the theories and programs to determine their effectiveness. The author and publisher make no warranty of any kind, expressed or implied, with regard to these programs or the documentation contained in this book. The author and publisher shall not be liable in any event for incidental or consequential damages in connection with, or arising out of, the furnishing, performance, or use of these programs. -

Section 10 Flash Technology

10 FLASH TECHNOLOGY. Overview: Flash memory technology is a mix of EPROM and EEPROM technologies. The term “flash” was chosen because a large chunk of memory could be erased at one time. The name, therefore, distinguishes flash devices from EEPROMs, where each byte is erased individually. Flash memory technology is today a mature technology. Flash memory is a strong competitor to other memories such as EPROMs, EEPROMs, and to some DRAM applications. Figure 10-1 shows the density comparison of a flash versus other memories. [Figure 10-1. Flash Density Versus Other Memory: density (64K to 64M) plotted against year (1980 to 1996) for DRAM/EPROM, SRAM/EEPROM, and flash. Source: Intel/ICE, "Memory 1996".] How the Device Works: The elementary flash cell consists of one transistor with a floating gate, similar to an EPROM cell. However, technology and geometry differences between flash devices and EPROMs exist. In particular, the gate oxide between the silicon and the floating gate is thinner for flash technology. It is similar to the tunnel oxide of an EEPROM. Source and drain diffusions are also different. Figure 10-2 shows a comparison between a flash cell and an EPROM cell with the same technology complexity. Due to thinner gate oxide, the flash device will be more difficult to process. [Figure 10-2: photos by ICE of a CMOS flash cell and a CMOS EPROM cell, each at 10,000x magnification. Flash memory cell: larger transistor, thinner floating gate, thinner oxide (100-200Å).] -
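
The distinction drawn in this excerpt, flash erasing a large chunk at once versus EEPROM erasing each byte individually, can be illustrated with a small Python sketch. The class names, the block size, and the erased value 0xFF are assumptions made for illustration; the excerpt specifies none of them.

```python
ERASED = 0xFF  # assumed erased-cell value, chosen only for illustration


class ByteErasableMemory:
    """EEPROM-like model: the erase granularity is a single byte."""

    def __init__(self, size: int) -> None:
        self.cells = bytearray([ERASED]) * size

    def erase_byte(self, address: int) -> None:
        if not 0 <= address < len(self.cells):
            raise IndexError("address out of range")
        self.cells[address] = ERASED


class BlockErasableMemory:
    """Flash-like model: the erase granularity is a whole block."""

    def __init__(self, size: int, block_size: int) -> None:
        if size % block_size != 0:
            raise ValueError("size must be a multiple of block_size")
        self.block_size = block_size
        self.cells = bytearray([ERASED]) * size

    def erase_block(self, block_index: int) -> None:
        start = block_index * self.block_size
        if not 0 <= start < len(self.cells):
            raise IndexError("block index out of range")
        # Every byte in the block is erased in one operation.
        self.cells[start:start + self.block_size] = bytes([ERASED]) * self.block_size


if __name__ == "__main__":
    flash = BlockErasableMemory(size=8, block_size=4)
    flash.cells[:] = bytes(8)       # pretend all cells were programmed to 0x00
    flash.erase_block(1)            # erases bytes 4..7 together
    print(flash.cells.hex())
```

Erasing one byte in the flash-like model is not possible without erasing its whole block, which is the trade-off the name "flash" refers to.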

Nanotechnology – NRAM (Nano Random Access

International Journal Of Engineering Research and Technology (IJERT), IFET-2014 Conference Proceedings, INTERFACE ECE T14, INTRACT – INNOVATE - INSPIRE. NANOTECHNOLOGY – NRAM (NANO RANDOM ACCESS MEMORY). RANJITHA. T, SANDHYA. R, GOVERNMENT COLLEGE OF TECHNOLOGY, COIMBATORE. Abstract: NRAM (Nano Random Access Memory), is one of the important applications of nanotechnology. This paper has been prepared to cull out answers for the following crucial questions: What is NRAM? What is the need of it? How can it be made possible? What is the principle and technology involved in NRAM? What are the advantages and features of NRAM? The world is longing for all the things it can use within its palm. As a result nanotechnology is taking its head in the world. Much of the electronic gadgets are reduced in size and increased in efficiency by the nanotechnology. The memory storage devices are somewhat large in size due to the materials used for their [...] containing elements, nanotubes, are so small, NRAM technology will achieve very high memory densities: at least 10-100 times our current best. NRAM will operate electromechanically rather than just electrically, setting it apart from other memory technologies as a nonvolatile form of memory, meaning data will be retained even when the power is turned off. The creators of the technology claim it has the advantages of all the best memory technologies with none of the disadvantages, setting it up to be the universal medium for memory in the future. TECHNOLOGY: Nantero's technology is based on a well-known effect in carbon nanotubes where crossed nanotubes on a flat surface can either be touching or slightly separated in the vertical direction (normal to the substrate) due to van der Waals interactions. -

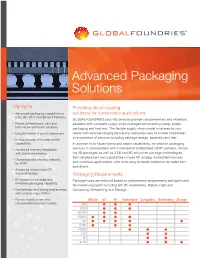

Advanced Packaging Solutions

Advanced Packaging Solutions. Providing silicon-scaling solutions for tomorrow’s applications. GLOBALFOUNDRIES post-fab services provide complementary and extended solutions with complete supply chain management including bump, probe, packaging and final test. The flexible supply chain model is tailored to your needs with services ranging from bump and probe only to a more comprehensive spectrum of services including package design, assembly and test. In addition to in-house bump and probe capabilities, we provide packaging services in collaboration with a network of established OSAT partners, including 2D packages as well as 2.5D and 3D advanced package technologies. Test development and capabilities include RF, analog, embedded memory, and mmWave applications, with wide array of tester platforms for wafer sort operations. Packaging Requirements: Package types are selected based on performance requirements and optimized for market segments including IoT, RF, Automotive, Mobile, High-end Computing, Networking and Storage. [Figure: package types (QFN, FBGA, WLCSP, FOWLP, SiP, fcCSP, FCBGA, 2.5D, 3D, Si-PH) mapped to market segments (Mobile, IoT, RF, Automotive, Computing, Networking, Storage).]
Highlights: • Advanced packaging capabilities in 2.5D, 3D, WLP and Silicon Photonics • Power, performance, cost and form-factor optimized solutions • Industry leader in smart interposers • In-house bump and wafer probe capabilities • Advanced memory integration with stacked memories • Ownership and process maturity for HVM • Advanced silicon node CPI and qualification • RF system-in-package and mmWave packaging capability • Partnerships and strong relationships with leading-edge OSATs • Flexible supply chain and collaborative business models. Packaging Technologies: GF Si nodes are qualified in a wide range of package technologies including 2D wirebond designs, flip chip, WLCSP and FOWLP configurations, as well as 2.5D, 3D and Si-Photonics. TSV Si Interposer Availability: Full Reticle, 26x33 mm2; Stitched Interposer, >1300 mm2; 10:1 Aspect Ratio TSV, 10um Dia./100um Depth. -

AXP Internal 2-Apr-20 1

2-Apr-20 AXP Internal. Class 6. Subject: Computer Science. Title of the Book: IT Planet Petabyte. Chapter 2: Computer Memory. GENERAL INSTRUCTIONS: • Exercises to be written in the book. • Assignment questions to be done in ruled sheets. • YouTube link is for the explanation of Primary and Secondary Memory. YouTube Link: ➢ https://youtu.be/aOgvgHiazQA INTRODUCTION: ➢ Computers can store a large amount of data safely in their memory for future use. ➢ A computer’s memory is measured either in Bits or Bytes. ➢ The memory of a computer is divided into two categories: Primary Memory, Secondary Memory. ➢ There are two types of Primary Memory: ROM and RAM. ➢ Cache Memory is used to store programs and instructions that are frequently used. EXPLANATION: Computer Memory: Memory plays a very important role in a computer. It is the basic unit where data and instructions are stored temporarily. Memory usually consists of one or more chips on the motherboard, or you can say it consists of electronic components that store instructions waiting to be executed by the processor, data needed by those instructions, and the results of processing the data. Memory Units: Computer memory is measured in bits and bytes. A bit is the smallest unit of information that a computer can process and store. A group of 4 bits is known as a nibble, and a group of 8 bits is called a byte. -
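
The units named in this excerpt (a nibble is 4 bits, a byte is 8 bits) can be made concrete with a short Python sketch. The function names `split_nibbles` and `bytes_needed` are hypothetical helpers written for this illustration.

```python
BITS_PER_NIBBLE = 4
BITS_PER_BYTE = 8


def split_nibbles(byte_value: int) -> tuple[int, int]:
    """Split one byte (0..255) into its high and low 4-bit nibbles."""
    if not 0 <= byte_value <= 0xFF:
        raise ValueError("value does not fit in one byte")
    return byte_value >> BITS_PER_NIBBLE, byte_value & 0x0F


def bytes_needed(bit_count: int) -> int:
    """Return how many whole bytes are needed to hold `bit_count` bits."""
    if bit_count < 0:
        raise ValueError("bit_count must be non-negative")
    return (bit_count + BITS_PER_BYTE - 1) // BITS_PER_BYTE


if __name__ == "__main__":
    print(split_nibbles(0xA7))  # high nibble 0xA, low nibble 0x7
    print(bytes_needed(9))      # 9 bits do not fit in 1 byte
```

Two nibbles make up one byte, so every byte value can be written as exactly two hexadecimal digits.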

Fast Non-Volatile RAM Products Superior Price and Performance from a Source You Can Trust

Fast Non-Volatile RAM Products. Superior price and performance from a source you can trust. www.freescale.com/MRAM The Future of Non-Volatile Read/Write Memory is Magnetic. Freescale Semiconductor delivers the world’s first commercial magnetoresistive random access memory (MRAM) products. Our MRAM products store data using magnetic polarization rather than electric charge. MRAM stores data for decades while reading and writing at SRAM speed without wear-out. MRAM products use small, simple cells to deliver the highest density and best price/performance in the non-volatile RAM marketplace. With our new expanded product line, we serve a growing portion of the [...] non-volatile for greater than 20 years. SRAM-compatible packaging assures alternate sourcing from other suppliers. Extended Temperature Range and Superior Reliability: MRAM delivers a 3 volt high-density non-volatile RAM that operates over extended temperature. MRAM does not exhibit the charge storage failure modes that limit the data retention or endurance of other technologies.
Commercial MRAM Products Selector Guide
Part Number | Density | Configuration | Voltage | Speed Grades | Temperature | Package | RoHS Compliant
MR2A16ATS35C | 4 Mb | 256 Kb x 16 | 3.3V | 35 ns | 0 to 70°C | 44-TSOP Type II | √
MR1A16AYS35 | 2 Mb | 128 Kb x 16 | 3.3V | 35 ns | 0 to 70°C | 44-TSOP Type II | √
MR0A16AYS35 | 1 Mb | 64 Kb x 16 | 3.3V | 35 ns | 0 to 70°C | 44-TSOP Type II | √
Freescale MRAM Features: • 35 ns read/write cycle time • Unlimited read/write endurance • 3.3V ± 10 percent power supply • Always non-volatile with greater than 20-year retention • Magnetically shielded to greater than 25 oersted (Oe) • Commercial, Industrial and Extended Temperature Options -

Nasdeluxe Z-Series

NASdeluxe Z-Series. Benefit from scalable ZFS data storage with Starline Computer’s NASdeluxe Z-series and Open-E JovianDSS. This software-defined storage solution is well-suited for a wide range of applications. It caters perfectly to the needs of enterprises that are looking to deploy a flexible storage configuration which can be expanded to a high availability cluster. Starline and Open-E can look back on a strategic partnership of more than 10 years. As the first partner with a Gold partnership level, Starline has always been working hand in hand with Open-E to develop and deliver innovative data storage solutions. In fact, Starline supports worldwide enterprises in managing and protecting their storage, with over 2,800 Open-E installations to date. www.starline.de By partnering with Starline and Open-E, you receive highly efficient and reliable storage solutions that offer: • Great adaptability • Tiered and all-flash storage systems • High IOPS through RAM and SSD caching • Superb expandability with Starline’s high-density JBODs, without downtime. Starline’s NASdeluxe Z-Series offers not only great features, but also great flexibility, thanks to its modular architecture. Features: Enhanced Storage Performance; Tiered RAM and SSD cache; Data integrity check; Data compression and in-line data deduplication; Thin provisioning and unlimited number of snapshots and clones; Simplified management; Flexible scalability; Hardware independence. Z-Series: But even with a standard configuration with nearline HDDs and SSDs for caching, you will be able to achieve high IOPS at a reasonable cost. [Chart: IOPS, axis value 250 000.]