Turing's Two Tests for Intelligence

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Alan M. Turing – Simplification in Intelligent Computing Theory and Algorithms

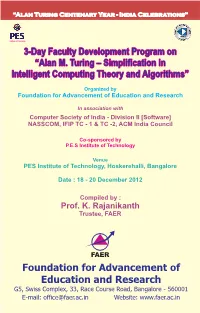

“Alan Turing Centenary Year - India Celebrations” 3-Day Faculty Development Program on “Alan M. Turing – Simplification in Intelligent Computing Theory and Algorithms”. Organized by Foundation for Advancement of Education and Research, in association with Computer Society of India - Division II [Software], NASSCOM, IFIP TC-1 & TC-2, ACM India Council. Co-sponsored by P.E.S Institute of Technology. Venue: PES Institute of Technology, Hoskerehalli, Bangalore. Date: 18 - 20 December 2012. Compiled by: Prof. K. Rajanikanth, Trustee, FAER. Foundation for Advancement of Education and Research, G5, Swiss Complex, 33, Race Course Road, Bangalore - 560001. E-mail: [email protected] Website: www.faer.ac.in PREFACE Alan Mathison Turing was born on June 23rd 1912 in Paddington, London. Alan Turing was a brilliant original thinker. He made original and lasting contributions to several fields, from theoretical computer science to artificial intelligence, cryptography, biology, philosophy etc. He is generally considered the father of theoretical computer science and artificial intelligence. His brilliant career came to a tragic and untimely end in June 1954. In 1945 Turing was awarded the O.B.E. for his vital contribution to the war effort. In 1951 Turing was elected a Fellow of the Royal Society.

Much Has Been Written About the Turing Test in the Last Few Years, Some of It

Much has been written about the Turing Test in the last few years, some of it preposterously off the mark. People typically mis-imagine the test by orders of magnitude. This essay is an antidote, a prosthesis for the imagination, showing how huge the task posed by the Turing Test is, and hence how unlikely it is that any computer will ever pass it. It does not go far enough in the imagination-enhancement department, however, and I have updated the essay with a new postscript. Can Machines Think? (Originally appeared in Shafto, M., ed., How We Know, San Francisco: Harper & Row, 1985.) Can machines think? This has been a conundrum for philosophers for years, but in their fascination with the pure conceptual issues they have for the most part overlooked the real social importance of the answer. It is of more than academic importance that we learn to think clearly about the actual cognitive powers of computers, for they are now being introduced into a variety of sensitive social roles, where their powers will be put to the ultimate test: In a wide variety of areas, we are on the verge of making ourselves dependent upon their cognitive powers. The cost of overestimating them could be enormous. One of the principal inventors of the computer was the great British mathematician Alan Turing. It was he who first figured out, in highly abstract terms, how to design a programmable computing device--what we now call a universal Turing machine. All programmable computers in use today are in essence Turing machines.

Turing Test Does Not Work in Theory but in Practice

Int'l Conf. Artificial Intelligence | ICAI'15 | 433 Turing test does not work in theory but in practice. Pertti Saariluoma (Information Technology, University of Jyväskylä, Jyväskylä, Finland) and Matthias Rauterberg (Industrial Design, Eindhoven University of Technology, Eindhoven, The Netherlands). Abstract - The Turing test is considered one of the most important thought experiments in the history of AI. It is argued that the test shows how people think like computers, but is this actually true? In this paper, we discuss an entirely new perspective. Scientific languages have their foundational limitations, for example, in their power of expression. It is thus possible to discuss the limitations of formal concepts and theory languages. In order to represent real world phenomena in formal concepts, formal symbols must be given semantics and information contents; that is, they must be given an interpretation. The key argument is that it is not possible to express … Essentially, the Turing test is an imitation game. Like any good experiment, it has two conditions. In the case of control conditions, it is assumed that there is an opaque screen. On one side of the screen is an interrogator whose task is to ask questions and assess the nature of the answers. On the other side, there are two people, A and B. The task of A and B is to answer the questions, and the task of the interrogator is to guess who has given the answer. In the case of experimental conditions, B is replaced by a machine (computer) and again, the interrogator must decide whether it was the human or the machine who answered the questions.

Semantics and Syntax a Legacy of Alan Turing Scientific Report

Semantics and Syntax: A Legacy of Alan Turing. Scientific Report. Arnold Beckmann (Swansea), S. Barry Cooper (Leeds), Benedikt Löwe (Amsterdam), Elvira Mayordomo (Zaragoza), Nigel P. Smart (Bristol). 1 Basic theme and background information. Why was the programme organised on this particular topic? The programme Semantics and Syntax was one of the central activities of the Alan Turing Year 2012 (ATY). The ATY was the world-wide celebration of the life and work of the exceptional scientist Alan Mathison Turing (1912–1954) with a particular focus on the places where Turing worked during his life: Cambridge, Bletchley Park, and Manchester. The research programme Semantics and Syntax took place during the first six months of the ATY in Cambridge, included Turing's 100th birthday on 23 June 2012 at King's College, and combined the character of an intensive high-level research semester with outgoing activities as part of the centenary celebrations. The programme had xx visiting fellows, xx programme participants, and xx workshop participants, many of whom were leaders of their respective fields. Alan Turing's work was too broad for a coherent research programme, and so we focused on only some of the areas influenced by this remarkable scientist: logic, complexity theory and cryptography. This selection was motivated by a common phenomenon of a divide in these fields between aspects coming from logical considerations (called Syntax in the title of the programme) and those coming from structural, mathematical or algorithmic considerations (called Semantics in the title of the programme). As argued in the original proposal, these instances of the syntax-semantics divide are a major obstacle for progress in our fields, and the programme aimed at bridging this divide.

THE TURING TEST RELIES on a MISTAKE ABOUT the BRAIN For

THE TURING TEST RELIES ON A MISTAKE ABOUT THE BRAIN. K. L. KIRKPATRICK. Abstract. There has been a long controversy about how to define and study intelligence in machines and whether machine intelligence is possible. In fact, both the Turing Test and the most important objection to it (called variously the Shannon-McCarthy, Blockhead, and Chinese Room arguments) are based on a mistake that Turing made about the brain in his 1948 paper "Intelligent Machinery," a paper that he never published but whose main assumption got embedded in his famous 1950 paper and the celebrated Imitation Game. In this paper I will show how the mistake is a false dichotomy and how it should be fixed, to provide a solid foundation for a new understanding of the brain and a new approach to artificial intelligence. In the process, I make an analogy between the brain and the ribosome, machines that translate information into action, and through this analogy I demonstrate how it is possible to go beyond the purely information-processing paradigm of computing and to derive meaning from syntax. For decades, the metaphor of the brain as an information processor has dominated both neuroscience and artificial intelligence research and allowed the fruitful applications of Turing's computation theory and Shannon's information theory in both fields. But this metaphor may be leading us astray, because of its limitations and the deficiencies in our understanding of the brain and AI. In this paper I will present a new metaphor to consider, of the brain as both an information processor and a producer of physical effects.

The Turing Test and Other Design Detection Methodologies∗

Detecting Intelligence: The Turing Test and Other Design Detection Methodologies∗ George D. Montañez, Machine Learning Department, Carnegie Mellon University, Pittsburgh PA, USA [email protected] Keywords: Turing Test, Design Detection, Intelligent Agents. Abstract: “Can machines think?” When faced with this “meaningless” question, Alan Turing suggested we ask a different, more precise question: can a machine reliably fool a human interviewer into believing the machine is human? To answer this question, Turing outlined what came to be known as the Turing Test for artificial intelligence, namely, an imitation game where machines and humans interacted from remote locations and human judges had to distinguish between the human and machine participants. According to the test, machines that consistently fool human judges are to be viewed as intelligent. While popular culture champions the Turing Test as a scientific procedure for detecting artificial intelligence, doing so raises significant issues. First, a simple argument establishes the equivalence of the Turing Test to intelligent design methodology in several fundamental respects. Constructed with similar goals, shared assumptions and identical observational models, both projects attempt to detect intelligent agents through the examination of generated artifacts of uncertain origin. Second, if the Turing Test rests on scientifically defensible assumptions then design inferences become possible and cannot, in general, be wholly unscientific. Third, if passing the Turing Test reliably indicates intelligence, this implies the likely existence of a designing intelligence in nature. 1 THE IMITATION GAME In his seminal paper on artificial intelligence (Tur… …gin et al., 2003), this paper presents a novel critique of the Turing Test in the spirit of a reductio ad absurdum.

A Review on Neural Turing Machine

A review on Neural Turing Machine. Soroor Malekmohammadi Faradounbeh, Faramarz Safi-Esfahani. Faculty of Computer Engineering, Najafabad Branch, Islamic Azad University, Najafabad, Iran. Big Data Research Center, Najafabad Branch, Islamic Azad University, Najafabad, Iran. [email protected], [email protected] Abstract— One of the major objectives of Artificial Intelligence is to design learning algorithms that are executed on general-purpose computational machines such as the human brain. Neural Turing Machine (NTM) is a step towards realizing such a computational machine. An attempt is made here to run a systematic review of the Neural Turing Machine. First, the mind-map and taxonomy of machine learning, neural networks, and the Turing machine are introduced. Next, NTM is inspected in terms of concepts, structure, variety of versions, implemented tasks, comparisons, etc. Finally, the paper discusses open issues and concludes with several directions for future work. Keywords— NTM, Deep Learning, Machine Learning, Turing machine, Neural Networks. 1 INTRODUCTION Artificial Intelligence (AI) seeks to construct real intelligent machines. Neural networks constitute a small portion of AI, thus the human brain should be named as a Biological Neural Network (BNN). The brain is a very complicated non-linear and parallel computer [1]. A relatively new neural learning technology in AI, named ‘Deep Learning’, consists of multi-layer neural network learning techniques. Deep Learning provides a computerized system to observe abstract patterns similar to that of the human brain, while providing the means to resolve cognitive problems. As to the development of deep learning, there have recently been many effective methods for multi-layer neural network training such as the Recurrent Neural Network (RNN) [2].

Alan M. Turing 23 June 1912 – 7 June 1954

Alan M. Turing 23 June 1912 – 7 June 1954. This list gives an overview of books and DVDs related to the life and work of Alan M. Turing. Most of the documents are available for loan in the Informatics Library (Informatikkbiblioteket). The library has many books on Turing machines and computability; check the shelves at F.0 and F.1.*. Literature on cryptography can be found at E.3.0 and on artificial intelligence at I.2.0. You can also use the highlighted words as search terms in BIBSYS: http://app.uio.no/ub/emnesok/?id=ureal The URLs in the list can be used to check a document's loan status in BIBSYS or to order and reserve it. You need a PDF reader that handles links. The cryptic information in parentheses on the line before the URL shows the shelf location in the Informatics Library (or the order status). Normally the books in this list will be placed on shelves near the display case for as long as the Turing exhibition is up. The books can be borrowed from there in the usual way. [1] Jon Agar. Turing and the universal machine: the making of the modern computer. Icon, Cambridge, 2001. (K.2.0 AGA) http://ask.bibsys.no/ask/action/show?pid=010911804&kid=biblio. [2] H. Peter Alesso and C.F. Smith. Thinking on the Web: Berners-Lee, Gödel, and Turing. John Wiley & Sons, Hoboken, N.J., 2006. (I.2.4 Ale) http://ask.bibsys.no/ask/action/show?pid=08079954x&kid=biblio. [3] Michael Apter. Enigma. Based on: Enigma by Robert Harris. Subtitled in Norwegian, Swedish, Danish, Finnish, English. In this twisty thriller about Britain’s secret code breakers during World War II, Tom Jericho devised the means to break the Nazi Enigma code (DVD) http://ask.bibsys.no/ask/action/show?pid=082617570&kid=biblio.

The Future Just Arrived

BLOG The Future Just Arrived. Brad Neuman, CFA, Senior Vice President, Director of Market Strategy. What if a computer were actually smart? You wouldn’t have to be an expert in a particular application to interact with it – you could just talk to it. You wouldn’t need an advanced degree to train it to do something specific - it would just know how to complete a broad range of tasks. For example, how would you create the following digital image or “button” in a traditional computer program? I have no idea because I can’t code. But a new computer program (called GPT-3) is so smart that all you have to do is ask it in plain English to generate “a button that looks like a watermelon.” The computer then generates the following code: … purpose natural language processing model. The interface is “text-in, text-out” but while the input is ordinary English language instructions, the output text can be anything from prose to computer code to poetry. In fact, with simple commands, the program can create an entire webpage, generate a household income statement and balance sheet from a description of your financial activities, create a cooking recipe, translate legalese into plain English, or even write an essay on the evolution of the economy that this strategist fears could put him out of a job! Indeed, if you talk with the program, you may even believe it is human. But can GPT-3 pass the Turing test, which assesses a machine’s ability to exhibit human-level intelligence? The answer: GPT-3 puts us a lot closer to that goal. Data is the Fuel. The technology behind this mind-blowing program has its roots in a neural network, deep learning architecture introduced by Google in 2017.

(GPT-3) in Healthcare Delivery ✉ Diane M

www.nature.com/npjdigitalmed COMMENT OPEN Considering the possibilities and pitfalls of Generative Pre-trained Transformer 3 (GPT-3) in healthcare delivery. Diane M. Korngiebel 1 and Sean D. Mooney 2. Natural language computer applications are becoming increasingly sophisticated and, with the recent release of Generative Pre-trained Transformer 3, they could be deployed in healthcare-related contexts that have historically comprised human-to-human interaction. However, for GPT-3 and similar applications to be considered for use in health-related contexts, possibilities and pitfalls need thoughtful exploration. In this article, we briefly introduce some opportunities and cautions that would accompany advanced Natural Language Processing applications deployed in eHealth. npj Digital Medicine (2021) 4:93; https://doi.org/10.1038/s41746-021-00464-x A seemingly sophisticated artificial intelligence, OpenAI’s Generative Pre-trained Transformer 3, or GPT-3, developed using computer-based processing of huge amounts of publicly available textual data (natural language) [1], may be coming to a healthcare clinic (or eHealth application) near you. This may sound fantastical, but not too long ago so did a powerful computer so tiny it could fit in the palm of your hand. … language texts. Although its developers at OpenAI think it performs well on translation, question answering, and cloze tasks (e.g., a fill-in-the-blank test to demonstrate comprehension of text by providing the missing words in a sentence) [1], it does not always predict a correct string of words that are believable as a conversation. And once it has started a wrong prediction (ranging from a semantic …

Lesson Plan Alan Turing

Lesson Plan: Alan Turing. Plaque marking Alan Turing's former home in Wilmslow, Cheshire. Grade Level(s): 9-12. Subject(s): Physics, History, Computation. In-Class Time: 60 min. Prep Time: 10 min. Materials • Either computers or printed copies of the CIA’s cypher games • Prize for the winner of the Code War Game • Discussion Sheets. Objective: In this lesson students will learn about the life and legacy of Alan Turing, father of the modern-day computer. Introduction: Alan Turing was born on June 23, 1912 in Paddington, London. At age 13, he attended boarding school at Sherborne School. Here he excelled in math and science. He paid little attention to liberal arts classes, to the frustration of his teachers. After Sherborne, Turing went on to study at King’s College in Cambridge. Here Turing produced an elegant solution to the Entscheidungsproblem (Decision problem) for universal machines. At this time, Alonzo Church also published a paper solving the problem, which led to their collaboration and the development of the Church-Turing Thesis, and the idea of Turing machines, which in theory can compute anything that is computable. (Prepared by the Center for the History of Physics at AIP.) During WWII Turing worked as a cryptanalyst breaking cyphers for the Allies at Bletchley Park. It was here that Turing built his “Bombe”, a machine that could quickly break any German cipher by running through hundreds of options per second. Turing’s machine and team were so quick at breaking these codes that the German army was convinced they had a British spy in their ranks.

The Legacy of Alan Turing and John Von Neumann

Chapter 1 Computational Intelligence: The Legacy of Alan Turing and John von Neumann. Heinz Mühlenbein. Abstract: In this chapter fundamental problems of collaborative computational intelligence are discussed. The problems are distilled from the seminal research of Alan Turing and John von Neumann. For Turing the creation of machines with human-like intelligence was only a question of programming time. In his research he identified the most relevant problems concerning evolutionary computation, learning, and structure of an artificial brain. Many problems are still unsolved, especially efficient higher learning methods which Turing called initiative. Von Neumann was more cautious. He doubted that human-like intelligent behavior could be described unambiguously in finite time and finite space. Von Neumann focused on self-reproducing automata to create more complex systems out of simpler ones. An early proposal from John Holland is analyzed. It centers on adaptability and population of programs. The early research of Newell, Shaw, and Simon is discussed. They use the logical calculus to discover proofs in logic. Only a few recent research projects have the broad perspectives and the ambitious goals of Turing and von Neumann. As examples the projects Cyc, Cog, and JANUS are discussed. 1.1 Introduction: Human intelligence can be divided into individual, collaborative, and collective intelligence. Individual intelligence is always multi-modal, using many sources of information. It developed from the interaction of the humans with their environment. Based on individual intelligence, collaborative intelligence developed. This means that humans work together with all the available allies to solve problems.