Comparing Optical Tracking Techniques in Distributed Asteroid Orbiter Missions Using Ray-Tracing

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


An Overview of Hayabusa2 Mission and Asteroid 162173 Ryugu

Asteroid Science 2019 (LPI Contrib. No. 2189) 2086.pdf AN OVERVIEW OF HAYABUSA2 MISSION AND ASTEROID 162173 RYUGU. S. Watanabe1,2, M. Hirabayashi3, N. Hirata4, N. Hirata5, M. Yoshikawa2, S. Tanaka2, S. Sugita6, K. Kitazato4, T. Okada2, N. Namiki7, S. Tachibana6,2, M. Arakawa5, H. Ikeda8, T. Morota6,1, K. Sugiura9,1, H. Kobayashi1, T. Saiki2, Y. Tsuda2, and Hayabusa2 Joint Science Team10, 1Nagoya University, Nagoya 464-8601, Japan ([email protected]), 2Institute of Space and Astronautical Science, JAXA, Japan, 3Auburn University, U.S.A., 4University of Aizu, Japan, 5Kobe University, Japan, 6University of Tokyo, Japan, 7National Astronomical Observatory of Japan, Japan, 8Research and Development Directorate, JAXA, Japan, 9Tokyo Institute of Technology, Japan, 10Hayabusa2 Project Summary: The Hayabusa2 mission reveals the nature of a carbonaceous asteroid through a combination of remote-sensing observations, in situ surface measurements by rovers and a lander, an active impact experiment, and analyses of samples returned to Earth. Introduction: Asteroids are fossils of planetesimals, building blocks of planetary formation. In particular carbonaceous asteroids (or C-complex asteroids) are expected to have keys identifying the material mixing in the early Solar System and deciphering the origin of water and organic materials on Earth [1]. ... Combined with the rotational motion of the asteroid, global surveys of Ryugu were conducted several times from ~20 km above the sub-Earth point (SEP), including global mapping from ONC-T (Fig. 1) and TIR, and scan mapping from NIRS3 and LIDAR. Descent observations covering the equatorial zone were performed from 3-7 km altitudes above SEP. Off-SEP observations of the polar regions were also conducted. Based on these observations, we constructed two types of the global shape models (using the Structure-from-Motion

Enhanced Gravity Tractor Technique for Planetary Defense

4th IAA Planetary Defense Conference – PDC 2015 13-17 April 2015, Frascati, Roma, Italy IAA-PDC-15-04-11 ENHANCED GRAVITY TRACTOR TECHNIQUE FOR PLANETARY DEFENSE Daniel D. Mazanek(1), David M. Reeves(2), Joshua B. Hopkins(3), Darren W. Wade(4), Marco Tantardini(5), and Haijun Shen(6) (1)NASA Langley Research Center, Mail Stop 462, 1 North Dryden Street, Hampton, VA, 23681, USA, 1-757-864-1739, (2) NASA Langley Research Center, Mail Stop 462, 1 North Dryden Street, Hampton, VA, 23681, USA, 1-757-864-9256, (3)Lockheed Martin Space Systems Company, Mail Stop H3005, PO Box 179, Denver, CO 80201, USA, 1-303-971-7928, (4)Lockheed Martin Space Systems Company, Mail Stop S8110, PO Box 179, Denver, CO 80201, USA, 1-303-977-4671, (5)Independent, via Tibaldi 5, Cremona, Italy, +393381003736, (6)Analytical Mechanics Associates, Inc., 21 Enterprise Parkway, Suite 300, Hampton, VA 23666, USA, 1-757-865-0000, Keywords: enhanced gravity tractor, in-situ mass augmentation, asteroid and comet deflection, planetary defense, low-thrust, high-efficiency propulsion, gravitational attraction, robotic mass collection Abstract Given sufficient warning time, Earth-impacting asteroids and comets can be deflected with a variety of different “slow push/pull” techniques. The gravity tractor is one technique that uses the gravitational attraction of a rendezvous spacecraft to the impactor and a low-thrust, high-efficiency propulsion system to provide a gradual velocity change and alter its trajectory. An innovation to this technique, known as the Enhanced Gravity Tractor (EGT), uses mass collected in-situ to augment the mass of the spacecraft, thereby greatly increasing the gravitational force between the objects.

Stardust Sample Return

National Aeronautics and Space Administration Stardust Sample Return Press Kit January 2006 www.nasa.gov Contacts: Merrilee Fellows, Policy/Program Management, (818) 393-0754, NASA Headquarters, Washington; DC Agle, Stardust Mission, (818) 393-9011, Jet Propulsion Laboratory, Pasadena, Calif.; Vince Stricherz, Science Investigation, (206) 543-2580, University of Washington, Seattle, Wash. Contents: General Release, 3; Media Services Information, 5; Quick Facts, 6; Mission Overview, 7; Recovery Timeline, 18; Spacecraft, 20; Science Objectives, 28; Why Stardust?, 31; Other Comet Missions, 33; NASA's Discovery Program, 36; Program/Project Management, 40. GENERAL RELEASE: NASA PREPARES FOR RETURN OF INTERSTELLAR CARGO NASA’s Stardust mission is nearing Earth after a 2.88 billion mile round-trip journey

Space Propulsion.Pdf

Deep Space Propulsion A Roadmap to Interstellar Flight K.F. Long Bsc, Msc, CPhys Vice President (Europe), Icarus Interstellar Fellow British Interplanetary Society Berkshire, UK ISBN 978-1-4614-0606-8 e-ISBN 978-1-4614-0607-5 DOI 10.1007/978-1-4614-0607-5 Springer New York Dordrecht Heidelberg London Library of Congress Control Number: 2011937235 © Springer Science+Business Media, LLC 2012 All rights reserved. This work may not be translated or copied in whole or in part without the written permission of the publisher (Springer Science+Business Media, LLC, 233 Spring Street, New York, NY 10013, USA), except for brief excerpts in connection with reviews or scholarly analysis. Use in connection with any form of information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed is forbidden. The use in this publication of trade names, trademarks, service marks, and similar terms, even if they are not identified as such, is not to be taken as an expression of opinion as to whether or not they are subject to proprietary rights. Printed on acid-free paper Springer is part of Springer Science+Business Media (www.springer.com) This book is dedicated to three people who have had the biggest influence on my life. My wife Gemma Long for your continued love and companionship; my mentor Jonathan Brooks for your guidance and wisdom; my hero Sir Arthur C. Clarke for your inspirational vision – for Rama, 2001, and the books you leave behind. Foreword We live in a time of troubles.

1950 Da, 205, 269 1979 Va, 230 1991 Ry16, 183 1992 Kd, 61 1992

Cambridge University Press 978-1-107-09684-4 — Asteroids Thomas H. Burbine Index (page 356): 1950 DA, 205, 269; 1979 VA, 230; 1991 RY16, 183; 1992 KD, 61; 1992 QB1, 233, 234; 1993 FW, 234; 1994 JR1, 239, 275; 1999 JU3, 61; 1999 RL95, 183; 1999 RQ36, 61; 2000 DP107, 274, 304; 2000 GD65, 205; 2001 QR322, 232; 2003 EH1, 107; 2007 PA8, 207; 2008 TC3, 264, 265; 2010 JL88, 205; 2010 TK7, 231; 2011 QF99, 232; 2012 DA14, 108; 2012 VP113, 233, 244; 2013 TX68, 64; 2014 AA, 264, 265; 2014 RC, 205; absorption band, 135–6, 137–40, 145–50, 163, 184; acapulcoite/lodranite, 87, 90, 103, 110, 168, 285; Achilles, 232; achondrite, 84, 86, 116, 187 (primitive, 84, 86, 103–4, 287); Adamcarolla, 62; Adeona family, 198; Aeternitas, 177; Agnia family, 170, 198; AKARI satellite, 192; alabandite, 76, 101; Alauda family, 198; albedo, 7, 21, 27, 185–6 (Bond, 7, 8, 9, 28, 189; geometric, 7; single scattering, 142, 143, 144, 145; visual Bond, 7; visual geometric, 7, 27, 28, 163, 185, 189, 190, 191, 192, 192, 253); Alexandra, 59; altitude, 49; Alvarez, Luis, 258; Alvarez, Walter, 258; amino acid, 81; ammonia, 223, 301; amoeboid olivine aggregate, 83; Amor, 251; Amor group, 251; Anacostia, 179; Anand, Viswanathan, 62; Angelina, 175; angrite, 87, 101, 110, 126, 168; Annefrank, 274, 275, 289; Antarctic Search for Meteorites (ANSMET), 71; Antarctica, 69–71; aphelion, 30, 251; APL, 275, 292; Apohele group, 251; Apollo, 179, 180, 251; Apollo group, 230, 251; Apollo mission, 129, 262, 299; Apophis, 20, 269, 270; Aquitania, 179; Arecibo Observatory, 206; Aristarchus, 29; Asporina, 177; asteroid chronology function, 262; Asteroid Zoo, 54; Astraea, 53; Astronautica, 61; Aten, 251; Aten group, 251; Atira, 251; Atira group, 251; atmosphere, 1, 3, 8, 43, 66, 68, 265; A-type, 163, 165, 167, 169, 170, 177–8, 192. © in this web service Cambridge University Press www.cambridge.org

(OSIRIS-Rex) Asteroid Sample Return Mission

Planetary Defense Conference 2013 IAA-PDC13-04-16 Trajectory and Mission Design for the Origins Spectral Interpretation Resource Identification Security Regolith Explorer (OSIRIS-REx) Asteroid Sample Return Mission Mark Beckmana,1, Brent W. Barbeea,2,∗, Bobby G. Williamsb,3, Ken Williamsb,4, Brian Sutterc,5, Kevin Berrya,2 aNASA/GSFC, Code 595, 8800 Greenbelt Road, Greenbelt, MD, 20771, USA bKinetX Aerospace, Inc., 21 W. Easy St., Suite 108, Simi Valley, CA 93065, USA cLockheed Martin Space Systems Company, PO Box 179, Denver CO, 80201, MS S8110, USA Abstract The Origins Spectral Interpretation Resource Identification Security Regolith Explorer (OSIRIS-REx) mission is a NASA New Frontiers mission launching in 2016 to rendezvous with near-Earth asteroid (NEA) 101955 (1999 RQ36) in 2018, study it, and return a pristine carbonaceous regolith sample in 2023. This mission to 1999 RQ36 is motivated by the fact that it is one of the most hazardous NEAs currently known in terms of Earth collision probability, and it is also an attractive science target because it is a primitive solar system body and relatively accessible in terms of spacecraft propellant requirements. In this paper we present an overview of the design of the OSIRIS-REx mission with an emphasis on trajectory design and optimization for rendezvous with the asteroid and return to Earth following regolith sample collection. Current results from the OSIRIS-REx Flight Dynamics Team are presented for optimized primary and backup mission launch windows. Keywords: interplanetary trajectory, trajectory optimization, gravity assist, mission design, sample return, asteroid 1. Introduction The Origins Spectral Interpretation Resource Identification Security Regolith Explorer (OSIRIS-REx) mission is a NASA New Frontiers mission launching in 2016.

Overcoming the Challenges Associated with Image-Based Mapping of Small Bodies in Preparation for the OSIRIS-Rex Mission to (101955) Bennu

Preprint of manuscript submitted to Earth and Space Science Overcoming the Challenges Associated with Image-based Mapping of Small Bodies in Preparation for the OSIRIS-REx Mission to (101955) Bennu D. N. DellaGiustina1, C. A. Bennett1, K. Becker1, D. R. Golish1, L. Le Corre2, D. A. Cook3†, K. L. Edmundson3, M. Chojnacki1, S. S. Sutton1, M. P. Milazzo3, B. Carcich4, M. C. Nolan1, N. Habib1, K. N. Burke1, T. Becker1, P. H. Smith1, K. J. Walsh5, K. Getzandanner6, D. R. Wibben4, J. M. Leonard4, M. M. Westermann1, A. T. Polit1, J. N. Kidd Jr.1, C. W. Hergenrother1, W. V. Boynton1, J. Backer3, S. Sides3, J. Mapel3, K. Berry3, H. Roper1, C. Drouet d’Aubigny1, B. Rizk1, M. K. Crombie7, E. K. Kinney-Spano8, J. de León9, 10, J. L. Rizos9, 10, J. Licandro9, 10, H. C. Campins11, B. E. Clark12, H. L. Enos1, and D. S. Lauretta1 1Lunar and Planetary Laboratory, University of Arizona, Tucson, AZ, USA 2Planetary Science Institute, Tucson, AZ, USA 3U.S. Geological Survey Astrogeology Science Center, Flagstaff, AZ, USA 4KinetX Space Navigation & Flight Dynamics Practice, Simi Valley, CA, USA 5Southwest Research Institute, Boulder, CO, USA 6Goddard Spaceflight Center, Greenbelt, MD, USA 7Indigo Information Services LLC, Tucson, AZ, USA 8MDA Systems, Ltd, Richmond, BC, Canada 9Instituto de Astrofísica de Canarias, Santa Cruz de Tenerife, Spain 10Departamento de Astrofísica, Universidad de La Laguna, Santa Cruz de Tenerife, Spain 11Department of Physics, University of Central Florida, Orlando, FL, USA 12Department of Physics and Astronomy, Ithaca College, Ithaca, NY, USA †Retired from this institution Corresponding author: Daniella N.

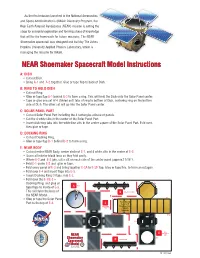

NEAR Shoemaker Spacecraft Model Instructions

As the first mission launched in the National Aeronautics and Space Administration’s (NASA) Discovery Program, the Near Earth Asteroid Rendezvous (NEAR) mission is setting the stage for asteroidal exploration and forming a base of knowledge that will be the framework for future missions. The NEAR Shoemaker spacecraft was designed and built by The Johns Hopkins University Applied Physics Laboratory, which is managing the mission for NASA. NEAR Shoemaker Spacecraft Model Instructions A: DISH • Cut out Dish. • Bring A-1 and A-2 together. Glue or tape flap to back of Dish. B: RING TO HOLD DISH • Cut out Ring. • Glue or tape flap B-1 behind B-2 to form a ring. This will hold the Dish onto the Solar Panel center. • Tape or glue one set of 4 (folded out) tabs of ring to bottom of Dish, centering ring on the bottom side of Dish. The other set will go into the Solar Panel center. C: SOLAR PANEL PART • Cut out Solar Panel Part including the 4 rectangles at base of panels. • Cut the 4 white slits in the center of the Solar Panel Part. • Insert dish/ring tabs into the white line slits in the center square of the Solar Panel Part. Fold over, then glue or tape. D: DOCKING RING • Cut out Docking Ring. • Glue or tape flap D-1 behind D-2 to form a ring. E: NEAR BODY • Cut out entire NEAR Body, center circle of E-1, and 4 white slits in the center of E-2. • Score all interior black lines so they fold easily. -

Astrodynamic Fundamentals for Deflecting Hazardous Near-Earth Objects

IAC-09-C1.3.1 Astrodynamic Fundamentals for Deflecting Hazardous Near-Earth Objects∗ Bong Wie† Asteroid Deflection Research Center Iowa State University, Ames, IA 50011, United States [email protected] Abstract The John V. Breakwell Memorial Lecture for the Astrodynamics Symposium of the 60th International Astronautical Congress (IAC) presents a tutorial overview of the astrodynamical problem of deflecting a near-Earth object (NEO) that is on a collision course toward Earth. This lecture focuses on the astrodynamic fundamentals of such a technically challenging, complex engineering problem. Although various deflection technologies have been proposed during the past two decades, there is no consensus on how to reliably deflect them in a timely manner. Consequently, now is the time to develop practically viable technologies that can be used to mitigate threats from NEOs while also advancing space exploration. ... mate change caused by this asteroid impact may have caused the dinosaur extinction. On June 30, 1908, an asteroid or comet estimated at 30 to 50 m in diameter exploded in the skies above Tunguska, Siberia. Known as the Tunguska Event, the explosion flattened trees and killed other vegetation over a 500,000-acre area with an energy level equivalent to about 500 Hiroshima nuclear bombs. In the early 1990s, scientists around the world initiated studies to prevent NEOs from striking Earth [1]. However, it is now 2009, and there is no consensus on how to reliably deflect them in a timely manner even though various mitigation approaches have been investigated during the past two decades [1-8]. Consequently, now is the time for initiating a concerted R&D effort for

Near Earth Asteroid Rendezvous: Mission Summary

Cheng: Near Earth Asteroid Rendezvous: Mission Summary 351 Near Earth Asteroid Rendezvous: Mission Summary Andrew F. Cheng The Johns Hopkins Applied Physics Laboratory On February 14, 2000, the Near Earth Asteroid Rendezvous spacecraft (NEAR Shoemaker) began the first orbital study of an asteroid, the near-Earth object 433 Eros. Almost a year later, on February 12, 2001, NEAR Shoemaker completed its mission by landing on the asteroid and acquiring data from its surface. NEAR Shoemaker’s intensive study has found an average density of 2.67 ± 0.03, almost uniform within the asteroid. Based upon solar fluorescence X-ray spectra obtained from orbit, the abundance of major rock-forming elements at Eros may be consistent with that of ordinary chondrite meteorites except for a depletion in S. Such a composition would be consistent with spatially resolved, visible and near-infrared (NIR) spectra of the surface. Gamma-ray spectra from the surface show Fe to be depleted from chondritic values, but not K. Eros is not a highly differentiated body, but some degree of partial melting or differentiation cannot be ruled out. No evidence has been found for compositional heterogeneity or an intrinsic magnetic field. The surface is covered by a regolith estimated at tens of meters thick, formed by successive impacts. Some areas have lesser surface age and were apparently more recently disturbed or covered by regolith. A small center of mass offset from the center of figure suggests regionally nonuniform regolith thickness or internal density variation. Blocks have a nonuniform distribution consistent with emplacement of ejecta from the youngest large crater.

Asteroid Surface Geophysics

Asteroid Surface Geophysics Naomi Murdoch Institut Supérieur de l’Aéronautique et de l’Espace (ISAE-SUPAERO) Paul Sánchez University of Colorado Boulder Stephen R. Schwartz University of Nice-Sophia Antipolis, Observatoire de la Côte d’Azur Hideaki Miyamoto University of Tokyo The regolith-covered surfaces of asteroids preserve records of geophysical processes that have occurred both at their surfaces and sometimes also in their interiors. As a result of the unique micro-gravity environment that these bodies possess, a complex and varied geophysics has given birth to fascinating features that we are just now beginning to understand. The processes that formed such features were first hypothesised through detailed spacecraft observations and have been further studied using theoretical, numerical and experimental methods that often combine several scientific disciplines. These multiple approaches are now merging towards a further understanding of the geophysical states of the surfaces of asteroids. In this chapter we provide a concise summary of what the scientific community has learned so far about the surfaces of these small planetary bodies and the processes that have shaped them. We also discuss the state of the art in terms of experimental techniques and numerical simulations that are currently being used to investigate regolith processes occurring on small-body surfaces and that are contributing to the interpretation of observations and the design of future space missions. 1. INTRODUCTION Before the first spacecraft encounters with asteroids, ... wide range of geological features have been observed on the surfaces of asteroids and other small bodies such as the nucleus of comet 103P/Hartley 2 (Thomas et al.

“Target Selection for a HAIV Flight Demo Mission,” IAA-PDC13-04

Planetary Defense Conference 2013 IAA-PDC13-04-08 Target Selection for a Hypervelocity Asteroid Intercept Vehicle Flight Validation Mission Sam Wagnera,1,∗, Bong Wieb,2, Brent W. Barbeec,3 aIowa State University, 2271 Howe Hall, Room 2348, Ames, IA 50011-2271, USA bIowa State University, 2271 Howe Hall, Room 2325, Ames, IA 50011-2271, USA cNASA/GSFC, Code 595, 8800 Greenbelt Road, Greenbelt, MD, 20771, USA, 301.286.1837 Abstract Asteroids and comets have collided with the Earth in the past and will do so again in the future. Throughout Earth’s history these collisions have played a significant role in shaping Earth’s biological and geological histories. The planetary defense community has been examining a variety of options for mitigating the impact threat of asteroids and comets that approach or cross Earth’s orbit, known as near-Earth objects (NEOs). This paper discusses the preliminary study results of selecting small (100-m class) NEO targets and mission design trade-offs for flight validating some key planetary defense technologies. In particular, this paper focuses on a planetary defense demo mission for validating the effectiveness of a Hypervelocity Asteroid Intercept Vehicle (HAIV) concept, currently being investigated by the Asteroid Deflection Research Center (ADRC) for a NIAC (NASA Innovative Advanced Concepts) Phase 2 project. Introduction Geological evidence shows that asteroids and comets have collided with the Earth in the past and will do so in the future. Such collisions have played an important role in shaping the Earth’s biological and geological histories. Many researchers in the planetary defense community have examined a variety of options for mitigating the impact threat of Earth-approaching or Earth-crossing asteroids and comets, known as near-Earth objects (NEOs).