Style Transfer Generative Adversarial Networks: Learning to Play Chess

Recommended publications

Application of Chess Theory

Application of Chess Theory, by Efim Geller, K.P. Neat | 270 pages | 01 Jan 1995 | EVERYMAN CHESS | 9781857440676 | English | London, United Kingdom. The game of chess is commonly divided into three phases: the opening, the middlegame, and the endgame. Those who write about chess theory, who are often also eminent players, are referred to as "theorists" or "theoreticians". The development of theory in all of these areas has been assisted by the vast literature on the game. The preeminent chess historian H. Murray wrote in his magnum opus A History of Chess that "The game possesses a literature which in contents probably exceeds that of all other games combined." Wood estimated that the number had increased to about 20,000. No one knows how many have been printed. The White Collection [10] at the Cleveland Public Library contains over 32,000 chess books and serials, including over 6,000 bound volumes of chess periodicals. The earliest printed work on chess theory whose date can be established with some exactitude is Repeticion de Amores y Arte de Ajedrez by the Spaniard Luis Ramirez de Lucena. Fifteen years after Lucena's book, the Portuguese apothecary Pedro Damiano published the book Questo libro e da imparare giocare a scachi et de la partiti in Rome. It includes analysis of the Queen's Gambit Accepted, showing what happens when Black tries to keep the gambit pawn with Nf3 f6? These books and later ones discuss games played with various openings, opening traps, and the best way for both sides to play.

Chess Openings

Chess Openings. PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Tue, 10 Jun 2014 09:50:30 UTC

Contents

Overview: Chess opening

e4 Openings: King's Pawn Game; Open Game; Semi-Open Game

e4 Openings – King's Knight Openings: King's Knight Opening; Ruy Lopez; Ruy Lopez, Exchange Variation; Italian Game; Hungarian Defense; Two Knights Defense; Fried Liver Attack; Giuoco Piano; Evans Gambit; Italian Gambit; Irish Gambit; Jerome Gambit; Blackburne Shilling Gambit; Scotch Game; Ponziani Opening; Inverted Hungarian Opening; Konstantinopolsky Opening; Three Knights Opening; Four Knights Game; Halloween Gambit; Philidor Defence; Elephant Gambit; Damiano Defence; Greco Defence; Gunderam Defense; Latvian Gambit; Rousseau Gambit; Petrov's Defence

e4 Openings – Sicilian Defence: Sicilian Defence; Sicilian Defence, Alapin Variation; Sicilian Defence, Dragon Variation; Sicilian Defence, Accelerated Dragon; Sicilian, Dragon, Yugoslav attack, 9.Bc4; Sicilian Defence, Najdorf Variation; Sicilian Defence, Scheveningen Variation; Chekhover Sicilian; Wing Gambit; Smith-Morra Gambit

e4 Openings – Other variations: Bishop's Opening; Portuguese Opening; King's Gambit; Fischer Defense; Falkbeer Countergambit; Rice Gambit; Center Game; Danish Gambit; Lopez Opening; Napoleon Opening; Parham Attack; Vienna Game; Frankenstein-Dracula Variation; Alapin's Opening; French Defence; Caro-Kann Defence; Pirc Defence; Pirc Defence, Austrian Attack; Balogh Defense; Scandinavian Defense; Nimzowitsch Defence; Alekhine's Defence; Modern Defense; Monkey's Bum; Owen's Defence; St.

For Emblems and Decorations P. 8 Bringing Back Lost Items P. 16 a Harbour of Hospitality P. 34

Maecenas, April 2016, Issue 4/23. Russian with English pages. In this issue: For Emblems and Decorations (p. 8); Bringing Back Lost Items (p. 16); A Harbour of Hospitality (p. 34); Selflessness Has No Limits (p. 4); No Break for the Crisis (p. 28); A Carmen Like No Other (p. 46). Fair Government, Strong Business, Prosperous Citizens.

Editor-in-Chief: Arkady Sosnov. Art Director: Igor Domrachev. Photographer: Evgeny Sinyaver. Projects: Alla Bernarducci. Copy Editor: Elena Morozova. Translator: David Hicks. Editorial Office: 5 Universitetskaya nab, flat 213, 199034, St. Petersburg. Tel. / Fax +7 (812) 328 2012, tel. +7 (921) 909 5151, e-mail: [email protected] Website: www.rusmecenat.ru. Chairman of the Board of Trustees: M. B. Piotrovsky. Founder: Arkady Sosnov. Publisher: St Petersburg Social Organization ‘Journalist Centre of International Co-operation’. Address: 5 Universitetskaya nab, flat 212, 199034, St.

Welcome! Regeneration of Emotions. Last December at the St. Petersburg Cultural Forum I spoke to the well-known drama teacher Mikhail Borisov, the curator of a production of Carmen in Moscow in which people with development peculiarities per… …graphs for?’ I asked him through the interpreter (with the unspoken thought: ‘if you can’t see’). ‘For my wife’, he replied via the interpreter’s palm. ‘It’s such a beautiful room. She’ll look at it and will want to come’.

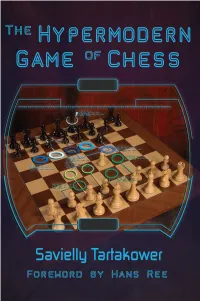

The Hypermodern Game of Chess

The Hypermodern Game of Chess, by Savielly Tartakower. Foreword by Hans Ree. 2015, Russell Enterprises, Inc., Milford, CT USA. © Copyright 2015 Jared Becker. ISBN: 978-1-941270-30-1. All Rights Reserved. No part of this book may be used, reproduced, stored in a retrieval system or transmitted in any manner or form whatsoever or by any means, electronic, electrostatic, magnetic tape, photocopying, recording or otherwise, without the express written permission from the publisher except in the case of brief quotations embodied in critical articles or reviews. Published by: Russell Enterprises, Inc., PO Box 3131, Milford, CT 06460 USA. http://www.russell-enterprises.com [email protected] Translated from the German by Jared Becker. Editorial Consultant: Hannes Langrock. Cover design by Janel Norris. Printed in the United States of America.

Table of Contents: Foreword by Hans Ree; From the Translator; Introduction; The Three Phases of A Game; Alekhine’s Defense. Part I – Open Games: Spanish Torture; Spanish; José Raúl Capablanca; The Accumulation of Small Advantages; Emanuel Lasker; The Canticle of the Combination; Spanish with 5...Nxe4; Dr. Siegbert Tarrasch and Géza Maróczy as Hypermodernists; What constitutes a mistake?; Spanish Exchange Variation; Steinitz Defense; The Doctrine of Weaknesses; Spanish Three and Four Knights’ Game; A Victory of Methodology; Efim Bogoljubow

Raetsky, Alexander & Chetverik, Maxim

A. Raetsky, M. Chetverik. NO PASSION FOR CHESS FASHION: Fierce Openings For Your New Repertoire. © 2011 A. Raetsky, M. Chetverik. English Translation © 2011 Mongoose Press. All rights reserved. No part of this book may be reproduced or transmitted in any form by any means, electronic or mechanical, including photocopying, recording, or by an information storage and retrieval system, without written permission from the Publisher. Publisher: Mongoose Press, 1005 Boylston Street, Suite 324, Newton Highlands, MA 02461. [email protected] www.MongoosePress.com ISBN 978-1-936277-26-1. Library of Congress Control Number: 2011925050. Distributed to the trade by National Book Network, [email protected], 800-462-6420. For all other sales inquiries please contact the publisher. Translated by: Sarah Hurst. Layout: Andrey Elkov. Editor: Sean Marsh. Cover Design: Kaloyan Nachev. Printed in China. First English edition 0987654321.

CONTENTS

From the authors

1. A. Raetsky. THE KING'S GAMBIT. The variation 2...ef 3.Nf3 d6 4.d4 g5 5.h4 g4 6.Ng1 f5

2. A. Raetsky. PETROV'S DEFENSE. The variation 3.d4 Nxe4 4.de Bc5

3. M. Chetverik. THE RUY LOPEZ. The Alapin Defense 3...Bb4

4. A. Raetsky. THE SCANDINAVIAN DEFENSE. The variation 2...Nf6 3.d4 Nxd5 4.c4 Nb4

5. M. Chetverik. ALEKHINE'S DEFENSE. The Cambridge Gambit 2.e5 Nd5 3.d4 d6 4.c4 Nb6 5.f4 g5

6. A. Raetsky. THE FRENCH DEFENSE. The variation 3.e5 c5 4.Qg4

7. M. Chetverik. THE St. GEORGE DEFENSE

World Chess Hall of Fame Brochure

ABOUT US. The World Chess Hall of Fame (WCHOF) is a nonprofit, collecting institution situated in the heart of Saint Louis. The WCHOF is the only institution of its kind and offers a variety of programming to explore the dynamic relationship between art and chess, including educational outreach initiatives that provide context and meaning to the game and its continued cultural impact. Saint Louis has quickly become

Additionally, the World Chess Hall of Fame offers interpretive programs that provide unique and exciting ways to experience art, history, science, and sport through chess. Since its inception, chess has challenged artists and craftsmen to interpret the game through a variety of mediums resulting in chess sets of exceptional artistic skill and creativity. The WCHOF seeks to present the work of these craftsmen and artists while educating visitors about the game itself.

THE HALL OF FAME. The World Chess Hall of Fame is home to both the World and U.S. Halls of Fame. Located on the third floor of the WCHOF, the Hall of Fame honors World and U.S. inductees with a plaque listing their contributions to the game of chess and features rotating exhibitions from the permanent collection. The collection, including the Paul Morphy silver set, an early prototype of the Chess Challenger, and Bobby Fischer memorabilia, is dedicated to the history of chess and the accomplishments of the Hall of Fame inductees. As of May 2013, there are 19 members of the World Hall of Fame and 52 members of the U.S. Hall of Fame.

WORLD HALL OF FAME INDUCTEES: 2013, 2008, 2003, 2001

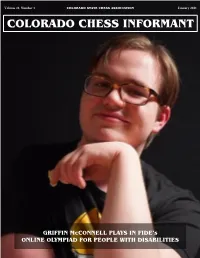

January 2021 COLORADO CHESS INFORMANT

COLORADO CHESS INFORMANT. Volume 48, Number 1. COLORADO STATE CHESS ASSOCIATION. January 2021. GRIFFIN McCONNELL PLAYS IN FIDE’s ONLINE OLYMPIAD FOR PEOPLE WITH DISABILITIES

From the Editor. And so we begin the New Year with renewed hope that can only arrive none too soon... Hello everyone and Happy New Year! I hope that you have enjoyed the holiday season as much as you can and that you are safe and as happy as can be. I understand that a few of our fellow Colorado chess players have been afflicted with Covid-19 but as I understand, they are all on the road to recovery or have already healed up and are back at life full tilt. As you can read in this issue, the CSCA Board of Directors are working hard to at least plan on having some in-person tournaments held this year. We all hope that it does indeed come to pass - so stay tuned. The lead story in this issue is about a remarkable young man and his wonderful play at FIDE’s Online Olympiad for People with

The Colorado State Chess Association, Incorporated, is a Section 501(C)(3) tax exempt, non-profit educational corporation formed to promote chess in Colorado. Contributions are tax deductible. Dues are $15 a year. Youth (under 20) and Senior (65 or older) memberships are $10. Family memberships are available to additional family members for $3 off the regular dues. Scholastic tournament membership is available for $3. Send address changes to - Attn: Alexander Freeman to the email address [email protected].

Mikhail Chigorin: The Creative Genius

Mikhail Chigorin: The Creative Genius, by Jimmy Adams. Published 2016-04-15. Original language: English.

0 of 0 people found the following review helpful. Excellent. By B. W. Thew. A wonderful book, lots of games with excellent notes. Also quite a good read with a very interesting biography of one of the all time greats.

17 of 17 people found the following review helpful. An outstanding portrait of a 19th century chess artist and his era! By Skinnyhorse. This is a preliminary review as I have only gone through the first 220 pages. So far it has been an elegant journey through the later half of 19th century chess history. This is a wonderful book about one of the best chessplayers of the 19th century. Mikhail Chigorin twice played for the world championship, losing to Wilhelm Steinitz 10-6 and the second time by 10-8. Chigorin had one terrible weakness as a practical player at the World Championship level: he had no coherent system to deal with d-pawn openings; in the first match, Chigorin lost 7 of 8 games when Steinitz opened 1. Nf3 and 2. d4 (or 1.

Eric Schiller the Rubinstein Attack!

The Rubinstein Attack: A Chess Opening Strategy for White. Eric Schiller. Copyright © 2005 Eric Schiller. All rights reserved. BrownWalker Press, Boca Raton, Florida USA • 2005. ISBN: 1-58112-454-6. BrownWalker.com

If you are looking for an effective chess opening strategy to use as White, this book will provide you with everything you need to use the Rubinstein Attack to set up aggressive attacking formations. Building on ideas developed by the great Akiba Rubinstein, this book offers an opening system for White against most Black defensive formations. The Rubinstein Attack is essentially an opening of ideas rather than memorized variations. Move order is rarely critical; the flow of the game will usually be the same regardless of initial move order. As you play through the games in this book you will see each of White’s major strategies put to use against a variety of defensive formations. Pay close attention to the means White uses to carry out the attack. You’ll see the same patterns repeated over and over again, and you can use these strategies to break down your opponent’s defenses. The basic theme of each game is indicated in the title in the game header. The Rubinstein is a highly effective opening against most defenses to 1.d4, but it is not particularly effective against the King’s Indian or Gruenfeld formations. To handle those openings, you’ll need to play c4 and enter some of the main lines, though you can choose solid formations with a pawn at d3.

2001 Knoxville Chess Championship Bulletin

David E. Burris Memorial Knoxville City Chess Championship, November 2001

| No. | Player | Rating | 1 | 2 | 3 | 4 | 5 | 6 | Total |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Jonathan A. Alexander | 1615 | x | 1 | 0 | 1 | 1 | .5 | 3.5 |
| 2 | John Eric Vaughan | 1709 | 0 | x | 0 | 1 | 1 | 1 | 3 |
| 3 | Peter Bereolos | 2351 | 1 | 1 | x | 1 | 1F | 1 | 5 |
| 4 | Tim Schulze | 1499 | 0 | 0 | 0 | x | 0 | 0 | 0 |
| 5 | Haresh Mirani | 1673 | 0 | 0 | 0F | 1 | x | 0F | 1 |
| 6 | Jon L. Murray | 1551 | .5 | 0 | 0 | 1 | 1F | x | 2.5 |

(TD: John Anthony) http://members.aol.com/KnoxChessClub

Round 1: Wednesday, Nov. 7. Bereolos 1-0 Murray; Mirani 1-0 Schulze; Alexander 1-0 Vaughan.

(1) Bereolos,P (2351) - Murray,J (1551) [D35] (Round 1), 26.11.2001 [Annotations by NM Peter Bereolos]

1. d4 d5 2. c4 c6 Jon always seems to throw something new at me. The other 3 times I opened 1.d4 against him saw a QGA, a Chigorin's Defense, and a Grunfeld. 3. Nc3 Nf6 4. Nf3 e6 5. Bg5 Nbd7 6. cxd5 exd5 7. e3 Bd6 This move is much rarer than the usual 7...Be7, but hasn’t scored much worse. My impression was that the Black minor pieces have a hard time getting to good squares in this line. 8. Bd3 h6 9. Bh4 O-O 10. O-O Qc7 11. Rb1 Looking at things now, it’s probably more direct to play for the setup with rooks on c1 and e1 immediately. However, the threat of the minority attack with b2-b4-b5 induced him into making some queenside weaknesses.

Berlin 1881 Chess Tournament

Berlin 1881 chess tournament. From Wikipedia, the free encyclopedia. The Deutscher Schachbund (DSB, the German Chess Federation) had been founded in Leipzig on July 18, 1877. When the next meeting took place in the Schützenhaus, Leipzig on July 15, 1879, sixty-two clubs had become members of the federation. Hofrat Dr. Rudolf von Gottschall became Chairman and Hermann Zwanzig the General Secretary.[1] When foreign players were invited to Berlin in 1881, an important and successful formula was completed. A master tournament was organised every second year, and Germans could take part in many groups, with talented players qualifying for master tournaments by earning a master title in the Hauptturnier.[2] The Berlin 1881 chess tournament (the second DSB Congress, 2. DSB-Kongreß), organised by Hermann Zwanzig and Emil Schallopp, took place in Berlin from August 29 to September 17, 1881.[3] The brightest lights among the German participants were Louis Paulsen, his brother Wilfried Paulsen, and Johannes Minckwitz. Great Britain was represented by Joseph Henry Blackburne, and the United States by James Mason, a master from Ireland. Mikhail Chigorin travelled from Russia, and two great masters from Poland, Szymon Winawer and Johannes Zukertort, also participated. Karl Pitschel, from the Austro-Hungarian Empire, arrived and played his games in the first three rounds, but was unable to complete the tournament. The eighteen collected masters constituted a field of strength that had not been seen since

Queen's Gambit

C&O Family Chess Center, www.chesscenter.net. Openings for Study. The Queen’s Gambit; D06 – D69

The Queen’s Gambit is another of the oldest openings, dating back as far as the Gottingen manuscript of 1490, and was analyzed by two early chess writers, Dr. Alessandro Salvio and Gioachino Greco.

1.d4 d5 2.c4 …

[Diagram: position after 2.c4 … Black to move.]

As in many King’s pawn openings, White’s basic idea is to get his two center pawns developed and create the “big center” (pawns at d4 and e4). … Semi-Slav), or some other move entirely. If Black “accepts” the gambit pawn by 2…dxc4 the big center is ready and waiting. White will attempt rapid development of his pieces with his small sacrifice and center control. Black, having conceded the center, may try to advance …c7-c5 or even …e7-e5! if possible.

The following 30 short games from historical tournament and master play are all from the QGA, D20 – D29. Re-played on a board, they can help you get a feel for the opening and some of the mistakes to look for and avoid (or punish in an opponent’s play). Notes are very basic and the games are divided evenly as to who wins. There are many more lines in the QGD (35 games), which will be introduced following the QGA.

QGA D20 – D29. White Wins (15 Games)

(1) Greco,G - NN [D20] Europe, 1620. 1.d4 d5 2.c4 dxc4 3.e3 b5 4.a4 c6 5.axb5 cxb5?? [5...Be6] 6.Qf3 [6.Qf3 Nc6 7.Qxc6+ Bd7 8.Qa6] 1–0

(2) Greco,G - NN [D20]