1 Introduction to Logic

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications

-

Tt-Satisfiable

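CMPSCI 601: Recall From Last Time (Lecture 6). Boolean syntax. Boolean variables: a boolean variable represents an atomic statement that may be either true or false. There may be infinitely many of these available. Boolean expressions: the atomic expressions are the variables $x_i$, $\top$ ("top"), and $\bot$ ("bottom"); if $\alpha$ and $\beta$ are Boolean expressions, then so are $(\neg\alpha)$, $(\alpha \wedge \beta)$, $(\alpha \vee \beta)$, $(\alpha \to \beta)$, and $(\alpha \leftrightarrow \beta)$. Note that any particular expression is a finite string, and thus may use only finitely many variables. A literal is an atomic expression or its negation: $x_i$, $\neg x_i$, $\top$, $\bot$. As you may know, the choice of operators is somewhat arbitrary as long as we have a complete set, one that suffices to simulate all boolean functions. On HW#1 we argued that $\{\neg, \wedge\}$ is already a complete set.

CMPSCI 601: Boolean Logic: Semantics (Lecture 6). A boolean expression has a meaning, a truth value of true or false, once we know the truth values of all the individual variables. A truth assignment is a function $\mathcal{A} : V \to \{\text{true}, \text{false}\}$, where $V$ is the set of all variables. An assignment is appropriate to an expression $\alpha$ if it assigns a value to every variable used in $\alpha$. The double-turnstile symbol $\models$ (read as "models") denotes the relationship between a truth assignment and an expression. The statement "$\mathcal{A} \models \alpha$" (read as "$\mathcal{A}$ models $\alpha$") simply says "$\alpha$ is true under $\mathcal{A}$". If $\mathcal{A}$ is appropriate to $\alpha$, we define when $\mathcal{A} \models \alpha$ by induction on the structure of $\alpha$: $\top$ is true and $\bot$ is false under any $\mathcal{A}$; a variable $x_i$ is true iff $\mathcal{A}$ says that it is; if $\alpha = (\neg\beta)$, $\mathcal{A} \models \alpha$ iff $\mathcal{A} \not\models \beta$; if $\alpha = (\beta \wedge \gamma)$, $\mathcal{A} \models \alpha$ iff both $\mathcal{A} \models \beta$ and $\mathcal{A} \models \gamma$; if $\alpha = (\beta \vee \gamma)$, $\mathcal{A} \models \alpha$ iff either $\mathcal{A} \models \beta$ or $\mathcal{A} \models \gamma$ or both; if $\alpha = (\beta \to \gamma)$, $\mathcal{A} \models \alpha$ unless $\mathcal{A} \models \beta$ and $\mathcal{A} \not\models \gamma$; if $\alpha = (\beta \leftrightarrow \gamma)$, $\mathcal{A} \models \alpha$ iff $\beta$ and $\gamma$ are both true or both false under $\mathcal{A}$.

Definition 6.1. A boolean expression $\alpha$ is satisfiable iff there exists an assignment $\mathcal{A}$ appropriate to $\alpha$ such that $\mathcal{A} \models \alpha$. -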
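The inductive definition of $\mathcal{A} \models \alpha$ and Definition 6.1 translate almost line for line into code. The sketch below is not from the lecture. The tuple encoding of expressions and the names `models` and `satisfiable` are illustrative assumptions. Satisfiability is decided by brute-force enumeration of all $2^n$ assignments to the $n$ variables, which takes exponential time.

```python
from itertools import product

# Hypothetical tuple encoding (not from the lecture):
#   "T", "F"                -> top, bottom
#   ("var", name)           -> a boolean variable
#   ("not", a), ("and", a, b), ("or", a, b), ("imp", a, b), ("iff", a, b)

def variables(e):
    """Return the set of variable names used in expression e."""
    if e in ("T", "F"):
        return set()
    if e[0] == "var":
        return {e[1]}
    return set().union(*(variables(sub) for sub in e[1:]))

def models(A, e):
    """A |= e, defined by induction on the structure of e.
    A is a dict from variable names to bools and must be appropriate to e."""
    if e == "T":
        return True
    if e == "F":
        return False
    op = e[0]
    if op == "var":
        return A[e[1]]
    if op == "not":
        return not models(A, e[1])
    b, c = models(A, e[1]), models(A, e[2])
    if op == "and":
        return b and c
    if op == "or":
        return b or c
    if op == "imp":
        return (not b) or c  # true unless b is true and c is false
    if op == "iff":
        return b == c
    raise ValueError(f"unknown operator {op!r}")

def satisfiable(e):
    """e is satisfiable iff some appropriate assignment A has A |= e.
    Tries every assignment to the variables of e (2**n of them)."""
    names = sorted(variables(e))
    for values in product([False, True], repeat=len(names)):
        if models(dict(zip(names, values)), e):
            return True
    return False
```

For example, with `x = ("var", "x")`, the contradiction `("and", x, ("not", x))` is reported as unsatisfiable, and `("or", x, ("not", x))` is reported as satisfiable.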

Verifying the Unification Algorithm in LCF

Verifying the Unification Algorithm in LCF. Lawrence C. Paulson, Computer Laboratory, Corn Exchange Street, Cambridge CB2 3QG, England. August 1984. Abstract. Manna and Waldinger's theory of substitutions and unification has been verified using the Cambridge LCF theorem prover. A proof of the monotonicity of substitution is presented in detail, as an example of interaction with LCF. Translating the theory into LCF's domain-theoretic logic is largely straightforward. Well-founded induction on a complex ordering is translated into nested structural inductions. Correctness of unification is expressed using predicates for such properties as idempotence and most-generality. The verification is presented as a series of lemmas. The LCF proofs are compared with the original ones, and with other approaches. It appears difficult to find a logic that is both simple and flexible, especially for proving termination. Contents: 1 Introduction; 2 Overview of unification; 3 Overview of LCF; 3.1 The logic PPLAMBDA; 3.2 The meta-language ML; 3.3 Goal-directed proof; 3.4 Recursive data structures; 4 Differences between the formal and informal theories; 4.1 Logical framework; 4.2 Data structure for expressions; 4.3 Sets and substitutions; 4.4 The induction principle; 5 Constructing theories in LCF; 5.1 Expressions -

pst-plot — Plotting Functions in “Pure” LaTeX

pst-plot — plotting functions in “pure” LaTeX. Commonly, one simply wants to plot a function as part of a LaTeX document. Using some PostScript tricks, you can graph an arbitrary function of one variable, including implicitly defined functions. The commands described on this worksheet require that the following lines appear in your document header (before \begin{document}): \usepackage{pst-plot} \usepackage{pstricks}. The full PSTricks manual (including the pst-plot documentation) is available at http://www.risc.uni-linz.ac.at/institute/systems/documentation/TeX/tex/ and a good page for showing the power of what you can do with PSTricks is http://www.pstricks.de/ Reverse Polish Notation (postfix notation). Reverse Polish notation (RPN) is a modification of Polish notation, which was created by the logician Jan Łukasiewicz (one of Alfred Tarski's teachers). The advantage of these notations is that they do not require parentheses to control the order of operations in an expression. For a computer, this means that parsing an RPN expression is trivial, whereas parsing an expression in standard (infix) notation can take considerably more computation. At the most basic level, an expression such as 1 + 2 becomes 1 2 +. For more complicated expressions, we need the concept of a stack. The stack is just the list of all numbers that have not been used yet: each operation takes its operands off the top of the stack, and the result of that operation is left on the stack. Thus, we could write the sum of all integers from 1 to 10 as either 1 2 + 3 + 4 + 5 + 6 + 7 + 8 + 9 + 10 + or 1 2 3 4 5 6 7 8 9 10 + + + + + + + + +. In both cases the result 55 is left on the stack. -
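The stack discipline described above can be sketched as a small evaluator. The token set is an assumption taken from the worksheet's arithmetic examples (`+`, `-`, `*`, `/`). PostScript, which pst-plot uses, spells these operators `add`, `sub`, `mul`, and `div` and has many more.

```python
import operator

# Binary operators recognized by this sketch (an assumption for illustration).
OPS = {"+": operator.add, "-": operator.sub,
       "*": operator.mul, "/": operator.truediv}

def eval_rpn(expr):
    """Evaluate a whitespace-separated RPN expression using a stack."""
    stack = []
    for token in expr.split():
        if token in OPS:
            right = stack.pop()  # the operand pushed most recently
            left = stack.pop()
            stack.append(OPS[token](left, right))
        else:
            stack.append(float(token))
    if len(stack) != 1:
        raise ValueError(f"malformed expression, stack is {stack}")
    return stack[0]
```

Both ways of writing the sum from 1 to 10 leave 55 as the single value on the stack. The operand order matters for non-commutative operators, so `5 1 -` gives 4.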

Chapter 9: Initial Theorems About Axiom System AS1

Chapter 9: Initial Theorems about Axiom System AS1. Contents: 1. Theorems in Axiom Systems versus Theorems about Axiom Systems; 2. Proofs about Axiom Systems; 3. Initial Examples of Proofs in the Metalanguage about AS1; 4. The Deduction Theorem; 5. Using Mathematical Induction to do Proofs about Derivations; 6. Setting up the Proof of the Deduction Theorem; 7. Informal Proof of the Deduction Theorem; 8. The Lemmas Supporting the Deduction Theorem; 9. Rules R1 and R2 are Required for any DT-MP-Logic; 10. The Converse of the Deduction Theorem and Modus Ponens; 11. Some General Theorems About; 12. Further Theorems About AS1; 13. Appendix: Summary of Theorems about AS1. Hardegree, -

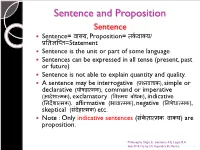

Logic: Sentence and Propositions

Sentence and Proposition. Sentence = vākya (Hindi); proposition = tarkavākya or pratijñapti (Hindi) = statement.

Sentence. A sentence is a unit or part of some language. Sentences can be expressed in any tense (present, past, or future). A sentence cannot express quantity and quality. A sentence may be interrogative, simple or declarative, command or imperative, exclamatory, indicative, affirmative, negative, skeptical, etc. Note: only indicative sentences are propositions. Not all kinds of sentences are propositions; only those sentences that can be evaluated as true or false are called propositions. Each sentence is governed by the grammar of its own language (for example, a Hindi sentence is governed by Hindi grammar). Sentences are correct or incorrect (pure or impure). Sentences may be either true or false.

Proposition. Propositions are regarded as the material of our reasoning, and propositions and statements are regarded as the same. A proposition is the unit of logic. A proposition is always in the present tense (sentences: any tense). A proposition can express quantity and quality (sentences cannot). The meaning of a sentence is called a proposition. Sometimes more than one sentence expresses only one proposition. Example: 1. Pānī baras rahā hai (Hindi); 2. [a Sanskrit sentence with the same meaning] (Sanskrit); 3. It is raining (English). All the above sentences have only one meaning, that is, one proposition. (Philosophy Dept, E-contents-01 (Logic, B.A. Sem. III & IV) by Dr Rajendra Kr. Verma) -

Inference Versus Consequence, Göran Sundholm, Leyden University

To appear in LOGICA Yearbook, 1998, Czech Acad. Sc., Prague. Inference versus Consequence*. Göran Sundholm, Leyden University. The following passage, hereinafter "the passage", could have been taken from a modern textbook.¹ It is prototypical of current logical orthodoxy: The inference (*) $A_1, \ldots, A_k$. Therefore: $C$ is valid if and only if whenever all the premises $A_1, \ldots, A_k$ are true, the conclusion $C$ is true also. When (*) is valid, we also say that $C$ is a logical consequence of $A_1, \ldots, A_k$. We write $A_1, \ldots, A_k \models C$. It is my contention that the passage does not properly capture the nature of inference, since it does not distinguish between valid inference and logical consequence. The view that the validity of inference is reducible to logical consequence has been made famous in our century by Tarski, and also by Wittgenstein in the Tractatus and by Quine, who both reduced valid inference to the logical truth of a suitable implication. All three were anticipated by Bolzano. Bolzano considered Urteile (judgements) of the form "A is true", where A is a Satz an sich (proposition in the modern sense). Such a judgement is correct (richtig) when the proposition A, which serves as the judgemental content, really is true. A correct judgement is an Erkenntnis, that is, a piece of knowledge. Similarly, for Bolzano, the general form I of inference

* I am indebted to my colleague Dr. E. P. Bos, who read an early version of the manuscript and offered valuable comments.
¹ Could have been so taken and almost was; cf. -
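For propositional formulas, the orthodox definition quoted in the passage can be checked mechanically by searching for a counter-model. This is a hypothetical sketch of the consequence relation $A_1, \ldots, A_k \models C$ only. It models exactly the reduction of inference to consequence that Sundholm argues against, not his own account of inference. Representing formulas as Python functions is an assumption made for brevity.

```python
from itertools import product

def entails(premises, conclusion, variables):
    """Return True iff every assignment that makes all premises true also
    makes the conclusion true, i.e. premises |= conclusion.
    Each formula is a function from an assignment dict to a bool."""
    for values in product([False, True], repeat=len(variables)):
        assignment = dict(zip(variables, values))
        if all(p(assignment) for p in premises) and not conclusion(assignment):
            return False  # counter-model: premises true, conclusion false
    return True
```

With premises $A$ and $A \to B$, `entails` confirms that $B$ follows (modus ponens). With premises $B$ and $A \to B$, it finds the counter-model where $A$ is false, so $A$ does not follow (affirming the consequent).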

First Order Logic and Nonstandard Analysis

First Order Logic and Nonstandard Analysis. Julian Hartman, September 4, 2010. Abstract: This paper is intended as an exploration of nonstandard analysis and of the rigorous use of infinitesimals and infinite elements to explore properties of the real numbers. I first define and explore first order logic and model theory. Then I prove the compactness theorem and use it to form a nonstandard structure of the real numbers. Using this nonstandard structure, it is easy to do various proofs without the limits they would otherwise require. Contents: 1 Introduction; 2 An Introduction to First Order Logic; 2.1 Propositional Logic; 2.2 Logical Symbols; 2.3 Predicates, Constants and Functions; 2.4 Well-Formed Formulas; 3 Models; 3.1 Structure; 3.2 Truth; 3.2.1 Satisfaction; 4 The Compactness Theorem; 4.1 Soundness and Completeness; 5 Nonstandard Analysis; 5.1 Making a Nonstandard Structure; 5.2 Applications of a Nonstandard Structure; 6 Sources. 1 Introduction: The founders of modern calculus had a less than perfect understanding of the nuts and bolts of what made it work. Both Newton and Leibniz used infinitesimals without a rigorous understanding of what they were. Infinitely small numbers that were nonetheless nonzero were hard for mathematicians to accept, and with the rigorous development of limits by the likes of Cauchy and Weierstrass, the discussion of infinitesimals subsided. Now, using first order logic for nonstandard analysis, it is possible to create a model of the real numbers that has the same first-order properties as the traditional conception of the real numbers, but also has rigorously defined infinite and infinitesimal elements. -
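Here is an illustration of what a proof "without limits" looks like. It is a standard textbook example, not taken from this paper. The derivative of $f(x) = x^2$ can be computed with a nonzero infinitesimal $\varepsilon$ and the standard part function $\operatorname{st}$, which sends each finite hyperreal to the unique real number infinitely close to it:

```latex
\frac{f(x+\varepsilon) - f(x)}{\varepsilon}
  = \frac{(x+\varepsilon)^2 - x^2}{\varepsilon}
  = \frac{2x\varepsilon + \varepsilon^2}{\varepsilon}
  = 2x + \varepsilon,
\qquad
f'(x) = \operatorname{st}(2x + \varepsilon) = 2x.
```

The leftover $\varepsilon$ is discarded by taking the standard part, so no limit is involved.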

Unification: a Multidisciplinary Survey

Unification: A Multidisciplinary Survey. KEVIN KNIGHT, Computer Science Department, Carnegie-Mellon University, Pittsburgh, Pennsylvania 15213-3890. The unification problem and several variants are presented. Various algorithms and data structures are discussed. Research on unification arising in several areas of computer science is surveyed; these areas include theorem proving, logic programming, and natural language processing. Sections of the paper include examples that highlight particular uses of unification and the special problems encountered. Other topics covered are resolution, higher order logic, the occur check, infinite terms, feature structures, equational theories, inheritance, parallel algorithms, generalization, lattices, and other applications of unification. The paper is intended for readers with a general computer science background; no specific knowledge of any of the above topics is assumed. Categories and Subject Descriptors: E.1 [Data Structures]: Graphs; F.2.2 [Analysis of Algorithms and Problem Complexity]: Nonnumerical Algorithms and Problems: Computations on discrete structures, Pattern matching; I.1.3 [Algebraic Manipulation]: Languages and Systems; I.2.3 [Artificial Intelligence]: Deduction and Theorem Proving; I.2.7 [Artificial Intelligence]: Natural Language Processing. General Terms: Algorithms. Additional Key Words and Phrases: Artificial intelligence, computational complexity, equational theories, feature structures, generalization, higher order logic, inheritance, lattices, logic programming, natural language -

On Pocrims and Hoops

On Pocrims and Hoops. Rob Arthan & Paulo Oliva, August 10, 2018 (arXiv:1404.0816v2 [math.LO], 16 Oct 2014). Abstract: Pocrims and suitable specialisations thereof are structures that provide the natural algebraic semantics for a minimal affine logic and its extensions. Hoops comprise a special class of pocrims that provide algebraic semantics for what we view as an intuitionistic analogue of the classical multi-valued Łukasiewicz logic. We present some contributions to the theory of these algebraic structures. We give a new proof that the class of hoops is a variety. We use a new indirect method to establish several important identities in the theory of hoops: in particular, we prove that the double negation mapping in a hoop is a homomorphism. This leads to an investigation of algebraic analogues of the various double negation translations that are well known from proof theory. We give an algebraic framework for studying the semantics of double negation translations and use it to prove new results about the applicability of the double negation translations due to Gentzen and Glivenko. 1 Introduction: Pocrims provide the natural algebraic models for a minimal affine logic, $\mathrm{AL}_m$, while hoops provide the models for what we view as a minimal analogue, $\text{ŁL}_m$, of Łukasiewicz’s classical infinite-valued logic $\text{ŁL}_c$. This paper presents some new results on the algebraic structure of pocrims and hoops. Our main motivation for this work is in the logical aspects: we are interested in criteria for provability in $\mathrm{AL}_m$, $\text{ŁL}_m$ and related logics. We develop a useful practical test for provability in $\text{ŁL}_m$ and apply it to a range of problems, including a study of the various double negation translations in these logics. -
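As a concrete instance of the structures in the abstract (an illustration, not taken from the paper), the sketch below builds a finite Łukasiewicz chain. It checks by brute force that the chain is a commutative, integral, residuated monoid, that is, a pocrim. It also checks the hoop identity $x \odot (x \to y) = y \odot (y \to x)$. The integer encoding and the size `N = 5` are arbitrary assumptions.

```python
from itertools import product

# A finite Łukasiewicz chain encoded on the integers 0..N, where k stands
# for k/N. N = 5 is an arbitrary illustrative choice.
N = 5
ELEMS = range(N + 1)

def mult(x, y):
    """Łukasiewicz t-norm: the monoid operation, with unit N (the top)."""
    return max(0, x + y - N)

def implies(x, y):
    """Residuum of mult: the largest z with mult(z, x) <= y."""
    return min(N, N - x + y)

def check_pocrim_and_hoop():
    """Brute-force check of the pocrim axioms and the hoop identity."""
    for x, y, z in product(ELEMS, repeat=3):
        assert mult(mult(x, y), z) == mult(x, mult(y, z))  # associative
        # Residuation: x*y <= z  iff  x <= (y -> z).
        assert (mult(x, y) <= z) == (x <= implies(y, z))
    for x, y in product(ELEMS, repeat=2):
        assert mult(x, y) == mult(y, x)  # commutative
        assert mult(x, N) == x           # N is the monoid unit
        assert x <= N                    # ... and the top element (integral)
        # Hoop identity: x*(x -> y) == y*(y -> x); both equal min(x, y) here.
        assert mult(x, implies(x, y)) == mult(y, implies(y, x))
    return True
```

In this chain $x \odot (x \to y)$ reduces to $\min(x, y)$, so the hoop identity holds by symmetry.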

Supplementary Section 6S.10 Alternative Notations

Supplementary Section 6S.10 Alternative Notations. Just as we can express the same thoughts in different languages (‘He has a big head’ and ‘Él tiene una cabeza grande’), there are many different ways to express the same logical claims. Some of these differences are merely cosmetic. Others are more interesting. Insofar as the different systems of notation we’ll examine in this section are merely different ways of expressing the same logic, they are not particularly important. But one of the most frustrating aspects of studying logic, at first, is getting comfortable with different systems of notation, so it’s good to try to get comfortable with a variety of ways of presenting logic. Most simply, there are different symbols for all of the logical operators. You can easily find some by perusing various logic texts and websites. The following table contains the most common.

| Operator | We use | Others use |
|---|---|---|
| Negation | ∼P | ¬P, −P, P̄ |
| Conjunction | P • Q | P ∧ Q, P & Q, PQ |
| Disjunction | P ∨ Q | P + Q |
| Material conditional | P ⊃ Q | P → Q, P ⇒ Q |
| Biconditional | P ≡ Q | P ↔ Q, P ⇔ Q, P ∼ Q |
| Existential quantifier | ∃ | ∑, ∨ |
| Universal quantifier | ∀ | ∏, ∧ |

There are also propositional operators that do not appear in our logical system at all. For example, there are two binary operators called the Sheffer stroke (|) and the Peirce arrow (↓). Using either of these operators alone, we can define all five of the operators of PL. Such operators may be used for systems in which one wants a minimal vocabulary and does not need simplicity of expression. The balance between simplicity of vocabulary and simplicity of expression is a deep topic, but not one we’ll engage in this section. -
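The claim that the Sheffer stroke alone suffices for all five operators of PL can be checked with truth tables. The sketch below uses the standard definitions, for example ∼P as P | P. The function names are illustrative assumptions. The Peirce arrow admits a dual construction.

```python
from itertools import product

def nand(p, q):
    """Sheffer stroke p | q: false only when p and q are both true."""
    return not (p and q)

# The five PL operators, each defined using only the Sheffer stroke.
def neg(p):
    return nand(p, p)                        # ~P  is  P | P

def conj(p, q):
    return nand(nand(p, q), nand(p, q))      # P . Q  is  (P|Q) | (P|Q)

def disj(p, q):
    return nand(nand(p, p), nand(q, q))      # P v Q  is  (P|P) | (Q|Q)

def cond(p, q):
    return nand(p, nand(q, q))               # P > Q  is  P | (Q|Q)

def bicond(p, q):
    return conj(cond(p, q), cond(q, p))      # both conditionals hold

def agrees_with_truth_tables():
    """Compare each definition with Python's own connectives on all rows."""
    for p, q in product([False, True], repeat=2):
        assert neg(p) == (not p)
        assert conj(p, q) == (p and q)
        assert disj(p, q) == (p or q)
        assert cond(p, q) == ((not p) or q)
        assert bicond(p, q) == (p == q)
    return True
```

The definitions trade a one-symbol vocabulary for longer formulas. This is the balance between simplicity of vocabulary and simplicity of expression that the section mentions.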

Logic and Proof Release 3.18.4

Logic and Proof, Release 3.18.4. Jeremy Avigad, Robert Y. Lewis, and Floris van Doorn. Sep 10, 2021. Contents: 1 Introduction; 1.1 Mathematical Proof; 1.2 Symbolic Logic; 1.3 Interactive Theorem Proving; 1.4 The Semantic Point of View; 1.5 Goals Summarized; 1.6 About this Textbook; 2 Propositional Logic; 2.1 A Puzzle; 2.2 A Solution; 2.3 Rules of Inference; 2.4 The Language of Propositional Logic; 2.5 Exercises; 3 Natural Deduction for Propositional Logic; 3.1 Derivations in Natural Deduction; 3.2 Examples; 3.3 Forward and Backward Reasoning; 3.4 Reasoning by Cases; 3.5 Some Logical Identities; 3.6 Exercises; 4 Propositional Logic in Lean; 4.1 Expressions for Propositions and Proofs; 4.2 More commands -

ISO/IEC JTC 1/SC 2 N 3769/WG2 N2866, Date: 2004-11-12

ISO/IEC JTC 1/SC 2 N 3769/WG2 N2866
DATE: 2004-11-12
ISO/IEC JTC 1/SC 2 Coded Character Sets
Secretariat: Japan (JISC)
DOC. TYPE: Summary of Voting/Table of Replies
TITLE: Summary of Voting on ISO/IEC JTC 1/SC 2 N 3758: ISO/IEC 14651/FPDAM 2, Information technology -- International string ordering and comparison -- Method for comparing character strings and description of the common template tailorable ordering -- AMENDMENT 2
SOURCE: SC 2 Secretariat
PROJECT: JTC1.02.14651.00.02
STATUS: This document is forwarded to the Project Editor for resolution of comments. The Project Editor is requested to prepare a draft disposition of comments report, revised text and a recommendation for further processing.
ACTION ID: FYI
DUE DATE:
DISTRIBUTION: P, O and L Members of ISO/IEC JTC 1/SC 2; ISO/IEC JTC 1 Secretariat; ISO/IEC ITTF
ACCESS LEVEL: Def
ISSUE NO.: 202
FILE NAME: 02n3769.pdf; SIZE (KB): 24; PAGES:
Secretariat ISO/IEC JTC 1/SC 2 - IPSJ/ITSCJ* (Information Processing Society of Japan/Information Technology Standards Commission of Japan), Room 308-3, Kikai-Shinko-Kaikan Bldg., 3-5-8, Shiba-Koen, Minato-ku, Tokyo 105-0011, Japan
*Standard Organization Accredited by JISC
Telephone: +81-3-3431-2808; Facsimile: +81-3-3431-6493; E-mail: [email protected]
Summary of Voting on ISO/IEC JTC 1/SC 2 N 3758: ISO/IEC 14651/FPDAM 2, Information technology -- International string ordering and comparison -- Method for comparing character strings and description of the common template tailorable ordering AMENDMENT 2
Q1: FPDAM Consideration
Q1 | Not yet | Comments | Approve