Critical Analysis and Comparison of Data Protection Techniques for Genomic Data Sets

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Algorithms for Computational Biology: 8th International Conference, AlCoB 2021, Missoula, MT, USA, June 7–11, 2021, Proceedings

Lecture Notes in Bioinformatics 12715 Subseries of Lecture Notes in Computer Science Series Editors Sorin Istrail Brown University, Providence, RI, USA Pavel Pevzner University of California, San Diego, CA, USA Michael Waterman University of Southern California, Los Angeles, CA, USA Editorial Board Members Søren Brunak Technical University of Denmark, Kongens Lyngby, Denmark Mikhail S. Gelfand IITP, Research and Training Center on Bioinformatics, Moscow, Russia Thomas Lengauer Max Planck Institute for Informatics, Saarbrücken, Germany Satoru Miyano University of Tokyo, Tokyo, Japan Eugene Myers Max Planck Institute of Molecular Cell Biology and Genetics, Dresden, Germany Marie-France Sagot Université Lyon 1, Villeurbanne, France David Sankoff University of Ottawa, Ottawa, Canada Ron Shamir Tel Aviv University, Ramat Aviv, Tel Aviv, Israel Terry Speed Walter and Eliza Hall Institute of Medical Research, Melbourne, VIC, Australia Martin Vingron Max Planck Institute for Molecular Genetics, Berlin, Germany W. Eric Wong University of Texas at Dallas, Richardson, TX, USA More information about this subseries at http://www.springer.com/series/5381 Carlos Martín-Vide • Miguel A. Vega-Rodríguez • Travis Wheeler (Eds.) Algorithms for Computational Biology 8th International Conference, AlCoB 2021 Missoula, MT, USA, June 7–11, 2021 Proceedings 123 Editors Carlos Martín-Vide Miguel A. Vega-Rodríguez Rovira i Virgili University University of Extremadura Tarragona, Spain Cáceres, Spain Travis Wheeler University of Montana Missoula, MT, USA ISSN 0302-9743 ISSN 1611-3349 (electronic) Lecture Notes in Bioinformatics ISBN 978-3-030-74431-1 ISBN 978-3-030-74432-8 (eBook) https://doi.org/10.1007/978-3-030-74432-8 LNCS Sublibrary: SL8 – Bioinformatics © Springer Nature Switzerland AG 2021 This work is subject to copyright. -

Computational Pan-Genomics: Status, Promises and Challenges

bioRxiv preprint doi: https://doi.org/10.1101/043430; this version posted March 12, 2016. The copyright holder for this preprint (which was not certified by peer review) is the author/funder. All rights reserved. No reuse allowed without permission. Computational Pan-Genomics: Status, Promises and Challenges Tobias Marschall1,2, Manja Marz3,60,61,62, Thomas Abeel49, Louis Dijkstra6,7, Bas E. Dutilh8,9,10, Ali Ghaffaari1,2, Paul Kersey11, Wigard P. Kloosterman12, Veli Mäkinen13, Adam Novak15, Benedict Paten15, David Porubsky16, Eric Rivals17,63, Can Alkan18, Jasmijn Baaijens5, Paul I. W. De Bakker12, Valentina Boeva19,64,65,66, Francesca Chiaromonte20, Rayan Chikhi21, Francesca D. Ciccarelli22, Robin Cijvat23, Erwin Datema24,25,26, Cornelia M. Van Duijn27, Evan E. Eichler28, Corinna Ernst29, Eleazar Eskin30,31, Erik Garrison32, Mohammed El-Kebir5,33,34, Gunnar W. Klau5, Jan O. Korbel11,35, Eric-Wubbo Lameijer36, Benjamin Langmead37, Marcel Martin59, Paul Medvedev38,39,40, John C. Mu41, Pieter Neerincx36, Klaasjan Ouwens42,67, Pierre Peterlongo43, Nadia Pisanti44,45, Sven Rahmann29, Ben Raphael46,47, Knut Reinert48, Dick de Ridder50, Jeroen de Ridder49, Matthias Schlesner51, Ole Schulz-Trieglaff52, Ashley Sanders53, Siavash Sheikhizadeh50, Carl Shneider54, Sandra Smit50, Daniel Valenzuela13, Jiayin Wang70,71,72, Lodewyk Wessels56, Ying Zhang23,5, Victor Guryev16,12, Fabio Vandin57,34, Kai Ye68,69,72 and Alexander Schönhuth5 1Center for Bioinformatics, Saarland University, Saarbrücken, Germany; 2Max Planck Institute for Informatics, Saarbrücken, -

120421-24Recombschedule FINAL.Xlsx

Friday 20 April 18:00 20:00 REGISTRATION OPENS in Fira Palace 20:00 21:30 WELCOME RECEPTION in CaixaForum (access map) Saturday 21 April 8:00 8:50 REGISTRATION 8:50 9:00 Opening Remarks (Roderic GUIGÓ and Benny CHOR) Session 1. Chair: Roderic GUIGÓ (CRG, Barcelona ES) 9:00 10:00 Richard DURBIN The Wellcome Trust Sanger Institute, Hinxton UK "Computational analysis of population genome sequencing data" 10:00 10:20 44 Yaw-Ling Lin, Charles Ward and Steven Skiena Synthetic Sequence Design for Signal Location Search 10:20 10:40 62 Kai Song, Jie Ren, Zhiyuan Zhai, Xuemei Liu, Minghua Deng and Fengzhu Sun Alignment-Free Sequence Comparison Based on Next Generation Sequencing Reads 10:40 11:00 178 Yang Li, Hong-Mei Li, Paul Burns, Mark Borodovsky, Gene Robinson and Jian Ma TrueSight: Self-training Algorithm for Splice Junction Detection using RNA-seq 11:00 11:30 coffee break Session 2. Chair: Bonnie BERGER (MIT, Cambridge US) 11:30 11:50 139 Son Pham, Dmitry Antipov, Alexander Sirotkin, Glenn Tesler, Pavel Pevzner and Max Alekseyev PATH-SETS: A Novel Approach for Comprehensive Utilization of Mate-Pairs in Genome Assembly 11:50 12:10 171 Yan Huang, Yin Hu and Jinze Liu A Robust Method for Transcript Quantification with RNA-seq Data 12:10 12:30 120 Zhanyong Wang, Farhad Hormozdiari, Wen-Yun Yang, Eran Halperin and Eleazar Eskin CNVeM: Copy Number Variation detection Using Uncertainty of Read Mapping 12:30 12:50 205 Dmitri Pervouchine Evidence for widespread association of mammalian splicing and conserved long range RNA structures 12:50 13:10 169 Melissa Gymrek, David Golan, Saharon Rosset and Yaniv Erlich lobSTR: A Novel Pipeline for Short Tandem Repeats Profiling in Personal Genomes 13:10 13:30 217 Rory Stark Differential oestrogen receptor binding is associated with clinical outcome in breast cancer 13:30 15:00 lunch break Session 3. -

BIOGRAPHICAL SKETCH NAME: Berger

BIOGRAPHICAL SKETCH NAME: Berger, Bonnie eRA COMMONS USER NAME (credential, e.g., agency login): BABERGER POSITION TITLE: Simons Professor of Mathematics and Professor of Electrical Engineering and Computer Science EDUCATION/TRAINING (Begin with baccalaureate or other initial professional education, such as nursing, include postdoctoral training and residency training if applicable. Add/delete rows as necessary.) EDUCATION/TRAINING DEGREE Completion (if Date FIELD OF STUDY INSTITUTION AND LOCATION applicable) MM/YYYY Brandeis University, Waltham, MA AB 06/1983 Computer Science Massachusetts Institute of Technology SM 01/1986 Computer Science Massachusetts Institute of Technology Ph.D. 06/1990 Computer Science Massachusetts Institute of Technology Postdoc 06/1992 Applied Mathematics A. Personal Statement Advances in modern biology revolve around automated data collection and sharing of the large resulting datasets. I am considered a pioneer in the area of bringing computer algorithms to the study of biological data, and a founder in this community that I have witnessed grow so profoundly over the last 26 years. I have made major contributions to many areas of computational biology and biomedicine, largely, though not exclusively through algorithmic innovations, as demonstrated by nearly twenty thousand citations to my scientific papers and widely-used software. In recognition of my success, I have just been elected to the National Academy of Sciences and in 2019 received the ISCB Senior Scientist Award, the pinnacle award in computational biology. My research group works on diverse challenges, including Computational Genomics, High-throughput Technology Analysis and Design, Biological Networks, Structural Bioinformatics, Population Genetics and Biomedical Privacy. I spearheaded research on analyzing large and complex biological data sets through topological and machine learning approaches; e.g. -

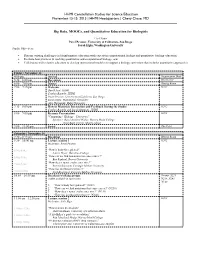

Big Data, MOOCs, and ... (PDF)

HHMI Constellation Studios for Science Education November 13-15, 2015 | HHMI Headquarters | Chevy Chase, MD Big Data, MOOCs, and Quantitative Education for Biologists Co-Chairs Pavel Pevzner, University of California- San Diego Sarah Elgin, Washington University Studio Objectives Discuss existing challenges in bioinformatics education with experts in computational biology and quantitative biology education, Evaluate best practices in teaching quantitative and computational biology, and Collaborate with scientist educators to develop instructional modules to support a biology curriculum that includes quantitative approaches. Friday | November 13 4:00 pm Arrival Registration Desk 5:30 – 6:00 pm Reception Great Hall 6:00 – 7:00 pm Dinner Dining Room 7:00 – 7:15 pm Welcome K202 David Asai, HHMI Cynthia Bauerle, HHMI Pavel Pevzner, University of California-San Diego Sarah Elgin, Washington University Alex Hartemink, Duke University 7:15 – 8:00 pm How to Maximize Interaction and Feedback During the Studio K202 Cynthia Bauerle and Sarah Simmons, HHMI 8:00 – 9:00 pm Keynote Presentation K202 "Computing + Biology = Discovery" Speakers: Ran Libeskind-Hadas, Harvey Mudd College Eliot Bush, Harvey Mudd College 9:00 – 11:00 pm Social The Pilot Saturday | November 14 7:30 – 8:15 am Breakfast Dining Room 8:30 – 10:00 am Lecture session 1 K202 Moderator: Pavel Pevzner 834a-854a “How is body fat regulated?” Laurie Heyer, Davidson College 856a-916a “How can we find mutations that cause cancer?” Ben Raphael, Brown University “How does a tumor evolve over time?” 918a-938a Russell Schwartz, Carnegie Mellon University “How fast do ribosomes move?” 940a-1000a Carl Kingsford, Carnegie Mellon University 10:05 – 10:55 am Breakout working groups Rooms: S221, (coffee available in each room) N238, N241, N140 1. -

Computational Biology and Bioinformatics

Vol. 30 ISMB 2014, pages i1–i2 BIOINFORMATICS EDITORIAL doi:10.1093/bioinformatics/btu304 Editorial This special issue of Bioinformatics serves as the proceedings of the 22nd annual meeting of Intelligent Systems for Molecular Biology (ISMB), which took place in Boston, MA, July 11–15, 2014 (http://www.iscb.org/ismbeccb2014). The official conference of the International Society for Computational Biology (http://www.iscb.org/), ISMB, was accompanied by 12 Special Interest Group meetings of one or two days each, two satellite meetings, a High School Teachers Workshop and two half-day tutorials. Since its inception, ISMB has grown to be the largest international conference in computational biology and bioinformatics. It is expected to be the premiere forum in the field for presenting new research results, disseminating methods and techniques and facilitating discussions among leading researchers, practitioners and students in the field. The conference used a two-tier review system, a continuation and refinement of a process begun with ISMB 2013 in an effort to better ensure thorough and fair reviewing. Under the revised process, each of the 191 submissions was first reviewed by at least three expert referees, with a subset receiving between four and eight reviews, as needed. These formal reviews were frequently supplemented by online discussion among reviewers and Area Chairs to resolve points of dispute and reach a consensus on each paper. Among the 191 submissions, 29 were conditionally accepted for publication directly from the first round review based on an assessment of the reviewers that the paper was clearly above par for the conference. A subset of 16 papers were viewed as potentially in the top tier but raised significant -

An Efficient, Scalable and Exact Representation of High-Dimensional Color Information Enabled Via De Bruijn Graph Search

bioRxiv preprint doi: https://doi.org/10.1101/464222; this version posted November 7, 2018. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license. An Efficient, Scalable and Exact Representation of High-Dimensional Color Information Enabled via de Bruijn Graph Search Fatemeh Almodaresi1, Prashant Pandey1, Michael Ferdman1, Rob Johnson2,1, and Rob Patro1 1 Computer Science Dept., Stony Brook University ffalmodaresit,ppandey,mferdman,[email protected] 2 VMware Research [email protected] Abstract. The colored de Bruijn graph (cdbg) and its variants have become an important combinatorial structure used in numerous areas in genomics, such as population-level variation detection in metagenomic samples, large scale sequence search, and cdbg-based reference sequence indices. As samples or genomes are added to the cdbg, the color information comes to dominate the space required to represent this data structure. In this paper, we show how to represent the color information efficiently by adopting a hierarchical encoding that exploits correlations among color classes (patterns of color occurrence) present in the de Bruijn graph (dbg). A major challenge in deriving an efficient encoding of the color information that takes advantage of such correlations is determining which color classes are close to each other in the high-dimensional space of possible color patterns. We demonstrate that the dbg itself can be used as an efficient mechanism to search for approximate nearest neighbors in this space. -

Daniel Aalberts Scott Aa

PLOS Computational Biology would like to thank all those who reviewed on behalf of the journal in 2015: Daniel Aalberts Jeff Alstott Benjamin Audit Scott Aaronson Christian Althaus Charles Auffray Henry Abarbanel Benjamin Althouse Jean-Christophe Augustin James Abbas Russ Altman Robert Austin Craig Abbey Eduardo Altmann Bruno Averbeck Hermann Aberle Philipp Altrock Ferhat Ay Robert Abramovitch Vikram Alva Nihat Ay Josep Abril Francisco Alvarez-Leefmans Francisco Azuaje Luigi Acerbi Rommie Amaro Marc Baaden Orlando Acevedo Ettore Ambrosini M. Madan Babu Christoph Adami Bagrat Amirikian Mohan Babu Frederick Adler Uri Amit Marco Bacci Boris Adryan Alexander Anderson Stephen Baccus Tinri Aegerter-Wilmsen Noemi Andor Omar Bagasra Vera Afreixo Isabelle Andre Marc Baguelin Ashutosh Agarwal R. David Andrew Timothy Bailey Ira Agrawal Steven Andrews Wyeth Bair Jacobo Aguirre Ioan Andricioaei Chris Bakal Alaa Ahmed Ioannis Androulakis Joseph Bak-Coleman Hasan Ahmed Iris Antes Adam Baker Natalie Ahn Maciek Antoniewicz Douglas Bakkum Thomas Akam Haroon Anwar Gabor Balazsi Ilya Akberdin Stefano Anzellotti Nilesh Banavali Eyal Akiva Miguel Aon Rahul Banerjee Sahar Akram Lucy Aplin Edward Banigan Tomas Alarcon Kevin Aquino Martin Banks Larissa Albantakis Leonardo Arbiza Mukul Bansal Reka Albert Murat Arcak Shweta Bansal Martí Aldea Gil Ariel Wolfgang Banzhaf Bree Aldridge Nimalan Arinaminpathy Lei Bao Helen Alexander Jeffrey Arle Gyorgy Barabas Alexander Alexeev Alain Arneodo Omri Barak Leonidas Alexopoulos Markus Arnoldini Matteo Barberis Emil Alexov -

Bringing Folding Pathways Into Strand Pairing Prediction

Bringing folding pathways into strand pairing prediction Jieun Jeong1,2, Piotr Berman1, and Teresa Przytycka2 1 Computer Science and Engineering Department The Pennsylvania State University University Park, PA 16802 USA 2 National Center for Biotechnology Information US National Library of Medicine, National Institutes of Health Bethesda, MD 20894 email: [email protected], [email protected], [email protected] Abstract. The topology of β-sheets is defined by the pattern of hydrogen-bonded strand pairing. Therefore, predicting hydrogen-bonded strand partners is a fundamental step towards predicting β-sheet topology. In this work we report a new strand pairing algorithm. Our algorithm attempts to mimic elements of the folding process. Namely, in addition to ensuring that the predicted hydrogen-bonded strand pairs satisfy basic global consistency constraints, it takes into account hypothetical folding pathways. Consistent with this view, introducing hydrogen bonds between a pair of strands changes the probabilities of forming other strand pairs. We demonstrate that this approach provides an improvement over previously proposed algorithms. 1 Introduction The prediction of protein structure from protein sequence is a long-held goal that would provide invaluable information regarding the function of individual proteins and the evolution of protein families. The increasing amount of sequence and structure data has made it possible to decouple the structure prediction problem from the problem of modeling the protein folding process. Indeed, significant progress has been achieved by bioinformatics approaches such as homology modeling, threading, and assembly from fragments [16]. At the same time, the fundamental problem of how a protein actually acquires its final folded state remains a subject of controversy. -

Extraction of Long K-Mers Using Spaced Seeds

Extraction of long k-mers using spaced seeds Miika Leinonen and Leena Salmela Department of Computer Science, Helsinki Institute for Information Technology HIIT, University of Helsinki {miika.leinonen, leena.salmela}@helsinki.fi Abstract The extraction of k-mers from sequencing reads is an important task in many bioinformatics applications, such as all DNA sequence analysis methods based on de Bruijn graphs. These methods tend to be more accurate when the used k-mers are unique in the analyzed DNA, and thus the use of longer k-mers is preferred. When the read lengths of short read sequencing technologies increase, the error rate will become the determining factor for the largest possible value of k. Here we propose LoMeX which uses spaced seeds to extract long k-mers accurately even in the presence of sequencing errors. Our experiments show that LoMeX can extract long k-mers from current Illumina reads with a higher recall than a standard k-mer counting tool. Furthermore, our experiments on simulated data show that when the read length further increases, the performance of standard k-mer counters declines, whereas LoMeX still extracts long k-mers successfully. 1 Introduction Counting and extracting k-mers, i.e. subsequences of length k, from sequencing reads is a frequently used technique in bioinformatics applications and many tools have been developed to solve the task [25]. A k-mer counter needs to enumerate all different subsequences of length k that occur in the sequencing reads and report the frequency of each such k-mer. Counting k-mers has several applications in bioinformatics. -

Curriculum Vitae

Curriculum Vitae Tandy Warnow Grainger Distinguished Chair in Engineering 1 Contact Information Department of Computer Science The University of Illinois at Urbana-Champaign Email: [email protected] Homepage: http://tandy.cs.illinois.edu 2 Research Interests Phylogenetic tree inference in biology and historical linguistics, multiple sequence alignment, metagenomic analysis, big data, statistical inference, probabilistic analysis of algorithms, machine learning, combinatorial and graph-theoretic algorithms, and experimental performance studies of algorithms. 3 Professional Appointments • Co-chief scientist, C3.ai Digital Transformation Institute, 2020-present • Grainger Distinguished Chair in Engineering, 2020-present • Associate Head for Computer Science, 2019-present • Special advisor to the Head of the Department of Computer Science, 2016-present • Associate Head for the Department of Computer Science, 2017-2018. • Founder Professor of Computer Science, the University of Illinois at Urbana-Champaign, 2014-2019 • Member, Carl R. Woese Institute for Genomic Biology. Affiliate of the National Center for Supercomputing Applications (NCSA), Coordinated Sciences Laboratory, and the Unit for Criticism and Interpretive Theory. Affiliate faculty member in the Departments of Mathematics, Electrical and Computer Engineering, Bioengineering, Statistics, Entomology, Plant Biology, and Evolution, Ecology, and Behavior, 2014-present. • National Science Foundation, Program Director for Big Data, July 2012-July 2013. • Member, Big Data Senior Steering Group of NITRD (The Networking and Information Technology Research and Development Program), subcommittee of the National Technology Council (coordinating federal agencies), 2012-2013 • Departmental Scholar, Institute for Pure and Applied Mathematics, UCLA, Fall 2011 • Visiting Researcher, University of Maryland, Spring and Summer 2011. • Visiting Researcher, Smithsonian Institute, Spring and Summer 2011.
• Professeur Invité, École Polytechnique Fédérale de Lausanne (EPFL), Summer 2010. -

2014 ISCB Accomplishment by a Senior Scientist Award: Gene Myers

Message from ISCB 2014 ISCB Accomplishment by a Senior Scientist Award: Gene Myers Christiana N. Fogg1, Diane E. Kovats2* 1 Freelance Science Writer, Kensington, Maryland, United States of America, 2 Executive Director, International Society for Computational Biology, La Jolla, California, United States of America The International Society for Computational Biology (ISCB; http://www.iscb.org) annually recognizes a senior scientist for his or her outstanding achievements. The ISCB Accomplishment by a Senior Scientist Award honors a leader in the field of computational biology for his or her significant contributions to the community through research, service, and education. Dr. Eugene "Gene" Myers of the Max Planck Institute of Molecular Cell Biology and Genetics in Dresden has been selected as the 2014 ISCB Accomplishment by a Senior Scientist Award winner. Myers (Image 1) was selected by the ISCB's awards committee, which is chaired by Dr. Bonnie Berger of the Massachusetts Institute of Technology (MIT). Myers will receive his award and deliver a keynote address at ISCB's 22nd Annual Intelligent Systems for Molecular Biology (ISMB) meeting. This meeting is being held in Boston, Massachusetts, on July 11–15, 2014, at the John B. Hynes Memorial Convention Center (https://www.iscb.org/ismb2014). Myers was captivated by computer programming as a young student. [...] guidance of his dissertation advisor, Andrzej Ehrenfeucht, who had eclectic interests that included molecular biology. Myers, along with fellow graduate students [...] sequences and how to build evolutionary trees. Myers landed his first faculty position in the Department of Computer Science at [...] Image 1. Gene Myers. Image credit: Matt Staley, HHMI. doi:10.1371/journal.pcbi.1003621.g001