iPlug 2: Desktop Plug-in Framework Meets Web Audio Modules

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Institut Für Musikinformatik Und Musikwissenschaft

Institut für Musikinformatik und Musikwissenschaft. Director: Prof. Dr. Christoph Seibert. Course catalogue for the summer semester 2021, as of 9.04.2021. Preliminary note: In the summer semester 2021, courses will be held in person, online, or in hybrid form (in-person sessions with the option of also taking part online). How each course is actually run depends on the Corona regulation on university operations (Corona-Verordnung Studienbetrieb) in force at the time. Further deciding factors are the number of participants, the maximum number of people permitted in the assigned room, and considerations of content. The information given here reflects the current state of planning. For further planning, everyone who wishes to attend a course must register by e-mail with the respective lecturer by Wednesday, 07.04. Please also state if you cannot or do not wish to attend in-person sessions because of the pandemic situation, for example because you belong to a risk group. This page is updated at regular intervals to bring the information in line with current circumstances. Changes from earlier versions are marked. Music informatics: Prof. Dr. Marc Bangert ([email protected]) Prof. Dr. Paulo Ferreira-Lopes ([email protected]) Prof. Dr. Eckhard Kahle ([email protected]) Prof. Dr. Christian Langen ([email protected]) Prof. Dr. Damon T. Lee ([email protected]) Prof. Dr. Marlon Schumacher ([email protected]) Prof. Dr. Christoph Seibert ([email protected]) Prof. Dr. Heiko Wandler ([email protected]) Tobias Bachmann ([email protected]) Patrick Borgeat ([email protected]) Anna Czepiel ([email protected]) Dirk Handreke ([email protected]) Daniel Höpfner ([email protected]) Daniel Fütterer ([email protected]) Rainer Lorenz ([email protected]) Nils Lemke ([email protected]) Alexander Lunt ([email protected]) Luís A. -

Embedded Real-Time Audio Signal Processing with Faust Romain Michon, Yann Orlarey, Stéphane Letz, Dominique Fober, Dirk Roosenburg

Embedded Real-Time Audio Signal Processing With Faust. Romain Michon, Yann Orlarey, Stéphane Letz, Dominique Fober, Dirk Roosenburg. To cite this version: Romain Michon, Yann Orlarey, Stéphane Letz, Dominique Fober, Dirk Roosenburg. Embedded Real-Time Audio Signal Processing With Faust. International Faust Conference (IFC-20), Dec 2020, Paris, France. hal-03124896. HAL Id: hal-03124896, https://hal.archives-ouvertes.fr/hal-03124896. Submitted on 29 Jan 2021. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Proceedings of the 2nd International Faust Conference (IFC-20), Maison des Sciences de l’Homme Paris Nord, France, December 1-2, 2020. Authors and affiliations: Romain Michon (a, b), Yann Orlarey (a), Stéphane Letz (a), Dominique Fober (a), and Dirk Roosenburg (d, e). (a) GRAME – Centre National de Création Musicale, Lyon, France; (b) Center for Computer Research in Music and Acoustics, Stanford University, USA; (d) TIMARA, Oberlin Conservatory of Music, USA; (e) Department of Physics, Oberlin College, USA. [email protected] ABSTRACT: FAUST has been targeting an increasing number of embedded platforms for real-time audio signal processing applications in recent years. From the body text: multi-channel audio, etc.). These products work in conjunction with specialized Linux distributions where audio processing tasks are carried out outside of the operating system, allowing for the -

Extending the Faust VST Architecture with Polyphony, Portamento and Pitch Bend Yan Michalevsky Julius O

Extending the Faust VST Architecture with Polyphony, Portamento and Pitch Bend. Yan Michalevsky, Department of Electrical Engineering, Stanford University, [email protected]; Julius O. Smith, Center for Computer Research in Music and Acoustics (CCRMA), Stanford University, AES Fellow, [email protected]; Andrew Best, Blamsoft, Inc., [email protected]. Abstract: We introduce the vsti-poly.cpp architecture for the Faust programming language. It provides several features that are important for practical use of Faust-generated VSTi synthesizers. We focus on the VST architecture as one that has been used traditionally and is supported by many popular tools, and add several important features: polyphony, note history and pitch-bend support. These features take Faust-generated VST instruments a step forward in terms of generating plugins that could be used in Digital Audio Workstations (DAW) for real-world music production. Keywords: Faust, VST, Plugin, DAW. 1 Introduction: Faust [5] is a popular music/audio signal processing language developed by Yann Orlarey et al. From the continuation of the introduction: VST (Virtual Studio Technology) plugin standard was released by Steinberg GmbH (famous for Cubase and other music and sound production products) in 1996, and was followed by the widespread version 2.0 in 1999 [8]. It is a particularly common format supported by many older and newer tools. Some of the features expected from a VST plugin can be found in the VST SDK code. Examining the list of MIDI events [1] can also hint at what capabilities are expected to be implemented by instrument plugins. We also draw from our experience with MIDI instruments and commercial VST plugins in order to formulate sound feature requirements. In order for Faust to be a practical tool for generating such plugins, it should support most of the features expected, such as the following: • Responding to MIDI keyboard events -

Graphical Design of Physical Models for Real-Time Sound Synthesis

Peter Vasil. Graphical Design of Physical Models for Real-Time Sound Synthesis: Implementation of a Graphical User Interface for the Synth-A-Modeler compiler. Master's thesis (Masterarbeit), Audiokommunikation und -technologie, submitted by Peter Vasil, matriculation no. 328493. Technische Universität Berlin, Fakultät 1: Fachgebiet Audiokommunikation. Submitted: 25 July 2013. Peter Vasil: Graphical Design of Physical Models for Real-Time Sound Synthesis, Implementation of a Graphical User Interface for the Synth-A-Modeler compiler, © July 2013. Supervisors: Prof. Dr. Stefan Weinzierl, Dr. Edgar Berdahl. ABSTRACT: The goal of this Master of Science thesis in Audio Communication and Technology at Technical University Berlin is to develop a Graphical User Interface (GUI) for the Synth-A-Modeler compiler, a text-based tool for converting physical model specification files into DSP external modules. The GUI should enable composers, artists and students to use physical modeling intuitively, without having to employ the complex mathematical equations normally necessary for physical modeling. The GUI that will be developed in the course of this thesis allows the creation of physical models with graphical objects for physical masses, links, resonators, waveguides, terminations, and audioout objects. In addition, this tool will be used to create a model for an Arabic oud to demonstrate its functionality. SUMMARY (ZUSAMMENFASSUNG, translated from German): The goal of this master's thesis at the Audio Communication Group of TU Berlin is the development of a graphical environment for the text-based software Synth-A-Modeler compiler, which converts a file format developed specifically for physical models into external DSP modules.
The software enables composers, artists and students to apply physical modeling intuitively, without having to use the complex mathematical formulas that physical modeling normally requires. -

Audio Signal Processing in Faust

Audio Signal Processing in Faust. Julius O. Smith III, Center for Computer Research in Music and Acoustics (CCRMA), Department of Music, Stanford University, Stanford, California 94305 USA. jos at ccrma.stanford.edu. Abstract: Faust is a high-level programming language for digital signal processing, with special support for real-time audio applications and plugins on various software platforms including Linux, Mac-OS-X, iOS, Android, Windows, and embedded computing environments. Audio plugin formats supported include VST, lv2, AU, Pd, Max/MSP, SuperCollider, and more. This tutorial provides an introduction focusing on a simple example of white noise filtered by a variable resonator. Contents: 1 Introduction; 1.1 Installing Faust; 1.2 Faust Examples; 2 Primer on the Faust Language; 2.1 Basic Signal Processing Blocks (Elementary Operators on Signals); 2.2 Block Diagram Operators; 2.3 Examples; 2.4 Infix Notation Rewriting; 2.5 Encoding Block Diagrams in the Faust Language; 2.6 Statements; 2.7 Function Definition; 2.8 Partial Function Application; 2.9 Functional Notation for Operators; 2.10 Examples; 2.11 Summary of Faust Notation Styles; 2.12 Unary Minus; 2.13 Fixing -

Final Degree Project (Proyecto Fin de Grado)

ESCUELA TÉCNICA SUPERIOR DE INGENIERÍA Y SISTEMAS DE TELECOMUNICACIÓN. FINAL DEGREE PROJECT. TITLE: Implementation of a synthesizer on a low-cost development kit. AUTHOR: Arturo Fernández. DEGREE: Bachelor's Degree in Sound and Image Engineering (Grado en Ingeniería de Sonido e Imagen). SUPERVISOR: Antonio Mínguez Olivares. DEPARTMENT: Signal Theory and Communications (Teoría de la señal y Comunicación). Approval (VºBº). Members of the examining board: CHAIR: Eduardo Nogueira Díaz. SUPERVISOR: Antonio Mínguez Olivares. SECRETARY: Francisco Javier Tabernero Gil. Date of defence: Grade: The Secretary, Acknowledgements − To my parents and my sister, for supporting me in every respect (with the inevitable disagreements) throughout this long-distance race. − To my friends and my girlfriend, for barely seeing me for long stretches of time. − To the teachers who managed to spark my interest and gave me hours of their time in tutorials. − To my supervisor, for accepting my proposal and supervising the project without a second thought. − To my brother-in-law Rafa, who, despite being permanently swamped, made time to help me in the hardest moments and to motivate and guide me throughout all these years. Without his help, this would not have been possible. − Finally, thanks to myself, for keeping up this pace of life and having the ambition to carry on and take on new challenges. As my father says to me: “You are not going to stop until you reach the Moon, are you?” Implementation of a synthesizer on a low-cost development kit. Abstract: This project consists of the design of a ‘Virtual Analog’ synthesizer based on synthesizers of the 1960s and 70s. To that end, it studies the theory behind the basic topology of subtractive synthesizers, as well as the elements involved in generating and modifying sound. -

Audio Software Developer (F/M/X)

Impulse Audio Lab is an audio company based in the heart of Munich. We are an interdisciplinary team of experts in interactive sound design and audio software development, working on innovative and pioneering projects focused on sound. We are looking for a Software Developer (f/m/x) for innovative audio projects You are passionate about audio and DSP algorithms and want to work on new products and solutions. The combination of audio processing and e-mobility sounds exciting to you. You want to solve complex problems through state-of-the-art software solutions and apply your knowledge and expertise to all aspects of the software development lifecycle. What are you waiting for? These could be your future responsibilities: • Handling complex audio software projects, from conception and architecture to implementation • Working on both client-oriented projects as well as our own growing product portfolio • Development, implementation and optimization of new algorithms for processing and generating audio • Sharing your experiences and knowledge with your colleagues This should be you: • You are educated to degree level or have equivalent experience in relevant fields such as Electrical Engineering, Media Technology or Computer Science • You have multiple years of experience programming audio software in C/C++, using frameworks such as Qt or JUCE and Audio Plug-in APIs • You are a team player and are also comfortable taking responsibility for your own projects • You are able to work collaboratively, from requirements to acceptance and in the corresponding tool landscape (Git, unit testing, build systems, automation, debugging, etc.) This could be a plus: • You have programming experience on embedded architectures (e.g. -

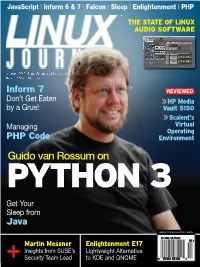

Guido Van Rossum on PYTHON 3 Get Your Sleep From

LINUX JOURNAL™. Since 1994: The Original Magazine of the Linux Community. October 2008, Issue 174. www.linuxjournal.com. $5.99US $5.99CAN. Cover lines: The State of Linux Audio Software. Languages: JavaScript | Inform 6 & 7 | Falcon | Sleep | Enlightenment | PHP. Inform 7 reviewed: Don’t Get Eaten by a Grue! HP Media Vault 5150. Scalent’s Virtual Operating Environment. Managing PHP Code. Guido van Rossum on Python 3. Get Your Sleep from Java. Martin Messner: Insights from SUSE’s Security Team Lead. Enlightenment E17: Lightweight Alternative to KDE and GNOME. Advertisement (Intel): Multiply energy efficiency and maximize cooling. The world’s first quad-core processor for mainstream servers. The new Quad-Core Intel® Xeon® Processor 5300 series delivers up to 50% more performance* than previous Intel Xeon processors in the same power envelope. Based on the ultra-efficient Intel® Core™ microarchitecture, it’s the ultimate solution for managing runaway cooling expenses. Learn why great business computing starts with Intel inside. Visit intel.co.uk/xeon / intel.com/xeon. Advertisement (Penguin Computing): RELION 2612, Quad-Core Intel® Xeon® processor, starting at $2429.00; RELION 1670, Quad-Core Intel® Xeon® processor, starting at $1969.00. Listed features (figures illegible in the source) include suitability for cost-effective file/DB applications, RAS (Reliability, Availability, Serviceability), an Intel "Seaburg" chipset, class-leading memory capacity, and management features to support large cluster deployments. Penguin Computing provides turnkey x86/Linux clusters for high performance technical computing applications. -

Cross-Platform 1 Cross-Platform

Cross-platform. In computing, cross-platform, or multi-platform, is an attribute conferred to computer software or computing methods and concepts that are implemented and inter-operate on multiple computer platforms.[1][2] Cross-platform software may be divided into two types: one requires individual building or compilation for each platform that it supports, and the other can be run directly on any platform without special preparation, e.g., software written in an interpreted language or pre-compiled portable bytecode for which the interpreters or run-time packages are common or standard components of all platforms. For example, a cross-platform application may run on Microsoft Windows on the x86 architecture, Linux on the x86 architecture and Mac OS X on either the PowerPC or x86 based Apple Macintosh systems. A cross-platform application may run on as many as all existing platforms, or on as few as two platforms. Platforms: A platform is a combination of hardware and software used to run software applications. A platform can be described simply as an operating system or computer architecture, or it could be the combination of both. Probably the most familiar platform is Microsoft Windows running on the x86 architecture. Other well-known desktop computer platforms include Linux/Unix and Mac OS X (both of which are themselves cross-platform). There are, however, many devices such as cellular telephones that are also effectively computer platforms but less commonly thought about in that way. Application software can be written to depend on the features of a particular platform: either the hardware, operating system, or virtual machine it runs on. -

Grierson, Mick and Fiebrink, Rebecca. 2018. User-Centred Design Actions for Lightweight Evaluation of an Interactive Machine Learning Toolkit

Bernardo, Francisco; Grierson, Mick and Fiebrink, Rebecca. 2018. User-Centred Design Actions for Lightweight Evaluation of an Interactive Machine Learning Toolkit. Journal of Science and Technology of the Arts (CITARJ), 10(2), ISSN 1646-9798 [Article] (In Press) http://research.gold.ac.uk/23667/ The version presented here may differ from the published, performed or presented work. Please go to the persistent GRO record above for more information. If you believe that any material held in the repository infringes copyright law, please contact the Repository Team at Goldsmiths, University of London via the following email address: [email protected]. The item will be removed from the repository while any claim is being investigated. For more information, please contact the GRO team: [email protected] Journal of Science and Technology of the Arts, Volume 10, No. 2 – Special Issue eNTERFACE’17. User-Centred Design Actions for Lightweight Evaluation of an Interactive Machine Learning Toolkit. Francisco Bernardo, Mick Grierson, Rebecca Fiebrink (each: Department of Computing, Goldsmiths, University of London, London SE14 6NW). [email protected] [email protected] [email protected] ABSTRACT: Machine learning offers great potential to developers and end users in the creative industries. For example, it can support new sensor-based interactions, procedural content generation and end-user product customisation. However, designing machine learning toolkits for adoption by creative developers is still a nascent effort. KEYWORDS: User-centred Design; Action Research; Interactive Machine Learning; Application Programming Interfaces; Toolkits; Creative Technology. ARTICLE INFO: Received: 03 April 2018 -

An Old-School Polyphonic Synthesizer Samplv1

Show-off my open-source stuff, mostly of the Linux Audio/MIDI genre. Vee One Suite 0.9.4 - A Late Autumn'18 Release. 12 December, 2018 - 19:00, rncbc. Greetings! The Vee One Suite of so-called old-school software instruments, synthv1, a polyphonic subtractive synthesizer, samplv1, a polyphonic sampler synthesizer, drumkv1, yet another drum-kit sampler, and padthv1, a polyphonic additive synthesizer, are being released for late Autumn'18. All are still available in dual format: a pure stand-alone JACK client with JACK-session, NSM (Non Session Management) and both JACK MIDI and ALSA MIDI input support; and an LV2 instrument plug-in. The changes for this special season are the following: Make sure all LV2 state sample file references are resolved to their original and canonical file-paths, not symlinks (applies to samplv1 and drumkv1 only). Fixed a severe bug on saving the LV2 plug-in state: the sample file reference was being saved with the wrong name identifier and thus went missing on every session or state reload thereafter (applies to samplv1 only). Sample waveform drawing is a bit more keen to precision. Old deprecated Qt4 build support is no more. Normalized wavetable oscillator phasors. Added missing include <unistd.h> to shut up some stricter compilers from build failures. The Vee One Suite are free, open-source Linux Audio software, distributed under the terms of the GNU General Public License (GPL) version 2 or later. And here they go again! synthv1 - an old-school polyphonic synthesizer. synthv1 0.9.4 (late-autumn'18) released! synthv1 is an old-school all-digital 4-oscillator subtractive polyphonic synthesizer with stereo fx. -

Structural Equation Modeling Analysis of Etiological Factors in Social Anxiety

Louisiana State University, LSU Digital Commons. LSU Historical Dissertations and Theses, Graduate School, 1997. Structural Equation Modeling Analysis of Etiological Factors in Social Anxiety. Michele E. Mccarthy, Louisiana State University and Agricultural & Mechanical College. Follow this and additional works at: https://digitalcommons.lsu.edu/gradschool_disstheses Recommended Citation: Mccarthy, Michele E., "Structural Equation Modeling Analysis of Etiological Factors in Social Anxiety." (1997). LSU Historical Dissertations and Theses. 6505. https://digitalcommons.lsu.edu/gradschool_disstheses/6505 This Dissertation is brought to you for free and open access by the Graduate School at LSU Digital Commons. It has been accepted for inclusion in LSU Historical Dissertations and Theses by an authorized administrator of LSU Digital Commons. For more information, please contact [email protected]. INFORMATION TO USERS: This manuscript has been reproduced from the microfilm master. UMI films the text directly from the original or copy submitted. Thus, some thesis and dissertation copies are in typewriter face, while others may be from any type of computer printer. The quality of this reproduction is dependent upon the quality of the copy submitted. Broken or indistinct print, colored or poor quality illustrations and photographs, print bleedthrough, substandard margins, and improper alignment can adversely affect reproduction. In the unlikely event that the author did not send UMI a complete manuscript and there are missing pages, these will be noted. Also, if unauthorized copyright material had to be removed, a note will indicate the deletion. Oversize materials (e.g., maps, drawings, charts) are reproduced by sectioning the original, beginning at the upper left-hand corner and continuing from left to right in equal sections with small overlaps.