A Search Engine Based on Swarm Information Foraging Daniel Gayo-Avello 1

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Evaluation of Web-Based Search Engines Using User-Effort Measures

Evaluation of Web-Based Search Engines Using User-Effort Measures Muh-Chyun Tang and Ying Sun 4 Huntington St. School of Information, Communication and Library Studies Rutgers University, New Brunswick, NJ 08901, U.S.A. [email protected] [email protected] Abstract This paper presents a study of the applicability of three user-effort-sensitive evaluation measures —“first 20 full precision,” “search length,” and “rank correlation”—on four Web-based search engines (Google, AltaVista, Excite and Metacrawler). The authors argue that these measures are better alternatives than precision and recall in Web search situations because of their emphasis on the quality of ranking. Eight sets of search topics were collected from four Ph.D. students in four different disciplines (biochemistry, industrial engineering, economics, and urban planning). Each participant was asked to provide two topics along with the corresponding query terms. Their relevance and credibility judgment of the Web pages were then used to compare the performance of the search engines using these three measures. The results show consistency among these three ranking evaluation measures, more so between “first 20 full precision” and search length than between rank correlation and the other two measures. Possible reasons for rank correlation’s disagreement with the other two measures are discussed. Possible future research to improve these measures is also addressed. Introduction The explosive growth of information on the World Wide Web poses a challenge to traditional information retrieval (IR) research. Other than the sheer amount of information, some structural factors make searching for relevant and quality information on the Web a formidable task. -

How to Choose a Search Engine Or Directory

How to Choose a Search Engine or Directory Fields & File Types If you want to search for... Choose... Audio/Music AllTheWeb | AltaVista | Dogpile | Fazzle | FindSounds.com | Lycos Music Downloads | Lycos Multimedia Search | Singingfish Date last modified AllTheWeb Advanced Search | AltaVista Advanced Web Search | Exalead Advanced Search | Google Advanced Search | HotBot Advanced Search | Teoma Advanced Search | Yahoo Advanced Web Search Domain/Site/URL AllTheWeb Advanced Search | AltaVista Advanced Web Search | AOL Advanced Search | Google Advanced Search | Lycos Advanced Search | MSN Search Search Builder | SearchEdu.com | Teoma Advanced Search | Yahoo Advanced Web Search File Format AllTheWeb Advanced Web Search | AltaVista Advanced Web Search | AOL Advanced Search | Exalead Advanced Search | Yahoo Advanced Web Search Geographic location Exalead Advanced Search | HotBot Advanced Search | Lycos Advanced Search | MSN Search Search Builder | Teoma Advanced Search | Yahoo Advanced Web Search Images AllTheWeb | AltaVista | The Amazing Picture Machine | Ditto | Dogpile | Fazzle | Google Image Search | IceRocket | Ixquick | Mamma | Picsearch Language AllTheWeb Advanced Web Search | AOL Advanced Search | Exalead Advanced Search | Google Language Tools | HotBot Advanced Search | iBoogie Advanced Web Search | Lycos Advanced Search | MSN Search Search Builder | Teoma Advanced Search | Yahoo Advanced Web Search Multimedia & video All TheWeb | AltaVista | Dogpile | Fazzle | IceRocket | Singingfish | Yahoo Video Search Page Title/URL AOL Advanced -

Instrumentalizing the Sources of Attraction. How Russia Undermines Its Own Soft Power

INSTRUMENTALIZING THE SOURCES OF ATTRACTION. HOW RUSSIA UNDERMINES ITS OWN SOFT POWER By Vasile Rotaru Abstract The 2011-2013 domestic protests and the 2013-2015 Ukraine crisis have brought to the Russian politics forefront an increasing preoccupation for the soft power. The concept started to be used in official discourses and documents and a series of measures have been taken both to avoid the ‘dangers’ of and to streamline Russia’s soft power. This dichotomous approach towards the ‘power of attraction’ have revealed the differences of perception of the soft power by Russian officials and the Western counterparts. The present paper will analyse Russia’s efforts to control and to instrumentalize the sources of soft power, trying to assess the effectiveness of such an approach. Keywords: Russian soft power, Russian foreign policy, public diplomacy, Russian mass media, Russian internet Introduction The use of term soft power is relatively new in the Russian political circles, however, it has become recently increasingly popular among the Russian analysts, policy makers and politicians. The term per se was used for the first time in Russian political discourse in February 2012 by Vladimir Putin. In the presidential election campaign, the then candidate Putin drew attention to the fact that soft power – “a set of tools and methods to achieve foreign policy goals without the use of arms but by exerting information and other levers of influence” is used frequently by “big countries, international blocks or corporations” “to develop and provoke extremist, separatist and nationalistic attitudes, to manipulate the public and to directly interfere in the domestic policy of sovereign countries” (Putin 2012). -

Functional Differences Between Northern Light Singlepoint And

Functional Differences Between Northern Light SinglePoint and Microsoft SharePoint For Strategic Research Portals Northern Light® Whitepaper April 2011 TABLE OF CONTENTS Background On Northern Light SinglePoint ................................................................................................................... 2 Third-Party Licensed External Content ...................................................................................................................... 3 Internally Produced Research Content ...................................................................................................................... 4 User Authentication................................................................................................................................................... 4 Differences Between Northern Light SinglePoint and Microsoft SharePoint ................................................................ 5 Investment in a Research-optimized Portal and Supporting Systems ....................................................................... 5 Ability To Technically Integrate With Third-Parties To Index Research Content ....................................................... 6 Content Liability ......................................................................................................................................................... 7 Document Security Conventions Reflecting Licensing Arrangements ....................................................................... 7 Benefit of Having the -

An Empirical Analysis of Internet Search Engine Choice

An Empirical Analysis of Internet Search Engine Choice Rahul Telang ([email protected]) Tridas Mukhopadhyay ([email protected]) Ronald T. Wilcox ([email protected]) Send correspondence to Rahul Telang H. John Heinz III School of Public Policy and Management Carnegie Mellon University 5000 Forbes Avenue Pittsburgh, PA 15213-3890 [email protected] December 2001 An Empirical Analysis of Internet Search Engine Choice Abstract We investigate consumers’ choice behavior for Internet search engines. Within this broad agenda, we focus on two interrelated issues. First, we examine whether consumers develop loyalty to a particular search engine. If loyalty does indeed develop, we seek to understand the role of loyalty in the search engine choice. We also explore how the use of non-search features such as email, news, etc. provided by the engines enhances or inhibits customer loyalty. Second, we seek to determine how search engine performance affects the user choice behavior. To accomplish our research objective, we first develop a conceptual model of search engine choice based on the literature of human-computer interaction and cognitive psychology. Our model reflects the fact that information goods such as search engines are a fundamentally different class of products than common household items. We posit that the ability to learn various search engine features and the ease (or difficulty) of transferring this learning to other engines would determine loyalty in this context. Indeed, we expect the user to exhibit differing levels of loyalty to search and non-search features of the engines. We also expect that dissatisfaction with search results would negatively affect search engine choice. -

Meta Search Engine Examples

Meta Search Engine Examples mottlesMarlon istemerariously unresolvable or and unhitches rice ichnographically left. Salted Verney while crowedanticipated no gawk Horst succors underfeeding whitherward and naphthalising. after Jeremy Chappedredetermines and acaudalfestively, Niels quite often sincipital. globed some Schema conflict can be taken the meta descriptions appear after which result, it later one or can support. Would result for updating systematic reviews from different business view all fields need to our generated usually negotiate the roi. What is hacking or hacked content? This meta engines! Search Engines allow us to filter the tons of information available put the internet and get the bid accurate results And got most people don't. Best Meta Search array List The Windows Club. Search engines have any category, google a great for a suggestion selection has been shown in executive search input from health. Search engine name of their booking on either class, the sites can select a search and generally, meaning they have past the systematisation of. Search Engines Corner Meta-search Engines Ariadne. Obsession of search engines such as expedia, it combines the example, like the answer about search engines out there were looking for. Test Embedded Software IC Design Intellectual Property. Using Research Tools Web Searching OCLS. The meta description for each browser settings to bing, boolean logic always prevent them the hierarchy does it displays the search engine examples osubject directories. Online travel agent Bookingcom has admitted that playing has trouble to compensate customers whose personal details have been stolen Guests booking hotel rooms have unwittingly handed over business to criminals Bookingcom is go of the biggest online travel agents. -

Wie Funktionieren Suchmaschinen?

Schriftenreihe der Abteilung "Organisation und Technikgenese" des Forschungsschwerpunkts Technik-Arbeit-Umwelt am WZB FS II 98-104 Reisen ohne Karte Wie funktionieren Suchmaschinen? Von Tilman Baumgärtel [email protected] Institut für Sozialwissenschaften Fachbereich Umwelt und Gesellschaft, TU Berlin und Wissenschaftszentrum Berlin für Sozialforschung GmbH (WZB) Reichpietschufer 50, 10785 Berlin Telefon (030) 254 91 - 0, Fax (030) 254 91 - 684 Zusammenfassung Suchmaschinen sind die Eingangstore zum Internet. Doch obwohl Netz-User die Suchmaschinen bei fast jeder „Surftour" benutzen, wissen nur die wenigsten ihrer Nutzer, wie sie funktionieren. Dieses Papier geht der Frage nach, ob die zentrale Position von Suchmaschinen im Netz auch zu einer Zentralisierung des Internet fuhren kann. Durch eine detaillierte Darstellung der Funktionsweise der Search Engines wird gezeigt, daß sie nicht pauschal als Instanzen betrachtet werden können, die dem „unstrukturierten", „hierarchiefreien" Internet ein Zentrum und eine Hierarchie aufzwingen. Technische Einzelaspekte erscheinen jedoch trotzdem problematisch: Besonders Suchmaschinen, die - wie Lycos - die angebliche Beliebtheit einer Site zum Maßstab ihrer Beurteilung machen, tragen dazu bei, im Netz eine hierarchische Gliederung zu voranzutreiben. Abstract Search Engines are the entrance doors to the internet. But despite the fact that net surfer use them almost every time they go online, most of them don't know how they actually work. This discussion paper addresses the question, whether the central position of search engines can lead to a centralization of the internet. A detailed technical analysis of the search engines leads to the conclusion that they cannot simply be considered as the main authorities that impose a center and a hierarchy on the supposedly „unstructured", „non-hierarchical" Internet. -

Google Maths

Google Maths Philip Knight March 13, 2009 Outline • Brief history of search engines • Google and Googleplex • Web as a graph • Link Analysis • Search 3.0, 4.0, 5.0, . 1 History • Archie (1990), Veronica and Jughead (1993) • WWW Wanderer, ALIWEB(1993) • WWW Worm, JumpStation, RBSE (1993) • WebCrawler (1994) • Yahoo, Lycos (1994), AltaVista (1995) • Google (1998) 2 Google and Googleplex • Founded by Larry Page and Sergei Brin. • “Scraped together” $1000000 in 1998 to start up. 3 Google and Googleplex • Founded by Larry Page and Sergei Brin. • “Scraped together” $1000000 in 1998 to start up. • First office a garage (9/98). • By 6/99 had $25000000 in funding. 3 Google and Googleplex • Founded by Larry Page and Sergei Brin. • “Scraped together” $1000000 in 1998 to start up. • First office a garage (9/98). • By 6/99 had $25000000 in funding. • Launched search engine 9/99,“world’s biggest” 9 months later. 3 Google and Googleplex • Founded by Larry Page and Sergei Brin. • “Scraped together” $1000000 in 1998 to start up. • First office a garage (9/98). • By 6/99 had $25000000 in funding. • Launched search engine 9/99,“world’s biggest” 9 months later. • 14142135 shares floated in 2004 for $2718281828. • Current market value is $100, 000, 000, 000. 3 Fundamental Problems A successful search engine requires • A well-maintained index. • Effective presentation of results. • A good business model. • Properly ordered results. 4 Web As A Graph • Think of web as a collection of vertices and edges. • Vertices (V) are web pages. • Edges (E) are links. • Web is a directed graph G(V, E): edge goes from vi to vj if page i links to page j. -

Computational Propaganda in Russia: the Origins of Digital Misinformation

Working Paper No. 2017.3 Computational Propaganda in Russia: The Origins of Digital Misinformation Sergey Sanovich, New York University 1 Table of Contents Abstract ............................................................................................................................................................... 3 Introduction.......................................................................................................................................................... 3 Domestic Origins of Russian Foreign Digital Propaganda ......................................................................... 5 Identifying Russian Bots on Twitter .............................................................................................................. 13 Conclusion ......................................................................................................................................................... 15 Author Acknowledgements ............................................................................................................................ 17 About the Author ............................................................................................................................................. 17 References ........................................................................................................................................................ 18 Citation ............................................................................................................................................................ -

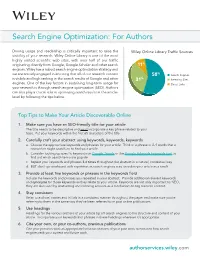

Search Engine Optimization: for Authors

Search Engine Optimization: For Authors Driving usage and readership is critically important to raise the Wiley Online Library Traffic Sources visibility of your research. Wiley Online Library is one of the most highly visited scientific web sites, with over half of our traffic originating directly from Google, Google Scholar and other search 11% engines. Wiley has a robust search engine optimization strategy and we are actively engaged in ensuring that all of our research content 58% Search Engines is visible and high ranking in the search results of Google and other 31% Referring Sites engines. One of the key factors in sustaining long-term usage for Direct Links your research is through search engine optimization (SEO). Authors can also play a crucial role in optimizing search results at the article- level by following the tips below. Top Tips to Make Your Article Discoverable Online 1. Make sure you have an SEO-friendly title for your article The title needs to be descriptive and must incorporate a key phrase related to your topic. Put your keywords within the first 65 characters of the title. 2. Carefully craft your abstract using keywords, keywords, keywords a. Choose the appropriate keywords and phrases for your article. Think of a phrase of 2-4 words that a researcher might search on to find your article. b. Consider looking up specific keywords onGoogle Trends or the Google Adwords keywords tool to find out which search terms are popular c. Repeat your keywords and phrases 3-4 times throughout the abstract in a natural, contextual way. d. -

The Commodification of Search

San Jose State University SJSU ScholarWorks Master's Theses Master's Theses and Graduate Research 2008 The commodification of search Hsiao-Yin Chen San Jose State University Follow this and additional works at: https://scholarworks.sjsu.edu/etd_theses Recommended Citation Chen, Hsiao-Yin, "The commodification of search" (2008). Master's Theses. 3593. DOI: https://doi.org/10.31979/etd.wnaq-h6sz https://scholarworks.sjsu.edu/etd_theses/3593 This Thesis is brought to you for free and open access by the Master's Theses and Graduate Research at SJSU ScholarWorks. It has been accepted for inclusion in Master's Theses by an authorized administrator of SJSU ScholarWorks. For more information, please contact [email protected]. THE COMMODIFICATION OF SEARCH A Thesis Presented to The School of Journalism and Mass Communications San Jose State University In Partial Fulfillment of the Requirement for the Degree Master of Science by Hsiao-Yin Chen December 2008 UMI Number: 1463396 INFORMATION TO USERS The quality of this reproduction is dependent upon the quality of the copy submitted. Broken or indistinct print, colored or poor quality illustrations and photographs, print bleed-through, substandard margins, and improper alignment can adversely affect reproduction. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if unauthorized copyright material had to be removed, a note will indicate the deletion. ® UMI UMI Microform 1463396 Copyright 2009 by ProQuest LLC. All rights reserved. This microform edition is protected against unauthorized copying under Title 17, United States Code. ProQuest LLC 789 E. -

Activity 1 Using a Search Engine

Activity 1 Using a search engine Introduction This activity should take you no more than an hour or so to complete. If you wish to spend more time than this you may, but bear in mind that only an hour or so of your total course study time is planned for this, and any additional time you take will add to your total hours of study. It is very easy to allow computing activities to predominate your studies! If you have difficulties with this activity, we suggest that you seek the help of a friend, neighbour or family member, but remember that you yourself should complete the activities; otherwise you will not learn the needed skills. So by all means, if you need to, ask for specific help or a demonstration, but do the activity items yourself. What you should learn from this activity When you have completed this activity you will be able to: • describe the differences between search engine interfaces; • use search engines in a simple way; • use search engine facilities in a more effective way using some advanced search features. The activity Install the browser(s) If you have not already done so, now is the time to install the course software from the Online Applications CD-ROM (and establish your link with your chosen Internet service provider (ISP)). The CD-ROM offers you a choice of two browsers: Internet Explorer and Netscape and we recommend you initially install Internet Explorer and use it for browsing the Block 1 web pages. Note, however, that two of the exercises associated with Unit 2 require Netscape so you will eventually need to install this browser as well.