Development and Biological Analysis of a Neural Network Based Genomic Compression System

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Tsvi Tlusty – C.V

TSVI TLUSTY – C.V. 06/2021 Center for Soft and Living Matter, Institute for Basic Science, Bldg. (#103), Ulsan National Institute of Science and Technology, 50 UNIST-gil, Ulju-gun, Ulsan 44919, Korea email: [email protected] homepage: life.ibs.re.kr EDUCATION AND EMPLOYMENT 2015– Distinguished Professor, Department of Physics, UNIST, Ulsan 2015– Group Leader, Center for Soft and Living Matter, Institute for Basic Science 2011–2015 Long-term Member, Institute of Advanced Study, Princeton. 2005–2013 Senior researcher, Physics of Complex Systems, Weizmann Institute. 2000–2004 Fellow, Center for Physics and Biology, Rockefeller University, New York. Host: Prof. Albert Libchaber 1995–2000 Ph.D. in Physics, Weizmann Institute, “Universality in Microemulsions”, Supervisor: Prof. Samuel A. Safran. 1991–1995 M.Sc. in Physics, Weizmann Institute. 1988–1990 B.Sc. in Physics and Mathematics (Talpyot), Hebrew University, Jerusalem. Teaching: Landmark Experiments in Biology (2006); Statistical Physics (2007, 2017-20); Information in Biology (2012); Errors and Codes (IAS, 2012); Theory of Living Matter (2016); Students and post-doctoral fellows (03/2020) Pineros William (postdoc, 2019- ) John Mcbride (postdoc, 2018- ) Somya Mani (postdoc, 2018- ) Tamoghna Das (postdoc, 2018- ) Ashwani Tripathi (postdoc, 2018- ) Sandipan Dutta (postdoc, 2016-2021), Prof. at BIRS Pileni, India Vladimir Reinharz (postdoc, 2018-2020), Prof. at U. Montreal. YongSeok Jho (research fellow, 2016-2017), Prof. at GyeongSang U. Yoni Savir (Ph.D., 2005-2011) Prof. at Technion. Adam Lampert (Ph.D., 2008-2012) Prof. at U. Arizona. Arbel Tadmor (M.Sc., 2006-2008) researcher at TRON. Maria Rodriguez Martinez (Postdoc, 2007-2009), PI at IBM Zurich Tamar Friedlander (Postdoc, 2009 -2012) Prof. -

Algorithms for Computational Biology: 8th International Conference, AlCoB 2021, Missoula, MT, USA, June 7–11, 2021, Proceedings

Lecture Notes in Bioinformatics 12715 Subseries of Lecture Notes in Computer Science Series Editors Sorin Istrail Brown University, Providence, RI, USA Pavel Pevzner University of California, San Diego, CA, USA Michael Waterman University of Southern California, Los Angeles, CA, USA Editorial Board Members Søren Brunak Technical University of Denmark, Kongens Lyngby, Denmark Mikhail S. Gelfand IITP, Research and Training Center on Bioinformatics, Moscow, Russia Thomas Lengauer Max Planck Institute for Informatics, Saarbrücken, Germany Satoru Miyano University of Tokyo, Tokyo, Japan Eugene Myers Max Planck Institute of Molecular Cell Biology and Genetics, Dresden, Germany Marie-France Sagot Université Lyon 1, Villeurbanne, France David Sankoff University of Ottawa, Ottawa, Canada Ron Shamir Tel Aviv University, Ramat Aviv, Tel Aviv, Israel Terry Speed Walter and Eliza Hall Institute of Medical Research, Melbourne, VIC, Australia Martin Vingron Max Planck Institute for Molecular Genetics, Berlin, Germany W. Eric Wong University of Texas at Dallas, Richardson, TX, USA More information about this subseries at http://www.springer.com/series/5381 Carlos Martín-Vide • Miguel A. Vega-Rodríguez • Travis Wheeler (Eds.) Algorithms for Computational Biology 8th International Conference, AlCoB 2021 Missoula, MT, USA, June 7–11, 2021 Proceedings 123 Editors Carlos Martín-Vide Miguel A. Vega-Rodríguez Rovira i Virgili University University of Extremadura Tarragona, Spain Cáceres, Spain Travis Wheeler University of Montana Missoula, MT, USA ISSN 0302-9743 ISSN 1611-3349 (electronic) Lecture Notes in Bioinformatics ISBN 978-3-030-74431-1 ISBN 978-3-030-74432-8 (eBook) https://doi.org/10.1007/978-3-030-74432-8 LNCS Sublibrary: SL8 – Bioinformatics © Springer Nature Switzerland AG 2021 This work is subject to copyright. -

Computational Pan-Genomics: Status, Promises and Challenges

bioRxiv preprint doi: https://doi.org/10.1101/043430; this version posted March 12, 2016. The copyright holder for this preprint (which was not certified by peer review) is the author/funder. All rights reserved. No reuse allowed without permission. Computational Pan-Genomics: Status, Promises and Challenges Tobias Marschall1,2, Manja Marz3,60,61,62, Thomas Abeel49, Louis Dijkstra6,7, Bas E. Dutilh8,9,10, Ali Ghaffaari1,2, Paul Kersey11, Wigard P. Kloosterman12, Veli Mäkinen13, Adam Novak15, Benedict Paten15, David Porubsky16, Eric Rivals17,63, Can Alkan18, Jasmijn Baaijens5, Paul I. W. De Bakker12, Valentina Boeva19,64,65,66, Francesca Chiaromonte20, Rayan Chikhi21, Francesca D. Ciccarelli22, Robin Cijvat23, Erwin Datema24,25,26, Cornelia M. Van Duijn27, Evan E. Eichler28, Corinna Ernst29, Eleazar Eskin30,31, Erik Garrison32, Mohammed El-Kebir5,33,34, Gunnar W. Klau5, Jan O. Korbel11,35, Eric-Wubbo Lameijer36, Benjamin Langmead37, Marcel Martin59, Paul Medvedev38,39,40, John C. Mu41, Pieter Neerincx36, Klaasjan Ouwens42,67, Pierre Peterlongo43, Nadia Pisanti44,45, Sven Rahmann29, Ben Raphael46,47, Knut Reinert48, Dick de Ridder50, Jeroen de Ridder49, Matthias Schlesner51, Ole Schulz-Trieglaff52, Ashley Sanders53, Siavash Sheikhizadeh50, Carl Shneider54, Sandra Smit50, Daniel Valenzuela13, Jiayin Wang70,71,72, Lodewyk Wessels56, Ying Zhang23,5, Victor Guryev16,12, Fabio Vandin57,34, Kai Ye68,69,72 and Alexander Schönhuth5 1Center for Bioinformatics, Saarland University, Saarbrücken, Germany; 2Max Planck Institute for Informatics, Saarbrücken, -

The Principled Design of Large-Scale Recursive Neural Network Architectures–DAG-Rnns and the Protein Structure Prediction Problem

Journal of Machine Learning Research 4 (2003) 575-602 Submitted 2/02; Revised 4/03; Published 9/03 The Principled Design of Large-Scale Recursive Neural Network Architectures–DAG-RNNs and the Protein Structure Prediction Problem Pierre Baldi [email protected] Gianluca Pollastri [email protected] School of Information and Computer Science Institute for Genomics and Bioinformatics University of California, Irvine Irvine, CA 92697-3425, USA Editor: Michael I. Jordan Abstract We describe a general methodology for the design of large-scale recursive neural network architectures (DAG-RNNs) which comprises three fundamental steps: (1) representation of a given domain using suitable directed acyclic graphs (DAGs) to connect visible and hidden node variables; (2) parameterization of the relationship between each variable and its parent variables by feedforward neural networks; and (3) application of weight-sharing within appropriate subsets of DAG connections to capture stationarity and control model complexity. Here we use these principles to derive several specific classes of DAG-RNN architectures based on lattices, trees, and other structured graphs. These architectures can process a wide range of data structures with variable sizes and dimensions. While the overall resulting models remain probabilistic, the internal deterministic dynamics allows efficient propagation of information, as well as training by gradient descent, in order to tackle large-scale problems. These methods are used here to derive state-of-the-art predictors for protein structural features such as secondary structure (1D) and both fine- and coarse-grained contact maps (2D). Extensions, relationships to graphical models, and implications for the design of neural architectures are briefly discussed. -
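
The three steps listed in the abstract above can be illustrated with a minimal sketch on the simplest possible DAG, a left-to-right chain. This is not the authors' implementation: the names `make_shared_net` and `propagate_chain`, the single tanh layer, the random weights, and the zero boundary state are all assumptions chosen for illustration.

```python
import math
import random


def make_shared_net(in_dim, hid_dim, seed=0):
    """Step 2: a tiny feedforward map from (visible input, parent state)
    to a hidden state. Weights are random; nothing is trained here."""
    rng = random.Random(seed)
    w_in = [[rng.uniform(-0.5, 0.5) for _ in range(in_dim)]
            for _ in range(hid_dim)]
    w_par = [[rng.uniform(-0.5, 0.5) for _ in range(hid_dim)]
             for _ in range(hid_dim)]

    def net(x, h_parent):
        return [
            math.tanh(
                sum(w * v for w, v in zip(w_in[i], x))
                + sum(w * v for w, v in zip(w_par[i], h_parent))
            )
            for i in range(hid_dim)
        ]

    return net


def propagate_chain(inputs, net, hid_dim):
    """Step 1: the DAG is a chain, so node t has the single parent t-1.
    Step 3: the same `net` is reused at every node (weight sharing)."""
    h = [0.0] * hid_dim  # assumed boundary state for the first node
    states = []
    for x in inputs:  # list order is a topological order of the chain
        h = net(x, h)
        states.append(h)
    return states


net = make_shared_net(in_dim=2, hid_dim=3)
states = propagate_chain([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], net, hid_dim=3)
```

Because one function is applied at every node, the parameter count does not depend on the sequence length, which is the sense in which weight sharing controls model complexity.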

120421-24Recombschedule FINAL.Xlsx

Friday 20 April 18:00 20:00 REGISTRATION OPENS in Fira Palace 20:00 21:30 WELCOME RECEPTION in CaixaForum (access map) Saturday 21 April 8:00 8:50 REGISTRATION 8:50 9:00 Opening Remarks (Roderic GUIGÓ and Benny CHOR) Session 1. Chair: Roderic GUIGÓ (CRG, Barcelona ES) 9:00 10:00 Richard DURBIN The Wellcome Trust Sanger Institute, Hinxton UK "Computational analysis of population genome sequencing data" 10:00 10:20 44 Yaw-Ling Lin, Charles Ward and Steven Skiena Synthetic Sequence Design for Signal Location Search 10:20 10:40 62 Kai Song, Jie Ren, Zhiyuan Zhai, Xuemei Liu, Minghua Deng and Fengzhu Sun Alignment-Free Sequence Comparison Based on Next Generation Sequencing Reads 10:40 11:00 178 Yang Li, Hong-Mei Li, Paul Burns, Mark Borodovsky, Gene Robinson and Jian Ma TrueSight: Self-training Algorithm for Splice Junction Detection using RNA-seq 11:00 11:30 coffee break Session 2. Chair: Bonnie BERGER (MIT, Cambridge US) 11:30 11:50 139 Son Pham, Dmitry Antipov, Alexander Sirotkin, Glenn Tesler, Pavel Pevzner and Max Alekseyev PATH-SETS: A Novel Approach for Comprehensive Utilization of Mate-Pairs in Genome Assembly 11:50 12:10 171 Yan Huang, Yin Hu and Jinze Liu A Robust Method for Transcript Quantification with RNA-seq Data 12:10 12:30 120 Zhanyong Wang, Farhad Hormozdiari, Wen-Yun Yang, Eran Halperin and Eleazar Eskin CNVeM: Copy Number Variation detection Using Uncertainty of Read Mapping 12:30 12:50 205 Dmitri Pervouchine Evidence for widespread association of mammalian splicing and conserved long range RNA structures 12:50 13:10 169 Melissa Gymrek, David Golan, Saharon Rosset and Yaniv Erlich lobSTR: A Novel Pipeline for Short Tandem Repeats Profiling in Personal Genomes 13:10 13:30 217 Rory Stark Differential oestrogen receptor binding is associated with clinical outcome in breast cancer 13:30 15:00 lunch break Session 3. -

Methodology for Predicting Semantic Annotations of Protein Sequences by Feature Extraction Derived of Statistical Contact Potentials and Continuous Wavelet Transform

Universidad Nacional de Colombia Sede Manizales Master’s Thesis Methodology for predicting semantic annotations of protein sequences by feature extraction derived of statistical contact potentials and continuous wavelet transform Author: Gustavo Alonso Arango Argoty Supervisor: Dr. Cesar German Castellanos Dominguez A thesis submitted in fulfillment of the requirements for the degree of Master’s on Engineering - Industrial Automation in the Department of Electronic, Electric Engineering and Computation Signal Processing and Recognition Group June 2014 Universidad Nacional de Colombia Sede Manizales Tesis de Maestría Metodología para predecir la anotación semántica de proteínas por medio de extracción de características derivadas de potenciales de contacto y transformada wavelet continua Autor: Gustavo Alonso Arango Argoty Tutor: Dr. Cesar German Castellanos Dominguez Tesis presentada en cumplimiento a los requerimientos necesarios para obtener el grado de Maestría en Ingeniería en Automatización Industrial en el Departamento de Ingeniería Eléctrica, Electrónica y Computación Grupo de Procesamiento Digital de Señales Enero 2014 UNIVERSIDAD NACIONAL DE COLOMBIA Abstract Faculty of Engineering and Architecture Department of Electronic, Electric Engineering and Computation Master’s on Engineering - Industrial Automation Methodology for predicting semantic annotations of protein sequences by feature extraction derived of statistical contact potentials and continuous wavelet transform by Gustavo Alonso Arango Argoty In this thesis, a method to predict semantic annotations of the proteins from its primary structure is proposed. The main contribution of this thesis lies in the implementation of a novel protein feature representation, which makes use of the pairwise statistical contact potentials describing the protein interactions and geometry at the atomic level. -

BIOGRAPHICAL SKETCH NAME: Berger

BIOGRAPHICAL SKETCH NAME: Berger, Bonnie eRA COMMONS USER NAME (credential, e.g., agency login): BABERGER POSITION TITLE: Simons Professor of Mathematics and Professor of Electrical Engineering and Computer Science EDUCATION/TRAINING (Begin with baccalaureate or other initial professional education, such as nursing, include postdoctoral training and residency training if applicable. Add/delete rows as necessary.) EDUCATION/TRAINING DEGREE Completion (if Date FIELD OF STUDY INSTITUTION AND LOCATION applicable) MM/YYYY Brandeis University, Waltham, MA AB 06/1983 Computer Science Massachusetts Institute of Technology SM 01/1986 Computer Science Massachusetts Institute of Technology Ph.D. 06/1990 Computer Science Massachusetts Institute of Technology Postdoc 06/1992 Applied Mathematics A. Personal Statement Advances in modern biology revolve around automated data collection and sharing of the large resulting datasets. I am considered a pioneer in the area of bringing computer algorithms to the study of biological data, and a founder in this community that I have witnessed grow so profoundly over the last 26 years. I have made major contributions to many areas of computational biology and biomedicine, largely, though not exclusively through algorithmic innovations, as demonstrated by nearly twenty thousand citations to my scientific papers and widely-used software. In recognition of my success, I have just been elected to the National Academy of Sciences and in 2019 received the ISCB Senior Scientist Award, the pinnacle award in computational biology. My research group works on diverse challenges, including Computational Genomics, High-throughput Technology Analysis and Design, Biological Networks, Structural Bioinformatics, Population Genetics and Biomedical Privacy. I spearheaded research on analyzing large and complex biological data sets through topological and machine learning approaches; e.g. -

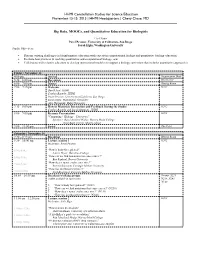

Big Data, MOOCs, and ... (PDF)

HHMI Constellation Studios for Science Education November 13-15, 2015 | HHMI Headquarters | Chevy Chase, MD Big Data, MOOCs, and Quantitative Education for Biologists Co-Chairs Pavel Pevzner, University of California- San Diego Sarah Elgin, Washington University Studio Objectives Discuss existing challenges in bioinformatics education with experts in computational biology and quantitative biology education, Evaluate best practices in teaching quantitative and computational biology, and Collaborate with scientist educators to develop instructional modules to support a biology curriculum that includes quantitative approaches. Friday | November 13 4:00 pm Arrival Registration Desk 5:30 – 6:00 pm Reception Great Hall 6:00 – 7:00 pm Dinner Dining Room 7:00 – 7:15 pm Welcome K202 David Asai, HHMI Cynthia Bauerle, HHMI Pavel Pevzner, University of California-San Diego Sarah Elgin, Washington University Alex Hartemink, Duke University 7:15 – 8:00 pm How to Maximize Interaction and Feedback During the Studio K202 Cynthia Bauerle and Sarah Simmons, HHMI 8:00 – 9:00 pm Keynote Presentation K202 "Computing + Biology = Discovery" Speakers: Ran Libeskind-Hadas, Harvey Mudd College Eliot Bush, Harvey Mudd College 9:00 – 11:00 pm Social The Pilot Saturday | November 14 7:30 – 8:15 am Breakfast Dining Room 8:30 – 10:00 am Lecture session 1 K202 Moderator: Pavel Pevzner 834a-854a “How is body fat regulated?” Laurie Heyer, Davidson College 856a-916a “How can we find mutations that cause cancer?” Ben Raphael, Brown University “How does a tumor evolve over time?” 918a-938a Russell Schwartz, Carnegie Mellon University “How fast do ribosomes move?” 940a-1000a Carl Kingsford, Carnegie Mellon University 10:05 – 10:55 am Breakout working groups Rooms: S221, (coffee available in each room) N238, N241, N140 1. -

Mechanistic Mathematical Modeling of Spatiotemporal Microtubule Dynamics and Regulation in Vivo

Research Collection Doctoral Thesis Mechanistic mathematical modeling of spatiotemporal microtubule dynamics and regulation in vivo Author(s): Widmer, Lukas A. Publication Date: 2018 Permanent Link: https://doi.org/10.3929/ethz-b-000328562 Rights / License: In Copyright - Non-Commercial Use Permitted This page was generated automatically upon download from the ETH Zurich Research Collection. For more information please consult the Terms of use. ETH Library diss. eth no. 25588 MECHANISTIC MATHEMATICAL MODELING OF SPATIOTEMPORAL MICROTUBULE DYNAMICS AND REGULATION IN VIVO A thesis submitted to attain the degree of DOCTOR OF SCIENCES of ETH ZURICH (dr. sc. eth zurich) presented by LUKAS ANDREAS WIDMER msc. eth cbb born on 11. 03. 1987 citizen of luzern and ruswil lu, switzerland accepted on the recommendation of Prof. Dr. Jörg Stelling, examiner Prof. Dr. Yves Barral, co-examiner Prof. Dr. François Nédélec, co-examiner Prof. Dr. Linda Petzold, co-examiner 2018 Lukas Andreas Widmer Mechanistic mathematical modeling of spatiotemporal microtubule dynamics and regulation in vivo © 2018 ACKNOWLEDGEMENTS We are all much more than the sum of our work, and there is a great many whom I would like to thank for their support and encouragement, without which this thesis would not exist. I would like to thank my supervisor, Prof. Jörg Stelling, for giving me the opportunity to conduct my PhD research in his group. Jörg, you have been a great scientific mentor, and the scientific freedom you give your students is something I enjoyed a lot – you made it possible for me to develop my own theories, and put them to the test. I thank you for the trust you put into me, giving me a challenge to rise up to, and for always having an open door, whether in times of excitement or despair. -

Deep Learning in Chemoinformatics Using Tensor Flow

UC Irvine UC Irvine Electronic Theses and Dissertations Title Deep Learning in Chemoinformatics using Tensor Flow Permalink https://escholarship.org/uc/item/963505w5 Author Jain, Akshay Publication Date 2017 Peer reviewed|Thesis/dissertation eScholarship.org Powered by the California Digital Library University of California UNIVERSITY OF CALIFORNIA, IRVINE Deep Learning in Chemoinformatics using Tensor Flow THESIS submitted in partial satisfaction of the requirements for the degree of MASTER OF SCIENCE in Computer Science by Akshay Jain Thesis Committee: Professor Pierre Baldi, Chair Professor Cristina Videira Lopes Professor Eric Mjolsness 2017 © 2017 Akshay Jain DEDICATION To my family and friends. TABLE OF CONTENTS: LIST OF FIGURES; LIST OF TABLES; ACKNOWLEDGMENTS; ABSTRACT OF THE THESIS; 1 Introduction; 1.1 QSAR Prediction Methods; 1.2 Deep Learning; 2 Artificial Neural Networks (ANN); 2.1 Artificial Neuron; 2.2 Activation Function; 2.3 Loss function; 2.4 Optimization; 3 Deep Recursive Architectures; 3.1 Recurrent Neural Networks (RNN); 3.2 Recursive Neural Networks; 3.3 Directed Acyclic Graph Recursive Neural Networks (DAG-RNN); 4 UG-RNN for small molecules; 4.1 DAG Generation; 4.2 Local Information Vector; 4.3 Contextual Vectors; 4.4 Activity Prediction; 4.5 UG-RNN With Contracted Rings (UG-RNN-CR); 4.6 Example: UG-RNN Model of Propionic Acid; 5 Implementation; 6 Data & Results; 6.1 Aqueous Solubility Prediction; 6.2 Melting Point Prediction; 7 Conclusions; Bibliography; A Source Code; A.1 UGRNN -

UC Irvine UC Irvine Previously Published Works

UC Irvine UC Irvine Previously Published Works Title The capacity of feedforward neural networks. Permalink https://escholarship.org/uc/item/29h5t0hf Authors Baldi, Pierre Vershynin, Roman Publication Date 2019-08-01 DOI 10.1016/j.neunet.2019.04.009 License https://creativecommons.org/licenses/by/4.0/ 4.0 Peer reviewed eScholarship.org Powered by the California Digital Library University of California THE CAPACITY OF FEEDFORWARD NEURAL NETWORKS PIERRE BALDI AND ROMAN VERSHYNIN Abstract. A long standing open problem in the theory of neural networks is the development of quantitative methods to estimate and compare the capabilities of different architectures. Here we define the capacity of an architecture by the binary logarithm of the number of functions it can compute, as the synaptic weights are varied. The capacity provides an upperbound on the number of bits that can be extracted from the training data and stored in the architecture during learning. We study the capacity of layered, fully-connected, architectures of linear threshold neurons with $L$ layers of size $n_1, n_2, \ldots, n_L$ and show that in essence the capacity is given by a cubic polynomial in the layer sizes: $C(n_1, \ldots, n_L) = \sum_{k=1}^{L-1} \min(n_1, \ldots, n_k)\, n_k n_{k+1}$, where layers that are smaller than all previous layers act as bottlenecks. In proving the main result, we also develop new techniques (multiplexing, enrichment, and stacking) as well as new bounds on the capacity of finite sets. We use the main result to identify architectures with maximal or minimal capacity under a number of natural constraints. -
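
As a worked illustration of the cubic polynomial quoted in the abstract above, the following sketch evaluates the sum over k from 1 to L-1 of min(n_1, ..., n_k) * n_k * n_{k+1} for a given list of layer sizes. The function name `capacity` is an assumption made here, and since the abstract says the capacity is given by this polynomial only "in essence", the result should be read as a leading-order estimate in bits rather than an exact count.

```python
def capacity(layer_sizes):
    """Evaluate sum_{k=1}^{L-1} min(n_1..n_k) * n_k * n_{k+1}.

    layer_sizes: [n_1, ..., n_L], positive integers, input layer first.
    """
    if len(layer_sizes) < 2:
        raise ValueError("need at least an input layer and one more layer")
    total = 0
    running_min = layer_sizes[0]  # min(n_1, ..., n_k), updated as k grows
    for n_k, n_next in zip(layer_sizes, layer_sizes[1:]):
        running_min = min(running_min, n_k)
        total += running_min * n_k * n_next
    return total


# Layers 10 -> 5 -> 20 -> 1: the size-5 layer is a bottleneck,
# so it caps the min term for every later layer.
# k=1: 10*10*5 = 500, k=2: 5*5*20 = 500, k=3: 5*20*1 = 100
print(capacity([10, 5, 20, 1]))  # 1100
```

Keeping a running minimum makes the evaluation linear in the number of layers and makes the bottleneck effect visible: once a narrow layer appears, widening later layers increases the estimate only linearly through the `n_k * n_next` factor.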

Course Outline

Department of Computer Science and Software Engineering COMP 6811 Bioinformatics Algorithms (Reading Course) Fall 2019 Section AA Instructor: Gregory Butler Curriculum Description COMP 6811 Bioinformatics Algorithms (4 credits) The principal objectives of the course are to cover the major algorithms used in bioinformatics; sequence alignment, multiple sequence alignment, phylogeny; classifying patterns in sequences; secondary structure prediction; 3D structure prediction; analysis of gene expression data. This includes dynamic programming, machine learning, simulated annealing, and clustering algorithms. Algorithmic principles will be emphasized. A project is required. Outline of Topics The course will focus on algorithms for protein sequence analysis. It will not cover genome assembly, genome mapping, or gene recognition. • Background in Biology and Genomics • Sequence Alignment: Pairwise and Multiple • Representation of Protein Amino Acid Composition • Profile Hidden Markov Models • Specificity Determining Sites • Curation, Annotation, and Ontologies • Machine Learning: Secondary Structure, Signals, Subcellular Location • Protein Families, Phylogenomics, and Orthologous Groups • Profile-Based Alignments • Algorithms Based on k-mers Texts in Library D. Higgins and W. Taylor (editors). Bioinformatics: Sequence, Structure and Databanks, Oxford University Press, 2000. A. D. Baxevanis and B. F. F. Ouellette. Bioinformatics: A Practical Guide to the Analysis of Genes and Proteins, Wiley, 1998. Richard Durbin, Sean R. Eddy, Anders Krogh,