Corpus for Customer Purchase Behavior Prediction in Social Media

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

-

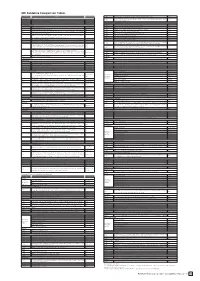

GRI Guideline Comparison Tables

Data and Information

1. Strategy and Profile

| Item | Indicators | Pages in this Report |
|---|---|---|
| 1.1 | Statement from the most senior decision-maker of the organization (e.g., CEO, chair, or equivalent senior position) about the relevance of sustainability to the organization and its strategy. | 2-3 |
| 1.2 | Description of key impacts, risks, and opportunities. | 28-29 |

2. Organizational Profile

| Item | Indicators | Pages in this Report |
|---|---|---|
| 2.1 | Name of the organization. | Editorial Policy, 94 |
| 2.2 | Primary brands, products, and/or services. | 94 |
| 2.3 | Operational structure of the organization, including main divisions, operating companies, subsidiaries, and joint ventures. | 95 |
| 2.4 | Location of organization’s headquarters. | 95 |
| 2.5 | Number of countries where the organization operates, and names of countries with either major operations or that are specifically relevant to the sustainability issues covered in the report. | 95 |

Environmental Aspects

| Item | Indicators | Pages in this Report |
|---|---|---|
| ○EN14 | Strategies, current actions, and future plans for managing impacts on biodiversity. | 28, 67-69 |
| ○EN15 | Number of IUCN Red List species and national conservation list species with habitats in areas affected by operations, by level of extinction risk. | None |

Emissions, Effluents, and Waste

| Item | Indicators | Pages in this Report |
|---|---|---|
| ◎EN16 | Total direct and indirect greenhouse gas emissions by weight. | 86-90 >>> Environmental monitoring |
| ◎EN17 | Other relevant indirect greenhouse gas emissions by weight. | 86-90 |
| ◎EN18 | Initiatives to reduce greenhouse gas emissions and reductions achieved. | 16-19 >>> Installing plumbing and tanks above ground |
| ◎EN19 | Emissions of ozone-depleting substances by weight. | 86-90 |
| ◎EN20 | NOx, SOx, and other significant air emissions by type and weight. | 86-90 |
| ◎EN21 | Total water discharge by quality and destination. | 86-90 >>> Legal compliance and reports on complaints |

Annual Report 2015 at Fujifilm, We Are Continuously Innovating — Creating New Technologies, Products and Services That Inspire and Excite People Everywhere

Annual Report 2015

At Fujifilm, we are continuously innovating, creating new technologies, products and services that inspire and excite people everywhere. Our goal is to empower the potential and expand the horizons of tomorrow’s businesses and lifestyles.

To Our Stakeholders

Fujifilm Enters a New Growth Phase

In 2014, the Fujifilm Group formulated a new corporate slogan, “Value from Innovation,” to express its commitment to providing value to society through the continuous creation of innovative technologies, products, and services in the future. Based on this slogan, the Fujifilm Group compiled the mid-term CSR plan “Sustainable Value Plan 2016” as a guide for its proactive initiatives to seize opportunities for business growth and help solve social issues related to the environment, health, daily life, and working style. In the health field, for example, we support improvement in medical services in Asia, Africa, and other emerging countries by supplying medical diagnostic equipment and implementing educational programs for medical practitioners.

The Fujifilm Group undertook major business restructuring when its core business was at risk of disappearing amid plummeting demand for photographic film after 2000, due to digitization. Through this restructuring, we have built a business foundation that is capable of generating a stable cash flow every year. By balancing the allocation of this cash flow to growth investments and shareholder returns, Fujifilm is transitioning to a new phase of achieving profit growth and ROE improvement.

Under the medium-term management plan VISION 2016 announced in November 2014, Fujifilm aims to enhance shareholder returns and fulfill its business portfolio to attain sustained growth in the medium-to-long term by accelerating growth in the core healthcare, highly functional materials, and document business fields and by improving profitability across all businesses.

Sustainability Report 2007

FUJIFILM Holdings Sustainability Report 2007

Sustainability Report 2007 (PDF:8.4MB)

CONTENTS

FUJIFILM Holdings Corporation Sustainability Report 2007

- 03 Message from the CEO
- 05 Editorial Policy
- 07 Business Overview

09 Feature: Enhancing Quality of Life

Enhancing Quality of Life through Products and Services

- 11 Absorption and Permeation for Healthcare
- 12 Making Prints at Convenience Stores
- 13 Striving to Enhance the Health of People Everywhere

Enhancing Quality of Life through Our Relationship with Society

- 15 Fostering Culture to Provide Value to a Broad Range of People
- 16 JOHO-JUKU Information School Stimulating Creativity
- 17 “Mirai(Future)” Green Map
- 19 International Resource Recycling System
- 21 Business Endeavors to Create a Recycling-based Society

Pursuing Quality of Life through Communication

- 23 Social Contributions Forum
- 24 Environmental Forum

Implementing CSR Activities

CSR Management

- 36 CSR Management
- 37 Corporate Governance
- 38 Compliance and Risk Management
- 43 Management Systems
- 45 CSR Activities at Overseas Sites

Communication with Stakeholders: Social Activities Report

- 49 Communication with Stakeholders
- 51 Communication with Customers
- 54 Communication with Shareholders and Investors
- 55 Relationships with Our Suppliers
- 58 Communication with Our Employees
- 62 Contributing to the Community

Relationship with the Global Environment: Environmental Activities Report

- 66 Implementation of Environmental Activities
- 67 Fujifilm Group Green Policy
- 69 Business Activities and the Environmental Burden
- 70 Design for Environment
- 71 Chemical Substance Management
- 73 Working to Prevent Global Warming
- 75 Environmental Measures in Logistical

Achieving Our “Second Foundation” and Increasing

Message from the CEO

Achieving Our “Second Foundation” and Increasing

The Thinking Behind Our Second Foundation

Here in the Fujifilm Group, we are all working together to build a new strengthened business structure aimed toward our “Second Foundation” amid the major changes transcending predictions such as the digitalization of photographs. We are supported by many stakeholders including customers, photo lovers, shareholders, investors, employees, local communities and business partners. We must appropriately respond to changes in the business environment and society, while meeting the expectations and demands of these stakeholders, in addition to contributing to society. The significance of our devotion to achieving our Second Foundation can be seen here.

Structural Reform and Strengthening of Consolidated Management

In 2004, we formulated the VISION75 medium-term business plan aimed at the fiscal year 2009, which marks 75 years since the company was founded. We have been conducting this plan through structural reforms while focusing our management resources on existing business growth and on new fields of business. Structural reforms do not simply involve cutting back on staff. We seriously worked to find out how important management resources can be effectively and efficiently redistributed. However, it was by no means an easy decision to reduce what used to be core businesses and streamline organizations. Nevertheless, we believe that having the courage to carry out our Second Foundation is the real message we want to convey to the people working with us. Without such reforms, we are unable to display the social value of the company. Looking back over the fiscal year 2006, the VISION75 (2006) plan progressed smoothly, and we were able to achieve results surpassing original targets.

FUJIFILM Sustainable Value Plan 2016

Under the corporate slogan, “Value from Innovation,” established to coincide with our 80th anniversary, the Fujifilm Group has created a new Medium-Term CSR Plan covering FY2014 to FY2016, titled, “Sustainable Value Plan 2016” (SVP 2016), and commenced work on its implementation. Following SVP 2016, we aim to be a corporation that contributes to the “development of sustainable society” by proactively creating “new value” toward resolving social issues.

[Figure: priority issues across the Medium-Term CSR Plan FY2007-2009, the Medium-Term CSR Plan FY2010-2013, and the New Medium-Term CSR Plan FY2014-2016. Labels: thorough implementation of sound corporate governance and compliance (legal compliance and taking responsibility as a corporate citizen); reduction of impact on environment and society, across the value chain, product lifecycles, and world-wide; solve social issues through business activities actively, with company-wide efforts.]

Creating the Triple Promotion Policy

The SVP 2016 Triple Promotion Policy was established in the following four steps. Please visit the link below for details of the process.

http://www.fujifilmholdings.com/en/sustainability/valuePlan2016/process/index.html

STEP 1: Clarifying the Basic Policies

Making it clear in the Basic Policies through a review of existing CSR activities.

Social Background & Basic Approach

At present, global warming and other environmental issues are in a state of crisis. In addition, social issues such as [...] social issues through products, services, and technologies.” We enhance collaboration between our business activities and social issues under the heightened expectation for the global companies to solve the worsening environmental [...]

[Figure: Matrix on Social Issues and Fujifilm Group’s Products, Services, and Technologies]
