Half-Sync/Half-Async an Architectural

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Patterns Senior Member of Technical Staff and Pattern Languages Knowledge Systems Corp

Kyle Brown An Introduction to Patterns Senior Member of Technical Staff and Pattern Languages Knowledge Systems Corp. 4001 Weston Parkway CSC591O Cary, North Carolina 27513-2303 April 7-9, 1997 919-481-4000 Raleigh, NC [email protected] http://www.ksccary.com Copyright (C) 1996, Kyle Brown, Bobby Woolf, and 1 2 Knowledge Systems Corp. All rights reserved. Overview Bobby Woolf Senior Member of Technical Staff O Patterns Knowledge Systems Corp. O Software Patterns 4001 Weston Parkway O Design Patterns Cary, North Carolina 27513-2303 O Architectural Patterns 919-481-4000 O Pattern Catalogs [email protected] O Pattern Languages http://www.ksccary.com 3 4 Patterns -- Why? Patterns -- Why? !@#$ O Learning software development is hard » Lots of new concepts O Must be some way to » Hard to distinguish good communicate better ideas from bad ones » Allow us to concentrate O Languages and on the problem frameworks are very O Patterns can provide the complex answer » Too much to explain » Much of their structure is incidental to our problem 5 6 Patterns -- What? Patterns -- Parts O Patterns are made up of four main parts O What is a pattern? » Title -- the name of the pattern » A solution to a problem in a context » Problem -- a statement of what the pattern solves » A structured way of representing design » Context -- a discussion of the constraints and information in prose and diagrams forces on the problem »A way of communicating design information from an expert to a novice » Solution -- a description of how to solve the problem » Generative: -

Chrooting All Services in Linux

LinuxFocus article number 225 http://linuxfocus.org Chrooting All Services in Linux by Mark Nielsen (homepage) About the author: Abstract: Mark works as an independent consultant Chrooted system services improve security by limiting damage that donating time to causes like someone who broke into the system can possibly do. GNUJobs.com, writing _________________ _________________ _________________ articles, writing free software, and working as a volunteer at eastmont.net. Introduction What is chroot? Chroot basically redefines the universe for a program. More accurately, it redefines the "ROOT" directory or "/" for a program or login session. Basically, everything outside of the directory you use chroot on doesn't exist as far a program or shell is concerned. Why is this useful? If someone breaks into your computer, they won't be able to see all the files on your system. Not being able to see your files limits the commands they can do and also doesn't give them the ability to exploit other files that are insecure. The only drawback is, I believe it doesn't stop them from looking at network connections and other stuff. Thus, you want to do a few more things which we won't get into in this article too much: Secure your networking ports. Have all services run as a service under a non-root account. In addition, have all services chrooted. Forward syslogs to another computer. Analyze logs files Analyze people trying to detect random ports on your computer Limit cpu and memory resources for a service. Activate account quotas. The reason why I consider chroot (with a non-root service) to be a line of defense is, if someone breaks in under a non-root account, and there are no files which they can use to break into root, then they can only limit damage to the area they break in. -

The Linux Kernel Module Programming Guide

The Linux Kernel Module Programming Guide Peter Jay Salzman Michael Burian Ori Pomerantz Copyright © 2001 Peter Jay Salzman 2007−05−18 ver 2.6.4 The Linux Kernel Module Programming Guide is a free book; you may reproduce and/or modify it under the terms of the Open Software License, version 1.1. You can obtain a copy of this license at http://opensource.org/licenses/osl.php. This book is distributed in the hope it will be useful, but without any warranty, without even the implied warranty of merchantability or fitness for a particular purpose. The author encourages wide distribution of this book for personal or commercial use, provided the above copyright notice remains intact and the method adheres to the provisions of the Open Software License. In summary, you may copy and distribute this book free of charge or for a profit. No explicit permission is required from the author for reproduction of this book in any medium, physical or electronic. Derivative works and translations of this document must be placed under the Open Software License, and the original copyright notice must remain intact. If you have contributed new material to this book, you must make the material and source code available for your revisions. Please make revisions and updates available directly to the document maintainer, Peter Jay Salzman <[email protected]>. This will allow for the merging of updates and provide consistent revisions to the Linux community. If you publish or distribute this book commercially, donations, royalties, and/or printed copies are greatly appreciated by the author and the Linux Documentation Project (LDP). -

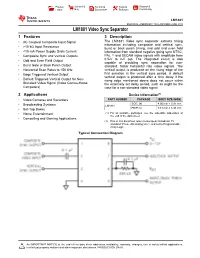

LM1881 Video Sync Separator Datasheet

Product Sample & Technical Tools & Support & Folder Buy Documents Software Community LM1881 SNLS384G –FEBRUARY 1995–REVISED JUNE 2015 LM1881 Video Sync Separator 1 Features 3 Description The LM1881 Video sync separator extracts timing 1• AC Coupled Composite Input Signal information including composite and vertical sync, • >10-kΩ Input Resistance burst or back porch timing, and odd and even field • <10-mA Power Supply Drain Current information from standard negative going sync NTSC, • Composite Sync and Vertical Outputs PAL (1) and SECAM video signals with amplitude from • Odd and Even Field Output 0.5-V to 2-V p-p. The integrated circuit is also capable of providing sync separation for non- • Burst Gate or Back Porch Output standard, faster horizontal rate video signals. The • Horizontal Scan Rates to 150 kHz vertical output is produced on the rising edge of the • Edge Triggered Vertical Output first serration in the vertical sync period. A default vertical output is produced after a time delay if the • Default Triggered Vertical Output for Non- rising edge mentioned above does not occur within Standard Video Signal (Video Games-Home the externally set delay period, such as might be the Computers) case for a non-standard video signal. 2 Applications Device Information(1) • Video Cameras and Recorders PART NUMBER PACKAGE BODY SIZE (NOM) SOIC (8) 4.90 mm × 3.91 mm • Broadcasting Systems LM1881 • Set-Top Boxes PDIP (8) 9.81 mm × 6.35 mm • Home Entertainment (1) For all available packages, see the orderable addendum at the end of the data sheet. • Computing and Gaming Applications (1) PAL in this datasheet refers to European broadcast TV standard “Phase Alternating Line”, and not to Programmable Array Logic. -

Capturing Interactions in Architectural Patterns

Capturing Interactions in Architectural Patterns Dharmendra K Yadav Rushikesh K Joshi Department of Computer Science and Engineering Department of Computer Science and Engineering Indian Institute of Technology Bombay Indian Institute of Technology Bombay Powai, Mumbai 400076, India Powai, Mumbai 400076, India Email: [email protected] Email: [email protected] TABLE I Abstract—Patterns of software architecture help in describing ASUMMARY OF CCS COMBINATORS structural and functional properties of the system in terms of smaller components. The emphasis of this work is on capturing P rimitives & Descriptions Architectural the aspects of pattern descriptions and the properties of inter- Examples Significance component interactions including non-deterministic behavior. Prefix (.) Action sequence intra-component Through these descriptions we capture structural and behavioral p1.p2 sequential flow specifications as well as properties against which the specifications Summation (+) Nondeterminism choice within a component are verified. The patterns covered in this paper are variants of A1 + A2 Proxy, Chain, MVC, Acceptor-Connector, Publisher-Subscriber Composition (|) Connect matching multiple connected and Dinning Philosopher patterns. While the machines are CCS- A1 | A2 i/o ports in assembly components based, the properties have been described in Modal µ-Calculus. Restriction (\) Hiding ports from Internal The approach serves as a framework for precise architectural A\{p1, k1, ..} further composition features descriptions. Relabeling ([]) Renaming of ports syntactic renaming A[new/old, ..] I. INTRODUCTION In component/connector based architectural descriptions [6], [13], components are primary entities having identities in the represents interface points as ports uses a subset of CSP for system and connectors provide the means for communication it’s formal semantics. -

Wiki As Pattern Language

Wiki as Pattern Language Ward Cunningham Cunningham and Cunningham, Inc.1 Sustasis Foundation Portland, Oregon Michael W. Mehaffy Faculty of Architecture Delft University of Technology2 Sustasis Foundation Portland, Oregon Abstract We describe the origin of wiki technology, which has become widely influential, and its relationship to the development of pattern languages in software. We show here how the relationship is deeper than previously understood, opening up the possibility of expanded capability for wikis, including a new generation of “federated” wiki. [NOTE TO REVIEWERS: This paper is part first-person history and part theory. The history is given by one of the participants, an original developer of wiki and co-developer of pattern language. The theory points toward future potential of pattern language within a federated, peer-to-peer framework.] 1. Introduction Wiki is today widely established as a kind of website that allows users to quickly and easily share, modify and improve information collaboratively (Leuf and Cunningham, 2001). It is described on Wikipedia – perhaps its best known example – as “a website which allows its users to add, modify, or delete its content via a web browser usually using a simplified markup language or a rich-text editor” (Wikipedia, 2013). Wiki is so well established, in fact, that a Google search engine result for the term displays approximately 1.25 billion page “hits”, or pages on the World Wide Web that include this term somewhere within their text (Google, 2013a). Along with this growth, the definition of what constitutes a “wiki” has broadened since its introduction in 1995. Consider the example of WikiLeaks, where editable content would defeat the purpose of the site. -

Enterprise Integration Patterns Introduction

Enterprise Integration Patterns Gregor Hohpe Sr. Architect, ThoughtWorks [email protected] July 23, 2002 Introduction Integration of applications and business processes is a top priority for many enterprises today. Requirements for improved customer service or self-service, rapidly changing business environments and support for mergers and acquisitions are major drivers for increased integration between existing “stovepipe” systems. Very few new business applications are being developed or deployed without a major focus on integration, essentially making integratability a defining quality of enterprise applications. Many different tools and technologies are available in the marketplace to address the inevitable complexities of integrating disparate systems. Enterprise Application Integration (EAI) tool suites offer proprietary messaging mechanisms, enriched with powerful tools for metadata management, visual editing of data transformations and a series of adapters for various popular business applications. Newer technologies such as the JMS specification and Web Services standards have intensified the focus on integration technologies and best practices. Architecting an integration solution is a complex task. There are many conflicting drivers and even more possible ‘right’ solutions. Whether the architecture was in fact a good choice usually is not known until many months or even years later, when inevitable changes and additions put the original architecture to test. There is no cookbook for enterprise integration solutions. Most integration vendors provide methodologies and best practices, but these instructions tend to be very much geared towards the vendor-provided tool set and often lack treatment of the bigger picture, including underlying guidelines and principles. As a result, successful enterprise integration architects tend to be few and far in between and have usually acquired their knowledge the hard way. -

Practical Session 1, System Calls a Few Administrative Notes…

Operating Systems, 142 Tas: Vadim Levit , Dan Brownstein, Ehud Barnea, Matan Drory and Yerry Sofer Practical Session 1, System Calls A few administrative notes… • Course homepage: http://www.cs.bgu.ac.il/~os142/ • Contact staff through the dedicated email: [email protected] (format the subject of your email according to the instructions listed in the course homepage) • Assignments: Extending xv6 (a pedagogical OS) Submission in pairs. Frontal checking: 1. Assume the grader may ask anything. 2. Must register to exactly one checking session. System Calls • A System Call is an interface between a user application and a service provided by the operating system (or kernel). • These can be roughly grouped into five major categories: 1. Process control (e.g. create/terminate process) 2. File Management (e.g. read, write) 3. Device Management (e.g. logically attach a device) 4. Information Maintenance (e.g. set time or date) 5. Communications (e.g. send messages) System Calls - motivation • A process is not supposed to access the kernel. It can’t access the kernel memory or functions. • This is strictly enforced (‘protected mode’) for good reasons: • Can jeopardize other processes running. • Cause physical damage to devices. • Alter system behavior. • The system call mechanism provides a safe mechanism to request specific kernel operations. System Calls - interface • Calls are usually made with C/C++ library functions: User Application C - Library Kernel System Call getpid() Load arguments, eax _NR_getpid, kernel mode (int 80) Call sys_getpid() Sys_Call_table[eax] syscall_exit return resume_userspace return User-Space Kernel-Space Remark: Invoking int 0x80 is common although newer techniques for “faster” control transfer are provided by both AMD’s and Intel’s architecture. -

The Culture of Patterns

UDC 681.3.06 The Culture of Patterns James O. Coplien Vloebergh Professor of Computer Science, Vrije Universiteit Brussel, PO Box 4557, Wheaton, IL 60189-4557 USA [email protected] Abstract. The pattern community came about from a consciously crafted culture, a culture that has persisted, grown, and arguably thrived for a decade. The culture was built on a small number of explicit principles. The culture became embodied in its activities conferences called PLoPs that centered on a social activity for reviewing technical worksand in a body of literature that has wielded broad influence on software design. Embedded within the larger culture of software development, the pattern culture has enjoyed broad influence on software development worldwide. The culture hasnt been without its problems: conflict with academic culture, accusations of cultism, and compromises with other cultures. However, its culturally rich principles still live on both in the original organs of the pattern community and in the activities of many other software communities worldwide. 1. Introduction One doesnt read many papers on culture in the software literature. You might ask why anyone in software would think that culture is important enough that an article about culture would appear in such a journal, and you might even ask yourself: just what is culture, anyhow? The software pattern community has long taken culture as a primary concern and focus. Astute observers of the pattern community note a cultural tone to the conferences and literature of the discipline, but probably view it as a distant and puzzling phenomenon. Casual users of the pattern form may not even be aware of the cultural focus or, if they are, may discount it as a distraction. -

26 Disk Space Management

26 Disk Space Management 26.1 INTRODUCTION It has been said that the only thing all UNIX systems have in common is the login message asking users to clean up their files and use less disk space. No matter how much space you have, it isn’t enough; as soon as a disk is added, files magically appear to fill it up. Both users and the system itself are potential sources of disk bloat. Chapter 12, Syslog and Log Files, discusses various sources of logging information and the techniques used to manage them. This chapter focuses on space problems caused by users and the technical and psy- chological weapons you can deploy against them. If you do decide to Even if you have the option of adding more disk storage to your system, add a disk, refer to it’s a good idea to follow this chapter’s suggestions. Disks are cheap, but Chapter 9 for help. administrative effort is not. Disks have to be dumped, maintained, cross-mounted, and monitored; the fewer you need, the better. 26.2 DEALING WITH DISK HOGS In the absence of external pressure, there is essentially no reason for a user to ever delete anything. It takes time and effort to clean up unwanted files, and there’s always the risk that something thrown away might be wanted again in the future. Even when users have good intentions, it often takes a nudge from the system administrator to goad them into action. 618 Chapter 26 Disk Space Management 619 On a PC, disk space eventually runs out and the machine’s primary user must clean up to get the system working again. -

Leader/Followers

Leader/Followers Douglas C. Schmidt, Carlos O’Ryan, Michael Kircher, Irfan Pyarali, and Frank Buschmann {schmidt, coryan}@uci.edu, {Michael.Kircher, Frank.Buschmann}@mchp.siemens.de, [email protected] University of California at Irvine, Siemens AG, and Washington University in Saint Louis The Leader/Followers architectural pattern provides an efficient concurrency model where multiple threads take turns sharing a set of event sources in order to detect, de- multiplex, dispatch, and process service requests that occur on these event sources. Example Consider the design of a multi-tier, high-volume, on-line transaction processing (OLTP) system. In this design, front-end communication servers route transaction requests from remote clients, such as travel agents, claims processing centers, or point-of-sales terminals, to back-end database servers that process the requests transactionally. After a transaction commits, the database server returns its results to the associated communication server, which then forwards the results back to the originating remote client. This multi-tier architecture is used to improve overall system throughput and reliability via load balancing and redundancy, respectively.It LAN WAN Front-End Back-End Clients Communication Servers Database Servers also relieves back-end servers from the burden of managing different communication protocols with clients. © Douglas C. Schmidt 1998 - 2000, all rights reserved, © Siemens AG 1998 - 2000, all rights reserved 19.06.2000 lf.doc 2 Front-end communication servers are actually ``hybrid'' client/server applications that perform two primary tasks: 1 They receive requests arriving simultaneously from hundreds or thousands of remote clients over wide area communication links, such as X.25 or TCP/IP. -

A Survey of Architectural Styles.V4

Survey of Architectural Styles Alexander Bird, Bianca Esguerra, Jack Li Liu, Vergil Marana, Jack Kha Nguyen, Neil Oluwagbeminiyi Okikiolu, Navid Pourmantaz Department of Software Engineering University of Calgary Calgary, Canada Abstract— In software engineering, an architectural style is a and implementation; the capabilities and experience of highest-level description of an accepted solution to a common developers; and the infrastructure and organizational software problem. This paper describes and compares a selection constraints [30]. These styles are not presented as out-of-the- of nine accepted architectural styles selected by the authors and box solutions, but rather a framework within which a specific applicable in various domains. Each pattern is presented in a software design may be made. If one were to say “that cannot general sense and with a domain example, and then evaluated in be called a layered architecture because the such and such a terms of benefits and drawbacks. Then, the styles are compared layer must communicate with an object other than the layer in a condensed table of advantages and disadvantages which is above and below it” then one would have missed the point of useful both as a summary of the architectural styles as well as a architectural styles and design patterns. They are not intended tool for selecting a style to apply to a particular project. The to be definitive and final, but rather suggestive and a starting paper is written to accomplish the following purposes: (1) to give readers a general sense of several architectural styles and when point, not to be used as a rule book but rather a source of and when not to apply them, (2) to facilitate software system inspiration.