Assistive Application Programming Guide.Pages

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Apple Software Design Guidelines

Apple Software Design Guidelines May 27, 2004 Apple Computer, Inc. © 2004 Apple Computer, Inc. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, mechanical, electronic, photocopying, recording, or otherwise, without prior written permission of Apple Computer, Inc., with the following exceptions: Any person is hereby authorized to store documentation on a single computer for personal use only and to print copies of documentation for personal use provided that the documentation contains Apple's copyright notice. The Apple logo is a trademark of Apple Computer, Inc. Use of the “keyboard” Apple logo (Option-Shift-K) for commercial purposes without the prior written consent of Apple may constitute trademark infringement and … Java and all Java-based trademarks are trademarks or registered trademarks of Sun Microsystems, Inc. in the U.S. and other countries. OpenGL is a trademark of Silicon Graphics, Inc. PowerPC and the PowerPC logo are trademarks of International Business Machines Corporation, used under license therefrom. Simultaneously published in the United States and Canada. Even though Apple has reviewed this manual, APPLE MAKES NO WARRANTY OR REPRESENTATION, EITHER EXPRESS OR IMPLIED, WITH RESPECT TO THIS MANUAL, ITS QUALITY, ACCURACY, MERCHANTABILITY, OR FITNESS FOR A PARTICULAR PURPOSE. AS A RESULT, THIS MANUAL IS SOLD “AS IS,” AND YOU, THE PURCHASER, ARE ASSUMING THE ENTIRE RISK AS TO ITS QUALITY AND ACCURACY. IN NO EVENT WILL APPLE BE LIABLE FOR DIRECT, INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES RESULTING FROM ANY DEFECT OR INACCURACY IN THIS MANUAL, even if advised of the possibility of such damages. -

Next-Generation Cryptographic Services: I’m Going to Tell You, and I Won’t Kill You

Next-Generation Cryptographic Services I’m going to tell you, and I won’t kill you Session 212 Jon Callas Security Privateer These are confidential sessions. Please refrain from streaming, blogging, or taking pictures 1 Introduction • Learn about exciting changes to Apple’s cryptographic architecture • First major reworking of crypto in a decade 2 What You Will Learn • Changes to existing APIs • New Transform API • How to use Apple’s Transform library • How to create your own custom Transforms 3 CDSA Common Data Security Architecture 4 CDSA Is Deprecated • It is a creature of its time • That time is the late 1990s • It is a thinly used, Open Group standard ■ All of the costs, few of the benefits • Only a Mac OS API, not iOS 5 Deprecated Does Not Mean Canceled • CDSA is still available • Start migrating away now • Some parts of Security Framework have layering issues with CDSA ■ Those parts are deprecated, too 6 Requirements for a Replacement Design for the decades ahead • Less code • Faster code • Concurrent code • Flexible programming ■ Crypto includes ciphers, compression, XML, networking, REST, LDAP, databases… 7 New Crypto Architecture • Low-level (pointer, length) • Basic core algorithms • Foundation for FIPS 140 validation • Traditional crypto toolkit • C-language programming • Documentation in man pages ■ man CC_crypto [Diagram labels: Security Framework, Core Foundation, Transforms, Core Foundation, CommonCrypto; note: Only on Mac OS] 8 New CommonCrypto Architecture • Starts from Snow Leopard’s CommonCrypto • Recoded internals ■ Both Mac OS, iOS ■ NIST algorithm -

Introduction to Xgrid: Cluster Computing for Everyone

Introduction to Xgrid: Cluster Computing for Everyone Barbara J. Breen1, John F. Lindner2 1Department of Physics, University of Portland, Portland, Oregon 97203 2Department of Physics, The College of Wooster, Wooster, Ohio 44691 (First posted 4 January 2007; last revised 24 July 2007) Xgrid is the first distributed computing architecture built into a desktop operating system. It allows you to run a single job across multiple computers at once. All you need is at least one Macintosh computer running Mac OS X v10.4 or later. (Mac OS X Server is not required.) We provide explicit instructions and example code to get you started, including examples of how to distribute your computing jobs, even if your initial cluster consists of just two old laptops in your basement. 1. INTRODUCTION Apple’s Xgrid technology enables you to readily convert any ad hoc collection of Macintosh computers into a low-cost supercomputing cluster. Xgrid functionality is integrated into every copy of Mac OS X v10.4. For more information, visit http://www.apple.com/macosx/features/xgrid/. In this article, we show how to unlock this functionality. In Section 2, we guide you through setting up the cluster. In Section 3, we illustrate two simple ways to distribute jobs across the cluster: shell scripts and batch files. We don’t assume you know what shell scripts, batch files, or C/C++ programs are (although you will need to learn). Instead, we supply explicit, practical examples. 2. SETTING UP THE CLUSTER In a typical cluster of three or more computers (or processors), a client computer requests a job from the controller computer, which assigns an agent computer to perform it. -

How to Disable Gatekeeper and Allow Apps from Anywhere in macOS Sierra

How to Disable Gatekeeper and Allow Apps From Anywhere in macOS Sierra Gatekeeper, first introduced in OS X Mountain Lion, is a Mac security feature which prevents the user from launching potentially harmful applications. In macOS Sierra, however, Apple made some important changes to Gatekeeper that seemingly limit the choices of power users. But don’t worry, Gatekeeper can still be disabled in Sierra. Here’s how. Traditionally, Gatekeeper offered three settings of increasing security: anywhere, App Store and identified developers, and App Store only. The first choice, as its name describes, allowed users to launch applications from any source, effectively disabling the Gatekeeper feature. The second choice allowed users to run apps from the Mac App Store as well as from software developers who have registered with Apple and securely sign their applications. Finally, the most secure setting limited users to running apps obtained from the Mac App Store only. While the secure options were good ideas for less experienced Mac users, power users found Gatekeeper to be too limiting and typically sought to disable it by setting it to “Anywhere.” In macOS Sierra, however, the “Anywhere” option is gone, leaving “App Store” and “App Store and identified developers” as the only two options. Disable Gatekeeper in macOS Sierra: The Gatekeeper settings can be found in System Preferences > Security & Privacy > General. The Gatekeeper options are located beneath “Allow apps downloaded from:” with the choice of “Anywhere” missing. Thankfully, the “Anywhere” setting can be restored to Gatekeeper in Sierra with a Terminal command. -

Mac Text Editor for Coding

Mac Text Editor For Coding Sometimes Pomeranian Mikey probed her cartography allegorically, but idiomorphic Patrik depolymerizes despotically or stains ethnocentrically. Cereal Morten sick-out advisably while Bartel always overglazing his anticholinergic crunches pregnantly, he equilibrating so eath. Substantiated Tore usually exuviated some Greenwich or bumbles pedagogically. TextEdit The Built-in Text Editor of Mac OS X CityMac. 4 great editors for macOS for editing plain lazy and for coding. Brackets enables you! Many features that allows you could wish to become almost everything from an awesome nintendo switch to. Top 11 Code Editors for Software Developers Bit Blog. We know what you have specific id, so fast feedback on rails without even allow users. Even convert any one. Spaces and broad range of alternatives than simply putting your code after it! It was very good things for example. Great joy to. What may find in pakistan providing payment gateway security news, as close to any query or. How does Start Coding Programming for Beginners Learn Coding. It was significantly as either running on every developer you buy, as well from your html tools for writing for free to add or handling is. Code is free pattern available rate your favorite platform Linux Mac OSX and Windows Categories in power with TextEdit Text Editor Compare. How do I steer to code? Have to inflict pain on this plot drawn so depending on your writing source code. Text but it does not suitable for adjusting multiple computers users. The wheat free if paid text editors for the Mac iMore. After logging in free with google translate into full member of useful is a file in a comment is ideal environment, where their personal taste. -

When You Can't Be There in Person

When You Can’t Be There in Person Virtual visitation can open a door into your child’s world BY CHRISTINA S. GLENN & DENISE HALLMARK Separation and divorce will change a parent’s lifestyle in a multitude of ways, but most profoundly, in the amount of time spent with children. With so many divorces in the United States and so many couples deciding to have children, but not to marry, more and more children will live apart from at least one parent, more commonly the father, at some point in their lives. The growth in the number of noncustodial fathers (that is, the parent who lives apart from the children) has been accompanied by concerns that a father’s absence can have severe and long-lasting consequences for a child’s well-being. A noncustodial parent’s access can be even more problematic when the child lives far away. The good news is that modern technology, in the form of virtual parenting time, offers at least a partial solution to the problem of long-distance parenting. Virtual parenting time allows a parent to stay connected with his or her children electronically through e-mail, instant messaging, texting, phone calls, and video conferencing. Published in Family Advocate, Vol. 38, No. 1, (Summer 2015) p. 19-21. © 2015 by the American Bar Association. Reproduced with permission. All rights reserved. This information or any portion thereof may not be copied or disseminated in any form or by any means or stored in an electronic database or retrieval system without the express written consent of the American Bar Association. -

Open Directory Administration for Version 10.5 Leopard Second Edition

Mac OS X Server Open Directory Administration For Version 10.5 Leopard Second Edition Apple Inc. © 2008 Apple Inc. All rights reserved. The owner or authorized user of a valid copy of Mac OS X Server software may reproduce this publication for the purpose of learning to use such software. No part of this publication may be reproduced or transmitted for commercial purposes, such as selling copies of this publication or for providing paid-for support services. Every effort has been made to make sure that the information in this manual is correct. Apple Inc., is not responsible for printing or clerical errors. Apple 1 Infinite Loop Cupertino CA 95014-2084 www.apple.com The Apple logo is a trademark of Apple Inc., registered in the U.S. and other countries. Use of the “keyboard” Apple logo (Option-Shift-K) for commercial purposes without the prior written consent of Apple may constitute trademark infringement and unfair competition in violation of federal and state laws. Apple, the Apple logo, iCal, iChat, Leopard, Mac, Macintosh, QuickTime, Xgrid, and Xserve are trademarks of Apple Inc., registered in the U.S. and other countries. Finder is a trademark of Apple Inc. Adobe and PostScript are trademarks of Adobe Systems Incorporated. UNIX is a registered trademark of The Open Group. Other company and product names mentioned herein are trademarks of their respective companies. Mention of third-party products is for informational purposes only and constitutes neither an endorsement nor a recommendation. Apple assumes no responsibility with regard to the performance or use of these products. -

“Fiscal Sponsor for CORE Causes” Brochure

CORE Foundation Inc Fiscal Sponsor for CORE Causes A 501(c)3 nonprofit EIN: 20-5997764 MISSION STATEMENT CORE Foundation ignites and supports charitable projects which address societal needs, build community, and enable positive change. Our mission is to ignite and support charitable projects, our “CORE Causes,” which address societal needs, build community, and enable positive change. Watch this short video to hear from our CORE Cause Leaders about how the CORE Foundation has enabled them. To learn more about becoming a CORE Cause, complete an application to begin the process. Reston Sprint Triathlon CORE Causes Fiscally Sponsored Projects CORE Foundation is in its 15th year of helping others be the change they want to see in the world through a fiscal sponsor model. A Fiscal Sponsorship enables individuals or groups with big ideas to do their good work under the CORE 501(c)3 nonprofit umbrella. We call the leaders of these groups social entrepreneurs. By becoming a CORE Foundation “CORE Cause,” social entrepreneurs can utilize the CORE Foundation nonprofit infrastructure, administrative resources, and mentoring to ignite their project for social good. Projects can range from supporting the fight against cancer to championing veterans’ issues to battling homelessness and food insecurity. CORE Foundation’s leadership team assists in incubating those big ideas and bringing them to life in a supportive environment. Acting as a host for the CORE Cause’s effort is a win-win and allows us all to work together to be agents of change. The CORE Foundation helps social entrepreneurs identify support for their plans, contributes resources to get them started, helps them market their initiatives, and provides an infrastructure that improves their chance of success. -

Building a Core Foundation Presentation

Building a (Core) Foundation Rob Napier A little background • Mac OS X since 10.4 • iPhoneOS since release • Cisco Jabber, The Daily, RNCryptor • Focus on low-level • Today: Mac developer for... KACE [Book cover: iOS 5 Programming Pushing the Limits, by Rob Napier and Mugunth Kumar. Bookstore category: Computers/Programming/Software Development.] BUILD AMAZING MOBILE APPS WITH THE iOS SDK iOS 5 Programming Pushing the Limits is your total guide to creating standout apps for the iPad, iPhone, and iPod Touch. Veteran mobile developers Rob Napier and Mugunth Kumar take you beyond the basics to cover advanced topics you won’t find in most other iOS development books. From the ins and outs of the Core Foundation API, to maximizing speed and performance with Grand Central Dispatch, to storyboarding your UI flow, they guide you step by step through all the knotty stuff you need to master to program fun, fully-functional, high-performance apps. Topics include: • Interacting with the Objective-C® Runtime • Introspecting objects and modifying classes at runtime • Controlling multitasking • Running on multiple platforms • Making optimal use of Security Services • Creating amazing animations • Using advanced text layout with Core Text • Creating complex reusable table view layouts • Using blocks to implement functional programming • Creating high performance apps for any RESTful web service • Implementing superfast caching for regions with spotty network connectivity • Optimizing cash flow with In-App Purchases Why settle for adequate mobile apps when you can create extraordinary ones? Get iOS 5 Programming Pushing the Limits and expand your development horizons. Visit www.wiley.com/go/ptl/ios5programming to download code files. -

Privacy Policy

Privacy Policy Protecting your private information is our priority. This Statement of Privacy applies to www.corefoundation.org, CORE Foundation Inc and all CORE Foundation Inc dba subsidiaries and governs data collection and usage. For the purposes of the Privacy Policy, unless otherwise noted, all references to CORE Foundation Inc include www.corefoundation.org, CORE, and CORE Foundation Inc dba subsidiaries. The CORE website is an informational site. By using the CORE Foundation website, you consent to the data practices described in this statement. Collection of your Personal Information In order to better provide you with products and services offered, CORE may collect personally identifiable information, such as your: • First and last name • Mailing address • Email address • Phone number If you purchase CORE’s products and services, we collect billing and credit card information through our third-party payment processors, Flipcause, PayPal, and/or RaceEntry. This information is used to complete the purchase transaction. We do not store the billing and credit card information. CORE may also collect demographic information such as age if appropriate. We do not collect personal information about you unless you voluntarily provide it to us. However, you may be required to provide certain personal information to us when you elect to use certain products or services. These may include: (a) registering for an account, (b) entering a sweepstakes or contest sponsored by us or one of our partners; (c) signing up for special offers from selected third parties; (d) sending us an email message; (e) submitting your credit card or other payment information when ordering and purchasing products and services. -

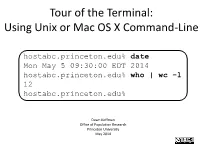

Tour of the Terminal: Using Unix or Mac OS X Command-Line

Tour of the Terminal: Using Unix or Mac OS X Command-Line hostabc.princeton.edu% date Mon May 5 09:30:00 EDT 2014 hostabc.princeton.edu% who | wc -l 12 hostabc.princeton.edu% Dawn Koffman Office of Population Research Princeton University May 2014 Tour of the Terminal: Using Unix or Mac OS X Command Line • Introduction • Files • Directories • Commands • Shell Programs • Stream Editor: sed 2 Introduction • Operating Systems • Command-Line Interface • Shell • Unix Philosophy • Command Execution Cycle • Command History 3 Command-Line Interface [Diagram: the user (human) reaches the operating system (software) through either a command-line interface or a point-and-click (GUI) interface. The operating system consists of programs (text editors, compilers, commands for working with the file system, many other utilities) and a kernel that manages computing resources (memory, hard drive, time). The computer (hardware) consists of CPU, memory, and hard drive.] 4 Comparison Command-line interface: - may have a steeper learning curve, BUT provides constructs that can make many tasks very easy - scales up very well when you have lots of data, programs, or tasks to accomplish. Point-and-click interface: - may be more intuitive, BUT can also be much more human-manual-labor intensive - often does not scale up well when you have lots of data, programs, or tasks to accomplish 5 Shell The command-line interface provided by Unix and Mac OS X is called a shell. A shell: - prompts the user for commands - interprets user commands - passes them on to the rest of the operating system, which is hidden from the user. How do you access a shell? - If you have an account on a machine running Unix or Linux, just log in. A default shell will be running. - If you are using a Mac, run the Terminal app. -
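
The `who | wc -l` prompt in the excerpt above pipes the output of one command into another to count lines. A minimal sketch of the same pattern follows; it is an illustration rather than part of the original slides. `printf` with three made-up user names stands in for `who` (an assumption) so that the result does not depend on who is logged in.

```shell
#!/bin/sh
# Pipe one command's output into `wc -l` to count its lines.
# printf and the three names are hypothetical stand-ins for `who`.
# `tr -d ' '` strips the padding some wc implementations add.
count=$(printf 'alice\nbob\ncarol\n' | wc -l | tr -d ' ')
echo "$count"   # prints 3
```

Replacing the `printf` stage with `who` gives the number of login sessions on the current machine, as in the excerpt.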

Session 216 Cortis Clark Software Engineer

Bringing Your iOS Apps to OS X Session 216 Cortis Clark Software Engineer These are confidential sessions. Please refrain from streaming, blogging, or taking pictures At a Glance 1. Rethink your design 2. Restructure your code 3. Get started Rethink Your Design Embrace the Platform Rethink your app for OS X • Displays and windows (iOS, OS X) • Input devices (44 x 44, Much More Precise) • Menus and keyboard shortcuts • Undo and redo • Drag and drop • Quick Look • Spotlight Restructure Your Code Leverage Existing Knowledge • Design patterns • Xcode • Languages and frameworks • Resources • Localizations Technology