Standards Conversion in the File Domain

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Problems on Video Coding

Problems on Video Coding. Guan-Ju Peng, Graduate Institute of Electronics Engineering, National Taiwan University. 1 Problem 1: How to display digital video designed for TV industry on a computer screen with best quality? Hint: computer display is 4:3, 1280x1024, 72 Hz, progressive. Supposing the digital TV display is 4:3 640x480, 30Hz, Interlaced. The problem becomes how to convert the video signal from 4:3 640x480, 60Hz, Interlaced to 4:3, 1280x1024, 72 Hz, progressive. First, we convert the video stream from interlaced video to progressive signal by a suitable de-interlacing algorithm. Thus, we introduce the de-interlacing algorithms first in the following. 1.1 De-Interlacing: Generally speaking, there are three kinds of methods to perform de-interlacing. 1.1.1 Field Combination Deinterlacing: • Weaving is done by adding consecutive fields together. This is fine when the image hasn't changed between fields, but any change will result in artifacts known as "combing", when the pixels in one frame do not line up with the pixels in the other, forming a jagged edge. This technique retains full vertical resolution at the expense of half the temporal resolution. • Blending is done by blending, or averaging consecutive fields to be displayed as one frame. Combing is avoided because both of the images are on top of each other. This instead leaves an artifact known as ghosting. The image loses vertical resolution and temporal resolution. This is often combined with a vertical resize so that the output has no numerical loss in vertical resolution. The problem with this is that there is a quality loss, because the image has been downsized then upsized.
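The weaving and blending steps described in the excerpt above can be illustrated with a minimal Python sketch. The function names `weave` and `blend`, and the use of small list-of-lists fields, are assumptions made for illustration only; they do not come from the excerpted document.

```python
def weave(top_field, bottom_field):
    """Interleave two fields line by line into one full-height frame."""
    frame = []
    for top_line, bottom_line in zip(top_field, bottom_field):
        frame.append(list(top_line))     # even output lines come from the top field
        frame.append(list(bottom_line))  # odd output lines come from the bottom field
    return frame


def blend(top_field, bottom_field):
    """Average two fields pixel by pixel; the result keeps the field height."""
    return [
        [(a + b) / 2 for a, b in zip(top_line, bottom_line)]
        for top_line, bottom_line in zip(top_field, bottom_field)
    ]


# Two hypothetical 2-line fields of a 4-line frame (illustrative values).
top = [[10, 10], [30, 30]]
bottom = [[20, 20], [40, 40]]

woven = weave(top, bottom)      # 4 lines: full vertical resolution
blended = blend(top, bottom)    # 2 lines: half vertical resolution
```

In this sketch the woven frame has twice as many lines as either field, while the blended frame keeps the field height. That is why, as the excerpt notes, blending is usually followed by a vertical resize.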

Viarte Remastering of SD to HD/UHD & HDR Guide

Viarte SDR-to-HDR Up-conversion & Digital Remastering of SD/HD to HD/UHD Services. 1. Introduction: As trends move rapidly towards online content distribution and bigger and brighter progressive UHD/HDR displays, the need for high quality remastering of SD/HD and SDR to HDR up-conversion of valuable SD/HD/UHD assets becomes more relevant than ever. Various technical issues inherited in legacy content hinder the immersive viewing experience one might expect from these new HDR display technologies. In particular, interlaced content needs to be properly deinterlaced, and frame rate converted in order to accommodate OTT or Blu-ray re-distribution. Equally important, film grain or various noise conditions need to be addressed, so as to avoid noise being further magnified during edge-enhanced upscaling, and to avoid further perturbing any future SDR to HDR up-conversion. Film grain should no longer be regarded as an aesthetic enhancement, but rather as a costly nuisance, as it not only degrades the viewing experience, especially on brighter HDR displays, but also significantly increases HEVC/H.264 compressed bit-rates, thereby increasing online distribution and storage costs. 2. Digital Remastering and SDR to HDR Up-Conversion Process: There are several steps required for a high quality SD/HD to HD/UHD remastering project. The very first step may be tape scan. The digital master forms the baseline for all further quality assessment. isovideo's SD/HD to HD/UHD digital remastering services use our proprietary, state-of-the-art award-winning Viarte technology. Viarte's proprietary motion processing technology is the best available.

Mixbus V4 1 — Last Update: 2017/12/19 Harrison Consoles

Mixbus v4 1 (last update: 2017/12/19), Harrison Consoles. Harrison Consoles Copyright Information 2017: No part of this publication may be copied, reproduced, transmitted, stored on a retrieval system, or translated into any language, in any form or by any means without the prior written consent of an authorized officer of Harrison Consoles, 1024 Firestone Parkway, La Vergne, TN 37086. Table of Contents: Introduction; About This Manual (online version and PDF download); Features & Specifications; What’s Different About Mixbus?; Operational Differences from Other DAWs; Installation; Installation – Windows; Installation – OS X

A Novel Edge-Preserving Mesh-Based Method for Image Scaling

A Novel Edge-Preserving Mesh-Based Method for Image Scaling. Seyedali Mostafavian and Michael D. Adams, Dept. of Electrical and Computer Engineering, University of Victoria, Victoria, BC V8P 5C2, Canada. [email protected] and [email protected]. Abstract: In this paper, we present a novel image scaling method that employs a mesh model that explicitly represents discontinuities in the image. Our method effectively addresses the problem of preserving the sharpness of edges, which has always been a challenge, during image enlargement. We use a constrained Delaunay triangulation to generate the model and an approximating function that is continuous everywhere except across the image edges (i.e., discontinuities). The model is then rasterized using a subdivision-based technique. Visual comparisons and quantitative measures show that our method can greatly reduce the blurring artifacts that can arise during image enlargement and produce images that look more pleasant to human observers, compared to the well-known bilinear and bicubic methods. [...] no loss of image quality. One main problem in vector-based interpolation methods, however, is how to create a vector model which faithfully represents the raster image data and its important features such as edges. Among the many techniques to generate a vector image from a raster image, triangle mesh models have become quite popular. With a triangle-mesh model, the image domain is partitioned into a set of non-overlapping triangles called a triangulation. Then, the image intensity function is approximated over each of the triangles. A mesh-generation method is required to choose a good subset of sample points and to collect any critical data from the input

Color Page Effects Chapter 116 DaVinci Resolve Control Panels

PART 9 Color Page Effects. Chapter 116: DaVinci Resolve Control Panels. The DaVinci Resolve control panels make it easier to make more adjustments in the same amount of time than using a mouse, pen, or trackpad with the on-screen interface. Additionally, using a DaVinci Resolve control panel to control the Color page provides vastly superior ergonomic comfort to clutching a mouse or pen all day, which is important when you’re potentially grading a thousand shots per day. This chapter covers details about the three DaVinci Resolve control panels that are available, and how they work with DaVinci Resolve. Contents: About The DaVinci Resolve Control Panels; DaVinci Resolve Micro Panel; Trackballs; Control Knobs; Control Buttons; DaVinci Resolve Mini Panel; Palette Selection Buttons; Quick Selection Buttons; DaVinci Resolve Advanced Control Panel; Menus, Soft Keys, and Soft Knob Controls; Trackball Panel; T-bar Panel; Transport Panel; Copying Grades Using the Advanced Control Panel; Copy Forward Keys; Scroll; Rippling Changes Using the Advanced Control Panel. About The DaVinci Resolve Control Panels: There are three DaVinci Resolve control panel options available and each is designed to meet modern workflow ergonomics and ease of use so colorists can quickly and accurately construct both simple and complex creative grades with minimal fatigue. This chapter provides details of each of the panel functions and should be read in conjunction with the previous grading chapters to get the best from your panel.

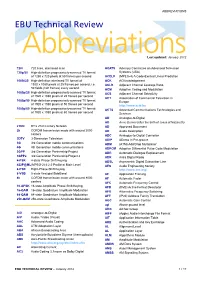

ABBREVIATIONS EBU Technical Review

ABBREVIATIONS, EBU Technical Review. Last updated: January 2012. 720i: 720 lines, interlaced scan; 720p/50: High-definition progressively-scanned TV format of 1280 x 720 pixels at 50 frames per second; 1080i/25: High-definition interlaced TV format of 1920 x 1080 pixels at 25 frames per second, i.e. 50 fields (half frames) every second; 1080p/25: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 25 frames per second; 1080p/50: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 50 frames per second; 1080p/60: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 60 frames per second; 21CN: BT’s 21st Century Network; 2k: COFDM transmission mode with around 2000 carriers; 3DTV: 3-Dimension Television; 3G: 3rd Generation mobile communications; 4G: 4th Generation mobile communications; 3GPP: 3rd Generation Partnership Project; 3GPP2: 3rd Generation Partnership; ACATS: Advisory Committee on Advanced Television Systems (USA); ACELP: (MPEG-4) A Code-Excited Linear Prediction; ACK: ACKnowledgement; ACLR: Adjacent Channel Leakage Ratio; ACM: Adaptive Coding and Modulation; ACS: Adjacent Channel Selectivity; ACT: Association of Commercial Television in Europe, http://www.acte.be; ACTS: Advanced Communications Technologies and Services; AD: Analogue-to-Digital; AD: Anno Domini (after the birth of Jesus of Nazareth); AD: Approved Document; AD: Audio Description; ADC: Analogue-to-Digital Converter; ADIP: ADress In Pre-groove; ADM: (ATM) Add/Drop Multiplexer; ADPCM: Adaptive Differential Pulse Code Modulation; ADR: Automatic Dialogue Replacement

A Review and Comparison on Different Video Deinterlacing

International Journal of Research, ISSN NO: 2236-6124. A Review and Comparison on Different Video Deinterlacing Methodologies. Boyapati Bharathidevi, Kurangi Mary Sujana, Ashok kumar Balijepalli, Asst. Professor, Universal College of Engg & Technology, Perecherla, Guntur, AP, India-522438. [email protected], [email protected], [email protected]. Abstract: Video deinterlacing is a key technique in digital video processing, particularly with the widespread usage of LCD and plasma TVs. Interlacing is a widely used technique, for television broadcast and video recording, to double the perceived frame rate without increasing the bandwidth. But it presents annoying visual artifacts, such as flickering and silhouette "serration," during the playback. Existing state-of-the-art deinterlacing methods either ignore the temporal information to provide real-time performance but lower visual quality, or estimate the motion for better deinterlacing but with a trade-off of higher computational cost. The question `to interlace or not to interlace' divides the TV and the PC communities. A proper answer requires a common understanding of what is possible nowadays in deinterlacing video signals. This paper outlines the most relevant methods, and provides a relative comparison. Interlaced videos are generally preferred in video broadcast and transmission systems as they reduce the amount of data to be broadcast. Transmission of interlaced videos was widely popular in various television broadcasting systems such as NTSC [2], PAL [3], SECAM. Many broadcasting agencies made huge profits with interlaced videos. Video acquiring systems on many occasions naturally acquire interlaced video and since this also proved to be an efficient way, the popularity of interlaced videos escalated.

Episode Engine User’s Guide

Note on License: The accompanying Software is licensed and may not be distributed without written permission. Disclaimer: The contents of this document are subject to revision without notice due to continued progress in methodology, design, and manufacturing. Telestream shall have no liability for any error or damages of any kind resulting from the use of this document and/or software. The Software may contain errors and is not designed or intended for use in on-line facilities, aircraft navigation or communications systems, air traffic control, direct life support machines, or weapons systems (“High Risk Activities”) in which the failure of the Software would lead directly to death, personal injury or severe physical or environmental damage. You represent and warrant to Telestream that you will not use, distribute, or license the Software for High Risk Activities. Export Regulations: Software, including technical data, is subject to Swedish export control laws, and its associated regulations, and may be subject to export or import regulations in other countries. You agree to comply strictly with all such regulations and acknowledge that you have the responsibility to obtain licenses to export, re-export, or import Software. Copyright Statement: ©Telestream, Inc, 2010. All rights reserved. No part of this document may be copied or distributed. This document is part of the software product and, as such, is part of the license agreement governing the software. So are any other parts of the software product, such as packaging and distribution media. The information in this document may be changed without prior notice and does not represent a commitment on the part of Telestream.

Dolby DP600 Program Optimizer Manual

Dolby® DP600 Program Optimizer Manual. Issue 2, Part Number 91963. Dolby Laboratories, Inc. Corporate Headquarters: Dolby Laboratories, Inc., 100 Potrero Avenue, San Francisco, CA 94103‐4813 USA. Telephone 415‐558‐0200, Fax 415‐863‐1373, www.dolby.com. European Headquarters: Dolby Laboratories, Inc., Wootton Bassett, Wiltshire SN4 8QJ, England. Telephone 44‐1793‐842100, Fax 44‐1793‐842101. DISCLAIMER OF WARRANTIES: EQUIPMENT MANUFACTURED BY DOLBY LABORATORIES IS WARRANTED AGAINST DEFECTS IN MATERIALS AND WORKMANSHIP FOR A PERIOD OF ONE YEAR FROM THE DATE OF PURCHASE. THERE ARE NO OTHER EXPRESS OR IMPLIED WARRANTIES AND NO WARRANTY OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE, OR OF NONINFRINGEMENT OF THIRD‐PARTY RIGHTS (INCLUDING, BUT NOT LIMITED TO, COPYRIGHT AND PATENT RIGHTS). LIMITATION OF LIABILITY: IT IS UNDERSTOOD AND AGREED THAT DOLBY LABORATORIES’ LIABILITY, WHETHER IN CONTRACT, IN TORT, UNDER ANY WARRANTY, IN NEGLIGENCE, OR OTHERWISE, SHALL NOT EXCEED THE COST OF REPAIR OR REPLACEMENT OF THE DEFECTIVE COMPONENTS OR ACCUSED INFRINGING DEVICES, AND UNDER NO CIRCUMSTANCES SHALL DOLBY LABORATORIES BE LIABLE FOR INCIDENTAL, SPECIAL, DIRECT, INDIRECT, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, DAMAGE TO SOFTWARE OR RECORDED AUDIO OR VISUAL MATERIAL), COST OF DEFENSE, OR LOSS OF USE, REVENUE, OR PROFIT, EVEN IF DOLBY LABORATORIES OR ITS AGENTS HAVE BEEN ADVISED, ORALLY OR IN WRITING, OF THE POSSIBILITY OF SUCH DAMAGES. Dolby, Pro Logic, and the double‐D symbol are registered trademarks of Dolby Laboratories. Dialogue Intelligence is a trademark of Dolby Laboratories. S08/18709/19636. All other trademarks remain the property of their respective owners.

EBU Tech 3315-2006 Archiving: Experiences with TK Transfer to Digital

EBU – TECH 3315. Archiving: Experiences with telecine transfer of film to digital formats. Source: P/HDTP. Status: Report. Geneva, April 2006. Contents: Introduction; Decisions on Scanning Format; Scanning tests; The Results; Observations of the influence of elements of film by specialists; Observations on the results of the formal evaluations; Overall conclusions; APPENDIX: Details of the Tests and Results. Archiving: Experience with telecine transfer of film to digital formats

Cerify Automated Video Content Verification System User Manual

Cerify Automated Video Content Verification System User Manual. 077-0352-08. www.tektronix.com. Copyright © Tektronix. All rights reserved. Licensed software products are owned by Tektronix or its subsidiaries or suppliers, and are protected by national copyright laws and international treaty provisions. Tektronix products are covered by U.S. and foreign patents, issued and pending. Information in this publication supersedes that in all previously published material. Specifications and price change privileges reserved. TEKTRONIX and TEK are registered trademarks of Tektronix, Inc. Cerify is a trademark of Tektronix, Inc. This document supports software version 7.5 and above. Cerify Technical Support: To obtain technical support for your Cerify system, send an e-mail to the following address: [email protected]. Contacting Tektronix: Tektronix, Inc., 14150 SW Karl Braun Drive, P.O. Box 500, Beaverton, OR 97077 USA. For product information, sales, service, and technical support: In North America, call 1-800-833-9200. Worldwide, visit www.tektronix.com to find contacts in your area. Warranty: Tektronix warrants that the media on which this software product is furnished and the encoding of the programs on the media will be free from defects in materials and workmanship for a period of three (3) months from the date of shipment. If any such medium or encoding proves defective during the warranty period, Tektronix will provide a replacement in exchange for the defective medium. Except as to the media on which this software product is furnished, this software product is provided “as is” without warranty of any kind, either express or implied.

20130218 Technical White Paper.Bw.Jp

Sending, Storing & Sharing Video With latakoo. © Copyright latakoo. All rights reserved. Revised 11/12/2012. Table of contents: 1. Introduction; 2. Sending video & files with latakoo; 2.1 The latakoo app; 2.2 Compression & upload; 2.3 latakoo minutes; 3. The latakoo web interface; 3.1 Web interface requirements; 3.2 Logging into the dashboard; 3.3 Streaming video previews; 3.4 Downloading video; 3.5 latakoo Pilot; 3.6 Search and tagging