Observations on the SIMON Block Cipher Family

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Partly-Pseudo-Linear Cryptanalysis of Reduced-Round SPECK

cryptography Article Partly-Pseudo-Linear Cryptanalysis of Reduced-Round SPECK Sarah A. Alzakari * and Poorvi L. Vora Department of Computer Science, The George Washington University, 800 22nd St. NW, Washington, DC 20052, USA; [email protected] * Correspondence: [email protected] Abstract: We apply McKay’s pseudo-linear approximation of addition modular 2n to lightweight ARX block ciphers with large words, specifically the SPECK family. We demonstrate that a pseudo- linear approximation can be combined with a linear approximation using the meet-in-the-middle attack technique to recover several key bits. Thus we illustrate improvements to SPECK linear distinguishers based solely on Cho–Pieprzyk approximations by combining them with pseudo-linear approximations, and propose key recovery attacks. Keywords: SPECK; pseudo-linear cryptanalysis; linear cryptanalysis; partly-pseudo-linear attack 1. Introduction ARX block ciphers—which rely on Addition-Rotation-XOR operations performed a number of times—provide a common approach to lightweight cipher design. In June 2013, a group of inventors from the US’s National Security Agency (NSA) proposed two families of lightweight block ciphers, SIMON and SPECK—each of which comes in a variety of widths and key sizes. The SPECK cipher, as an ARX cipher, provides efficient software implementations, while SIMON provides efficient hardware implementations. Moreover, both families perform well in both hardware and software and offer the flexibility across Citation: Alzakari, S.; Vora, P. different platforms that will be required by future applications [1,2]. In this paper, we focus Partly-Pseudo-Linear Cryptanalysis on the SPECK family as an example lightweight ARX block cipher to illustrate our attack. -

The Summation-Truncation Hybrid: Reusing Discarded Bits for Free

The Summation-Truncation Hybrid: Reusing Discarded Bits for Free Aldo Gunsing and Bart Mennink Digital Security Group, Radboud University, Nijmegen, The Netherlands [email protected], [email protected] Abstract. A well-established PRP-to-PRF conversion design is trun- cation: one evaluates an n-bit pseudorandom permutation on a certain input, and truncates the result to a bits. The construction is known to achieve tight 2n−a=2 security. Truncation has gained popularity due to its appearance in the GCM-SIV key derivation function (ACM CCS 2015). This key derivation function makes four evaluations of AES, truncates the outputs to n=2 bits, and concatenates these to get a 2n-bit subkey. In this work, we demonstrate that truncation is wasteful. In more detail, we present the Summation-Truncation Hybrid (STH). At a high level, the construction consists of two parallel evaluations of truncation, where the truncated (n − a)-bit chunks are not discarded but rather summed together and appended to the output. We prove that STH achieves a similar security level as truncation, and thus that the n − a bits of extra output is rendered for free. In the application of GCM-SIV, the current key derivation can be used to output 3n bits of random material, or it can be reduced to three primitive evaluations. Both changes come with no security loss. Keywords: PRP-to-PRF, Truncation, Sum of permutations, Efficiency, GCM-SIV 1 Introduction The vast majority of symmetric cryptographic schemes is built upon a pseu- dorandom permutation, such as AES [21]. Such a function gets as input a key and bijectively transforms its input to an output such that the function is hard to distinguish from random if an attacker has no knowledge about the key. -

Linear Cryptanalysis of Reduced-Round SIMON Using Super Rounds

cryptography Article Linear Cryptanalysis of Reduced-Round SIMON Using Super Rounds Reham Almukhlifi * and Poorvi L. Vora Department of Computer Science, The George Washington University, Washington, DC 20052, USA; [email protected] * Correspondence: [email protected] Received: 5 March 2020; Accepted: 15 March 2020; Published: 18 March 2020 Abstract: We present attacks on 21-rounds of SIMON 32/64, 21-rounds of SIMON 48/96, 25-rounds of SIMON 64/128, 35-rounds of SIMON 96/144 and 43-rounds of SIMON 128/256, often with direct recovery of the full master key without repeating the attack over multiple rounds. These attacks result from the observation that, after four rounds of encryption, one bit of the left half of the state of 32/64 SIMON depends on only 17 key bits (19 key bits for the other variants of SIMON). Further, linear cryptanalysis requires the guessing of only 16 bits, the size of a single round key of SIMON 32/64. We partition the key into smaller strings by focusing on one bit of state at a time, decreasing the cost of the exhaustive search of linear cryptanalysis to 16 bits at a time for SIMON 32/64. We also present other example linear cryptanalysis, experimentally verified on 8, 10 and 12 rounds for SIMON 32/64. Keywords: SIMON; linear cryptanalysis; super round 1. Introduction Lightweight cryptography is a rapidly growing area of research, emerging to fill the need for securing highly-constrained devices such as RFID tags and sensor networks. The limited hardware and software resources require that the cryptographic primitives be highly efficient. -

Giza and the Pyramids, Veteran Hosni Mubarak

An aeroplane flies over the pyramids and Sphinx on the Giza Plateau near Cairo. ARCHAEOLOGY The wonder of the pyramids Andrew Robinson enjoys a volume rounding up research on the complex at Giza, Egypt. n Giza and the Pyramids, veteran Hosni Mubarak. After AERAGRAM 16(2), 8–14; 2015), the tomb’s Egyptologists Mark Lehner and Zahi Mubarak fell from four sides, each a little more than 230 metres Hawass cite an Arab proverb: “Man power during the long, vary by at most just 18.3 centimetres. Ifears time, but time fears the pyramids.” It’s Arab Spring that year, Lehner and Hawass reject the idea that a reminder that the great Egyptian complex Hawass resigned, amid armies of Egyptian slaves constructed the on the Giza Plateau has endured for some controversy. pyramids, as the classical Greek historian four and a half millennia — the last monu- The Giza complex Herodotus suggested. They do, however, ment standing of that classical-era must-see invites speculation embrace the concept that the innovative list, the Seven Wonders of the World. and debate. Its three administrative and social organization Lehner and Hawass have produced an pyramids are the Giza and the demanded by the enormous task of build- astonishingly comprehensive study of the tombs of pharaohs Pyramids ing the complex were key factors in creating excavations and scientific investigations Khufu, Khafre and MARK LEHNER & ZAHI Egyptian civilization. CREATIVE GEOGRAPHIC L. STANFIELD/NATL JAMES that have, over two centuries, uncovered Menkaure, also known HAWASS The authors are also in accord over a the engineering techniques, religious and as Cheops, Chephren Thames & Hudson: theory regarding the purpose of the Giza cultural significance and other aspects of and Mycerinus, 2017. -

FPGA Modeling and Optimization of a SIMON Lightweight Block Cipher

sensors Article FPGA Modeling and Optimization of a SIMON Lightweight Block Cipher Sa’ed Abed 1,* , Reem Jaffal 1, Bassam Jamil Mohd 2 and Mohammad Alshayeji 1 1 Department of Computer Engineering, Kuwait University, Safat 13060, Kuwait; [email protected] (R.J.); [email protected] (M.A.) 2 Department of Computer Engineering, Hashemite University, Zarqa 13115, Jordan; [email protected] * Correspondence: [email protected]; Tel.: +965-249-837-90 Received: 18 December 2018; Accepted: 18 February 2019; Published: 21 February 2019 Abstract: Security of sensitive data exchanged between devices is essential. Low-resource devices (LRDs), designed for constrained environments, are increasingly becoming ubiquitous. Lightweight block ciphers provide confidentiality for LRDs by balancing the required security with minimal resource overhead. SIMON is a lightweight block cipher targeted for hardware implementations. The objective of this research is to implement, optimize, and model SIMON cipher design for LRDs, with an emphasis on energy and power, which are critical metrics for LRDs. Various implementations use field-programmable gate array (FPGA) technology. Two types of design implementations are examined: scalar and pipelined. Results show that scalar implementations require 39% less resources and 45% less power consumption. The pipelined implementations demonstrate 12 times the throughput and consume 31% less energy. Moreover, the most energy-efficient and optimum design is a two-round pipelined implementation, which consumes 31% of the best scalar’s implementation energy. The scalar design that consumes the least energy is a four-round implementation. The scalar design that uses the least area and power is the one-round implementation. -

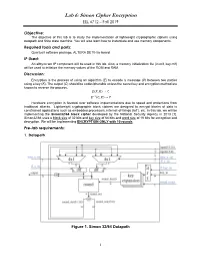

Lab 6: Simon Cipher Encryption EEL 4712 – Fall 2019

Lab 6: Simon Cipher Encryption EEL 4712 – Fall 2019 Objective: The objective of this lab is to study the implementation of lightweight cryptographic ciphers using datapath and finite state machine. You will also learn how to instantiate and use memory components. Required tools and parts: QuartusII software package, ALTERA DE10-lite board. IP Used: An altsyncram IP component will be used in this lab. Also, a memory initialization file (in.mif, key.mif) will be used to initialize the memory values of the ROM and RAM. Discussion: Encryption is the process of using an algorithm (E) to encode a message (P) between two parties using a key (K). The output (C) should be undecipherable unless the secret key and encryption method are known to reverse the process. 퐸(푃, 퐾) → 퐶 퐸−1(퐶, 퐾) → 푃 Hardware encryption is favored over software implementations due to speed and protections from traditional attacks. Lightweight cryptographic block ciphers are designed to encrypt blocks of data in constrained applications such as embedded processors, internet-of-things (IoT), etc. In this lab, we will be implementing the Simon32/64 block cipher developed by the National Security Agency in 2013 [1]. Simon32/64 uses a block size of 32 bits and key size of 64 bits and word size of 16 bits for encryption and decryption. We will be implementing ENCRYPTION ONLY with 10 rounds. Pre-lab requirements: 1. Datapath Figure 1. Simon 32/64 Datapath 1 Lab 6: Simon Cipher Encryption EEL 4712 – Fall 2019 The datapath and control signals (blue) for Simon32/64 block cipher are shown in Fig. -

Analysis of Block Encryption Algorithms Being Used in Devices with Restricted Amount of Technological Possibilities

E3S Web of Conferences 224, 01043 (2020) https://doi.org/10.1051/e3sconf/202022401043 TPACEE-2020 Analysis of block encryption algorithms being used in devices with restricted amount of technological possibilities Larissa Cherckesova1,*, Olga Safaryan1, Pavel Razumov1, Dmitry Medvedev2, Veronica Kravchenko1, Yuriy Ivanov3 1Don state technical University, Rostov–on–Don, 1, Gagarin square, Russia 2Don state technical University, Shakhty, Rostov region, 147, Shevchenko str., Russia 3Southern Federal University Taganrog, Taganrog, 44, per. Nekrasovsky, Rostov region, Russia Abstract. This report is devoted to the comparative analysis of the lightweight NASH block encryption algorithm and the algorithm presented by USA National Security Agency in 2013 – SPECK. Their detailed description is given, the analysis is made. The task of the study is to investigate and analyze cryptographic encryption algorithms used in devices with limited capabilities such as microcontrollers. The study of lightweight encryption algorithms and their application for cybersecurity tasks is necessary to create the latest cryptographic systems aimed at preventing various types of attacks. The study revealed that the NASH block encryption algorithm showed more optimized performance, since the number of rounds of cipher execution is less than that Speck algorithm, which provides greater stability of algorithm with least number of executable rounds. 1 Introduction The modern world is crowded of various household devices, sensors, recognition mechanisms, intelligent systems and other amenities that are actively involved in the life of the modern society and a specific person. Every minute people are interacting with automated systems; manage their lives with the help of devices that operate by microcontrollers. In addition, low cost and easy accessibility contributes to wide distribution of micro-controllers market in industrial systems, control systems, as well as in household appliances of the mass–market segment. -

A Survey of ARX-Based Symmetric-Key Primitives

397 International Journal of Communication Networks and Information Security (IJCNIS) Vol. 11, No. 3, December 2019 A Survey of ARX-based Symmetric-key Primitives Nur Fasihah Mohd Esa1, Shekh Faisal Abdul-Latip1 and Mohd Rizuan Baharon1 1INSFORNET Centre for Advanced Computing Technology, Fakulti Teknologi Maklumat dan Komunikasi, Universiti Teknikal Malaysia Melaka Abstract: Addition Rotation XOR is suitable for fast and fast software-oriented implementation. Nevertheless, the implementation symmetric –key primitives, such as stream and security properties are still not well studied in literature as block ciphers. This paper presents a review of several block and compared to SPN and Feistel ciphers. stream ciphers based on ARX construction followed by the Observation of addition from [4]: First, addition modulo discussion on the security analysis of symmetric key primitives n where the best attack for every cipher was carried out. We 2 on the window can be approximated by addition modulo benchmark the implementation on software and hardware platforms according to the evaluation metrics. Therefore, this paper aims at . Second, this addition gives a perfect approximation if providing a reference for a better selection of ARX design strategy. the carry into the window is estimated correctly. The probability distribution of the carry is generated, depending Keywords: ARX, cryptography, cryptanalysis, design, stream on the probability of approximation correctness. The ciphers, block ciphers. probability of the carry is independent of w; in fact, for 1. Introduction uniformly distributed addends it is , where The rapid development of today’s computing technology has is the position of the least significant bit in the window. made computer devices became smaller which in turn poses Thirdly, the probability of correctness for a random guess of a challenge to their security aspects. -

Comparison of Hardware and Software Implementations of Selected Lightweight Block Ciphers

Comparison of Hardware and Software Implementations of Selected Lightweight Block Ciphers William Diehl, Farnoud Farahmand, Panasayya Yalla, Jens-Peter Kaps and Kris Gaj Department of Electrical and Computer Engineering, George Mason University, Fairfax, U.S.A. e-mail: {wdiehl, ffarahma, pyalla, jkaps, kgaj}@gmu.edu cases by which ciphers would be evaluated in latter rounds, Abstract— Lightweight block ciphers are an important topic of including lightweight applications (resource constrained research in the context of the Internet of Things (IoT). Current environments). The desired characteristics for authenticated cryptographic contests and standardization efforts seek to ciphers conforming to this use case include performance and benchmark lightweight ciphers in both hardware and software. energy efficiency in resource-constrained hardware and Although there have been several benchmarking studies of both software, including 8-bit CPUs [3]. hardware and software implementations of lightweight ciphers, In this work, we support the above efforts by implementing direct comparison of hardware and software implementations is difficult due to differences in metrics, measures of effectiveness, six secret-key block ciphers using two methods – custom and implementation platforms. In this research, we facilitate this hardware implementations using Register Transfer Level comparison by use of a custom lightweight reconfigurable (RTL) design, and software using a custom lightweight processor. We implement six ciphers, AES, SIMON, SPECK, reconfigurable 8-bit soft core microprocessor. Five of the PRESENT, LED and TWINE, in hardware using register transfer ciphers chosen are lightweight ciphers: SIMON 96/96, SPECK level (RTL) design, and in software using the custom 96/96, PRESENT-80, LED-80, and TWINE-80 [4 – 7]. -

Simpleenc and Simpleencsmall – an Authenticated Encryption Mode for the Lightweight Setting?

SimpleENC and SimpleENCsmall { an Authenticated Encryption Mode for the Lightweight Setting? Shay Gueron1 and Yehuda Lindell2 1 University of Haifa, Israel, and Amazon, USA 2 Bar Ilan University, Israel, and Unbound Tech Ltd., Israel Abstract. Block cipher modes of operation provide a way to securely encrypt using a block cipher, and different modes of operation achieve dif- ferent tradeoffs of security, performance and simplicity. In this paper, we present a new authenticated encryption scheme that is designed for the lightweight cryptography setting, but can be used in standard settings as well. Our mode of encryption is extremely simple, requiring only a single block cipher primitive (in forward direction) and minimal padding, and supports streaming (online encryption). In addition, our mode achieves very strong security bounds, and can even provide good security when the block size is just 64 bits. As such, it is highly suitable for lightweight settings, where the lifetime of the key and/or overall amount encrypted may be high. Our new scheme can be seen as an improved version of CCM that supports streaming, and provides much better bounds. 1 Introduction 1.1 Background and the Challenge Block ciphers are a basic building block in encryption. Modes of operation are ways of using block ciphers in order to obtain secure encryption, and have been studied for decades. Nevertheless, new computing settings and threats make the design of new and better modes of operation a very active field of research. For just one example, NIST has recently initiated a competition for a mode of operation that is suited for lightweight ciphers [18]. -

Trade-Off of Security and Performance of Lightweight Block Ciphers in Industrial Wireless Sensor Networks Chao Pei1,2,3,Yangxiao4*,Weiliang1,2* and Xiaojia Han1,2,3

Pei et al. EURASIP Journal on Wireless Communications and Networking (2018) 2018:117 https://doi.org/10.1186/s13638-018-1121-6 RESEARCH Open Access Trade-off of security and performance of lightweight block ciphers in Industrial Wireless Sensor Networks Chao Pei1,2,3,YangXiao4*,WeiLiang1,2* and Xiaojia Han1,2,3 Abstract Lightweight block ciphers play an indispensable role for the security in the context of pervasive computing. However, the performance of resource-constrained devices can be affected dynamically by the selection of suitable cryptalgorithms, especially for the devices in the resource-constrained devices and/or wireless networks. Thus, in this paper, we study the trade-off between security and performance of several recent top performing lightweight block ciphers for the demand of resource-constrained Industrial Wireless Sensor Networks. Then, the software performance evaluation about these ciphers has been carried out in terms of memory occupation, cycles per byte, throughput, and a relative good comprehensive metric. Moreover, the results of avalanche effect, which shows the possibility to resist possible types of different attacks, are presented subsequently. Our results show that SPECK is the software-oriented lightweight cipher which achieves the best performance in various aspects, and it enjoys a healthy security margin at the same time. Furthermore, PRESENT, which is usually used as a benchmark for newer hardware-oriented lightweight ciphers, shows that the software performance combined with avalanche effect is inadequate when it is implemented. In the real application, there is a need to better understand the resources of dedicated platforms and security requirement, as well as the emphasis and focus. -

Block Ciphers for the Iot – SIMON, SPECK, KATAN, LED, TEA, PRESENT, and SEA Compared

Block ciphers for the IoT { SIMON, SPECK, KATAN, LED, TEA, PRESENT, and SEA compared Michael Appel1, Andreas Bossert1, Steven Cooper1, Tobias Kußmaul1, Johannes L¨offler1, Christof Pauer1, and Alexander Wiesmaier1;2;3 1 TU Darmstadt 2 AGT International 3 Hochschule Darmstadt Abstract. In this paper we present 7 block cipher algorithms Simon, Speck, KATAN, LED, TEA, Present and Sea. Each of them gets a short introduction of their functions and it will be examined with regards to their security. We also compare these 7 block ciphers with each other and with the state of the art algorithm the Advanced Encryption Standard (AES) to see how efficient and fast they are to be able to conclude what algorithm is the best for which specific application. Keywords: Internet of things (IoT); lightweight block ciphers; SIMON; SPECK; KATAN; LED; TEA; PRESENT; SEA 1 Introduction In modern IT The Internet of Things(IoT) is one of the most recent topics. Through the technical progress the internet has increasingly moving into our daily lives. More and more devices get functions to go online interconnect with each other and send and receive data. The increasingly smaller and cheaper expectant electronic control and communication components were installed in particular in recent years, increasingly in things of daily life. Typical fields of application are for example home automation, Security technology in the private or business environment as well as the supporting usage in the industry [1]. Be- cause of the very high price sensitivity in this environment the focus is set on the efficiency of the used programs and algorithms.