The University of Sheffield

Recommended publications

February 26, 2021 Amazon Warehouse Workers In

February 26, 2021 Amazon warehouse workers in Bessemer, Alabama are voting to form a union with the Retail, Wholesale and Department Store Union (RWDSU). We are the writers of feature films and television series. All of our work is done under union contracts whether it appears on Amazon Prime, a different streaming service, or a television network. Unions protect workers with essential rights and benefits. Most importantly, a union gives employees a seat at the table to negotiate fair pay, scheduling and more workplace policies. Amazon accepts unions for entertainment workers, and we believe warehouse workers deserve the same respect in the workplace. We strongly urge all Amazon warehouse workers in Bessemer to VOTE UNION YES. In solidarity and support, Megan Abbott (DARE ME) Chris Abbott (LITTLE HOUSE ON THE PRAIRIE; CAGNEY AND LACEY; MAGNUM, PI; HIGH SIERRA SEARCH AND RESCUE; DR. QUINN, MEDICINE WOMAN; LEGACY; DIAGNOSIS, MURDER; BOLD AND THE BEAUTIFUL; YOUNG AND THE RESTLESS) Melanie Abdoun (BLACK MOVIE AWARDS; BET ABFF HONORS) John Aboud (HOME ECONOMICS; CLOSE ENOUGH; A FUTILE AND STUPID GESTURE; CHILDRENS HOSPITAL; PENGUINS OF MADAGASCAR; LEVERAGE) Jay Abramowitz (FULL HOUSE; GROWING PAINS; THE HOGAN FAMILY; THE PARKERS) David Abramowitz (HIGHLANDER; MACGYVER; CAGNEY AND LACEY; BUCK JAMES; JAKE AND THE FAT MAN; SPENSER FOR HIRE) Gayle Abrams (FRASIER; GILMORE GIRLS) Jessica Abrams (WATCH OVER ME; PROFILER; KNOCKING ON DOORS) Kristen Acimovic (THE OPPOSITION WITH JORDAN KLEPPER) Nick Adams (NEW GIRL; BOJACK HORSEMAN; -

As Writers of Film and Television and Members of the Writers Guild Of

July 20, 2021 As writers of film and television and members of the Writers Guild of America, East and Writers Guild of America West, we understand the critical importance of a union contract. We are proud to stand in support of the editorial staff at MSNBC who have chosen to organize with the Writers Guild of America, East. We welcome you to the Guild and the labor movement. We encourage everyone to vote YES in the upcoming election so you can get to the bargaining table to have a say in your future. We work in scripted television and film, including many projects produced by NBC Universal. Through our union membership we have been able to negotiate fair compensation, excellent benefits, and basic fairness at work, all of which are enshrined in our union contract. We are ready to support you in your effort to do the same. We’re all in this together. Vote Union YES! In solidarity and support, Megan Abbott (THE DEUCE) John Aboud (HOME ECONOMICS) Daniel Abraham (THE EXPANSE) David Abramowitz (CAGNEY AND LACEY; HIGHLANDER; DAUGHTER OF THE STREETS) Jay Abramowitz (FULL HOUSE; MR. BELVEDERE; THE PARKERS) Gayle Abrams (FRASIER; GILMORE GIRLS; 8 SIMPLE RULES) Kristen Acimovic (THE OPPOSITION WITH JORDAN KLEPPER) Peter Ackerman (THINGS YOU SHOULDN'T SAY PAST MIDNIGHT; ICE AGE; THE AMERICANS) Joan Ackermann (ARLISS) Ilunga Adell (SANFORD & SON; WATCH YOUR MOUTH; MY BROTHER & ME) Dayo Adesokan (SUPERSTORE; YOUNG & HUNGRY; DOWNWARD DOG) Jonathan Adler (THE TONIGHT SHOW STARRING JIMMY FALLON) Erik Agard (THE CHASE) Zaike Airey (SWEET TOOTH) Rory Albanese (THE DAILY SHOW WITH JON STEWART; THE NIGHTLY SHOW WITH LARRY WILMORE) Chris Albers (LATE NIGHT WITH CONAN O'BRIEN; BORGIA) Lisa Albert (MAD MEN; HALT AND CATCH FIRE; UNREAL) Jerome Albrecht (THE LOVE BOAT) Georgianna Aldaco (MIRACLE WORKERS) Robert Alden (STREETWALKIN') Richard Alfieri (SIX DANCE LESSONS IN SIX WEEKS) Stephanie Allain (DEAR WHITE PEOPLE) A.C. -

Won't Bow Don't Know

A NEW SERIES FROM THE CREATORS OF SM WON’T BOW DON’T KNOW HOW New Orleans, 2005 ONLINE GUIDE MAY 2010 SUNDAYS 10 PM/9C SATURDAY NIGHTS The prehistoric pals trade the permafrost for paradise in this third adventure in the Ice Age series. As Manny the Mammoth and his woolly wife prep for parenthood, Sid the Sloth decides to join his furry friend by fathering a trio of snatched eggs...which soon hatch into dinosaurs! It isn’t long before Mama T-Rex turns up looking for her tots and ends up leading the pack of glacial mates to a vibrant climate under the ice where dinos rule. “The best of the three films...” (Roger Ebert). Featuring the voices of Ray Romano, John Leguizamo, Denis Leary, Josh Peck and Queen Latifah. (MV) PG-1:34. HBO May 1,2,4,9,15,19,23,27,31 C5EH STARTS SATURDAY, MAY 1 Screwball quantum paleontologist Will Ferrell and three companions time warp back to the prehistoric age in this comical adventure based on the cult 1970s TV series. Danny McBride, Anna Friel and Jorma Taccone star as Ferrell’s cohorts whose routine expedition winds up leaving them in a primitive world of dinosaurs, cave-dwelling lizards, ape-like creatures and hungry mosquitoes. Directed by Brad Silberling; written by Chris Henchy & Dennis McNicholas, based on Sid & Marty Krofft’s Land of the Lost. (AC,AL,MV) PG13-1:42. HBO May 8,9,11,16,20,22,24,27,30 C5EH STARTS SATURDAY, MAY 8 A NEW MOVIE EVERY SATURDAY, GUARANTEED! SATURDAY NIGHTS One night in Vegas proves to be more than four guys arriving for a bachelor party can handle in this riotous Golden Globe®-winning comedy. She was born to save her sister’s life, but at what cost to her own? Cameron Diaz and Abigail Breslin star in this heart-pulling drama -

HBO: Brand Management and Subscriber Aggregation: 1972-2007

HBO: Brand Management and Subscriber Aggregation: 1972-2007 Submitted by Gareth Andrew James to the University of Exeter as a thesis for the degree of Doctor of Philosophy in English, January 2011. This thesis is available for Library use on the understanding that it is copyright material and that no quotation from the thesis may be published without proper acknowledgement. I certify that all material in this thesis which is not my own work has been identified and that no material has previously been submitted and approved for the award of a degree by this or any other University. Abstract: The thesis offers a revised institutional history of US cable network Home Box Office that expands on its under-examined identity as a monthly subscriber service from 1972 to 1994. This is used to better explain extensive discussions of HBO’s rebranding from 1995 to 2007 around high-quality original content and experimentation with new media platforms. The first half of the thesis particularly expands on HBO’s origins and early identity as part of publisher Time Inc. from 1972 to 1988, before examining how this affected the network’s programming strategies as part of global conglomerate Time Warner from 1989 to 1994. Within this, evidence of ongoing processes for aggregating subscribers, or packaging multiple entertainment attractions around stable production cycles, are identified as defining HBO’s promotion of general monthly value over rivals. Arguing that these specific exhibition and production strategies are glossed over in existing HBO scholarship as a result of an over-valuing of post-1995 examples of ‘quality’ television, their ongoing importance to the network’s contemporary management of its brand across media platforms is mapped over distinctions from rivals to 2007. -

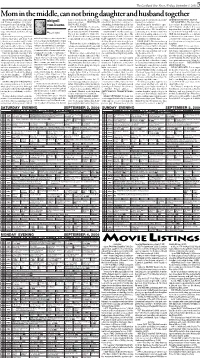

Mom in the Middle, Can Not Bring Daughter and Husband Together DEAR ABBY: I Was Recently Mar- Want to Tell Them Who Did It

The Goodland Star-News / Friday, September 1, 2006 5 Mom in the middle, can not bring daughter and husband together DEAR ABBY: I was recently mar- want to tell them who did it. I really coming a young woman, and sharing transsexual. Your friend needs under- BRENDA IN AUSTIN, TEXAS ried. I have a daughter, “Courtney,” abigail need some advice. — HURTING IN a bed with her father could be too standing, not isolation. DEAR BRENDA: The type of par- from a previous relationship. Things van buren HAYWARD, CALIF. stimulating, for both of them. If he has By all means he should see a psy- ties you have described are more for were great before the wedding. We DEAR HURTING: Pick up the further doubts about this, he should chiatrist — one who specializes in adults than for children. It is more even included Courtney in the plan- phone and call the Rape, Abuse and consult his daughter’s pediatrician. gender disorders. He should have important that your little girl be able ning. Afterward, however, things Incest National Network (RAINN). DEAR ABBY: About a month ago, counseling if he wants to take this to enjoy her birthday with some of dear abby turned sour. • The toll-free number is (800) 656- I was shocked out of my shoes. My where it is heading, and also to cope HER friends than it be a command Courtney kept causing problems 4673. Counselors there will guide you longtime friend, “Orville,” told me he with the loss of his friends. It would performance for your sisters. -

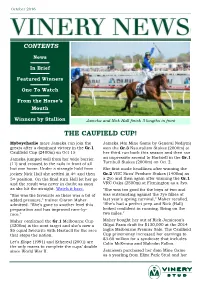

Oct 16 Newsletter

October 2016. CONTENTS: News In Brief; Featured Winners; One To Watch; From the Horse’s Mouth; Winners by Stallion. Jameka and Nick Hall finish 3 lengths in front. THE CAULFIELD CUP! Myboycharlie mare Jameka can join the greats after a dominant victory in the Gr.1 Caulfield Cup (2400m) on Oct 15. Jameka jumped well from her wide barrier (11) and crossed to the rails in front of all but one horse. Under a strangle hold from jockey Nick Hall she settled in 4th and then 5th position. On the final turn Hall let her go and the result was never in doubt as soon as she hit the straight. “She was the favourite so there was a bit of added pressure,” trainer Ciaron Maher admitted. “She’s gone to another level this preparation and has improved race-by-race.” Maher confirmed the Gr.1 Melbourne Cup (3200m) is the next target and she’s now a $5 equal favourite with Hartnell for the race. Jameka (4m Mine Game by General Nediym) won the Gr.3 Naturalism Stakes (2000m) at her third run back this season and then ran an impressive second to Hartnell in the Gr.1 Turnbull Stakes (2000m) on Oct 2. She first made headlines after winning the Gr.2 VRC Sires’ Produce Stakes (1400m) as a 2yo and then again after winning the Gr.1 VRC Oaks (2500m) at Flemington as a 3yo. “She was too good for the boys at two and was outstanding against the 3yo fillies at last year’s spring carnival,” Maher recalled. “She’s had a perfect prep and Nick (Hall) looked confident in running. Bring on the two miles.” Maher bought her out of Rick Jamieson’s Gilgai Farm draft for $130,000 at the 2014 Inglis Melbourne Premier Sale. -

Great Gift Ideas! Paid Too Much! A2 | FRIDAY, DECEMBER 14, 2018 the SUMTER ITEM

CLARENDON SUN: Luncheon brings cheer to families Dec. 20 A7 LOCAL Hear Handel’s ‘Messiah’ on Sunday A2 SERVING SOUTH CAROLINA SINCE OCTOBER 15, 1894 FRIDAY, DECEMBER 14, 2018 75 CENTS ‘Headed in the right direction’ Program teaches adults skills to remove barriers to job offers BY KAYLA ROBINS A low unemployment rate in South Carolina also comes with the ramification that employers often find it difficult to fill current vacancies or hire new people who are ready to work in expanding opportunities. Sumter’s 3.9 percent unemployment rate in the state’s vibrant economy (2.2 million South Carolinians are now working) may mean those who are not working have faced barriers at the interview desk in tighter times. A graduation ceremony at Central Carolina Technical College’s Advanced Manufacturing Technology SEE WORK, PAGE A11 KAYLA ROBINS / THE SUMTER ITEM Graduates of the Back to Work program show off their diplomas with state Rep. Kevin Weeks, D-Sumter, fourth from the left, and Chris McKinney, executive director of Santee-Lynches Regional Council of Governments. Florence man is in court for 2015 killing in Lynchburg BY ADRIENNE SARVIS A Florence native accused of killing a supposed friend in 2015 is having his time in court without a jury after requesting a bench trial at Sumter County Judicial Center, according to county authorities. George Jacob Shine, 34, faces a murder charge and a charge of possession of a weapon during a violent crime after 70-year-old Joseph Frey was found dead with two gunshot wounds at a residence off Narrow Paved Road on Nov. -

The A+ List Positive Former Child Actors

The A+ List Positive Former Child Actors NAME Age of Known as a Child For... As an Adult... first job Adlon, Pamela (Segall) 8 Grease 2, Little Darlings, Kelly of Facts of Life Actor / Writer / Producer, Voiceover artist including an Emmy for King of the Hill (Emmy). Ashley on Recess, Californication, Louie, Better Things. Nominated for multiple primetime Emmys, WGA and PGA awards. Allen, Aleisha 4 Model at 4, Blues Clues at 6, Are We There Yet, Columbia University, Pace University, Speech Pathologist, and School of Rock clinical instructor at Teachers College, Columbia University. Ali, Tatyana 6 Sesame Street, Star Search, Fresh Prince of Bel Harvard Grad (BS African-American Studies and Government), Air spokesperson for Barack Obama campaign, actor / singer with several albums and continued guest stars and film roles. Married, son. Ambrose, Lauren 14 Law and Order Actor Six Feet Under, London Theatre 2004-“Buried Child”, Can't Hardly Wait Appleby, Shiri 4 Commercials, Santa Barbara (soap) Actor Roswell, Swimfan USC Applegate, Christina 5 First job: Playtex commercial. Beatlemania, Actor: Married with Children, Samantha Who (Emmy nom), mo. Days of Our Lives, Married with Children, Grace Jessie (series), Don't Tell Mom the Babysitter's Dead, Mars Kelly, New Leave it to Beaver, Charles in Attacks, Friends (Emmy nom). Mom to be, breast cancer Charge, Heart of the City survivor and research advocate. Astin, Sean 9 Goonies (son of John Astin and Patty Duke) Director, Actor Lord of the Rings UCLA grad (1997) Atchison, Nancy 7 First job: Fried Green Tomatoes, The Long Walk Non-profit Professional: Director of Development at Home, Wild Hearts Can’t Be Broken, The Prince Birmingham Museum of Art. -

The International Journal of Research Into New Media Technologies

Convergence: The International Journal of Research into New Media Technologies http://con.sagepub.com/ Mapping commercial intertextuality: HBO's True Blood Jonathan Hardy Convergence 2011 17: 7 DOI: 10.1177/1354856510383359 The online version of this article can be found at: http://con.sagepub.com/content/17/1/7 Published by: http://www.sagepublications.com Additional services and information for Convergence: The International Journal of Research into New Media Technologies can be found at: Email Alerts: http://con.sagepub.com/cgi/alerts Subscriptions: http://con.sagepub.com/subscriptions Reprints: http://www.sagepub.com/journalsReprints.nav Permissions: http://www.sagepub.com/journalsPermissions.nav Citations: http://con.sagepub.com/content/17/1/7.refs.html >> Version of Record - Feb 18, 2011 What is This? Downloaded from con.sagepub.com at University of Sheffield on September 4, 2013 Articles Convergence: The International Journal of Research into Mapping commercial New Media Technologies 17(1) 7–17 ª The Author(s) 2011 intertextuality: HBO’s Reprints and permission: sagepub.co.uk/journalsPermissions.nav True Blood DOI: 10.1177/1354856510383359 con.sagepub.com Jonathan Hardy University of East London, UK Abstract Corporate synergy, cross-media promotion and transmedia storytelling are increasingly prevalent features of media production today, yet they generate highly divergent readings. Criticism of syner- gistic corporate control and commercialism in some political economic accounts contrasts with celebrations of fan/prosumer agency, active audiences and resistant readings in others. This debate reviews the different approaches taken by political economists and culturalists in their analyses of commercial intertextuality. Tracing influential studies, it considers the tensions and affinities between divergent readings, and grounds for synthesis. -

Report Resumes

REPORT RESUMES ED 018 101 TE 000 133 THE POWER OF ORAL LANGUAGE, SPEAKING AND LISTENING, A GUIDE FOR TEACHERS OF ENGLISH--GRADES 7, 8, 9. SANTA CLARA COUNTY OFFICE OF EDUC., SAN JOSE, CALIF PUB DATE JUN 66 EDRS PRICE MF-$1.00 HC-$8.88 220P. DESCRIPTORS- *CURRICULUM GUIDES, *ENGLISH INSTRUCTION, *JUNIOR HIGH SCHOOL STUDENTS, *LISTENING, *SPEAKING, ORAL COMMUNICATION, ORAL EXPRESSION, LANGUAGE ARTS, SECONDARY EDUCATION, SANTA CLARA COUNTY, CALIFORNIA, SANTA CLARA COUNTY'S GUIDE FOR ENGLISH TEACHERS OF GRADES SEVEN, EIGHT, AND NINE IS ARRANGED AROUND SIX MAJOR GOALS WHICH ENCOURAGE STUDENTS--(1) TO ORGANIZE THE CONTENT OF ORAL LANGUAGE IN RELATION TO PURPOSE AND LISTENER, (2) TO SPEAK EASILY AND EFFECTIVELY IN A VARIETY OF SITUATIONS, (3) TO PARTICIPATE EFFECTIVELY IN DEMOCRATIC DISCUSSION PROCEDURES, (4) TO USE STANDARD INFORMAL ENGLISH IN SITUATIONS REQUIRING THE ACCEPTED CONVENTIONS OF SPOKEN LANGUAGE, (5) TO LISTEN COURTEOUSLY AND CRITICALLY, AND (6) TO LISTEN CREATIVELY. BASIC CONCEPTS, DESIRABLE ATTITUDES, AND MAJOR SKILLS AND COMPETENCIES TO BE ACQUIRED BY THE STUDENT ARE SPECIFIED FOR EACH GOAL. CLASSROOM ACTIVITIES AND TEACHING TECHNIQUES ARE PRESENTED IN A LOGICAL SEQUENCE TO HELP DEVELOP THESE CONCEPTS AND SKILLS, AND EVALUATION METHODS ARE SUGGESTED. AN APPENDIX CONTAINS COMMENTS ABOUT PROPAGANDA AND A BIBLIOGRAPHY OF BOOKS FOR THE JUNIOR HIGH SCHOOL LITERATURE PROGRAM. THIS GUIDE, RECOMMENDED BY THE NCTE COMMITTEE TO REVIEW CURRICULUM GUIDES, IS NOTED IN "ANNOTATED LIST OF RECOMMENDED ELEMENTARY AND SECONDARY CURRICULUM GUIDES IN ENGLISH, 1967." (SEE ED 014 490.) IT IS ALSO AVAILABLE FROM THE SANTA CLARA COUNTY OFFICE OF EDUCATION, 70 WEST HEDDING STREET, SAN JOSE, CALIFORNIA 95110, FOR $3.50. -

Championship Trifecta Celebrating the Champions of Breeders Crown, Indiana Sires Stakes and the Governor’S Cup

FEATURE: Super Finals, Super Night: Indiana crowns its champions for 2020. Story on page 6. NOVEMBER 2020 Indiana Standardbred MAGAZINE: Serving the Indiana Harness Racing Industry. Championship Trifecta: Celebrating the Champions of Breeders Crown, Indiana Sires Stakes and the Governor’s Cup. Produced by the Indiana Standardbred Association. Thank You: Harrah’s Hoosier Park Racing & Casino would like to thank The Hambletonian Society, Libfeld-Katz Breeding Partnership, Indiana Standardbred Association, Indiana Horse Racing Commission and all other supporting sponsors for their contributions to the success of the 2020 Breeders Crown during this unique time. Additionally, we would also like to thank and congratulate all Breeders, Owners, Trainers & Drivers for an outstanding and historic event. We were honored to serve as the host track and look forward to supporting the Breeders Crown in the future. Must be 18 or older to wager on horse racing at racetracks and 21 or older to gamble at sports books and casinos. Know When To Stop Before You Start.® Gambling Problem? Call 1-800-9-WITH-IT (1-800-994-8448). ©2020 Caesars License Company, LLC. From the EDITOR, Indiana Standardbred MAGAZINE: In the first “Letter from the Editor” of 2020, the issue was about how exciting 2020 was going to be. Well, 2020 has been exciting, alright, just not in the way anyone pictured. The year started off with momentum from 2019 and the addition of table games would have been a great lead in to the return of the Breeders Crown. Disruptions the entire season have changed the landscape of racing in Indiana, which could have easily canceled the Breeders Crown. [Pull quote: “…Indiana didn’t waver, they just”]