Development of Quranic Reciter Identification System Using MFCC and GMM Classifier

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Original Paper the Tayyibāt in Islam

World Journal of Education and Humanities ISSN 2687-6760 (Print) ISSN 2687-6779 (Online) Vol. 2 No. 1, 2020 www.scholink.org/ojs/index.php/wjeh Original Paper The Tayyibāt in Islam Yousef Saa’deh1* & Mustafa Yuosef Saa’deh2 1 Department of Accounting, School of Business, University of Jordan, Amman, Jordan 2 Department of Accounting, School of Maritime Business and Management, University Malaysia Terengganu, Terengganu, Malaysia * Yousef Saa’deh, Department of Accounting, School of Business, University of Jordan, Amman, Jordan Received: September 18, 2019 Accepted: October 2, 2019 Online Published: October 5, 2019 doi:10.22158/wjeh.v2n1p1 URL: http://dx.doi.org/10.22158/wjeh.v2n1p1 Abstract The expressions of the Quran regarding the tayyibāt (good things) have always carried good meanings and ethical and intellectual values, because of the relationship of the tayyibāt with the worldview, the belief, and the character of the Ummah. This is what Islam is keen to assert, protect, care for, and preserve, because of its importance for the continuation of Islam and its mission over time, which always makes it a fertile field for research, especially when Islam is attacked from every angle, including the tayyibāt. Moreover, it also serves to remind Muslims of their religion’s constants and its teachings, to help them in facing this incoming corruption, whereby non-Muslims promote all types of khabāith (bad things), such as doctrines of religious groups and secularism, and food and drinks such as alcohol, drugs, marijuana, and others. This requires the continued vigilance of Muslims and their keenness to protect the beliefs of the Ummah, its members, and their future in this regard by always studying the tayyibāt and khabāith.

Flow Chart Macro Structure 80. Surah Abasa

NurulQuran Dawrah e Quran Flow Chart Macro Structure 80. Surah Abasa (He Frowned) Verses: 42; Makki; Paragraphs: 7

- Paragraph 1 (V1-10): Etiquettes of inviting people to others/Da'awa manners.
- Paragraph 2 (V11-16): Quran has noble message & best advice, leave arrogance and accept Islam.
- Paragraph 3 (V17-21): Arguments given, and people are asked to believe in hereafter.
- Paragraph 4 (V22-23): Invitation has been given to follow the commands specified in Quran.
- Paragraph 5 (V24-32): Allah swt is Creator, Providence and Sustainer of this Universe, he will gather you all on the day of judgement.
- Paragraph 6 (V33-37): Scenes of the day of resurrection.
- Paragraph 7 (V38-42): The result will be different for the pious and for the ones who are disbelievers.

Main Themes: Accept the message of Quran & believe in hereafter. Do Good deeds. Arrogant people who reject the message of Quran are disbelievers.

Period of Revelation: The commentators and traditionists are unanimous about the occasion of the revelation of this Surah. According to them, once some big chiefs of Makkah were sitting in the Holy Prophet's assembly and he was earnestly engaged in trying to persuade them to accept Islam. At that very point, a blind man, named Ibn Umm Maktum, approached him to seek explanation of some point concerning Islam. The Holy Prophet (upon whom be peace) disliked his interruption and ignored him. Thereupon Allah sent down this Surah. From this historical incident the period of the revelation of this Surah can be precisely determined. In the first place, it is confirmed that Hadrat Ibn Umm Maktum was one of the earliest converts to Islam.

Mistranslations of the Prophets' Names in the Holy Quran: a Critical Evaluation of Two Translations

Journal of Education and Practice www.iiste.org ISSN 2222-1735 (Paper) ISSN 2222-288X (Online) Vol.8, No.2, 2017 Mistranslations of the Prophets' Names in the Holy Quran: A Critical Evaluation of Two Translations Izzeddin M. I. Issa Dept. of English & Translation, Jadara University, PO box 733, Irbid, Jordan Abstract This study is devoted to discussing the renditions of the prophets' names in the Holy Quran, given the authority of the religious text where they appear, the significance of the figures who carry them, the fact that they exist in many languages, and the fact that the Holy Quran addresses all mankind. The data are drawn from two translations of the Holy Quran by Ali (1964), and Al-Hilali and Khan (1993). It examines the renditions of the twenty-five prophets' names with reference to translation strategies in this respect, showing that Ali confused the conveyance of six names whereas Al-Hilali and Khan confused the conveyance of four names. Discussion has been raised thereupon to present the correct rendition according to English dictionaries and encyclopedias in addition to versions of the Bible which add a historical perspective to the study. Keywords: Mistranslation, Prophets, Religious, Al-Hilali, Khan. 1. Introduction Prophets’ names comprise a significant part of people's names, which in turn constitute a main subdivision of proper nouns; these include, in addition to people's names, the names of countries, places, months, days, holidays etc. In terms of translation, many translators opt for transliterating proper names, thinking that transliteration is a straightforward process, depending on an idea deeply rooted in many people's minds that proper nouns are never translated or that the translation of proper names is, as Vermes (2003:17) states, "a simple automatic process of transference from one language to another." However, in the real world the issue is different viz.

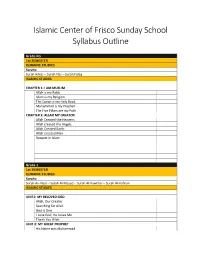

Islamic Center of Frisco Sunday School Syllabus Outline

Islamic Center of Frisco Sunday School Syllabus Outline Grade KG 1st SEMESTER QURANIC STUDIES Surahs: Surah Ikhlas – Surah Nas – Surah Falaq ISLAMIC STUDIES CHAPTER 1: I AM MUSLIM Allah is my Rabb Islam is my Religion The Quran is my Holy Book Muhammad is my Prophet The Five Pillars are my Path CHAPTER 2: ALLAH MY CREATOR Allah Created the Heavens Allah created the Angels Allah Created Earth Allah Created Man Respect in Islam Grade 1 1st SEMESTER QURANIC STUDIES Surahs: Surah An-Nasr – Surah Al-Masad - Surah Al-Kawthar – Surah Al-Kafirun ISLAMIC STUDIES UNIT1: MY BELOVED GOD Allah, Our Creator Searching for Allah God is One I Love God, He Loves Me Thank You Allah UNIT 2: MY GREAT PROPHET His Name was Muhammad Muhammad as a Child Muhammad Worked Hard The Prophet’s Family Muhammad Becomes a Prophet Sahaba: Friends of the Prophet UNIT 3: WORSHIPPING ALLAH Arkan-ul-Islam: The Five Pillars of Islam I Love Salah Wud’oo Makes me Clean Zaid Learns How to Pray UNIT 4: MY MUSLIM WORLD My Muslim Brothers and Sisters Assalam o Alaikum Eid Mubarak UNIT 5: MY MUSLIM MANNERS Allah Loves Kindness Ithaar and Caring I Obey my Parents I am a Muslim, I must be Clean A Dinner in our Neighbor’s Home Leena and Zaid Sleep Over at their Grandparents’ Home Grade 2 1st SEMESTER QURANIC STUDIES Surahs: Surah Al-Quraish – Surah Al-Maun - Surah Al-Humaza – Surah Al-Feel ISLAMIC STUDIES UNIT1: IMAN IN MY LIFE I Think of Allah First I Obey Allah: The Story of Prophet Adam (A.S) The Sons of Adam I Trust Allah: The Story of Prophet Nuh (A.S) My God is My Creator Taqwa: -

If Eid Is on Sunday, Quran Gallery Date Will Be Changed to June 24th, 2017

If Eid is on Sunday, Quran Gallery date will be changed to June 24th, 2017.

Part 1: Memorize the Surah in each participant's age group (see chart below)

| Ages | Surahs |
| --- | --- |
| Age 5 and below | Fatiha, Ikhlaas |
| Age 6 | Naas |
| Age 7 | Fil |
| Age 8 | Zilzaal |
| Age 9 | Teen |
| Age 10 | Aadiyaat |
| Age 11 | Shams |
| Age 12 | Bayyinah |
| Age 13 | Infitar |
| Age 14 | Layl |
| Age 15 | Al-Ala |
| Age 16 | Balad |
| Age 17 | Ghashiyah |
| Age 18 | Inshiqaq |
| Age 19 | Buruj |
| Age 20 | Takwir |
| Age 21 and above | Naba, Mutaffifin, Abasa, Fajr |

On June 25th, participants will be reciting the memorized Surah to the panel of judges. Time will be announced closer to the date.

Note: These Surahs have been placed in order of difficulty. Please keep in mind that some Surahs have more ayaats, but are easier than other Surahs which have fewer ayaats.

Note: If there are too many participants in one age group, participants will be assigned different surahs.

Part 2: Present a display board with key concepts of assigned surah. Please collect your board from Sister Zainab Razavi or Sister Sakina Jaffari on Saturday May 27th.

Mandatory requirements on display board (for ALL ages)

1. Title of Surah
2. Arabic Script (can be printed, however handwritten is preferred as writing the Quran has immense blessings)
3. Translation (please refer to specific translators found on References page)
4. When the Surah was revealed and relevant information regarding revelation
5. Main theme of Surah (what is the Surah talking about)
6. What are the benefits of reciting this Surah?
7. Other interesting facts related to Surah
8. Diagram or Picture related to tafseer of Surah
9.

An Analytical Study of Women-Related Verses of Sūra An-Nisaʾ

Gunawan Adnan, Women and The Glorious Qurʾān: An Analytical Study of Women-Related Verses of Sūra An-Nisaʾ. Published in the Universitätsdrucke series of Universitätsverlag Göttingen, 2004. Die Deutsche Bibliothek – CIP-Einheitsaufnahme: a catalogue record for this publication is available from Die Deutsche Bibliothek. © All rights reserved, Universitätsverlag Göttingen 2004 ISBN 3-930457-50-4 Respectfully dedicated to My honorable parents ...who gave me a wonderful world. To my beloved wife, son and daughter ...who make my world beautiful and meaningful as well. Acknowledgements All praises be to Allāh for His blessing and for granting me the health, strength, ability and time to finish the Doctoral Program leading to this book at the right time. I am indebted to several persons and institutions that made it possible for this study to be undertaken. My greatest intellectual debt goes to my academic supervisor, Doktorvater, Prof. Tilman Nagel, for his invaluable advice, guidance, patience and constructive criticism throughout the various stages in the preparation of this dissertation. My special thanks go to Prof. Brigitta Benzing and Prof. Heide Inhetveen, whose interests, comments and guidance were of invaluable assistance. The Seminar for Arabic of Georg-August University of Göttingen, with its international reputation, has enabled me to enjoy a very favorable environment to expand my insights and experiences, especially in the themes of Islamic studies, literature, philosophy, philology and other oriental studies. My thanks are due to Dr. Abdul Razzāq Weiss, who provided substantial advice and constructive criticism for the perfection of this dissertation.

Surah Yunus Most Merciful

Surah Yunus. In the name of Allah, the Most Gracious, the Most Merciful. 1. Alif Lam Ra. These are the verses of the wise Book. 2. Is it a wonder for mankind that We revealed (Our revelation) to a man from among them (saying), “Warn mankind and give glad tidings to those who believe that for them will be a respectable position near their Lord?” (But) the disbelievers say, “Indeed, this is an obvious magician.” 3. Indeed, your Lord is Allah, the One Who created the heavens and the earth in six periods and then established Himself on the throne, disposing the affairs (of all things). There is no intercessor except after His permission. That is Allah, your Lord, so worship Him. Then will you not remember? 4. To Him, you will all return. The Promise of Allah is true.


Surah Abasa [80] - Dream Tafseer Notes - Nouman Ali Khan

Asalaam alaikum Warahmatulah Wabarakatuh. Surah Abasa [80] - Dream Tafseer Notes - Nouman Ali Khan [ Download Original Lectures in this series by Nouman Ali Khan from; Bayyinah.com/media ]

Connection of Previous Surah [Nazi'at 79] to this Surah [Abasa]: In the previous surah of Nazi'aat - we found 2 types of contrasting people near the end.

فَأَمَّا مَن طَغَىٰ وَآثَرَ الْحَيَاةَ الدُّنْيَا فَإِنَّ الْجَحِيمَ هِيَ الْمَأْوَىٰ

As for him who was rebellious, [who] disbelieved, and preferred the life of this world, Then indeed, Hellfire will be [his] refuge. [Nazi'at 79: 37-39]

وَأَمَّا مَنْ خَافَ مَقَامَ رَبِّهِ وَنَهَى النَّفْسَ عَنِ الْهَوَىٰ فَإِنَّ الْجَنَّةَ هِيَ الْمَأْوَىٰ

But as for he who feared the position of his Lord and prevented the soul from [unlawful] inclination, Then indeed, Paradise will be [his] refuge. [Nazi'at 79: 40-41]

In this surah too there are 2 types of people; 1 - The one who doesn't care [Istaghnaa]. 2 - The one who fears and comes to the Messenger of Allah running. Believer and disbeliever. We see such characters - from the previous surah's descriptions - being enacted within this surah.

http://linguisticmiracle.blogspot.com/ --- http://literarymiracle.wordpress.com/

Introduction: Respecting Allah's Messenger: This Surah discusses a really sensitive topic, so we should be careful about what we say when talking about Allah's Messenger (sal Allah alaihi wasalam.) In Madani Qur'an (Qur'an revealed in Madinah) - we see that Allah criticizes the bedouins for just talking casually with the Messenger of Allah like they talk to other people.

Zero Hunger by 2030

JULY 2016. Zero hunger by 2030: The not-so-impossible dream. Ministerial Council holds 37th session. OFID gifts sculpture to Vienna. Child of Play exhibit highlights plight of refugee children. OFID and UNDP launch Arab Development Portal.

OFID Quarterly is published four times a year by the OPEC Fund for International Development (OFID). OFID is the development finance agency established in January 1976 by the Member States of OPEC (the Organization of the Petroleum Exporting Countries) to promote South-South cooperation by extending development assistance to other, non-OPEC developing countries. OFID Quarterly is available free-of-charge. If you wish to be included on the distribution list, please send your full mailing details to the address below. Back issues of the magazine can be found on our website in PDF format. OFID Quarterly welcomes articles and photos on development-related topics, but cannot guarantee publication. Manuscripts, together with a brief biographical note on the author, may be submitted to the Editor for consideration. The contents of this publication do not necessarily reflect the official views of OFID or its Member Countries.

COMMENT

- Hunger: More than a moral outrage

SPECIAL FEATURE: ZERO HUNGER BY 2030

- Zero hunger by 2030: The not-so-impossible dream
- Ending hunger: The nexus approach
- Food security: An integrated approach to a multidimensional problem. Interview with chair of Committee on World Food Security
- OFID in the Field
- Palestine: Food security through the dry seasons
- El Salvador: Promising a square meal for all
- Revitalizing rural communities in Africa

MINISTERIAL COUNCIL HOLDS 37TH SESSION

- Ministerial Council gathers to mark OFID’s 40th Anniversary

Exam Questions (A) Ashab-I Dari'l-Erkam

Exam Questions (A) Ashab-ı Dari’l-Erkam

1. Who are the people referred to as “those who are misguided” and “those who go astray” in Surah al-Fatiha?
   a. Hypocrites (al-Munafikun) - Idolaters (al-Mushrikun)
   b. Idolaters (al-Mushrikun) - Heretics (al-Kafirun)
   c. Christians - Magi
   d. Christians - Jews

2. Which of the following is a correct match?
   a. Quran - The Prophet Moses (as)
   b. Psalms (Zabur) - The Prophet David (as)
   c. The Torah - The Prophet Solomon (as)
   d. Bible - The Prophet Zakariyyah

3. Which of the following answers has been given as a Surah name in the Quran?
   a. Mosquito
   b. Bird
   c. Fish
   d. Bee

4. Which of the following is not one of the names of the Holy Quran?

13. According to Surah al-Mulk, how did Allah describe the ones who believed and the ones who disbelieved?
   a. The person who walks / the person who is in delusion
   b. The person who is in Heaven / the person who is in Hell
   c. The person who is in darkness / the person who is in light
   d. Good person / bad person

14. According to Surah Mulk, what is the call of the guards of hell towards the sinners?
   a. Taste the punishment after your sins
   b. You are going to stay here forever
   c. Today, God’s promise has come true!
   d. Did there not come to you a warner?

15. Which of the following is not the cause of the disaster sent to the owners of the garden as reported in the Surah Qalem?
   a. Not helping the poor
   b. Because of collecting goods
   c.

Nota Bene-- H:\TCH\WORLDR~1\ISLAM

Sūras of the Qur’ān

| # | Name | Translation | Verses |
| --- | --- | --- | --- |
| 1 | al-Fātiḥah | The Opening | 7 |
| 2 | al-Baqarah | The Cow | 286 |
| 3 | Āl ‘Imrān | The Family of ‘Imrān | 200 |
| 4 | an-Nisā’ | The Women | 176 |
| 5 | al-Mā’idah | The Table | 120 |
| 6 | al-An’ām | The Cattle | 165 |
| 7 | al-A’rāf | The Heights | 206 |
| 8 | al-Anfāl | The Spoils | 75 |
| 9 | at-Taubah (al-Bara’ah) | Repentance (Immunity) | 129 |
| 10 | Yūnus | Jonah | 109 |
| 11 | Hūd | Hūd | 123 |
| 12 | Yūsuf | Joseph | 111 |
| 13 | ar-Ra’d | The Thunder | 43 |
| 14 | Ibrāhīm | Abraham | 52 |
| 15 | al-Ḥijr | al-Ḥijr | 99 |
| 16 | an-Naḥl | The Bee | 128 |
| 17 | al-Isrā’ (Banū Isrā’īl) | The Night Journey (The Children of Israel) | 111 |
| 18 | al-Kahf | The Cave | 110 |
| 19 | | | |
| 58 | al-Mujādilah | The Disputer | 22 |
| 59 | al-Ḥashr | The Mustering | 24 |
| 60 | al-Mumtaḥanah | The Woman Tested | 13 |
| 61 | aṣ-Ṣaff | The Ranks | 14 |
| 62 | al-Jumu’ah | The Congregation | 11 |
| 63 | al-Munāfiqūn | The Hypocrites | 11 |
| 64 | at-Taghābun | Mutual Fraud | 18 |
| 65 | aṭ-Ṭalāq | Divorce | 12 |
| 66 | at-Taḥrīm | The Prohibition | 12 |
| 67 | al-Mulk | The Kingdom | 30 |
| 68 | al-Qalam | The Pen | 52 |
| 69 | al-Ḥāqqah | The Indubitable | 52 |
| 70 | al-Ma’ārij | The Stairways | 44 |
| 71 | Nūḥ | Noah | 28 |
| 72 | al-Jinn | The Jinn | 28 |
| 73 | al-Muzzammil | The Enwrapped One | 20 |
| 74 | al-Muddaththir | The Shrouded One | 56 |
| 75 | al-Qiyāmah | The Resurrection | 40 |
| 76 | al-Insān (ad-Dahr) | Man (Time) | 31 |
| 77 | al-Mursalāt | Those That Are Sent | 50 |

The Analysis of a Translation of Musyakalah Verses in the Holy Quran Published by the Indonesian Department of Religious Affairs

Preprints (www.preprints.org) | NOT PEER-REVIEWED | Posted: 6 August 2016 doi:10.20944/preprints201608.0057.v1 Article The Analysis of a Translation of Musyakalah Verses in the Holy Quran Published by the Indonesian Department of Religious Affairs Yayan Nurbayan Department of Arabic Language, Faculty of Language and Linguistic, Universitas Pendidikan Indonesia, Jalan. Dr. Setiabudhi No.229, Bandung, Indonesia; [email protected] Abstract: Musyakalah is one of the Arabic linguistic styles included under the category of majaz. This style is commonly used in Al-Quran. The Indonesian translation of Al-Quran is a case where many of the figures of speech are translated literally, thereby causing serious semantic problems. Thus, the research problem of this study is formulated with the following questions: 1) How many musyakalah ayahs are there in Al-Quran?; 2) How are the musyakalah ayahs translated, literally (harfiyya) or interpretatively (tafsiriyya)?; 3) How many ayahs are translated literally and how many are translated interpretatively?; and 4) Which translated musyakalah ayahs have the potential to raise semantic and theological problems? The corpus in this research consists of all musyakalah ayahs in Al-Quran and their translation to Indonesian published by the Department of Religious Affairs of Indonesia. The research adopted a descriptive-semantic method.
The findings of this research are: 1) There are only eleven ayahs in Al-Quran using musyakalah style, namely: Alhasyr ayah 19, Ali Imran ayah 54, Annaml ayah 50, Alanfal ayah 30, Asysyura ayah 40, Albaqarah ayah 15, Almaidah 116, Aljatsiah ayah 34, Attaubah ayah 79, Annisa ayah 142, and Albaqarah 194; 2) The musyakalah ayahs translated literally are: Aljatsiah 34, Almaidah 116, Asysyura 40, Annaml 50, and Alhasyr 19, whereas the musyakalah ayahs translated interpretatively are Albaqarah 194, Annisa 142, Attaubah 79, Albaqarah 15, Alanfal 30, and Ali Imran 54; 3) Of the eleven musyakalah ayahs, only Alhasyr ayah 19 is translated correctly and does not have the potential of creating misinterpretation.