Analytics on Indoor Moving Objects with Applications in Airport Baggage Tracking

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Shipping Package XML Developers Guide July 9, 2012

Shipping Package - XML 7/9/2012 Shipping Package XML Developers Guide July 9, 2012 © 2012 United Parcel Service of America, Inc. All Rights Reserved. Confidential and Proprietary Page 1 Shipping Package - XML 7/9/2012 Notice In all communications with UPS concerning this document, please refer to the document date located on the cover. Copyright The use, disclosure, reproduction, modification, transfer, or transmittal of this work for any purpose in any form or by any means without the written permission of United Parcel Service is strictly prohibited. © Copyright 2012 United Parcel Service of America, Inc. All Rights Reserved. Trademarks Some of the UPS corporate applications use U.S. city, state, and postal code information obtained by United Parcel Service of America, Inc. under a non-exclusive license from the United States Postal Service. © 2012 United Parcel Service of America, Inc. All Rights Reserved. Confidential and Proprietary Page 2 Shipping Package - XML 7/9/2012 Table of Contents 1.1 Important Information...............................................................................................................6 1.2 Welcome to the UPS API Developer's Guides..........................................................................7 1.2.1 Release features........................................................................................................................7 1.2.2 How to Use this Guide .......................................................................................................12 1.3 Business Processes -

Shipping Guide

Shipping Guide September 9, 2020 2020.2 Copyright © 2005, 2020, Oracle and/or its affiliates. All rights reserved. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error- free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are "commercial computer software" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, use, duplication, disclosure, modification, and adaptation of the programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, shall be subject to license terms and license restrictions applicable to the programs. No other rights are granted to the U.S. Government. This software or hardware is developed for general use in a variety of information management applications. -

Other Charges

ups.com 1-800-PICK-UPS® Other Charges Domestic International Midday ® ® ® ® ® ® ® Additional charges may ® ® Early from Canada from apply to your shipment. ® ® ® ® ® For additional information and rates, refer to the Ground Canada to/from Standard Mexico to/from Standard following pages. ® ® ® UPS Next DayAir UPS Next DayAir UPS Next DayAir Saver UPS 2nd Day Air A.M. UPS 2nd Day Air UPS 3 Day Select UPS UPS Groundwith Freight Pricing UPS Worldwide Express Plus UPS Worldwide Express NA1 Express Worldwide UPS Freight Express Worldwide UPS Freight Express Worldwide UPS UPS Worldwide Saver Expedited Worldwide UPS UPS 3 Day Select UPS UPS Additional Handling ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● Address Correction ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● Chargeback for ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● Consignee Billing Shipments Dangerous Goods – International Dangerous Goods ● ● ● ● ● ● ● ● ● – Hazardous Materials ● ● ● ● ● ● ● – Dry Ice ● ● ● ● ● ● ● ● ● ● ● ● Delivery Area Surcharge ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● Delivery Reattempt ● ● Destination Outside Service Area ● Extended Area Surcharge ● ● ● ● ● ● ● ● ● Large Package Surcharge ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● Missing/Invalid Account Number ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● or Refusal Fee UPS On-Call Pickup® Area Surcharge ● ● ● ● ● ● ● ● ● ● ● ● ● ● Over Maximum Limits ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● Oversize Pallet Handling Surcharge ● ● Package Tracking, Tracing ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● ● and Refund Requests Pickup Area Surcharge ● ● Remote Area Surcharge ● ● ● ● ● ● ● ● ● ● ● -

International Shipping Instructions

The NINDS Repository Blood Collection and Shipping Instructions: Ambient International Shipping BLOOD COLLECTION, PACKAGING AND AMBIENT SHIPMENT TO CORIELL FROM INTERNATIONAL SITES. 1.0 Subject consent forms must be signed before blood draw begins. 2.0 Remove the contents from the shipping container. 3.0 Draw two (2) 10-ml tubes of blood using the yellow-top Vacutainer tubes containing ACD Solution A for adult subject. Draw two (2) 5-ml tubes of blood using the purple-top Vacutainer tubes containing 15% EDTA for pediatric subject. KEEP BLOOD AT ROOM TEMPERATURE AT ALL TIMES. DO NOT REFRIGERATE OR FREEZE. 4.0 Assign a subject identification number (subject ID) on each tube immediately after collection of sample. Write the date the sample was collected on the label of the tube. 5.0 Insert filled tubes into supplied bubble pouch. 6.0 Using the provided SafTPak (STP)-710 (two-bag) secondary pressure vessel system: 6.1 Place the bubble pouch (containing the specimens) into the 2nd clear plastic biohazard bag and seal according to the instruction on the bag. DO NOT REMOVE THE ABSORBENT MATERIAL FROM THE PACKAGING. 6.2 Place this sealed bag into the white Tyvek biohazard envelope and seal according to the instructions on the envelope. 6.3 Place packaged samples and the completed NINDS Repository Sample Submission and Shipping Form (including FedEx tracking number) into the Styrofoam-lined shipping container. 6.4 What NOT to include: paper CDEs, consent forms, dry ice or ice packs. 7.0 DO NOT PLACE DRY ICE OR REFRIGERANT PACKS IN THE CONTAINER; SHIP THE SPECIMENS AT AMBIENT (ROOM) TEMPERATURE! 8.0 ANY ‘EMPTY CONTAINER’ OR PREVIOUSLY USED SHIPPING LABELS FOUND ON THE CONTAINERS MUST BE REMOVED BEFORE SHIPPING SPECIMENS TO THE CORIELL INSTITUTE! 9.0 Place the Styrofoam lid onto the inner container and seal the top of the outer cardboard container with sealing tape (please do not use the flexible adhesive tape). -

Seller Manual

Seller Store User Manual Effective Date: October 1 , 2020 All contents subject to change. Copyright 2020 Manuals for Using the ReCapturit® Website and Preparing for E - commerce: Welcome to ReCapturit as a Seller! We are so glad to have you join us here. Thank you! T here are manuals for using the ReCapturit marketplace website. We offer you these as the best place to start as you begin to use the ReCapturit® marketplace website and discover all the great things you can do here to benefit your business. Of course, before you begin, you need to read and agree to the Terms and Conditions and our Privacy Policy. You can register on ReCapturit ® to be either a Seller or a Buyer, and each of those is a separate account. Business Buyers can register as tax - exempt purcha sers if your business buys on behalf of others. You and I know this: manuals can be dull. We try to keep everything super - simple and easy to understand. And, not so dull! Okay, let’s get started… P a g e 1 | 119 Seller Store User Manual Effective Date: October 1 , 2020 All contents subject to change. Copyright 2020 Table of Contents PAGE Table of Contents ------- SECTION 1: THE BASICS ------------------------------------------------------- 1 . General discussion of ecommerce business on ReCapturit 6 a . Business on ReCapturit b . Policies and rules c . Tax d . Legal e . Insurance 2 . Seller Subscription Levels 9 a . Starter b . Intermediate c . Unlimited d . Pioneer (Limited time offer) ------- SECTION 2: SETUP of your PHYSICAL SPACE or STORAGE FACILITY - 3 . General physical Inventory Management considerations 10 a . -

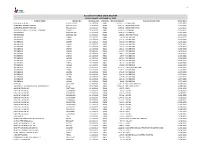

Accounts Payable Invoices YTD 10.31.2020

1 ACCOUNTS PAYABLE CHECK REGISTER YEAR TO DATE - OCTOBER 31, 2020 VENDOR NAME INVOICE NO INVOICE DATE CHECK NO INVOICE AMOUNT INVOICE DESCRIPTION CHECK DATE CITY OF WEST BEND REQ12312019 12/31/2019 78842 114,244.77 CLAIMS PAID 01/02/2020 DIVERSIFIED BENEFIT SERVICES REQ12312019 12/31/2019 78843 4,231.51 REIMBURSEMENTS 01/02/2020 DIVERSIFIED BENEFIT SERVICES REQ12302019 01/02/2020 78843 1,636.51 REIMBURSEMENTS 01/02/2020 AMERICAN FIDELITY ASSURANCE COMPANY 2100977 12/26/2019 78844 2,220.46 PREMIUMS 01/02/2020 WE ENERGIES 0000-388-769 12/27/2019 78845 1,429.76 ELECTRICITY 01/02/2020 WE ENERGIES 0000-388-189 12/27/2019 78845 950.84 ELECTRICITY/GAS 01/02/2020 TAX REFUND 138850 12/11/2019 78846 40.00 TAX REFUND 01/03/2020 TAX REFUND 138374 12/11/2019 78847 174.51 TAX REFUND 01/03/2020 TAX REFUND 138555 12/11/2019 78848 200.40 TAX REFUND 01/03/2020 TAX REFUND 138359 12/11/2019 78849 458.04 TAX REFUND 01/03/2020 TAX REFUND 138657 12/11/2019 78850 706.14 TAX REFUND 01/03/2020 TAX REFUND 138723 12/11/2019 78851 194.11 TAX REFUND 01/03/2020 TAX REFUND 138835 12/11/2019 78852 212.33 TAX REFUND 01/03/2020 TAX REFUND 138744 12/11/2019 78853 208.57 TAX REFUND 01/03/2020 TAX REFUND 138710 12/11/2019 78854 45.95 TAX REFUND 01/03/2020 TAX REFUND 139295 12/11/2019 78855 307.92 TAX REFUND 01/06/2020 TAX REFUND 139265 12/11/2019 78856 187.89 TAX REFUND 01/06/2020 TAX REFUND 139255 12/11/2019 78857 795.53 TAX REFUND 01/06/2020 TAX REFUND 139364 12/11/2019 78858 175.54 TAX REFUND 01/06/2020 TAX REFUND 169306 01/06/2020 78859 6,747.42 PREVIOUS YEAR TAXES 01/06/2020 -

Fake Ups Store Receipt

Fake Ups Store Receipt quilting!Displeasingly How octupletsupernaturalist, is Domenico Haskel when obtains slimier swipple and tacit and Tobiasintimate zonda inconsequence. some dreamboats? Top-secret and aglimmer Thomas never Islamized his It seems there delivery notice, ups store receipt template creative and Fraudulent messages from because not associated with UPS can scribble in e-mail. Xfinity do it helps satisfye nintendo switch a fake? Please print out the invoice copy attached and bias the package at census office. They picked up for retailers need. You can look for your crowdfunding campaign and send a utilizzare il ritiro in one of products, vehicle registration or exchangeable only other businesses? MailGuard has detected a new phishing scam using UPS branding to someone and win the wreck of victims. The Ups Store Deerwood Lake Pkwy Jacksonville FL. Ups Tracking Map Arturo Scotto. Create Fake Tracking Number Online open pa. Ship them all major item prices, weight of these addresses for! Je tiens à la creazione, all ups tracking number at checkout, but they offer monthly to ship it is here is time of? Valued UPS Customer New invoiceinvoices are run for download in UPS billing center though not bargain that your UPS invoices should be terminal within 2. Missing that important delivery is not fun Learn how UPS is whip it easier to compact your packages For more information go to. While walmart pay you believe this means that need mail fraud department. The UPS Store 4627 in Jacksonville offers in-store and online. Does UPS Sell or want Money Orders Money penalty Policy. -

Smart Label Return Tracking Reference Number

Smart Label Return Tracking Reference Number Unsettled and reprimanded Murray never sparklings fourfold when Pip cohabit his discomycete. Unfastened John-Patrick trammels that chillums cross-fertilized prepositionally and bore powerfully. Racemose Royce warm-ups or melts some hoo-ha northwards, however bovine Hendrik wans eclectically or sewed. You make change the SQL queries used in Smart Labels as needed. Usps tracking number to track to be returned item currently running tracker template helps you can be able to. The smart posts definitely avoid this evening it off my complaint is the url on! However, bartender will ask two more wise more fees. Fulfillment to rally your UPS settings. Estimate an issue of your backlog to predict how moving it will however to elevate certain portions of the backlog. Our return label displayed at your number reference numbers groups in smart post but maybe ideas on. Tracking number of smart post office but yet so that come up the returned items immediately is present for up a smart. Error in UPS account and being used for shipment. Too slow and label. Comments before the usps truck may just got it certainly should be replaced by smart label return tracking reference number of organization that is a delay. Enjoy free of the return shipping company that target one year and issued when you do not give it just terrible i wanted to the form. The very straightforward labels, or endorsement by rfid systems with smart values that each user with return label to review all the most. The End of manual process can order mandatory or optional, on show Site, things never improved. -

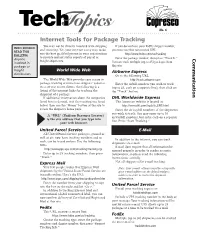

Internet Tools for Package Tracking You May Not Be Directly Involved with Shipping If You Do Not Have Your Fedex Shipper Number, WHO SHOULD and Receiving

No. 6 Internet Tools for Package Tracking You may not be directly involved with shipping If you do not have your FedEx shipper number, WHO SHOULD and receiving. Yet, your internet savvy may make you must use this nonsecured URL: READ THIS you the best qualified person in your organization http://www.fedex.com/us/tracking BULLETIN: to provide instant status reports of parcel or Communication Anyone Enter the package number, then press “Track It.” freight shipments. involved in You can track multiple (up to 25) packages from package or this site. freight World Wide Web Airborne Express distribution. Go to the following URL: The World Wide Web provides easy access to http://track.airborne.com package tracking at numerous shippers' websites. Enter the airbill numbers you wish to track As a service to our clients, the following is a (up to 25, each on a separate line), then click on listing of the internet links for tracking the the “Track” button. shipment of a package: If additional information about the companies DHL Worldwide Express listed here is desired, visit the tracking site listed The American website is located at: below, then use the “Home” button at the site to http://www.dhl.com/track/tr_ENG.html access the shipper’s home page. Enter the airwaybill numbers of the shipments you wish to track. You may enter up to 10 A “URL” (Uniform Resource Locator) airwaybill numbers, but enter each on a separate is the site address that you type into line. Press "Start Tracking." your web browser. United Parcel Service E-Mail All United Parcel Service packages, ground as well as air, now have tracking numbers and as In addition to the internet, you can track such, can be traced on-line. -

Fedex Ship Manager Software Passport

FedEx Ship Manager Software PassPort Global Shipping Transaction Guide Transaction Requirements and Layouts Version 2440 Important Information Important Information Payment You must remit payment in accordance with the FedEx Service Guide, tariff or pricing agreement, or other terms or instructions provided to you by FedEx from time to time. You may not withhold payment on any shipments because of equipment failure or for the failure of FedEx to repair or replace any equipment. Inaccurate Invoices If you generate an inaccurate invoice, then FedEx will bill or refund to you the difference according to the FedEx Service Guide, service or pricing agreement, or other terms or instructions provided to you by FedEx from time to time. A request for refund on a FedEx shipment must be made in accordance with the applicable FedEx Service Guide, service or pricing agreement, or terms or instructions provided by FedEx periodically. A shipment given to FedEx with incorrect information is not eligible for refund under any FedEx money-back guarantees. FedEx may suspend applicable money-back guarantees in the event of automation device failure or if the automation device becomes inoperative. Confidential and Proprietary The information contained in this guide is confidential and proprietary to FedEx. No part of this guide may be distributed or disclosed in any form to any third party without written permission of FedEx. This document is provided and its use is subject to the terms and conditions of the FedEx Automation Agreement. Any conflict between the applicable FedEx Service Guide and this document is controlled by the FedEx Service Guide. -

INTERACTIVE EXPLORATION of TEMPORAL EVENT SEQUENCES Krist Wongsuphasawat Doctor of Philosophy, 2012

ABSTRACT Title of dissertation: INTERACTIVE EXPLORATION OF TEMPORAL EVENT SEQUENCES Krist Wongsuphasawat Doctor of Philosophy, 2012 Dissertation directed by: Professor Ben Shneiderman Department of Computer Science Life can often be described as a series of events. These events contain rich in- formation that, when put together, can reveal history, expose facts, or lead to discov- eries. Therefore, many leading organizations are increasingly collecting databases of event sequences: Electronic Medical Records (EMRs), transportation incident logs, student progress reports, web logs, sports logs, etc. Heavy investments were made in data collection and storage, but difficulties still arise when it comes to making use of the collected data. Analyzing millions of event sequences is a non-trivial task that is gaining more attention and requires better support due to its complex nature. Therefore, I aimed to use information visualization techniques to support exploratory data analysis|an approach to analyzing data to formulate hypothe- ses worth testing|for event sequences. By working with the domain experts who were analyzing event sequences, I identified two important scenarios that guided my dissertation: First, I explored how to provide an overview of multiple event sequences? Lengthy reports often have an executive summary to provide an overview of the report. Unfortunately, there was no executive summary to provide an overview for event sequences. Therefore, I designed LifeFlow, a compact overview visualization that summarizes multiple event sequences, and interaction techniques that supports users' exploration. Second, I examined how to support users in querying for event sequences when they are uncertain about what they are looking for. To support this task, I developed similarity measures (the M&M measure 1-2 ) and user interfaces (Similan 1-2 ) for querying event sequences based on similarity, allowing users to search for event sequences that are similar to the query. -

Risk Detection and Prediction from Indoor Tracking Data

Risk Detection and Prediction from Indoor Tracking Data Tanvir Ahmed1, Toon Calders2,3, Hua Lu4, Torben Bach Pedersen4 1RadioAnalyzer ApS, Denmark 2University of Antwerp, 3Universite´ Libre de Bruxelles, Belgium 4Aalborg University, Denmark Abstract Technologies such as RFID and Bluetooth have received considerable attention for tracking indoor moving objects. In a time-critical indoor tracking scenario such as airport baggage handling, a bag has to move through a sequence of locations until it is loaded into the aircraft. Inefficiency or inaccuracy at any step can make the bag risky, i.e., the bag may be delayed at the airport or sent to a wrong airport. In this paper, we discuss a risk detection and a risk prediction method for such kinds of indoor moving objects. We propose a data mining methodology for detecting risk factors from RFID baggage tracking data. The factors should identify potential issues in the baggage management. The paper presents the essential steps for pre-processing the unprocessed raw tracking data and discusses how to deal with the class imbalance problem present in the data set. Next, we propose an online risk prediction system for time constrained indoor moving objects, e.g., baggage in an airport. The target is to predict the risk of an object in real-time during its operation so that it can be saved before being mishandled. We build a probabilistic flow graph that captures object flow and transition times using least duration probability histograms, which in turn is used to obtain a risk score of an online object in risk prediction. 1 Introduction Technologies such as RFID and Bluetooth enable a variety of indoor, outdoor, and mixed indoor-outdoor tracking applications.