66 in Thermodynamics, the Total Energy E of Our System

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

On Entropy, Information, and Conservation of Information

Entropy. Article. On Entropy, Information, and Conservation of Information. Yunus A. Çengel, Department of Mechanical Engineering, University of Nevada, Reno, NV 89557, USA; [email protected] Abstract: The term entropy is used in different meanings in different contexts, sometimes in contradictory ways, resulting in misunderstandings and confusion. The root cause of the problem is the close resemblance of the defining mathematical expressions of entropy in statistical thermodynamics and information in the communications field, also called entropy, differing only by a constant factor with the unit ‘J/K’ in thermodynamics and ‘bits’ in the information theory. The thermodynamic property entropy is closely associated with the physical quantities of thermal energy and temperature, while the entropy used in the communications field is a mathematical abstraction based on probabilities of messages. The terms information and entropy are often used interchangeably in several branches of sciences. This practice gives rise to the phrase conservation of entropy in the sense of conservation of information, which is in contradiction to the fundamental increase of entropy principle in thermodynamics as an expression of the second law. The aim of this paper is to clarify matters and eliminate confusion by putting things into their rightful places within their domains. The notion of conservation of information is also put into a proper perspective. Keywords: entropy; information; conservation of information; creation of information; destruction of information; Boltzmann relation. Citation: Çengel, Y.A. On Entropy, Information, and Conservation of Information. Entropy 2021, 23, 779. 1. Introduction: One needs to be cautious when dealing with information since it is defined differently -

ENERGY, ENTROPY, and INFORMATION Jean Thoma June

ENERGY, ENTROPY, AND INFORMATION Jean Thoma June 1977 Research Memoranda are interim reports on research being conducted by the International Institute for Applied Systems Analysis, and as such receive only limited scientific review. Views or opinions contained herein do not necessarily represent those of the Institute or of the National Member Organizations supporting the Institute. PREFACE This Research Memorandum contains the work done during the stay of Professor Dr.Sc. Jean Thoma, Zug, Switzerland, at IIASA in November 1976. It is based on extensive discussions with Professor Häfele and other members of the Energy Program. Although the content of this report is not yet very uniform because of the different starting points on the subject under consideration, its publication is considered a necessary step in fostering the related discussion at IIASA evolving around the problem of energy demand. ABSTRACT Thermodynamical considerations of energy and entropy are being pursued in order to arrive at a general starting point for relating entropy, negentropy, and information. Thus one hopes to ultimately arrive at a common denominator for quantities of a more general nature, including economic parameters. The report closes with the description of various heating applications and related efficiencies. Such considerations are important in order to understand in greater depth the nature and composition of energy demand. This may be highlighted by the observation that it is, of course, not the energy that is consumed or demanded but the information that goes along with it. TABLE OF CONTENTS 1. Introduction; 2. Various Aspects of Entropy; 2.1 Phenomenological Entropy -

Practice Problems from Chapter 1-3 Problem 1 One Mole of a Monatomic Ideal Gas Goes Through a Quasistatic Three-Stage Cycle (1-2, 2-3, 3-1) Shown in the Figure

Practice Problems from Chapter 1-3. Problem 1. One mole of a monatomic ideal gas goes through a quasistatic three-stage cycle (1-2, 2-3, 3-1) shown in the Figure (a V versus T diagram with volumes V1, V2 and temperatures T1, T2 marked). T1 and T2 are given. (a) (10) Calculate the work done by the gas. Is it positive or negative? (b) (20) Using two methods (Sackur-Tetrode eq. and dQ/T), calculate the entropy change for each stage and for the whole cycle, $\Delta S_{total}$. Did you get the expected result for $\Delta S_{total}$? Explain. (c) (5) What is the heat capacity (in units R) for each stage?

Problem 1 (cont.) (a) 1 – 2: $V \propto T \rightarrow P = \text{const}$ (isobaric process), $\delta W_{12} = P(V_2 - V_1) = R(T_2 - T_1) > 0$. 2 – 3: $V = \text{const}$ (isochoric process), $\delta W_{23} = 0$. 3 – 1: $T = \text{const}$ (isothermal process), $\delta W_{31} = \int_{V_2}^{V_1} P\,dV = R T_1 \int_{V_2}^{V_1} \frac{dV}{V} = R T_1 \ln\frac{V_1}{V_2} = R T_1 \ln\frac{T_1}{T_2} < 0$. Total: $\delta W_{total} = \delta W_{12} + \delta W_{31} = R(T_2 - T_1) + R T_1 \ln\frac{T_1}{T_2} = R T_1\left[\frac{T_2}{T_1} - 1 - \ln\frac{T_2}{T_1}\right] > 0$.

Problem 1 (cont.) (b) Sackur-Tetrode equation: $S(U, V, N) = R \ln\frac{V}{N} + \frac{3}{2} R \ln\frac{U}{N} + k_B \ln f(N, m)$, so that $\Delta S = R \ln\frac{V_f}{V_i} + \frac{3}{2} R \ln\frac{T_f}{T_i} = R\left(\ln\frac{V_f}{V_i} + \frac{3}{2}\ln\frac{T_f}{T_i}\right)$. 1 – 2: $V \propto T \rightarrow P = \text{const}$ (isobaric process), $\Delta S_{12} = \frac{5}{2} R \ln\frac{T_2}{T_1}$. 2 – 3: $V = \text{const}$ (isochoric process), $\Delta S_{23} = \frac{3}{2} R \ln\frac{T_1}{T_2} = -\frac{3}{2} R \ln\frac{T_2}{T_1}$. 3 – 1: $T = \text{const}$ (isothermal process), $\Delta S_{31} = R \ln\frac{V_1}{V_2} = -R \ln\frac{T_2}{T_1}$. For the cycle: $\Delta S_{cycle} = \frac{5}{2} R \ln\frac{T_2}{T_1} - R \ln\frac{T_2}{T_1} - \frac{3}{2} R \ln\frac{T_2}{T_1} = 0$, as it should be for a quasistatic cyclic process (quasistatic – reversible), because S is a state function. -
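
The stage-by-stage results in this excerpt can be checked numerically. The following is a minimal Python sketch, not part of the original problem set: the function name `cycle_quantities` and the temperatures T1 = 300 K and T2 = 600 K are illustrative assumptions, and the formulas are the per-stage expressions for one mole of a monatomic ideal gas quoted in the excerpt.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol K)


def cycle_quantities(t1, t2):
    """Per-stage work and entropy change for one mole of monatomic ideal gas.

    Stages follow the excerpt: 1-2 isobaric, 2-3 isochoric, 3-1 isothermal.
    (Hypothetical helper, written only for this illustration.)
    """
    log_ratio = math.log(t2 / t1)
    work = {
        "1-2": R * (t2 - t1),        # isobaric: P (V2 - V1) = R (T2 - T1)
        "2-3": 0.0,                  # isochoric: no volume change
        "3-1": -R * t1 * log_ratio,  # isothermal: R T1 ln(T1 / T2)
    }
    entropy = {
        "1-2": 2.5 * R * log_ratio,   # (5/2) R ln(T2 / T1)
        "2-3": -1.5 * R * log_ratio,  # -(3/2) R ln(T2 / T1)
        "3-1": -R * log_ratio,        # -R ln(T2 / T1)
    }
    return work, entropy


# Assumed example temperatures in kelvin.
work, entropy = cycle_quantities(300.0, 600.0)
total_work = sum(work.values())
total_entropy = sum(entropy.values())
print(f"net work by the gas: {total_work:.1f} J")
print(f"entropy change over the cycle: {total_entropy:.2e} J/K")
```

With these assumed temperatures the net work comes out positive and the entropy changes cancel to within floating-point rounding, which is what the state-function argument predicts.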

Lecture 2 the First Law of Thermodynamics (Ch.1)

Lecture 2 The First Law of Thermodynamics (Ch.1) Outline: 1. Internal Energy, Work, Heating 2. Energy Conservation – the First Law 3. Quasi-static processes 4. Enthalpy 5. Heat Capacity. Internal Energy: The internal energy of a system of particles, U, is the sum of the kinetic energy in the reference frame in which the center of mass is at rest and the potential energy arising from the forces of the particles on each other: U = kinetic + potential. Difference between the total energy and the internal energy? The internal energy is a state function – it depends only on the values of macroparameters (the state of a system), not on the method of preparation of this state (the “path” in the macroparameter space is irrelevant). In equilibrium [ f (P,V,T)=0 ]: U = U (V, T). U depends on the kinetic energy of particles in a system and an average inter-particle distance ($\sim V^{-1/3}$) – interactions. For an ideal gas (no interactions): U = U (T) - “pure” kinetic. Internal Energy of an Ideal Gas: The internal energy of an ideal gas with f degrees of freedom: $U = \frac{f}{2} N k_B T$, with f ⇒ 3 (monatomic), 5 (diatomic), 6 (polyatomic) (here we consider only trans.+rotat. degrees of freedom, and neglect the vibrational ones that can be excited at very high temperatures). How does the internal energy of air in this (not-air-tight) room change with T if the external P = const? $U = \frac{f}{2} N_{\text{in room}} k_B T = \left[ N_{\text{in room}} = \frac{PV}{k_B T} \right] = \frac{f}{2} PV$ - does not change at all, an increase of the kinetic energy of individual molecules with T is compensated by a decrease of their number. -
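
The room example in this excerpt can be sketched in a few lines of Python. This is an illustration only: the function name `internal_energy_open_room`, the room volume of 50 m³, the pressure of 101325 Pa, and the choice f = 5 (air treated as a diatomic ideal gas) are assumptions, not values from the lecture.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def internal_energy_open_room(pressure, volume, temperature, f=5):
    """Internal energy of the ideal gas inside a room that is not air-tight.

    The number of molecules adjusts so that P V = N k_B T holds at the fixed
    external pressure. (Hypothetical helper, written only for this sketch.)
    """
    n_molecules = pressure * volume / (K_B * temperature)
    return 0.5 * f * n_molecules * K_B * temperature


P = 101325.0  # Pa, assumed external pressure
V = 50.0      # m^3, assumed room volume

u_cold = internal_energy_open_room(P, V, 280.0)
u_warm = internal_energy_open_room(P, V, 300.0)
print(f"U at 280 K: {u_cold:.4e} J")
print(f"U at 300 K: {u_warm:.4e} J")
print("same within rounding:", math.isclose(u_cold, u_warm, rel_tol=1e-12))
```

Both temperatures give the same energy, (f/2) P V, because the molecule count falls as 1/T while the energy per molecule rises as T.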

Lecture 4: 09.16.05 Temperature, Heat, and Entropy

3.012 Fundamentals of Materials Science, Fall 2005. Lecture 4: 09.16.05 Temperature, heat, and entropy. Today: LAST TIME: State functions; Path dependent variables: heat and work. DEFINING TEMPERATURE: The zeroth law of thermodynamics; The absolute temperature scale. CONSEQUENCES OF THE RELATION BETWEEN TEMPERATURE, HEAT, AND ENTROPY: HEAT CAPACITY: The difference between heat and temperature; Defining heat capacity. -

Chapter 3. Second and Third Law of Thermodynamics

Chapter 3. Second and third law of thermodynamics. Important Concepts: • Entropy (S) – definitions • Entropy changes in reversible and irreversible processes • Entropy of mixing of ideal gases • 2nd law of thermodynamics • 3rd law of thermodynamics • Free energy • Maxwell relations • Dependence of free energy on P, V, T • Thermodynamic functions of mixtures • Partial molar quantities and chemical potential. Review: Entropy; Gibbs Free Energy; Law of Corresponding States (ch 1 notes): reduced pressure, temperatures, volumes. Math: Numerical integration by computer (Trapezoidal integration https://en.wikipedia.org/wiki/Trapezoidal_rule); Properties of partial differential equations; Rules for inequalities. Major Concept Review: • Adiabats vs. isotherms: $p_1 V_1 = p_2 V_2$ (isotherm); $p_1 V_1^{\gamma} = p_2 V_2^{\gamma}$ with $\gamma = C_p/C_V$, and $V_1 T_1^{c} = V_2 T_2^{c}$ with $c = C_{V,m}/R$ (adiabat) • Sign convention for work and heat: w done on system, q supplied to system: +; w done by system, q removed from system: - • Joule-Thomson expansion ($\Delta H = 0$); Joule-Thomson coefficient, inversion temperature • State variables depend on final & initial state; not path. • Reversible change occurs in series of equilibrium states • Adiabatic q = 0; Isothermal $\Delta T = 0$ • Equations of state for enthalpy, H, and internal energy, U • Formation reaction; enthalpies of reaction, Hess’s Law; other changes: $\Delta_{rxn} H = \sum_i \nu_i \Delta_f H_i$; $\Delta_{rxn} H(T) = \Delta_{rxn} H(T_{ref}) + \int_{T_{ref}}^{T} \Delta_{rxn} C_p \, dT$ • Calorimetry. Spontaneous and Nonspontaneous Changes. First Law: when one form of energy is converted to another, the total energy in universe is conserved. • Does not give any other restriction on a process • But many processes have a natural direction. Examples: • gas expands into a vacuum; not the reverse • can burn paper; can't unburn paper • heat never flows spontaneously from cold to hot. These changes are called nonspontaneous changes. -

HEAT and TEMPERATURE Heat Is a Type of ENERGY. When Absorbed

HEAT AND TEMPERATURE Heat is a type of ENERGY. When absorbed by a substance, heat causes inter-particle bonds to weaken and break, which leads to a change of state (solid to liquid, for example). Heat causing a phase change is NOT sufficient to cause an increase in temperature. Heat also causes an increase of kinetic energy (motion, friction) of the particles in a substance. This WILL cause an increase in TEMPERATURE. Temperature is NOT energy, only a measure of KINETIC ENERGY. The reason why there is no change in temperature at a phase change is that the substance is using the heat only to change the way the particles interact (“stick together”). There is no increase in the particle motion and hence no rise in temperature. THERMAL ENERGY is one type of INTERNAL ENERGY possessed by an object. It is the KINETIC ENERGY component of the object’s internal energy. When thermal energy is transferred from a hot to a cold body, the term HEAT is used to describe the transferred energy. The hot body will decrease in temperature and hence in thermal energy. The cold body will increase in temperature and hence in thermal energy. Temperature Scales: The K scale is the absolute temperature scale. The lowest K temperature, 0 K, is absolute zero, the temperature at which an object possesses no thermal energy. The Celsius scale is based upon the melting point and boiling point of water at 1 atm pressure (0 °C and 100 °C). K = °C + 273.15. UNITS OF HEAT ENERGY The unit of heat energy we will use in this lesson is called the JOULE (J). -

Ffontiau Cymraeg

This publication is available in other languages and formats on request. Mae'r cyhoeddiad hwn ar gael mewn ieithoedd a fformatau eraill ar gais. [email protected] www.caerphilly.gov.uk/equalities How to type Accented Characters This guidance document has been produced to provide practical help when typing letters or circulars, or when designing posters or flyers so that getting accents on various letters when typing is made easier. The guide should be used alongside the Council’s Guidance on Equalities in Designing and Printing. Please note this is for PCs only and will not work on Macs. Firstly, on your keyboard make sure the Num Lock is switched on, or the codes shown in this document won’t work (this button is found above the numeric keypad on the right of your keyboard). By pressing the ALT key (to the left of the space bar), holding it down and then entering a certain sequence of numbers on the numeric keypad, it's very easy to get almost any accented character you want. For example, to get the letter “ô”, press and hold the ALT key, type in the code 0 2 4 4, then release the ALT key. The number sequences shown from page 3 onwards work in most fonts in order to get an accent over “a, e, i, o, u”, the vowels in the English alphabet. In other languages, for example in French, the letter "c" can be accented and in Spanish, "n" can be accented too. Many other languages have accents on consonants as well as vowels. -

Form IT-2658-E:12/19:Certificate of Exemption from Partnership Or New

Department of Taxation and Finance. IT-2658-E (12/19). Certificate of Exemption from Partnership or New York S Corporation Estimated Tax Paid on Behalf of Nonresident Individual Partners and Shareholders. Do not send this certificate to the Tax Department (see instructions below). Use this certificate for tax years 2020 and 2021; it will expire on February 1, 2022. Fields: First name and middle initial; Last name; Social Security number; Mailing address (number and street or PO box); Telephone number; City, village, or post office; State; ZIP code. I certify that I will comply with the New York State estimated tax provisions and tax return filing requirements, to the extent that they apply to me, for tax years 2020 and 2021 (see instructions). Signature of nonresident individual partner or shareholder; Date. Instructions. General information: Tax Law section 658(c)(4) requires the following entities that have income derived from New York sources to make estimated personal income tax payments on behalf of partners or shareholders who are nonresident individuals: • New York S corporations; • partnerships (other than publicly traded partnerships as defined in Internal Revenue Code section 7704); and [...] If you do not meet either of the above exceptions, you may still claim exemption from this estimated tax provision by filing Form IT-2658-E. You qualify to claim exemption and file Form IT-2658-E by certifying that you will comply in your individual capacity with all the New York State personal income tax and MCTMT estimated tax and tax return filing requirements, to the extent that they apply to you, for the years covered by this certificate. -

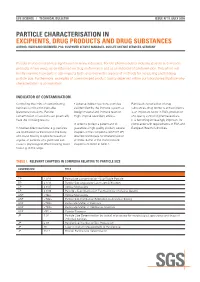

Particle Characterisation In

LIFE SCIENCE I TECHNICAL BULLETIN ISSUE N°11 / JULY 2008. PARTICLE CHARACTERISATION IN EXCIPIENTS, DRUG PRODUCTS AND DRUG SUBSTANCES. AUTHOR: HILDEGARD BRÜMMER, PhD, CUSTOMER SERVICE MANAGER, SGS LIFE SCIENCE SERVICES, GERMANY. Particle characterization has significance in many industries. For the pharmaceutical industry, particle size impacts products in two ways: as an influence on drug performance and as an indicator of contamination. This article will briefly examine how particle size impacts both, and review the arsenal of methods for measuring and tracking particle size. Furthermore, examples of compromised product quality observed within our laboratories illustrate why characterization is so important. Particle characterisation of drug substances, drug products and excipients is an important factor in R&D, production and quality control of pharmaceuticals. It is becoming increasingly important for compliance with requirements of FDA and European Health Authorities. INDICATOR OF CONTAMINATION: Controlling the limits of contaminating particles is critical for injectable (parenteral) solutions. Particle contamination of solutions can potentially have the following results: • Adverse direct reactions: e.g. particles are distributed via the blood in the body and cause toxicity to specific tissues or organs, or particles of a given size can cause a physiological effect blocking blood flow e.g. in the lungs. • Adverse indirect reactions: particles are identified by the immune system as foreign material and immune reaction might impose secondary effects. In order to protect a patient and to guarantee a high quality product, several chapters in the compendia (USP, EP, JP) describe techniques for characterisation of limits. Some of the most relevant chapters are listed in Table 1. -

Left Ventricular Diastolic Dysfunction in Ischemic Stroke: Functional and Vascular Outcomes

Journal of Stroke 2016;18(2):195-202 http://dx.doi.org/10.5853/jos.2015.01669 Original Article. Left Ventricular Diastolic Dysfunction in Ischemic Stroke: Functional and Vascular Outcomes. Hong-Kyun Park,a* Beom Joon Kim,a* Chang-Hwan Yoon,b Mi Hwa Yang,a Moon-Ku Han,a Hee-Joon Baea. aDepartment of Neurology and Cerebrovascular Center, Seoul National University Bundang Hospital, Seongnam, Korea; bDivision of Cardiology, Department of Internal Medicine, Seoul National University Bundang Hospital, Seongnam, Korea. *These authors contributed equally to this [...] Correspondence: Moon-Ku Han, Department of Neurology and Cerebrovascular Center, Seoul National University Bundang Hospital, 82 Gumi-ro 173beon-gil, Bundang-gu, Seongnam 13620, Korea. Tel: +82-31-787-7464, Fax: +82-31-787-4059, E-mail: [email protected] Received: December 1, 2015. Revised: March 14, 2016. Accepted: March 21, 2016. Background and Purpose: Left ventricular (LV) diastolic dysfunction, developed in relation to myocardial dysfunction and remodeling, is documented in 15%-25% of the population. However, its role in functional recovery and recurrent vascular events after acute ischemic stroke has not been thoroughly investigated. Methods: In this retrospective observational study, we identified 2,827 ischemic stroke cases with adequate echocardiographic evaluations to assess LV diastolic dysfunction within 1 month after the index stroke. The peak transmitral filling velocity/mean mitral annular velocity during early diastole (E/e’) was used to estimate LV diastolic dysfunction. We divided patients into 3 groups according to E/e’ as follows: <8, 8-15, and ≥15. Recurrent vascular events and functional recovery were prospectively collected at 3 months and 1 year. Results: Among included patients, E/e’ was 10.6±6.4: E/e’ <8 in 993 (35%), 8-15 in 1,444 (51%), and ≥15 in 378 (13%) cases. -

Chapter 3 Bose-Einstein Condensation of an Ideal

Chapter 3 Bose-Einstein Condensation of An Ideal Gas. An ideal gas consisting of non-interacting Bose particles is a fictitious system since every realistic Bose gas shows some level of particle-particle interaction. Nevertheless, such a mathematical model provides the simplest example for the realization of Bose-Einstein condensation. This simple model, first studied by A. Einstein [1], correctly describes important basic properties of actual non-ideal (interacting) Bose gas. In particular, such basic concepts as BEC critical temperature $T_c$ (or critical particle density $n_c$), condensate fraction $N_0/N$ and the dimensionality issue will be obtained. 3.1 The ideal Bose gas in the canonical and grand canonical ensemble. Suppose an ideal gas of non-interacting particles with fixed particle number $N$ is trapped in a box with a volume $V$ and at equilibrium temperature $T$. We assume a particle system somehow establishes an equilibrium temperature in spite of the absence of interaction. Such a system can be characterized by the thermodynamic partition function of canonical ensemble $Z = \sum_R e^{-\beta E_R}$ (3.1), where $R$ stands for a macroscopic state of the gas and is uniquely specified by the occupation number $n_i$ of each single particle state $i$: $\{n_0, n_1, \cdots\}$. $\beta = 1/k_B T$ is a temperature parameter. Then, the total energy of a macroscopic state $R$ is given by only the kinetic energy: $E_R = \sum_i \varepsilon_i n_i$ (3.2), where $\varepsilon_i$ is the eigen-energy of the single particle state $i$ and the occupation number $n_i$ satisfies the normalization condition $N = \sum_i n_i$ (3.3). The probability