Robin Hood Hashing

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

SAHA: a String Adaptive Hash Table for Analytical Databases

applied sciences Article SAHA: A String Adaptive Hash Table for Analytical Databases Tianqi Zheng 1,2,* , Zhibin Zhang 1 and Xueqi Cheng 1,2 1 CAS Key Laboratory of Network Data Science and Technology, Institute of Computing Technology, Chinese Academy of Sciences, Beijing 100190, China; [email protected] (Z.Z.); [email protected] (X.C.) 2 University of Chinese Academy of Sciences, Beijing 100049, China * Correspondence: [email protected] Received: 3 February 2020; Accepted: 9 March 2020; Published: 11 March 2020 Abstract: Hash tables are the fundamental data structure for analytical database workloads, such as aggregation, joining, set filtering and records deduplication. The performance aspects of hash tables differ drastically with respect to what kind of data are being processed or how many inserts, lookups and deletes are constructed. In this paper, we address some common use cases of hash tables: aggregating and joining over arbitrary string data. We designed a new hash table, SAHA, which is tightly integrated with modern analytical databases and optimized for string data with the following advantages: (1) it inlines short strings and saves hash values for long strings only; (2) it uses special memory loading techniques to do quick dispatching and hashing computations; and (3) it utilizes vectorized processing to batch hashing operations. Our evaluation results reveal that SAHA outperforms state-of-the-art hash tables by one to five times in analytical workloads, including Google’s SwissTable and Facebook’s F14Table. It has been merged into the ClickHouse database and shows promising results in production. Keywords: hash table; analytical database; string data 1. -

Chapter 5 Hashing

Introduction hashing performs basic operations, such as insertion, Chapter 5 deletion, and finds in average time Hashing 2 Hashing Hashing Functions a hash table is merely an of some fixed size let be the set of search keys hashing converts into locations in a hash hash functions map into the set of in the table hash table searching on the key becomes something like array lookup ideally, distributes over the slots of the hash table, to minimize collisions hashing is typically a many-to-one map: multiple keys are if we are hashing items, we want the number of items mapped to the same array index hashed to each location to be close to mapping multiple keys to the same position results in a example: Library of Congress Classification System ____________ that must be resolved hash function if we look at the first part of the call numbers two parts to hashing: (e.g., E470, PN1995) a hash function, which transforms keys into array indices collision resolution involves going to the stacks and a collision resolution procedure looking through the books almost all of CS is hashed to QA75 and QA76 (BAD) 3 4 Hashing Functions Hashing Functions suppose we are storing a set of nonnegative integers we can also use the hash function below for floating point given , we can obtain hash values between 0 and 1 with the numbers if we interpret the bits as an ______________ hash function two ways to do this in C, assuming long int and double when is divided by types have the same length fast operation, but we need to be careful when choosing first method uses -

Double Hashing

CSE 332: Data Structures & Parallelism Lecture 11:More Hashing Ruth Anderson Winter 2018 Today • Dictionaries – Hashing 1/31/2018 2 Hash Tables: Review • Aim for constant-time (i.e., O(1)) find, insert, and delete – “On average” under some reasonable assumptions • A hash table is an array of some fixed size hash table – But growable as we’ll see 0 client hash table library collision? collision E int table-index … resolution TableSize –1 1/31/2018 3 Hashing Choices 1. Choose a Hash function 2. Choose TableSize 3. Choose a Collision Resolution Strategy from these: – Separate Chaining – Open Addressing • Linear Probing • Quadratic Probing • Double Hashing • Other issues to consider: – Deletion? – What to do when the hash table gets “too full”? 1/31/2018 4 Open Addressing: Linear Probing • Why not use up the empty space in the table? 0 • Store directly in the array cell (no linked list) 1 • How to deal with collisions? 2 • If h(key) is already full, 3 – try (h(key) + 1) % TableSize. If full, 4 – try (h(key) + 2) % TableSize. If full, 5 – try (h(key) + 3) % TableSize. If full… 6 7 • Example: insert 38, 19, 8, 109, 10 8 38 9 1/31/2018 5 Open Addressing: Linear Probing • Another simple idea: If h(key) is already full, 0 / – try (h(key) + 1) % TableSize. If full, 1 / – try (h(key) + 2) % TableSize. If full, 2 / – try (h(key) + 3) % TableSize. If full… 3 / 4 / • Example: insert 38, 19, 8, 109, 10 5 / 6 / 7 / 8 38 9 19 1/31/2018 6 Open Addressing: Linear Probing • Another simple idea: If h(key) is already full, 0 8 – try (h(key) + 1) % TableSize. -

Chapter 5. Hash Tables

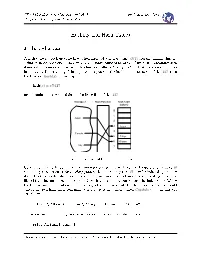

CSci 335 Software Design and Analysis 3 Prof. Stewart Weiss Chapter 5 Hashing and Hash Tables Hashing and Hash Tables 1 Introduction A hash table is a look-up table that, when designed well, has nearly O(1) average running time for a nd or insert operation. More precisely, a hash table is an array of xed size containing data items with unique keys, together with a function called a hash function that maps keys to indices in the table. For example, if the keys are integers and the hash table is an array of size 127, then the function hash(x), dened by hash(x) = x%127 maps numbers to their modulus in the nite eld of size 127. Key Space Hash Function Hash Table (values) 0 1 Japan 2 3 Rome 4 Canada 5 Tokyo 6 Ottawa Italy 7 Figure 1: A hash table for world capitals. Conceptually, a hash table is a much more general structure. It can be thought of as a table H containing a collection of (key, value) pairs with the property that H may be indexed by the key itself. In other words, whereas you usually reference an element of an array A by writing something like A[i], using an integer index value i, with a hash table, you replace the index value "i" by the key contained in location i in order to get the value associated with it. For example, we could conceptualize a hash table containing world capitals as a table named Capitals that contains the set of pairs (Italy, Rome), (Japan, Tokyo), (Canada, Ottawa) and so on, and conceptually (not actually) you could write a statement such as print Capitals[Italy] This work is licensed under the Creative Commons Attribution-ShareAlike 4.0 International License. -

Linear Probing Revisited: Tombstones Mark the Death of Primary Clustering

Linear Probing Revisited: Tombstones Mark the Death of Primary Clustering Michael A. Bender* Bradley C. Kuszmaul William Kuszmaul† Stony Brook Google Inc. MIT Abstract First introduced in 1954, the linear-probing hash table is among the oldest data structures in computer science, and thanks to its unrivaled data locality, linear probing continues to be one of the fastest hash tables in practice. It is widely believed and taught, however, that linear probing should never be used at high load factors; this is because of an effect known as primary clustering which causes insertions at a load factor of 1 − 1=x to take expected time Θ(x2) (rather than the intuitive running time of Θ(x)). The dangers of primary clustering, first discovered by Knuth in 1963, have now been taught to generations of computer scientists, and have influenced the design of some of the most widely used hash tables in production. We show that primary clustering is not the foregone conclusion that it is reputed to be. We demonstrate that seemingly small design decisions in how deletions are implemented have dramatic effects on the asymptotic performance of insertions: if these design decisions are made correctly, then even if a hash table operates continuously at a load factor of 1 − Θ(1=x), the expected amortized cost per insertion/deletion is O~(x). This is because the tombstones left behind by deletions can actually cause an anti-clustering effect that combats primary clustering. Interestingly, these design decisions, despite their remarkable effects, have historically been viewed as simply implementation-level engineering choices. -

Lock-Free Hopscotch Hashing

Lock-Free Hopscotch Hashing Robert Kelly∗ Barak A. Pearlmuttery Phil Maguirez Abstract ensure that reading the data structure is as cheap as In this paper we present a lock-free version of Hopscotch possible. The majority of operations on structures like Hashing. Hopscotch Hashing is an open addressing algo- hash tables are read operations, meaning that it pays rithm originally proposed by Herlihy, Shavit, and Tzafrir to optimise them for fast reads. [10], which is known for fast performance and excellent cache locality. The algorithm allows users of the table to skip or jump over irrelevant entries, allowing quick search, inser- tion, and removal of entries. Unlike traditional linear prob- ing, Hopscotch Hashing is capable of operating under a high load factor, as probe counts remain small. Our lock-free version improves on both speed, cache locality, and progress guarantees of the original, being a chimera of two concur- Figure 1: Table legend. The subscripts represent the rent hash tables. We compare our data structure to various optimal bucket index for an entry, the > represents other lock-free and blocking hashing algorithms and show a tombstone, and the ? represents an empty bucket. that its performance is in many cases superior to existing Bucket 1 contains the symbol for a tombstone, bucket 2 strategies. The proposed lock-free version overcomes some of contains an entry A which belongs at bucket 2, bucket the drawbacks associated with the original blocking version, 3 contains another entry B which also belongs at bucket leading to a substantial boost in scalability while maintain- 2, and bucket 4 is empty. -

SIMD Vectorized Hashing for Grouped Aggregation

SIMD Vectorized Hashing for Grouped Aggregation Bala Gurumurthy, David Broneske, Marcus Pinnecke, Gabriel Campero, and Gunter Saake Otto-von-Guericke-Universität, Magdeburg, Germany [email protected] Abstract. Grouped aggregation is a commonly used analytical func- tion. The common implementation of the function using hashing tech- niques suffers lower throughput rate due to the collision of the insert keys in the hashing techniques. During collision, the underlying tech- nique searches for an alternative location to insert keys. Searching an alternative location increases the processing time for an individual key thereby degrading the overall throughput. In this work, we use Single Instruction Multiple Data (SIMD) vectorization to search multiple slots at an instant followed by direct aggregation of results. We provide our experimental results of our vectorized grouped aggregation with various open-addressing hashing techniques using several dataset distributions and our inferences on them. Among our findings, we observe different impacts of vectorization on these techniques. Namely, linear probing and two-choice hashing improve their performance with vectorization, whereas cuckoo and hopscotch hashing show a negative impact. Overall, we provide in this work a basic structure of a dedicated SIMD acceler- ated grouped aggregation framework that can be adapted with different hashing techniques. Keywords: SIMD · hashing techniques · hash based grouping · grouped aggregation · direct aggregation · open addressing 1 Introduction Many analytical processing queries (e.g., OLAP) are directly related to the effi- ciency of their underlying functions. Some of these internal functions are time- consuming and even require the complete dataset to be processed before produc- ing the results. One of such compute-intensive internal functions is a grouped aggregation function that affects the overall throughput of the user query. -

Script ( PDF , 8 MB )

Main Memory DBMS G. Moerkotte February 4, 2019 2 Contents 1 Introduction9 2 Hardware 11 2.1 Memory................................ 11 2.1.1 Aligned vs. Unaligned Access................ 11 2.1.2 Address Translation (Virtual Memory)........... 11 2.1.3 Caches and the Memory Hierachie............. 15 2.1.4 Some Numbers of Some Processors............. 18 2.1.5 Prefetching.......................... 18 2.2 CPU.................................. 19 2.2.1 Pipelining........................... 19 2.2.2 Out-Of-Order-Execution................... 20 2.2.3 (Cost of) Branch (Mis-) Prediction............. 21 2.2.4 SIMD............................. 23 2.2.5 Simultaneous multithreading (SMT)............ 30 2.3 Cache Coherence........................... 30 2.4 Synchronization Primitives..................... 32 2.5 NUMA................................. 32 2.6 Performance Monitoring Unit.................... 34 2.7 References............................... 35 3 Operating System 37 4 Hash Tables 39 4.1 Hash Functions............................ 39 4.1.1 Why hash-functions matter................. 40 4.1.2 Properties........................... 42 4.1.3 Uniformity.......................... 42 4.1.4 Average-case Search Length................. 42 4.1.5 Expected Length of the Longest Probe Sequence (llps).. 42 4.1.6 Universality.......................... 43 4.1.7 k-Universal.......................... 46 4.1.8 (c; k)-Universal........................ 46 4.1.9 Dietzfelbinger: Universality without Primes........ 47 4.1.10 k-universal Hash Functions................. 47 4.1.11 Tabulation Hashing..................... 48 3 4 CONTENTS 4.1.12 Hashing string values.................... 48 4.2 Hash Table Organization....................... 49 4.2.1 Two versions of Chained Hashtable............. 49 4.2.2 Cuckoo-Hashing....................... 49 4.2.3 Robin-Hood-Hashing..................... 50 4.2.4 Hopscotch-Hashing...................... 50 5 Compression 51 6 Storage Layout 53 6.1 Row stores and Column Stores.................. -

Implementation and Performance Analysis of Hash Functions and Collision Resolutions

6.851: Advanced Data Structures Implementation and Performance Analysis of Hash Functions and Collision Resolutions Victor Jakubiuk Sanja Popovic Massachusetts Institute of Technology Spring 2012 Contents 1 Introduction 2 2 Theoretical background 2 2.1 Hash functions . 2 2.2 Collision resolution . 2 2.2.1 Linear and quadratic probing . 3 2.2.2 Cuckoo hashing . 3 2.2.3 Hopscotch hashing . 3 3 The Main Benchmark 4 4 Discussion 6 5 Conclusion 7 References 8 1 1 Introduction Hash functions are among the most useful functions in computer science as they map large sets of keys to smaller sets of hash values. Hash functions are most commonly used for hash tables, when a fast key-value look up is required. Because they are so ubiquitous, improving their performance has a potential for large impact. We implemented two hash functions (simple tabulation hashing and multiplication hash- ing), as well as four collision resolution methods (linear probing, quadratic probing, cuckoo hashing and hopscotch hashing). In total, we have eight combinations of hash functions and collision resolutions. They are compared against three most popular C++ hash libraries: unordered map structure in C++11 (GCC 4.4.5), unordered map in Boost library (version 1.42.0) and hash map in libstdc (GCC C++ extension library, GCC 4.4.5). We analyzed raw performance parameters: running time, memory consumption and cache behavior. We also did a comparison of the number of probes in the table depending on the load factor, as well as dependency of running time and load factor. 2 Theoretical background We begin with a brief theoretical overview of the hash functions and collisions resolution methods used. -

Hash Tables II Parallelism Announcements

Data Structures and Hash Tables II Parallelism Announcements Exercise 4 due TODAY. We’ll try to have it graded before the midterm. Section is midterm review. P2 Checkpoint is a week away Today Collision Resolution part II: Open Addressing General Purpose hashCode() int result = 17; // start at a prime foreach field f int fieldHashcode = boolean: (f ? 1: 0) byte, char, short, int: (int) f long: (int) (f ^ (f >>> 32)) float: Float.floatToIntBits(f) double: Double.doubleToLongBits(f), then above Object: object.hashCode( ) result = 31 * result + fieldHashcode; return result; Collision Resolution Last time: Separate Chaining when you have a collision, stuff everything into that spot Using a data structure. Today: Open Addressing If the spot is full, go somewhere else. Where? How do we find the elements later? Linear Probing First idea: linear probing h(key) % TableSize full? Try (h(key) + 1) % TableSize. Also full? (h(key) + 2) % TableSize. Also full? (h(key) + 3) % TableSize. Also full? (h(key) + 4) % TableSize. … Linear Probing Example Insert the hashes: 38, 19, 8, 109, 10 into an empty hash table of size 10. 0 1 2 3 4 5 6 7 8 9 8 109 10 38 19 How Does Delete Work? Just find the key and remove it, what’s the problem? How do we know if we should keep probing on a find? Delete 109 and call find on 10. If we delete something placed via probe we won’t be able to tell! If you’re using open addressing, you have to use lazy deletion. How Long Does Insert Take? If 휆 < 1 we’ll find a spot eventually. -

Practical Survey on Hash Tables

Practical Survey on Hash Tables Aurelian Țuțuianu In memoriam Mihai Pătraşcu (17 July 1982 – 5 June 2012) “I have no intention to ever teach computer science. I want to teach the love for computer science, and let the learning happen.” Teaching Statement (http://people.csail.mit.edu/mip/docs/job-application07/statements.pdf) Abstract • Hash table definition • Collision resolving schemas: – Chained hashing – Linear and quadratic probing – Cuckoo hashing • Some hash function theory • Simple tabulation hashing Omnipresence of hash tables • Symbol tables in compilers • Cache implementations • Database storages • Manage memory pages in Linux • Route tables • Large number of documents Hash Tables Considering a set of elements 푆 from a finite and much larger universe 푈. A hash table consists of: ℎ푎푠ℎ 푓푢푛푐푡표푛 ℎ: 푈 → {0, . , 푚 − 1} 푣푒푐푡표푟 푣 표푓 푠푧푒 푚 Collisions same hash for two different 26.17.41.60 keys 126.15.12.154 What to do? 202.223.224.33 • Ignore them 7.239.203.66 • Chain colliding values • Skip and try again 176.136.103.233 • Hash and displace • Find a perfect hash function War Story: cache with hash tables Problem: An application which application gets some data from an expensive repository. hash table Solution: Hash table with collision replacement. Key point: a big chunk of users “watched” a lot of common data. Data source Collision Resolution Schemas • Chained hashing • Open hash: linear and quadratic probing • Cuckoo hashing And many many others: perfect hashing, coalesced hashing, Robin Hood hashing, hopscotch hashing, etc. Chained Hashing Each slot contains a linked list. 0 푛 푂( ) = 푂(1) for all operations. -

Hashing: Part 2 332

Adam BlankLecture 11 Winter 2017 CSE 332: Data Structures and Parallelism CSE Hashing: Part 2 332 Data Structures and Parallelism HashTable Review 1 Hashing Choices 2 Hash Tables 1 Choose a hash function Provides 1 core Dictionary operations (on average) 2 Choose a table size We call the key space the “universe”: U and the Hash Table T O( ) We should use this data structure only when we expect U T 3 Choose a collision resolution strategy (Or, the key space is non-integer values.) S S >> S S Separate Chaining Linear Probing Quadratic Probing Double Hashing hash function mod T collision? E int Table Index Fixed Table Index Other issues to consider: S S ÐÐÐÐÐÐÐ→Hash Table Client ÐÐÐÐ→ HashÐÐÐÐÐ→ Table Library 4 Choose an implementation of deletion ´¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¸¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¶´¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¸¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¶ 5 Choose a λ that means the table is “too full” Another Consideration? What do we do when λ (the load factor) gets too large? We discussed the first few of these last time. We’ll discuss the rest today. Review: Collisions 3 Open Addressing 4 Definition (Collision) Definition (Open Addressing) A collision is when two distinct keys map to the same location in the Open Addressing is a type of collision resolution strategy that resolves hash table. collisions by choosing a different location when the natural choice is full. A good hash function attempts to avoid as many collisions as possible, There are many types of open addressing. Here’s the key ideas: but they are inevitable. We must be able to duplicate the path we took. How do we deal with collisions? We want to use all the spaces in the table.