Hash Tables II Parallelism Announcements

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

SAHA: a String Adaptive Hash Table for Analytical Databases

applied sciences Article SAHA: A String Adaptive Hash Table for Analytical Databases Tianqi Zheng 1,2,* , Zhibin Zhang 1 and Xueqi Cheng 1,2 1 CAS Key Laboratory of Network Data Science and Technology, Institute of Computing Technology, Chinese Academy of Sciences, Beijing 100190, China; [email protected] (Z.Z.); [email protected] (X.C.) 2 University of Chinese Academy of Sciences, Beijing 100049, China * Correspondence: [email protected] Received: 3 February 2020; Accepted: 9 March 2020; Published: 11 March 2020 Abstract: Hash tables are the fundamental data structure for analytical database workloads, such as aggregation, joining, set filtering and records deduplication. The performance aspects of hash tables differ drastically with respect to what kind of data are being processed or how many inserts, lookups and deletes are constructed. In this paper, we address some common use cases of hash tables: aggregating and joining over arbitrary string data. We designed a new hash table, SAHA, which is tightly integrated with modern analytical databases and optimized for string data with the following advantages: (1) it inlines short strings and saves hash values for long strings only; (2) it uses special memory loading techniques to do quick dispatching and hashing computations; and (3) it utilizes vectorized processing to batch hashing operations. Our evaluation results reveal that SAHA outperforms state-of-the-art hash tables by one to five times in analytical workloads, including Google’s SwissTable and Facebook’s F14Table. It has been merged into the ClickHouse database and shows promising results in production. Keywords: hash table; analytical database; string data 1. -

Chapter 5 Hashing

Introduction hashing performs basic operations, such as insertion, Chapter 5 deletion, and finds in average time Hashing 2 Hashing Hashing Functions a hash table is merely an of some fixed size let be the set of search keys hashing converts into locations in a hash hash functions map into the set of in the table hash table searching on the key becomes something like array lookup ideally, distributes over the slots of the hash table, to minimize collisions hashing is typically a many-to-one map: multiple keys are if we are hashing items, we want the number of items mapped to the same array index hashed to each location to be close to mapping multiple keys to the same position results in a example: Library of Congress Classification System ____________ that must be resolved hash function if we look at the first part of the call numbers two parts to hashing: (e.g., E470, PN1995) a hash function, which transforms keys into array indices collision resolution involves going to the stacks and a collision resolution procedure looking through the books almost all of CS is hashed to QA75 and QA76 (BAD) 3 4 Hashing Functions Hashing Functions suppose we are storing a set of nonnegative integers we can also use the hash function below for floating point given , we can obtain hash values between 0 and 1 with the numbers if we interpret the bits as an ______________ hash function two ways to do this in C, assuming long int and double when is divided by types have the same length fast operation, but we need to be careful when choosing first method uses -

Chapter 5. Hash Tables

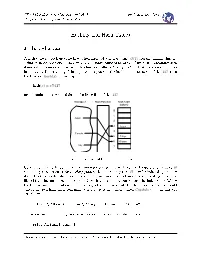

CSci 335 Software Design and Analysis 3 Prof. Stewart Weiss Chapter 5 Hashing and Hash Tables Hashing and Hash Tables 1 Introduction A hash table is a look-up table that, when designed well, has nearly O(1) average running time for a nd or insert operation. More precisely, a hash table is an array of xed size containing data items with unique keys, together with a function called a hash function that maps keys to indices in the table. For example, if the keys are integers and the hash table is an array of size 127, then the function hash(x), dened by hash(x) = x%127 maps numbers to their modulus in the nite eld of size 127. Key Space Hash Function Hash Table (values) 0 1 Japan 2 3 Rome 4 Canada 5 Tokyo 6 Ottawa Italy 7 Figure 1: A hash table for world capitals. Conceptually, a hash table is a much more general structure. It can be thought of as a table H containing a collection of (key, value) pairs with the property that H may be indexed by the key itself. In other words, whereas you usually reference an element of an array A by writing something like A[i], using an integer index value i, with a hash table, you replace the index value "i" by the key contained in location i in order to get the value associated with it. For example, we could conceptualize a hash table containing world capitals as a table named Capitals that contains the set of pairs (Italy, Rome), (Japan, Tokyo), (Canada, Ottawa) and so on, and conceptually (not actually) you could write a statement such as print Capitals[Italy] This work is licensed under the Creative Commons Attribution-ShareAlike 4.0 International License. -

Linear Probing Revisited: Tombstones Mark the Death of Primary Clustering

Linear Probing Revisited: Tombstones Mark the Death of Primary Clustering Michael A. Bender* Bradley C. Kuszmaul William Kuszmaul† Stony Brook Google Inc. MIT Abstract First introduced in 1954, the linear-probing hash table is among the oldest data structures in computer science, and thanks to its unrivaled data locality, linear probing continues to be one of the fastest hash tables in practice. It is widely believed and taught, however, that linear probing should never be used at high load factors; this is because of an effect known as primary clustering which causes insertions at a load factor of 1 − 1=x to take expected time Θ(x2) (rather than the intuitive running time of Θ(x)). The dangers of primary clustering, first discovered by Knuth in 1963, have now been taught to generations of computer scientists, and have influenced the design of some of the most widely used hash tables in production. We show that primary clustering is not the foregone conclusion that it is reputed to be. We demonstrate that seemingly small design decisions in how deletions are implemented have dramatic effects on the asymptotic performance of insertions: if these design decisions are made correctly, then even if a hash table operates continuously at a load factor of 1 − Θ(1=x), the expected amortized cost per insertion/deletion is O~(x). This is because the tombstones left behind by deletions can actually cause an anti-clustering effect that combats primary clustering. Interestingly, these design decisions, despite their remarkable effects, have historically been viewed as simply implementation-level engineering choices. -

Robin Hood Hashing

COP4530 Notes Summer 2019 TBD 1 Hashing Hashing is an old and time-honored method of accessing key/value pairs that have fairly random keys. The idea here is to create an array with a fixed size of N, and then “hash” the key to compute an index into the array. Ideally the hashing function should “spatter” the bits fairly evenly over the range N. 2 Hash functions As Weiss remarks, if the keys are just integers that are reasonably randomly distributed, simply using the mod function (key % N) (where N is prime) should work reasonably well. His example of a bad function would be one that has a table with 10 elements and keys that all end in 0, thus all computing to the same index of 0. For strings, Weiss points out that could just add the bytes in the string together into a sum and then again compute sum % N. This generalizes for any arbitrary key type; just add the actual bytes of the key in the same fashion. Another possibility is to use XOR rather the sum (though your XOR needs to be multibyte, with enough bytes to successfully get to the maximum range value.) You still have undesirable properties in the distribution of bits in most string coding schemes; ASCII leaves the high bit at zero, and UTF-8 unifor- mally sets the high bit for any code point outside of ASCII. This can be cured; C++ provides XOR-based hashing via FNV hashing as a standard option. Weiss gives this example for a good string hash function: 1 for(char c: key) 2 { 3 hash_value = 37 * hash_value+c; 4 } 5 return hash_value% table_size; 3 Collision resolution This is all well and good, but what happens when two dierent keys compute the same hash index? This is called a “collision” (and this term is used throughout the wider literature on hashing, and not just for the data structure hash table.) Resolving hashes, along with choosing hash functions, is among the primary concerns in designing hash tables. -

Hashing: Part 2 332

Adam BlankLecture 11 Winter 2017 CSE 332: Data Structures and Parallelism CSE Hashing: Part 2 332 Data Structures and Parallelism HashTable Review 1 Hashing Choices 2 Hash Tables 1 Choose a hash function Provides 1 core Dictionary operations (on average) 2 Choose a table size We call the key space the “universe”: U and the Hash Table T O( ) We should use this data structure only when we expect U T 3 Choose a collision resolution strategy (Or, the key space is non-integer values.) S S >> S S Separate Chaining Linear Probing Quadratic Probing Double Hashing hash function mod T collision? E int Table Index Fixed Table Index Other issues to consider: S S ÐÐÐÐÐÐÐ→Hash Table Client ÐÐÐÐ→ HashÐÐÐÐÐ→ Table Library 4 Choose an implementation of deletion ´¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¸¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¶´¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¸¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¹¶ 5 Choose a λ that means the table is “too full” Another Consideration? What do we do when λ (the load factor) gets too large? We discussed the first few of these last time. We’ll discuss the rest today. Review: Collisions 3 Open Addressing 4 Definition (Collision) Definition (Open Addressing) A collision is when two distinct keys map to the same location in the Open Addressing is a type of collision resolution strategy that resolves hash table. collisions by choosing a different location when the natural choice is full. A good hash function attempts to avoid as many collisions as possible, There are many types of open addressing. Here’s the key ideas: but they are inevitable. We must be able to duplicate the path we took. How do we deal with collisions? We want to use all the spaces in the table. -

Data Structures

Data structures PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Thu, 17 Nov 2011 20:55:22 UTC Contents Articles Introduction 1 Data structure 1 Linked data structure 3 Succinct data structure 5 Implicit data structure 7 Compressed data structure 8 Search data structure 9 Persistent data structure 11 Concurrent data structure 15 Abstract data types 18 Abstract data type 18 List 26 Stack 29 Queue 57 Deque 60 Priority queue 63 Map 67 Bidirectional map 70 Multimap 71 Set 72 Tree 76 Arrays 79 Array data structure 79 Row-major order 84 Dope vector 86 Iliffe vector 87 Dynamic array 88 Hashed array tree 91 Gap buffer 92 Circular buffer 94 Sparse array 109 Bit array 110 Bitboard 115 Parallel array 119 Lookup table 121 Lists 127 Linked list 127 XOR linked list 143 Unrolled linked list 145 VList 147 Skip list 149 Self-organizing list 154 Binary trees 158 Binary tree 158 Binary search tree 166 Self-balancing binary search tree 176 Tree rotation 178 Weight-balanced tree 181 Threaded binary tree 182 AVL tree 188 Red-black tree 192 AA tree 207 Scapegoat tree 212 Splay tree 216 T-tree 230 Rope 233 Top Trees 238 Tango Trees 242 van Emde Boas tree 264 Cartesian tree 268 Treap 273 B-trees 276 B-tree 276 B+ tree 287 Dancing tree 291 2-3 tree 292 2-3-4 tree 293 Queaps 295 Fusion tree 299 Bx-tree 299 Heaps 303 Heap 303 Binary heap 305 Binomial heap 311 Fibonacci heap 316 2-3 heap 321 Pairing heap 321 Beap 324 Leftist tree 325 Skew heap 328 Soft heap 331 d-ary heap 333 Tries 335 Trie -

Fundamental Data Structures

Fundamental Data Structures PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Thu, 17 Nov 2011 21:36:24 UTC Contents Articles Introduction 1 Abstract data type 1 Data structure 9 Analysis of algorithms 11 Amortized analysis 16 Accounting method 18 Potential method 20 Sequences 22 Array data type 22 Array data structure 26 Dynamic array 31 Linked list 34 Doubly linked list 50 Stack (abstract data type) 54 Queue (abstract data type) 82 Double-ended queue 85 Circular buffer 88 Dictionaries 103 Associative array 103 Association list 106 Hash table 107 Linear probing 120 Quadratic probing 121 Double hashing 125 Cuckoo hashing 126 Hopscotch hashing 130 Hash function 131 Perfect hash function 140 Universal hashing 141 K-independent hashing 146 Tabulation hashing 147 Cryptographic hash function 150 Sets 157 Set (abstract data type) 157 Bit array 161 Bloom filter 166 MinHash 176 Disjoint-set data structure 179 Partition refinement 183 Priority queues 185 Priority queue 185 Heap (data structure) 190 Binary heap 192 d-ary heap 198 Binomial heap 200 Fibonacci heap 205 Pairing heap 210 Double-ended priority queue 213 Soft heap 218 Successors and neighbors 221 Binary search algorithm 221 Binary search tree 228 Random binary tree 238 Tree rotation 241 Self-balancing binary search tree 244 Treap 246 AVL tree 249 Red–black tree 253 Scapegoat tree 268 Splay tree 272 Tango tree 286 Skip list 308 B-tree 314 B+ tree 325 Integer and string searching 330 Trie 330 Radix tree 337 Directed acyclic word graph 339 Suffix tree 341 Suffix array 346 van Emde Boas tree 349 Fusion tree 353 References Article Sources and Contributors 354 Image Sources, Licenses and Contributors 359 Article Licenses License 362 1 Introduction Abstract data type In computing, an abstract data type (ADT) is a mathematical model for a certain class of data structures that have similar behavior; or for certain data types of one or more programming languages that have similar semantics. -

Choosing Best Hashing Strategies and Hash Functions

Choosing Best Hashing Strategies and Hash Functions Thesis submitted in partial fulfillment of the requirements for the award of Degree of Master of Engineering in Software Engineering By: Name: Mahima Singh Roll No: 80731011 Under the supervision of: Dr. Deepak Garg Assistant Professor, CSED & Mr. Ravinder Kumar Lecturer, CSED COMPUTER SCIENCE AND ENGINEERING DEPARTMENT THAPAR UNIVERSITY PATIALA – 147004 JUNE 2009 Certificate I hereby certify that the work which is being presented in the thesis report entitled, “Choosing Best Hashing Strategies and Hash Functions”, submitted by me in partial fulfillment of the requirements for the award of degree of Master of Engineering in Computer Science and Engineering submitted in Computer Science and Engineering Department of Thapar University, Patiala, is an authentic record of my own work carried out under the supervision of Dr. Deepak Garg and Mr. Ravinder Kumar and refers other researcher’s works which are duly listed in the reference section. The matter presented in this thesis has not been submitted for the award of any other degree of this or any other university. (Mahima Singh) This is to certify that the above statement made by the candidate is correct and true to the best of my knowledge. (Dr. Deepak Garg) Assistant Professor, CSED Thapar University Patiala And (Mr. Ravinder Kumar) Lecturer, CSED Thapar University Patiala Countersigned by: Dr. Rajesh Kumar Bhatia (Dr. R.K.SHARMA) Assistant Professor & Head Dean (Academic Affairs) Computer Science & Engineering. Department Thapar University, Thapar University Patiala. Patiala. i Acknowledgement No volume of words is enough to express my gratitude towards my guide, Dr. Deepak Garg Assistant Professor. -

Hashing Dynamic Dictionaries

Hashing Dynamic Dictionaries Operations: • create • insert • find • remove • max/ min • write out in sorted order Only defined for object classes that are Comparable Hash tables Operations: • create • insert • find • remove • max/ min • write out in sorted order Only defined for object classes that are Comparable have equals defined Hash tables Operations: Java specific: From the Java • create documentation • insert • find • remove • max/ min • write out in sorted order Only defined for object classes that are Comparable have equals defined Hash tables – implementation • Have a table (an array) of a fixed tableSize • A hash function determines where in this table each item should be stored 2174 % 10 = 4 hash(item)item % tableSize [a positive integer] THE DESIGN QUESTIONS 1. Choosing tableSize 2. Choosing a hash function 3. What to do when a collision occurs Hash tables – tableSize • Should depend on the (maximum) number of values to be stored • Let λ = [number of values stored]/ tableSize • Load factor of the hash table • Restrict λ to be at most 1 (or ½) • Require tableSize to be a prime number • to “randomize” away any patterns that may arise in the hash function values • The prime should be of the form (4k+3) [for reasons to be detailed later] Hash tables – the hash function If the objects to be stored have integer keys (e.g., student IDs) hash(k) = k is generally OK, unless the keys have “patterns” Otherwise, some “randomized” way to obtain an integer Hash tables – the hash function If the objects to be stored have integer keys (e.g., -

7.7 Hashing 7.7 Hashing 7.7 Hashing 7.7 Hashing

7.7 Hashing 7.7 Hashing Dictionary: Definitions: ñ S.insert(x): Insert an element x. ñ Universe U of keys, e.g., U N0. U very large. ñ S.delete(x): Delete the element pointed to by x. ⊆ ñ Set S U of keys, S m n. ñ S.search(k): Return a pointer to an element e with ⊆ j j = ≤ key[e] k in S if it exists; otherwise return null. ñ Array T[0; : : : ; n 1] hash-table. = − ñ Hash function h : U [0; : : : ; n 1]. ! − So far we have implemented the search for a key by carefully choosing split-elements. The hash-function h should fulfill: ñ Fast to evaluate. Then the memory location of an object x with key k is determined ñ Small storage requirement. by successively comparing k to split-elements. ñ Good distribution of elements over the whole table. Hashing tries to directly compute the memory location from the given key. The goal is to have constant search time. EADS EADS 7.7 Hashing c Ernst Mayr, Harald Räcke 201 c Ernst Mayr, Harald Räcke 202 7.7 Hashing 7.7 Hashing Ideally the hash function maps all keys to different memory Suppose that we know the set S of actual keys (no insert/no locations. delete). Then we may want to design a simple hash-function that maps all these keys to different memory locations. k1 k1 k1 k7 k7 k1 U k7 universe k6 k3 x k7 of keys U universe k6 k3 x k3 of keys k6 S (actual keys) k3 k6 This special case is known as Direct Addressing. -

Chapter 19 Hashing

Chapter 19 Hashing hash: transitive verb1 1. (a) to chop (as meat and potatoes) into small pieces (b) confuse, muddle 2. ... This is the definition of hash from which the computer term was derived. The idea of hashing as originally conceived was to take values and to chop and mix them to the point that the original values are muddled. The term as used in computer science dates back to the early 1950’s. More formally the idea of hashing is to approximate a random function h : α ! β from a source (or universe) set U of type α to a destination set of type β. Most often the source set is significantly larger than the destination set, so the function not only chops and mixes but also reduces. In fact the source set might have infinite size, such as all character strings, while the destination set always has finite size. Also the source set might consist of complicated elements, such as the set of all directed graphs, while the destination are typically the integers in some fixed range. Hash functions are therefore many to one functions. Using an actual randomly selected function from the set of all functions from α to β is typically not practical due to the number of such functions and hence the size (number of bits) needed to represent such a function. Therefore in practice one uses some pseudorandom function. Exercise 19.1. How many hash functions are there that map from a source set of size n to the integers from 1 to m? How many bits does it take to represent them? What if the source set consists of character strings of length up to 20? Assume there are 100 possible characters.