Continuous Improvement, Probability, and Statistics Using Creative Hands-On Techniques Continuous Improvement Series Series Editors: Elizabeth A

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Inspiring Mathematical Creativity Through Juggling

Journal of Humanistic Mathematics Volume 10 | Issue 2 July 2020 Inspiring Mathematical Creativity through Juggling Ceire Monahan Montclair State University Mika Munakata Montclair State University Ashwin Vaidya Montclair State University Sean Gandini Follow this and additional works at: https://scholarship.claremont.edu/jhm Part of the Arts and Humanities Commons, and the Mathematics Commons Recommended Citation Monahan, C. Munakata, M. Vaidya, A. and Gandini, S. "Inspiring Mathematical Creativity through Juggling," Journal of Humanistic Mathematics, Volume 10 Issue 2 (July 2020), pages 291-314. DOI: 10.5642/ jhummath.202002.14 . Available at: https://scholarship.claremont.edu/jhm/vol10/iss2/14 ©2020 by the authors. This work is licensed under a Creative Commons License. JHM is an open access bi-annual journal sponsored by the Claremont Center for the Mathematical Sciences and published by the Claremont Colleges Library | ISSN 2159-8118 | http://scholarship.claremont.edu/jhm/ The editorial staff of JHM works hard to make sure the scholarship disseminated in JHM is accurate and upholds professional ethical guidelines. However the views and opinions expressed in each published manuscript belong exclusively to the individual contributor(s). The publisher and the editors do not endorse or accept responsibility for them. See https://scholarship.claremont.edu/jhm/policies.html for more information. Inspiring Mathematical Creativity Through Juggling Ceire Monahan Department of Mathematical Sciences, Montclair State University, New Jersey, USA -

Happy Birthday!

THE THURSDAY, APRIL 1, 2021 Quote of the Day “That’s what I love about dance. It makes you happy, fully happy.” Although quite popular since the ~ Debbie Reynolds 19th century, the day is not a public holiday in any country (no kidding). Happy Birthday! 1998 – Burger King published a full-page advertisement in USA Debbie Reynolds (1932–2016) was Today introducing the “Left-Handed a mega-talented American actress, Whopper.” All the condiments singer, and dancer. The acclaimed were rotated 180 degrees for the entertainer was first noticed at a benefit of left-handed customers. beauty pageant in 1948. Reynolds Thousands of customers requested was soon making movies and the burger. earned a nomination for a Golden Globe Award for Most Promising 2005 – A zoo in Tokyo announced Newcomer. She became a major force that it had discovered a remarkable in Hollywood musicals, including new species: a giant penguin called Singin’ In the Rain, Bundle of Joy, the Tonosama (Lord) penguin. With and The Unsinkable Molly Brown. much fanfare, the bird was revealed In 1969, The Debbie Reynolds Show to the public. As the cameras rolled, debuted on TV. The the other penguins lifted their beaks iconic star continued and gazed up at the purported Lord, to perform in film, but then walked away disinterested theater, and TV well when he took off his penguin mask into her 80s. Her and revealed himself to be the daughter was actress zoo director. Carrie Fisher. ©ActivityConnection.com – The Daily Chronicles (CAN) HURSDAY PRIL T , A 1, 2021 Today is April Fools’ Day, also known as April fish day in some parts of Europe. -

UNIVERSITY of CALIFORNIA, SAN DIEGO Flips and Juggles A

UNIVERSITY OF CALIFORNIA, SAN DIEGO Flips and Juggles ADissertationsubmittedinpartialsatisfactionofthe requirements for the degree Doctor of Philosophy in Mathematics by Jay Cummings Committee in charge: Professor Ron Graham, Chair Professor Jacques Verstra¨ete, Co-Chair Professor Fan Chung Professor Shachar Lovett Professor Je↵Remmel Professor Alexander Vardy 2016 Copyright Jay Cummings, 2016 All rights reserved. The Dissertation of Jay Cummings is approved, and it is acceptable in quality and form for publication on micro- film and electronically: Co-Chair Chair University of California, San Diego 2016 iii DEDICATION To mom and dad. iv EPIGRAPH We’ve taught you that the Earth is round, That red and white make pink. But something else, that matters more – We’ve taught you how to think. — Dr. Seuss v TABLE OF CONTENTS Signature Page . iii Dedication . iv Epigraph ...................................... v TableofContents.................................. vi ListofFigures ................................... viii List of Tables . ix Acknowledgements ................................. x AbstractoftheDissertation . xii Chapter1 JugglingCards ........................... 1 1.1 Introduction . 1 1.1.1 Jugglingcardsequences . 3 1.2 Throwingoneballatatime . 6 1.2.1 The unrestricted case . 7 1.2.2 Throwingeachballatleastonce . 9 1.3 Throwing m 2ballsatatime. 16 1.3.1 A digraph≥ approach . 17 1.3.2 Adi↵erentialequationsapproach . 21 1.4 Throwing di↵erent numbers of balls at di↵erent times . 23 1.4.1 Boson normal ordering . 24 1.5 Preserving ordering while throwing . 27 1.6 Juggling card sequences with minimal crossings . 32 1.6.1 A reduction bijection with Dyck Paths . 36 1.6.2 A parenthesization bijection with Dyck Paths . 48 1.6.3 Non-crossingpartitions . 52 1.6.4 Counting using generating functions . -

Biblioteca Digital De Cartomagia, Ilusionismo Y Prestidigitación

Biblioteca-Videoteca digital, cartomagia, ilusionismo, prestidigitación, juego de azar, Antonio Valero Perea. BIBLIOTECA / VIDEOTECA INDICE DE OBRAS POR TEMAS Adivinanzas-puzzles -- Magia anatómica Arte referido a los naipes -- Magia callejera -- Música -- Magia científica -- Pintura -- Matemagia Biografías de magos, tahúres y jugadores -- Magia cómica Cartomagia -- Magia con animales -- Barajas ordenadas -- Magia de lo extraño -- Cartomagia clásica -- Magia general -- Cartomagia matemática -- Magia infantil -- Cartomagia moderna -- Magia con papel -- Efectos -- Magia de escenario -- Mezclas -- Magia con fuego -- Principios matemáticos de cartomagia -- Magia levitación -- Taller cartomagia -- Magia negra -- Varios cartomagia -- Magia en idioma ruso Casino -- Magia restaurante -- Mezclas casino -- Revistas de magia -- Revistas casinos -- Técnicas escénicas Cerillas -- Teoría mágica Charla y dibujo Malabarismo Criptografía Mentalismo Globoflexia -- Cold reading Juego de azar en general -- Hipnosis -- Catálogos juego de azar -- Mind reading -- Economía del juego de azar -- Pseudohipnosis -- Historia del juego y de los naipes Origami -- Legislación sobre juego de azar Patentes relativas al juego y a la magia -- Legislación Casinos Programación -- Leyes del estado sobre juego Prestidigitación -- Informes sobre juego CNJ -- Anillas -- Informes sobre juego de azar -- Billetes -- Policial -- Bolas -- Ludopatía -- Botellas -- Sistemas de juego -- Cigarrillos -- Sociología del juego de azar -- Cubiletes -- Teoria de juegos -- Cuerdas -- Probabilidad -

2009–2010 Season Sponsors

2009–2010 Season Sponsors The City of Cerritos gratefully thanks our 2009–2010 Season Sponsors for their generous support of the Cerritos Center for the Performing Arts. YOUR FAVORITE ENTERTAINERS, YOUR FAVORITE THEATER If your company would like to become a Cerritos Center for the Performing Arts sponsor, please contact the CCPA Administrative Offices at (562) 916-8510. THE CERRITOS CENTER FOR THE PERFORMING ARTS (CCPA) thanks the following CCPA Associates who have contributed to the CCPA’s Endowment Fund. The Endowment Fund was established in 1994 under the visionary leadership of the Cerritos City Council to ensure that the CCPA would remain a welcoming, accessible, and affordable venue in which patrons can experience the joy of entertainment and cultural enrichment. For more information about the Endowment Fund or to make a contribution, please contact the CCPA Administrative Offices at (562) 916-8510. Benefactor Audrey and Rick Rodriguez Yvonne Cattell Renee Fallaha $50,001-$100,000 Marilynn and Art Segal Rodolfo Chacon Heather M. Ferber José Iturbi Foundation Kirsten and Craig M. Springer, Joann and George Chambers Steven Fischer Ph.D. Rodolfo Chavez The Fish Company Masaye Stafford Patron Liming Chen Elizabeth and Terry Fiskin Charles Wong $20,001-$50,000 Wanda Chen Louise Fleming and Tak Fujisaki Bryan A. Stirrat & Associates Margie and Ned Cherry Jesus Fojo The Capital Group Companies Friend Drs. Frances and Philip Chinn Anne Forman Charitable Foundation $1-$1,000 Patricia Christie Dr. Susan Fox and Frank Frimodig Richard Christy National Endowment for the Arts Maureen Ahler Sharon Frank Crista Qi and Vincent Chung Eleanor and David St. -

Analysis and Visualization of Collective Motion in Football Analysis of Youth Football Using GPS and Visualization of Professional Football

UPTEC F 15068 Examensarbete 30 hp December 2015 Analysis and visualization of collective motion in football Analysis of youth football using GPS and visualization of professional football Emil Rosén Abstract Analysis and visualization of collective motion in football Emil Rosén Teknisk- naturvetenskaplig fakultet UTH-enheten Football is one of the biggest sports in the world. Professional teams track their player's positions using GPS (Global Positioning System). This report is divided into Besöksadress: two parts, both focusing on applying collective motion to football. Ångströmlaboratoriet Lägerhyddsvägen 1 Hus 4, Plan 0 The goal of the first part was to both see if a set of cheaper GPS units could be used to analyze the collective motion of a youth football team. 15 football players did two Postadress: experiments and played three versus three football matches against each other while Box 536 751 21 Uppsala wearing a GPS. The first experiment measured the player's ability to control the ball while the second experiment measured how well they were able to move together as Telefon: a team. Different measurements were measured from the match and Spearman 018 – 471 30 03 correlations were calculated between measurements from the experiments and Telefax: matches. Players which had good ball control also scored more goals in the match and 018 – 471 30 00 received more passes. However, they also took the middle position in the field which naturally is a position which receives more passes. Players which were correlated Hemsida: during the team experiment were also correlated with team-members in the match. http://www.teknat.uu.se/student But, this correlation was weak and the experiment should be done again with more players. -

Counter Intelligence Is the Latest of a 36 Page Printed Booklet Which Do and It Is Completely Baffling, Release from the Brilliant Mind of Is Clear and Concise

Issue No. 3 Lite MANDY FRIEND MUDEN NOT FOE ALLAN NATURAL BORN DE-VAL THRILLER! THE OPENER A WORD FROM THE EDITOR ow, we are into in Comedy Clubs after giving helps to fill us in on everything our third edition up her attempts to become an that is happening in this niche of Magicseen Lite actress, and although her rise entertainment world. already, yet it only has been steady rather than seems minutes meteoric, her good showing on What else have we extracted from sensible buying decisions. ago that we Britain’s Got Talent has helped the full September Magicseen If you enjoy this free taster edition Wdecided to go ahead with the to firmly established her act. We to include here? Well, there’s of Magicseen, maybe we can project at all! It’s great to see are delighted to provide further Friend Not Foe, which is an article tempt you to sign up for the that lots of readers are taking background on her for you to exploring how we can harness whole thing! We have 1 or 2 year advantage of these special enjoy in this issue. the ‘comedian’ in the audience printed copy or download subs taster issues and we hope to enhance our act rather than available, and subscribers not that you will enjoy this latest Escapology is an allied art to destroy it, we offer a Masterclass only get the full versions of every one too. magic that we rarely get to routine from David Regal’s brand issue 6 times a year, they also feature in Magicseen, so we are new book Interpreting Magic, we get other benefits too. -

Abbott Downloads Over 1100 Downloads

2013 ABBOTT DOWNLOADS OVER 1100 DOWNLOADS Thank you for taking the time to download this catalog. We have organized the downloads as they are on the website – Access Point Worldwide, Books, Manuscripts, Workshop Plans, Tops Magazines, Free With Purchase. Clicking on a link will take you directly to that category. Greg Bordner Abbott Magic Company 5/3/2013 Contents ACCESS POINT WORLDWIDE ............................................................................................................ 4 ABBOTT DOWNLOADABLE BOOKS ............................................................................................... 19 ABBOTT DOWNLOADABLE MANUSCRIPTS ................................................................................ 23 ABBOTT DOWNLOADABLE WORKSHOP PLANS ....................................................................... 26 ABBOTT DOWNLOADABLE TOPS MAGAZINES ......................................................................... 30 ABBOTT DOWNLOADABLE INSTRUCTIONS .............................................................................. 32 ABBOTT FREE DOWNLOADS ........................................................................................................... 50 ACCESS POINT WORLDWIDE The latest downloads from around the world – Downloads range in price 100 percent Commercial Volume 1 - Comedy Stand Up 100 percent Commercial Volume 2 - Mentalism 100 percent Commercial Volume 3 - Closeup Magic 21 by Shin Lim, Donald Carlson & Jose Morales video 24Seven Vol. 1 by John Carey and RSVP Magic video 24Seven Vol. -

Roberto Giobbi's

A K R . bertoS Dear Roberto Giobbi can you help me develop a philosophy of magic... ?(...and 51 other vital questions for the performing magician.) Roberto Giobbi’s A K R . bertoS Can you help me develop a philosophy of magic... (...and 51 other vital questions for the performing magician.) Designed by Barbara Giobbi-Ebnöther Photographs by Barbara Giobbi-Ebnöther Completely Revised Edition June 2014 Lybrary.com ASK ROBERTO Contents Foreword 6 1. Injog Overhand Shuffle 10 2. Presentation Ideas 12 3. Three Card Monte 15 4. How to Study 16 5. Staystack 18 6. Fear of Starting to Perform 20 7. Memorized Version of Out of Sight Out of Mind 28 8. Think Of A Card Routines 30 9. Gilbreath Principle 33 10. Magician is Only an Actor Playing the Role of a Magician 36 11. Too Perfect Theory 45 12. How Many Effects? 51 13. Creative Process 54 14. Bottom Deal Applications 61 15. Philosophy of Magic 65 16. Origin of Display Pass 68 17. Trick That Can’t Be Explained 73 18. In Spectator’s Hands 77 19. Effect Categories for Cards 82 20. Vernon’s Travellers 97 21. Why do Magic? 101 22. Ten Best Card Effects 104 23. Practice 115 24. Best Self-Working Card Trick 118 25. Starting with Card Magic 121 26. Tabled Faro/Riffle Shuffle 125 27. Delayed Setup 129 28. Program Construction of an Act 133 29. How To Prepare For A Competition 143 30. Dirty Cards 149 31. Constructivism 155 32. Favorite Card Routine 162 33. Favorite ESP Card Trick 169 4 . -

David Copperfield PAGE 36

JUNE 2012 DAVID COPPERFIELD PAGE 36 MAGIC - UNITY - MIGHT Editor Michael Close Editor Emeritus David Goodsell Associate Editor W.S. Duncan Proofreader & Copy Editor Lindsay Smith Art Director Lisa Close Publisher Society of American Magicians, 6838 N. Alpine Dr. Parker, CO 80134 Copyright © 2012 Subscription is through membership in the Society and annual dues of $65, of which $40 is for 12 issues of M-U-M. All inquiries concerning membership, change of address, and missing or replacement issues should be addressed to: Manon Rodriguez, National Administrator P.O. Box 505, Parker, CO 80134 [email protected] Skype: manonadmin Phone: 303-362-0575 Fax: 303-362-0424 Send assembly reports to: [email protected] For advertising information, reservations, and placement contact: Mona S. Morrison, M-U-M Advertising Manager 645 Darien Court, Hoffman Estates, IL 60169 Email: [email protected] Telephone/fax: (847) 519-9201 Editorial contributions and correspondence concerning all content and advertising should be addressed to the editor: Michael Close - Email: [email protected] Phone: 317-456-7234 Fax: 866-591-7392 Submissions for the magazine will only be accepted by email or fax. VISIT THE S.A.M. WEB SITE www.magicsam.com To access “Members Only” pages: Enter your Name and Membership number exactly as it appears on your membership card. 4 M-U-M Magazine - JUNE 2012 M-U-M JUNE 2012 MAGAZINE Volume 102 • Number 1 S.A.M. NEWS 6 From the Editor’s Desk Photo by Herb Ritts 8 From the President’s Desk 11 M-U-M Assembly News 24 New Members 25 -

The Underground Sessions Page 36

MAY 2013 TONY CHANG DAN WHITE DAN HAUSS ERIC JONES BEN TRAIN THE UNDERGROUND SESSIONS PAGE 36 CHRIS MAYHEW MAY 2013 - M-U-M Magazine 3 MAGIC - UNITY - MIGHT Editor Michael Close Editor Emeritus David Goodsell Associate Editor W.S. Duncan Proofreader & Copy Editor Lindsay Smith Art Director Lisa Close Publisher Society of American Magicians, 6838 N. Alpine Dr. Parker, CO 80134 Copyright © 2012 Subscription is through membership in the Society and annual dues of $65, of which $40 is for 12 issues of M-U-M. All inquiries concerning membership, change of address, and missing or replacement issues should be addressed to: Manon Rodriguez, National Administrator P.O. Box 505, Parker, CO 80134 [email protected] Skype: manonadmin Phone: 303-362-0575 Fax: 303-362-0424 Send assembly reports to: [email protected] For advertising information, reservations, and placement contact: Mona S. Morrison, M-U-M Advertising Manager 645 Darien Court, Hoffman Estates, IL 60169 Email: [email protected] Telephone/fax: (847) 519-9201 Editorial contributions and correspondence concerning all content and advertising should be addressed to the editor: Michael Close - Email: [email protected] Phone: 317-456-7234 Submissions for the magazine will only be accepted by email or fax. VISIT THE S.A.M. WEB SITE www.magicsam.com To access “Members Only” pages: Enter your Name and Membership number exactly as it appears on your membership card. 4 M-U-M Magazine - MAY 2013 M-U-M MAY 2013 MAGAZINE Volume 102 • Number 12 26 28 36 PAGE STORY 27 COVER S.A.M. NEWS 6 From -

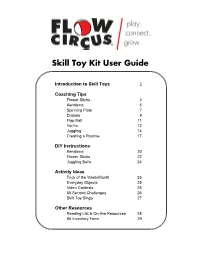

Skill Toy Kit User Guide

Skill Toy Kit User Guide Introduction to Skill Toys 2 Coaching Tips Flower Sticks 3 Kendama 5 Spinning Plate 7 Diabolo 9 Flop Ball 11 Yo-Yo 12 Juggling 14 Creating a Routine 17 DIY Instructions Kendama 20 Flower Sticks 22 Juggling Balls 24 Activity Ideas Trick of the Week/Month 25 Everyday Objects 25 Video Contests 25 60 Second Challenges 26 Skill Toy Bingo 27 Other Resources Reading List & On-line Resources 28 Kit Inventory Form 29 Introduction to Skill Toys You and your team are about to embark on a fun adventure into the world of skill toys. Each toy included in this kit has its own story, design, and body of tricks. Most of them have a lower barrier to entry than juggling, and the following lessons of good posture, mindfulness, problem solving, and goal setting apply to all. Before getting started with any of the skill toys, it is important to remember to relax. Stand with legs shoulder width apart, bend your knees, and take deep breaths. Many of them require you to absorb energy. We have found that the more stressed a player is, the more likely he/she will be to struggle. Not surprisingly, adults tend to have this problem more than kids. If you have players getting frustrated, remind them to relax. Playing should be fun! Also, remind yourself and other players that even the drops or mess-ups can give us valuable information. If they observe what is happening when they attempt to use the skill toys, they can use this information to problem solve.