EEG: the Missing Gap Between Controllers and Gestures

Total Pages: 16

File Type: pdf, Size: 1020 KB


Recommended publications


Xbox 360 Total Size (GB) 0 # of Items 0

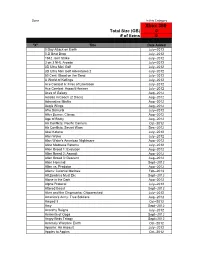

Category: Xbox 360. Total Size (GB): 0. Number of items: 0.

| Title | Date Added |
| --- | --- |
| 0 Day Attack on Earth | July 2012 |
| 0-D Beat Drop | July 2012 |
| 1942 Joint Strike | July 2012 |
| 3 on 3 NHL Arcade | July 2012 |
| 3D Ultra Mini Golf | July 2012 |
| 3D Ultra Mini Golf Adventures 2 | July 2012 |
| 50 Cent: Blood on the Sand | July 2012 |
| A World of Keflings | July 2012 |
| Ace Combat 6: Fires of Liberation | July 2012 |
| Ace Combat: Assault Horizon | July 2012 |
| Aces of Galaxy | Aug 2012 |
| Adidas miCoach (2 Discs) | Aug 2012 |
| Adrenaline Misfits | Aug 2012 |
| Aegis Wings | Aug 2012 |
| Afro Samurai | July 2012 |
| After Burner: Climax | Aug 2012 |
| Age of Booty | Aug 2012 |
| Air Conflicts: Pacific Carriers | Oct 2012 |
| Air Conflicts: Secret Wars | Dec 2012 |
| Akai Katana | July 2012 |
| Alan Wake | July 2012 |
| Alan Wake's American Nightmare | Aug 2012 |
| Alice Madness Returns | July 2012 |
| Alien Breed 1: Evolution | Aug 2012 |
| Alien Breed 2: Assault | Aug 2012 |
| Alien Breed 3: Descent | Aug 2012 |
| Alien Hominid | Sept 2012 |
| Alien vs. Predator | Aug 2012 |
| Aliens: Colonial Marines | Feb 2013 |
| All Zombies Must Die | Sept 2012 |
| Alone in the Dark | Aug 2012 |
| Alpha Protocol | July 2012 |
| Altered Beast | Sept 2012 |
| Alvin and the Chipmunks: Chipwrecked | July 2012 |
| America's Army: True Soldiers | Aug 2012 |
| Amped 3 | Oct 2012 |
| Amy | Sept 2012 |
| Anarchy Reigns | July 2012 |
| Ancients of Ooga | Sept 2012 |
| Angry Birds Trilogy | Sept 2012 |
| Anomaly Warzone Earth | Oct 2012 |
| Apache: Air Assault | July 2012 |
| Apples to Apples | Oct 2012 |
| Aqua | Oct 2012 |
| Arcana Heart 3 | July 2012 |
| Arcania Gothica | July 2012 |
| Are You Smarter Than a 5th Grader | July 2012 |
| Arkadian Warriors | Oct 2012 |
| Arkanoid Live | |

The Cultural Substance of Play: Johan Huizinga, Homo Ludens

Playfulness, Play, Game, Gamefulness. The cultural substance of play: Johan Huizinga, Homo Ludens. Play is voluntary. Play is not ordinary life. Play is not useful. Play is bounded in place and time. Play creates order. The magic circle of play (Huizinga 1938, 17-21).

Diagram labels: repetitive play; player-inappropriate play; using a system; parapathic play; sensation-centric play; playful locomotor play; play to order; one-sided social play; dangerous play; violent play; context insensitive play. Source: Stenros 2015, Playfulness, Play, and Games: A Constructionist Ludology Approach.

Internal goals versus external goals. In a play context: real play (internal goals), instrumentalized play (external goals). In a non-play context: adapted play (internal goals), non-play (external goals). (Stenros 2015, clumsily translated into Finnish.)

Game as experience. The game is not in the medium. The game is not in the senses. The game is in the experience. Game as experience: John Dewey, Art as Experience. Aesthetic experience. Emotions as the material of experience. Experiencing an experience vs. going through it. Active agency. Expression. Obstacles / challenges. Active correcting?

The nature of the game. Interactive, structure, internal goal, outcome. Playing vs. game. Friction between player and game. A tool for good play (e.g. Stenros 2015).

The systemic nature of the game. The game is systemic. Built in advance or built while playing (Heikka). Systemicity. Reinforcing feedback. Balancing process. Delay and the area of high leverage (Senge 1991). Game as a system. A good game helps in understanding its own system. Does a game help in perceiving systems? Game design

Folha De Rosto ICS.Cdr

“For when established identities become outworn or unfinished ones threaten to remain incomplete, special crises compel men to wage holy wars, by the cruellest means, against those who seem to question or threaten their unsafe ideological bases.” Erik Erikson (1956), “The Problem of Ego Identity”, p. 114

“In games it’s very difficult to portray complex human relationships. Likewise, in movies you often flit between action in various scenes. That’s very difficult to do in games, as you generally play a single character: if you switch, it breaks immersion. The fact that most games are first-person shooters today makes that clear. Stories in which the player doesn’t inhabit the main character are difficult for games to handle.” Hideo Kojima, in Simon Parkin (2014), “Hideo Kojima: ‘Metal Gear questions US dominance of the world’”, The Guardian

ACKNOWLEDGEMENTS

To begin, I want to thank Professors Jean Rabot and Clara Simães for their constant and indispensable support, understanding, attention, and guidance, without which this work would not have come to full and proper fruition. A huge thank-you for the months of work, meetings, phone calls, emails, conversations, and opportunities. I want to thank my family and friends for their support, in particular Aunt Bela, João, Teté, Ângela, Verxka, Elma, Silvana, Noëmie, Kalashnikov, Godmother, Gaivota, Chacal, Rita, Lina, Tri, Bia, Quelinha, Fi, TS, Cinco de Sete, Daniel, Catarina, Professor Albertino, Professor Marques, and Professor Abranches, for their moral and psychological support, for their recommendations and life advice, and above all for the friendship and memories throughout this battle. Last but not least, I want to thank my Twin, Safira, for her unfailing trust, company, and acceptance of the good and the bad; she never abandoned me throughout this research, my academic path, and the pursuit of life and dreams.

Recommending Games to Adults with Autism Spectrum Disorder(ASD) for Skill Enhancement Using Minecraft

Brigham Young University BYU ScholarsArchive Theses and Dissertations 2019-11-01 Recommending Games to Adults with Autism Spectrum Disorder(ASD) for Skill Enhancement Using Minecraft Alisha Banskota Brigham Young University Follow this and additional works at: https://scholarsarchive.byu.edu/etd Part of the Physical Sciences and Mathematics Commons BYU ScholarsArchive Citation Banskota, Alisha, "Recommending Games to Adults with Autism Spectrum Disorder(ASD) for Skill Enhancement Using Minecraft" (2019). Theses and Dissertations. 7734. https://scholarsarchive.byu.edu/etd/7734 This Thesis is brought to you for free and open access by BYU ScholarsArchive. It has been accepted for inclusion in Theses and Dissertations by an authorized administrator of BYU ScholarsArchive. For more information, please contact [email protected], [email protected]. Recommending Games to Adults with Autism Spectrum Disorder (ASD) for Skill Enhancement Using Minecraft Alisha Banskota A thesis submitted to the faculty of Brigham Young University in partial fulfillment of the requirements for the degree of Master of Science Yiu-Kai Dennis Ng, Chair Seth Holladay Daniel Zappala Department of Computer Science Brigham Young University Copyright © 2019 Alisha Banskota All Rights Reserved ABSTRACT Recommending Games to Adults with Autism Spectrum Disorder (ASD) for Skill Enhancement Using Minecraft Alisha Banskota Department of Computer Science, BYU Master of Science Autism spectrum disorder (ASD) is a long-standing mental condition characterized by hindered mental growth and development. In 2018, 168 out of 10,000 children are said to be affected with Autism in the USA. As these children move to adulthood, they have difficulty in communicating with others, expressing themselves, maintaining eye contact, developing a well-functioning motor skill or sensory sensitivity, and paying attention for longer periods.

Music Games Rock: Rhythm Gaming's Greatest Hits of All Time

“Cementing gaming’s role in music’s evolution, Steinberg has done pop culture a laudable service.” – Nick Catucci, Rolling Stone RHYTHM GAMING’S GREATEST HITS OF ALL TIME By SCOTT STEINBERG Author of Get Rich Playing Games Feat. Martin Mathers and Nadia Oxford Foreword By ALEX RIGOPULOS Co-Creator, Guitar Hero and Rock Band Praise for Music Games Rock “Hits all the right notes—and some you don’t expect. A great account of the music game story so far!” – Mike Snider, Entertainment Reporter, USA Today “An exhaustive compendia. Chocked full of fascinating detail...” – Alex Pham, Technology Reporter, Los Angeles Times “It’ll make you want to celebrate by trashing a gaming unit the way Pete Townshend destroys a guitar.” –Jason Pettigrew, Editor-in-Chief, ALTERNATIVE PRESS “I’ve never seen such a well-collected reference... it serves an important role in letting readers consider all sides of the music and rhythm game debate.” –Masaya Matsuura, Creator, PaRappa the Rapper “A must read for the game-obsessed...” –Jermaine Hall, Editor-in-Chief, VIBE MUSIC GAMES ROCK RHYTHM GAMING’S GREATEST HITS OF ALL TIME SCOTT STEINBERG DEDICATION MUSIC GAMES ROCK: RHYTHM GAMING’S GREATEST HITS OF ALL TIME All Rights Reserved © 2011 by Scott Steinberg “Behind the Music: The Making of Sex ‘N Drugs ‘N Rock ‘N Roll” © 2009 Jon Hare No part of this book may be reproduced or transmitted in any form or by any means – graphic, electronic or mechanical – including photocopying, recording, taping or by any information storage retrieval system, without the written permission of the publisher. -

Light Gun Shooter Developer: Headstrong Games Release Date: Q1 2009 Expected ESRB: “M” for Mature

The House of the Dead: OVERKILL™ Platform: Wii™ Genre: Light Gun Shooter Developer: Headstrong Games Release Date: Q1 2009 Expected ESRB: “M” for Mature

THEY’VE COME FOR BRAINS, YOU’LL GIVE THEM BULLETS!

The House of the Dead: OVERKILL™ charges you with mowing down waves of infected, blood-thirsty zombies in a last-ditch effort to survive and uncover the horrific truth behind the origins of the House of the Dead. Survival horror as it’s never been seen before. The House of the Dead: OVERKILL is a pulp-style take on the classic SEGA® light-gun shooter series. Back when the famous Agent G was fresh out of the academy, he teamed up with hard-boiled bad-ass Agent Washington to investigate stories of mysterious disappearances in small-town Louisiana. Little did they know what blood-soaked mutant horror awaited them in the streets and swamps of Bayou City.

Features:
• Pulp funk horror: Zombie cool at its finest as one of the most popular shooter classics returns injected with a whole new retro b-movie look
• An utterly in-your-face zombie-dismembering blast: Non-stop light-gun shooting action on the Wii as you blow apart zombies for high-score thrills
• Superb co-op action on Wii: Grab a friend and play the game as intended in your own buddy action movie as two of the coolest characters in video gaming
• Relentless, gore-drenched, over-the-top action: Only the coolest, most cold-hearted agent’s going to keep his head against the zombie flood. Use “Slow-Mofo Time” to make the perfect head-popping shot and “Evil Eye” to spot moments of opportunity that’ll send the whole environment up in flames
• Lightning-quick Wii Remote reactions: Get knee-deep in the dead with motion-sensitive Wii controls.

Anna Priscilla De Albuquerque IOT4FUN

Graduate Program in Computer Science. Anna Priscilla de Albuquerque. IOT4FUN: a relational model for hybrid gameplay interaction. Universidade Federal de Pernambuco, [email protected], www.cin.ufpe.br/~posgraduacao, Recife 2017.

Anna Priscilla de Albuquerque. IOT4FUN: a relational model for hybrid gameplay interaction. This work was presented to the Graduate Program in Computer Science of the Centro de Informática of the Universidade Federal de Pernambuco as a partial requirement for the degree of Master in Computer Science. Area of concentration: Media and Interaction. Advisor: Prof. Judith Kelner. Co-advisor: Prof. Felipe Borba Breyer. Recife 2017.

Cataloging at source: Librarian Monick Raquel Silvestre da S. Portes, CRB4-1217. A345i Albuquerque, Anna Priscilla. IoT4Fun: a relational model for hybrid gameplay interaction / Anna Priscilla Albuquerque. 2017. 152 leaves: ill., fig., tab. Advisor: Judith Kelner. Dissertation (Master's), Universidade Federal de Pernambuco, CIn, Computer Science, Recife, 2017. Includes references and appendices. 1. Computer science. 2. Human-computer interaction. 3. Internet of things. I. Kelner, Judith (advisor). II. Title. 004 CDD (23rd ed.) UFPE-MEI 2019-074

Anna Priscilla de Albuquerque. IoT4Fun: A Relational Model for Hybrid Gameplay Interaction. Dissertation presented to the Graduate Program in Computer Science of the Universidade Federal de Pernambuco as a partial requirement for the degree of Master in Computer Science. Approved on: 31/07/2017.

EXAMINING COMMITTEE
Prof. Dr. Hansenclever de França Bassani, Centro de Informática / UFPE
Prof. Dr. Esteban Walter Gonzalez Clua, Escola de Engenharia / UFF
Profa. Dra. Judith Kelner, Centro de Informática / UFPE (Advisor)

ACKNOWLEDGEMENTS I would like to thank my family for all the support they gave me during these two years of hard work.

Vocabulaire Du Jeu Vidéo

Vocabulaire du jeu vidéo, by Yolande Perron. Office québécois de la langue française.

Cover terms: manette, multijoueur, console de jeu, jouabilité, personnage, jeu d’aventure, mission, avatar, monde virtuel, rétroludique, costumade, quête, frag, tridimensionnel, jeu en ligne, vidéoludique, intrajeu, ludographie, niveau, réseau local.

Cataloguing before publication: Perron, Yolande. Vocabulaire du jeu vidéo / by Yolande Perron. [Montréal]: Office québécois de la langue française, 2012. ISBN (print version): 978-2-550-64423-1. ISBN (electronic version): 978-2-550-64424-8. 1. Video games: French dictionaries. 2. Video games: English dictionaries. I. Office québécois de la langue française. 794.803 GV 1469.3

Vocabulary produced under the direction of Danielle Turcotte, assistant director general of linguistic services, and under the responsibility of Tina Célestin, former director of terminology work at the Office québécois de la langue française. Revision: Micheline Savard, Directorate of terminology work and terminolinguistic assistance. Resource persons (video games): Jean Quilici and Etienne Désilets-Trempe, Information Technology Directorate. Graphic design: Liliane Bernier, Communications Directorate. The content of this publication is also

TOEJAMANDEARL - Round 5

TOEJAMANDEARL - Round 5 1. Description acceptable. Creator Fumito Ueda has noted that, despite in-game messages to the contrary, this character is female. This character has a diamond-shaped feature between her eyes that changes to an “I” if a certain save file is present. Missiles from Basaran can be dodged with the help of this character, who also helps the player keep pace with Phalanx. Completing a time attack mode on both normal and hard will make this character appear white. This character surprisingly survives a fall from a chasm on the way to the 16th and final boss fight in a 2005 PS2 game. Agro is the real name of, for 10 points, what companion of the giant-slaying Wander in Shadow of the Colossus? ANSWER: the horse in Shadow of the Colossus (accept Agro before mention; accept just the horse after the end) 2. Collecting an exorbitant amount of items in this game’s levels earns the player the “Rule Breaker” status. A villain in this game known as “The Tri” is fought on Foundation Prime and is a grotesque amalgam of the protagonists’ sidekicks. One character in this game, the Gamer Kid, can unlock over 20 Midway arcade games and drives the car from Spyhunter. Lord Vortech kidnaps characters from numerous worlds in this game, whose default protagonists include Wildstyle, Gandalf, and Batman. A portal is physically assembled at the start of, for 10 points, what toy-and-game hybrid from a Danish block-making company? ANSWER: Lego Dimensions 3. The first game in this series was also the first in the loosely related Moero!! series in Japan. -

Recommending Video Games to Adults with Autism Spectrum

Recommending Video Games to Adults with Autism Spectrum Disorder for Social-Skill Enhancement

Alisha Banskota, [email protected], Computer Science Dept., Brigham Young University, Provo, Utah, 84602, USA
Yiu-Kai Ng, [email protected], Computer Science Dept., Brigham Young University, Provo, Utah, 84602, USA

ABSTRACT

Autism spectrum disorder (ASD) is a long-standing mental condition characterized by hindered mental growth and development and is a lifelong disability for the majority of affected individuals. In 2018, 2-3% of children in the USA have been diagnosed with autism. As these children move to adulthood, they have difficulty in developing a well-functioning motor skill. Some of these abnormalities, however, can be gradually improved if they are treated appropriately during their adulthood. Studies have shown that people with ASD enjoy playing video games. Educational games, however, have been primarily developed for children with ASD, which are too primitive for adults with ASD.

with their peer at different deficiency levels [19]. Despite the limitation in social activity, individuals with ASD are highly interested in video games as shown in different studies [14, 15, 18]. Children and adolescents with ASD spend more than two hours a day playing video games which is more than by typically developing children or children with other disabilities [17]. Not just for children, adults with ASD have also reported to be engaged in video games. These video games have positive impact on them as the adults find video games to be entertaining, stress relieving, and creative [17]. Regular video games, however, are not designed especially for adults with autism in mind, even though games have been pre-

You've Seen the Movie, Now Play The

“YOU’VE SEEN THE MOVIE, NOW PLAY THE VIDEO GAME”: RECODING THE CINEMATIC IN DIGITAL MEDIA AND VIRTUAL CULTURE Stefan Hall A Dissertation Submitted to the Graduate College of Bowling Green State University in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY May 2011 Committee: Ronald Shields, Advisor Margaret M. Yacobucci Graduate Faculty Representative Donald Callen Lisa Alexander © 2011 Stefan Hall All Rights Reserved iii ABSTRACT Ronald Shields, Advisor Although seen as an emergent area of study, the history of video games shows that the medium has had a longevity that speaks to its status as a major cultural force, not only within American society but also globally. Much of video game production has been influenced by cinema, and perhaps nowhere is this seen more directly than in the topic of games based on movies. Functioning as franchise expansion, spaces for play, and story development, film-to-game translations have been a significant component of video game titles since the early days of the medium. As the technological possibilities of hardware development continued in both the film and video game industries, issues of media convergence and divergence between film and video games have grown in importance. This dissertation looks at the ways that this connection was established and has changed by looking at the relationship between film and video games in terms of economics, aesthetics, and narrative. Beginning in the 1970s, or roughly at the time of the second generation of home gaming consoles, and continuing to the release of the most recent consoles in 2005, it traces major areas of intersection between films and video games by identifying key titles and companies to consider both how and why the prevalence of video games has happened and continues to grow in power. -

Stay Alive Ebook, Epub

STAY ALIVE PDF, EPUB, EBOOK Simon Kernick | 352 pages | 30 Jan 2014 | Cornerstone | 9781780890753 | English | London, United Kingdom Stay Alive PDF Book Starting with a randomly chosen player, players take turns placing their marbles onto the board until all marbles are placed. Have you found a better way to keep your PC alert? The last player with a marble on the board is the winner. Dead or Alive m. The start player then chooses a slider and moves it one click either towards or away from the board. How about a software solution instead? Sophia Bush. Help Learn to edit Community portal Recent changes Upload file. Young Rookie as Richey Nash. Reviews Review policy and info. About the Campaign Stay Out — Stay Alive is a national public awareness campaign aimed at warning children and adults about the dangers of exploring and playing on active and abandoned mine sites. Government that Works Protect clean air, clean water, and public health and conserve working farms, forests, and natural lands. Yes No Report this. Winning Moves Milton Bradley. Wendell Pierce. Mine Safety and Health Administration, other state agencies and the active mining industry to promote the Stay Out Stay Alive campaign. Frankie Muniz. If you live long enough you will eventually outlast whatever season comes along, including this one. The mansion that appears in the video-game at the beginning of the movie is a carbon copy of the one where the action of SEGA's light gun shooter The House of the Dead See the full gallery. Sign In. Holiday Movie Stars, Then and Now.