USAID/Morocco Reading for Success - Small Scale Experimentation Activity

Total Pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

Activity Report 2012 ONHYM

KINGDOM OF MOROCCO Activity report 2012 ONHYM 5, Avenue Moulay Hassan B.P. 99, 1000, Rabat • Morocco Tel: +212 (0) 5 37 23 98 98 • Fax: +212 (0) 5 37 70 94 11 [email protected] • www.onhym.com His Majesty The King Mohammed VI, May God Assist Him. TABLE OF CONTENTS • Message from the General Director • International & national conjunctures • Highlights • Petroleum exploration • Petroleum partnerships & cooperation • Petroleum drilling & production • Mining exploration • Mining partnerships & cooperation • Information system • Human resources & training Maps: ONHYM Exploration Activity: 2012 achievements • Partners Activity: 2012 achievements • Mining Exploration Amina BENKHADRA General Director MESSAGE FROM THE GENERAL DIRECTOR If global economic growth continued to digress in 2012 with a volatility of commodity prices, global investment in exploration/production of hydrocarbons and mining grew at a steady pace. In this context, the year 2012 was satisfactory for ONHYM. Its performance conformed with the budget plan thus reflecting the commitment of ONHYM to consolidate its efforts for hydrocarbon and mining explorations made in recent years. This year has seen an unprecedented demand as a result of the oil companies growing interest in the exploration of sedimentary Moroccan basins, bringing the [...] [...]nologies and building partnerships with major international mining groups. The overhaul of the Mining Code with favorable incentives for national and international investment will represent a powerful tool to develop the mining sector. From an organizational perspective, a study of ONHYM’s development strategy is underway and it is intended to enhance the mining and hydrocarbon heritage (excluding phosphates) of the Kingdom, and make the most of its expertise in the country and abroad. -

Proceedings of the Third International American Moroccan Agricultural Sciences Conference - AMAS Conference III, December 13-16, 2016, Ouarzazate, Morocco

Atlas Journal of Biology 2017, pp. 313–354 doi: 10.5147/ajb.2017.0148 Proceedings of the Third International American Moroccan Agricultural Sciences Conference - AMAS Conference III, December 13-16, 2016, Ouarzazate, Morocco My Abdelmajid Kassem1*, Alan Walters2, Karen Midden2, and Khalid Meksem2 1 Plant Genetics, Genomics, and Biotechnology Lab, Dept. of Biological Sciences, Fayetteville State University, Fayetteville, NC 28301, USA; 2 Dept. of Plant, Soil, and Agricultural Systems, Southern Illinois University, Carbondale, IL 62901-4415, USA Received: December 16, 2016 / Accepted: February 1, 2017 Abstract The International American Moroccan Agricultural Sciences Conference (AMAS Conference; www.amas-conference.org) is an international conference organized by the High Council of Moroccan American Scholars and Academics (HC-MASA; www.hc-masa.org) in collaboration with various universities and research institutes in Morocco. The first edition (AMAS Conference I) was organized on March 18-19, 2013 in Rabat, Morocco; AMAS Conference II was organized on October 18-20, 2014 in Marrakech, Morocco; and AMAS III was organized on December 13-16, 2016 in Ouarzazate, Morocco. The current proceedings summarizes abstracts from 62 oral presentations and 100 posters that were presented during AMAS Conference III. Keywords: AMAS Conference, HC-MASA, Agricultural Sciences. ORAL PRESENTATIONS ABSTRACTS WEDNESDAY & THURSDAY DECEMBER 14 & 15, 2016 I. SESSION I. DATE PALM I: ECOSYSTEM’S PRESENT AND FUTURE, MAJOR DISEASES, AND PRODUCTION SYSTEMS Co-Chair: Mohamed Baaziz, Professor, Cadi Ayyad University, Marrakech, Morocco Co-Chair: Ikram Blilou, Professor, Wageningen University & Research, The Netherlands 1. Date Palm Adaptative Strategies to Desert Conditions Alejandro Aragón Raygoza, Juan Caballero, Xiao, Ting Ting, Yanming Deng, Ramona Marasco, Daniele Daffonchio and Ikram Blilou*. -

ADAPTATION to CLIMATE CHANGE -Ooo- Project of Adaptation to Climate Change – Oases Areas

ADAPTATION TO CLIMATE CHANGE -oOo- Project of Adaptation to Climate Change – Oases Areas, September 10th, 2014. PROJECT/PROGRAMME PROPOSAL TO THE ADAPTATION FUND. Acronyms: ADA: Agency for Agricultural Development; ANDZOA: National Agency for Development of Oases and Argan Tree Zones; AUEA: Association of Agricultural Water Users; CEI: Call for Expression of Interest; CERKAS: Center for the Restoration and Rehabilitation of Atlas and Sub-Atlas Zones; CLE: Local Water Council; CTB: Belgian Technical Cooperation; CT: Work Center; DNM: Department of National Meteorology; DPA: Provincial Direction of Agriculture; DWS: Drinkable Water Supply; EIG: Economic Interest Group; ESA: Environmental Strategic Assessment; ESMP: Environmental and Social Management Plan; GIEC: Intergovernmental Panel on Climate Change; HBA: Hydraulic Basin Agency; INDH: National Initiative of Human Development; INRA: National Institute for Agronomic Research; IRD: Integrated Rural Development; JICA: Japanese International Cooperation Agency; MAPM: Ministry of Agriculture and Maritime Fisheries; MP: Master Plan; OFPPT: Office of Vocational Training and Employment Promotion; ONCA: National Agricultural Council Office; ONEE: National Office of Water and Electricity; ONEP: National Office of Drinkable Water; ORMVA: Regional Office of Agricultural Development; PADO: Plans for Adapting and Developing the Oases; PCD: Municipal Development Plans; PCM: Project Cycle Management; PMU: Project Management Unit; PMV: Moroccan Green Plan; POT: Program Oasis Tafilalet; RCC: Regional Coordinating Committee -

Proposal for Morocco

AFB/PPRC.16/12 19 March 2015 Adaptation Fund Board Project and Programme Review Committee Sixteenth Meeting Bonn, Germany, 7-8 April 2015 Agenda Item 6 h) PROPOSAL FOR MOROCCO Background 1. The Operational Policies and Guidelines (OPG) for Parties to Access Resources from the Adaptation Fund (the Fund), adopted by the Adaptation Fund Board (the Board), state in paragraph 45 that regular adaptation project and programme proposals, i.e. those that request funding exceeding US$ 1 million, would undergo either a one-step, or a two-step approval process. In case of the one-step process, the proponent would directly submit a fully-developed project proposal. In the two-step process, the proponent would first submit a brief project concept, which would be reviewed by the Project and Programme Review Committee (PPRC) and would have to receive the endorsement of the Board. In the second step, the fully-developed project/programme document would be reviewed by the PPRC, and would ultimately require the Board’s approval. 2. The Templates approved by the Board (OPG, Annex 4) do not include a separate template for project and programme concepts but provide that these are to be submitted using the project and programme proposal template. The section on Adaptation Fund Project Review Criteria states: For regular projects using the two-step approval process, only the first four criteria will be applied when reviewing the 1st step for regular project concept. In addition, the information provided in the 1st step approval process with respect to the review criteria for the regular project concept could be less detailed than the information in the request for approval template submitted at the 2nd step approval process. -

Oriental Region

Morocco Oriental Region An Investment Guide to the Oriental Region of Morocco Opportunities and Conditions 2012 UNITED NATIONS New York and Geneva, 2012 UNCTAD The United Nations Conference on Trade and Development (UNCTAD) was established in 1964 as a permanent intergovernmental body. Its main goals are to maximize the trade, investment and development opportunities of developing countries, to help them meet the challenges arising from globalization, and to help them integrate into the global economy on an equitable basis. UNCTAD's membership comprises 193 States. Its secretariat is located in Geneva, Switzerland, and forms part of the United Nations Secretariat. ICC The International Chamber of Commerce (ICC) is the world business organization. It is the only body that speaks with authority on behalf of enterprises from all sectors in every part of the world, grouping together thousands of members, companies and associations from 130 countries. ICC promotes an open trade and investment system and the market economy in the context of sustainable growth and development. It makes rules that govern the conduct of business across borders. Within a year of the creation of the United Nations it was granted consultative status at the highest level (category A) with the United Nations Economic and Social Council. This is now known as General Category consultative status. Notes The term 'country' as used in this study also refers, as appropriate, to territories or areas; the designations employed and the presentation of the material do not imply the expression of any opinion whatsoever on the part of the Secretariat of the United Nations concerning the legal status of any country, territory, city or area or of its authorities, or concerning the delimitation of its frontiers or boundaries. -

Feature: Health and Safety in Buildings: Which Regulations?

No. 30 / March 2015 / 30 Dh. Feature: Health and safety in buildings: which regulations? Architecture and Urban Planning: Urban planning in the 12 regions: what vision? Interior Decoration and Furnishing: Kitchens: what are the decor trends for 2015? Interview: Salon Préventica International: a second edition that promises a large number of new features. Eric Dejean-Servières, general commissioner of the Salon Préventica International Casablanca. Editorial, by Jamal KORCH: Spatial planning and administrative division: is there a convergence? Director of Publication: Jamal KORCH. Editor-in-Chief: Jamal KORCH, [email protected], GSM: 06 13 46 98 92. Not compromising the needs of future generations, taking into account all the environmental efforts of urban activities, ensuring a balance between the inhabitants of the city and those of the countryside, [...] [...] no. 2-15-40 setting the number of regions at 12, along with their names, their capitals, and the prefectures and provinces that compose them. It is along these boundaries that spatial planning will take place, applying the content of the various related documents. -

The Transition of Governance Approaches to Rural Tourism in Southern Morocco

The transition of governance approaches to rural tourism in Southern Morocco Andreas Kagermeier 1*, Lahoucine Amzil 2 and Brahim Elfasskaoui 3 Received: 31/07/2018 Accepted: 13/08/2018 1 Freizeit- und Tourismusgeographie, Universität Trier, Universitätsring 15, 54296 Trier, Germany, e-mail: [email protected], Phone: +49-172-9600865 2 Centre d'Études et de Recherches Géographiques (CERGéo), Faculté des Lettres et des Sciences Humaines, Université Mohammed V – Rabat, Avenue Mohamed Ben Abdellah Erregragui, 10000 Rabat, Morocco, E-mail: [email protected] 3 Département de Géographie, Faculté des Lettres et des Sciences Humaines, Université Moulay Ismaïl, Meknès, B.P. 11202, 50070 Meknès – Zitoune, Morocco, E-mail: [email protected] * Corresponding author Coordinating editor: Werner Gronau Abstract In the Global South traditional hierarchical steering modes are still quite widespread. The significantly changing conditions of competition in recent decades have boosted the need for innovation in tourism. As such, the core challenge for tourism development in many countries of the Global South has been to attain an innovation-based orientation by using stimuli from destination governance. This article is an attempt to analyse the factors that might facilitate the diffusion of an innovation-based orientation. As a basic hypothesis, the article adopts the “counter-flow principle”, with exchange between different spheres as stimuli for innovation. Taking the Souss-Massa region in Southern Morocco as a case study, the paper describes an analysis of the positions of public and private stakeholders as well as civil society organisations. The main question is what kind of relationship between the stakeholders would foster effective governance processes among local, regional and (inter-)national stakeholders. -

Prevalence and Intensity of Intestinal Nematodes in Goat (Capra hircus) from Oriental Region, Province of Figuig, Morocco

International Journal of Agricultural Science Research Vol. 2(9), pp. 279-282, September 2013 Available online at http://academeresearchjournals.org/journal/ijasr ISSN 2327-3321 ©2013 Academe Research Journals Full Length Research Paper Prevalence and intensity of intestinal nematodes in goat (Capra hircus) from oriental region, Province of Figuig, Morocco Driss Lamrioui, Taoufik Hassouni*, Driss Belghyti, Jaouad Mostafi, Youssef El Madhi and Driss Lamri Laboratoire d’Environnement et Energies renouvelables. Faculté des Sciences, Université Ibn Tofail. B.P. 133, CP 14000, Kenitra, Maroc. Accepted 2 September, 2013 This study was conducted between December 2009 and November 2010 in oriental region, and 120 local goats were examined by helminthological autopsy for the identification of parasitic nematode. Six species of parasites were identified as follows: Trichostrongylus colubriformis (52.50%), Teladorsagia circumcincta (48.33%), Skrjabinema ovis (42.50%), Trichuris ovis (23.33%), Haemonchus contortus (19.16%) and Nematodirus spathiger (18.33%). The absence of liver flukes was noted, and parasite intensities were very high for species H. contortus (63), N. spathiger (62.37) and S. ovis (51.5). Overall, the mean parasite intensity was higher in female hosts than in male hosts. Seasonal dynamics of parasites indicated that the season has no significant influence on the parasitism prevalence. Key words: Goats, prevalence, nematodes, parasite, seasonal, Figuig. INTRODUCTION Figuig is a province in the oriental region of Morocco, characterized by small ruminant livestock. Among the species most frequently encountered are the black races of goats. Small ruminants have an important economic and social role. [...] 42.43% in Abakaliki metropolis in Nigeria (Nwigwe et al., 2013). This study aimed to determine the prevalence of gastrointestinal nematodes among local goats and to [...] -

Emergency Plan of Action Final Report Morocco: Cold Wave

Emergency Plan of Action Final Report Morocco: cold wave DREF operation. Operation n° MDRMA007; Date of Issue: 28/02/2017; Glide n° CW-2016-000016-MAR; Date of disaster: 27/02/2016; Timeframe covered by this update: 2 months; Operation start date: 02/03/2016; Operation end date: 02/05/2016; Host National Society: Moroccan Red Crescent; Operation budget: CHF 186,929; Number of people affected: 750,000; Number of people assisted: 7,500 (1,500 families); N° of National Societies involved in the operation: -; N° of other partner organizations involved in the operation: -. A. Situation analysis. Description of the disaster: Morocco was affected by a cold wave on Saturday 27 February 2016. The wave, caused by a polar air mass flowing from the North Pole towards Europe and North Africa, generated westerly winds accompanied by extremely low temperatures. The regions of the Middle Atlas and the north of the High Atlas and the neighbouring plains witnessed severe thunderstorms, while significant snow fell over the High and Middle Atlas, the Rif and the highlands of eastern Morocco. This precipitation was accompanied by moderate to strong winds, and temperatures dropped significantly, reaching exceptionally low records, particularly in the interior regions of the country and the Atlas Mountains. The wave caused economic losses and isolated several areas of Morocco; electricity was interrupted, and houses suffered structural damage and water supply interruptions due to the freezing temperatures.
Summary of response. Overview of Host National Society. Actions taken by Moroccan Red Crescent in response to the cold wave: following the initial weather alerts, MRCS mobilised and organised intervention teams to assess needs and to respond to the most urgent ones; MRCS volunteers and branches maintained close contact with MRCS HQ to report on the changing situation and to provide updates on the results of needs assessments; MRCS established first aid posts to assist those affected. -

Narrative Overview of Animal and Human Brucellosis in Morocco

Edinburgh Research Explorer Narrative overview of animal and human brucellosis in Morocco Citation for published version: Ducrotoy, M, Ammary, K, Lbacha, HA, Zouagui, Z, Mick, V, Prevost, L, Bryssinckx, W, Welburn, S & Benkirane, A 2015, 'Narrative overview of animal and human brucellosis in Morocco: intensification of livestock production as a driver for emergence?', Infectious diseases of poverty. https://doi.org/10.1186/s40249-015-0086-5 Digital Object Identifier (DOI): 10.1186/s40249-015-0086-5 Link: Link to publication record in Edinburgh Research Explorer Document Version: Publisher's PDF, also known as Version of record Published In: Infectious diseases of poverty Publisher Rights Statement: This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated. General rights Copyright for the publications made accessible via the Edinburgh Research Explorer is retained by the author(s) and / or other copyright owners and it is a condition of accessing these publications that users recognise and abide by the legal requirements associated with these rights. Take down policy The University of Edinburgh has made every reasonable effort to ensure that Edinburgh Research Explorer content complies with UK legislation. -

Nouveau Découpage Régional Au Maroc.Pdf

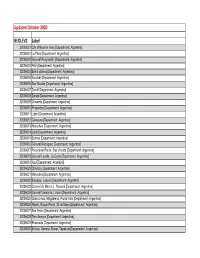

01/03/13 New regional division of Morocco - collectivités au Maroc (https://sites.google.com/site/collectivitesaumaroc/nouveau-dcoupage-rgional). Populations in 2008, by region, with the number and list of provinces and prefectures:
Region 1: Tanger-Tétouan. Total population 2830101; rural share 41.72%; urban share 58.28%; 7 provinces and prefectures: Tanger-Assilah (Prefecture), M'Diq - Fnidq (Prefecture), Chefchaouen (Province), Fahs-Anjra (Province), Larache (Province), Tétouan (Province), Ouezzane (Province).
Region 2: Oriental et Rif. Total population 2434870; rural share 42.92%; urban share 57.08%; 8 provinces and prefectures: Oujda Angad (Prefecture), Al Hoceima (Province), Berkane (Province), Jrada (Province), Nador (Province), Taourirt (Province), Driouch (Province), Guercif (Province).
Region 3: Fès-Meknès. Total population 4022128; rural share 43.51%; urban share 56.49%; 9 provinces and prefectures: Meknès (Prefecture), Fès (Prefecture), Boulemane (Province), El Hajeb (Province), Ifrane (Province), Sefrou (Province), Taounate (Province), Taza (Province), Moulay Yacoub (Province).
Region 4: Rabat-Salé-Kénitra. Total population 4272901; rural share 32.31%; urban share 67.69%; 7 provinces and prefectures: Rabat (Prefecture), Sale (Prefecture), Skhirate-Temara (Prefecture), Kenitra (Province), Khemisset (Province), Sidi Kacem (Province), Sidi Slimane (Province).
Region 5: Béni Mellal- -

GEOLEV2 Label Updated October 2020

Updated October 2020
GEOLEV2  Label
32002001  City of Buenos Aires [Department: Argentina]
32006001  La Plata [Department: Argentina]
32006002  General Pueyrredón [Department: Argentina]
32006003  Pilar [Department: Argentina]
32006004  Bahía Blanca [Department: Argentina]
32006005  Escobar [Department: Argentina]
32006006  San Nicolás [Department: Argentina]
32006007  Tandil [Department: Argentina]
32006008  Zárate [Department: Argentina]
32006009  Olavarría [Department: Argentina]
32006010  Pergamino [Department: Argentina]
32006011  Luján [Department: Argentina]
32006012  Campana [Department: Argentina]
32006013  Necochea [Department: Argentina]
32006014  Junín [Department: Argentina]
32006015  Berisso [Department: Argentina]
32006016  General Rodríguez [Department: Argentina]
32006017  Presidente Perón, San Vicente [Department: Argentina]
32006018  General Lavalle, La Costa [Department: Argentina]
32006019  Azul [Department: Argentina]
32006020  Chivilcoy [Department: Argentina]
32006021  Mercedes [Department: Argentina]
32006022  Balcarce, Lobería [Department: Argentina]
32006023  Coronel de Marine L. Rosales [Department: Argentina]
32006024  General Viamonte, Lincoln [Department: Argentina]
32006025  Chascomus, Magdalena, Punta Indio [Department: Argentina]
32006026  Alberti, Roque Pérez, 25 de Mayo [Department: Argentina]
32006027  San Pedro [Department: Argentina]
32006028  Tres Arroyos [Department: Argentina]
32006029  Ensenada [Department: Argentina]
32006030  Bolívar, General Alvear, Tapalqué [Department: Argentina]
32006031  Cañuelas [Department: Argentina]