Understanding and Evaluating Cooperative Video Games

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


UPC Platform Publisher Title Price Available 730865001347

| UPC | Platform | Publisher | Title | Price | Available |
|---|---|---|---|---|---|
| 730865001347 | PlayStation 3 | Atlus | 3D Dot Game Heroes PS3 | $16.00 | 52 |
| 722674110402 | PlayStation 3 | Namco Bandai | Ace Combat: Assault Horizon PS3 | $21.00 | 2 |
| 853490002678 | PlayStation 3 | Other Publishers | Air Conflicts: Secret Wars PS3 | $14.00 | 37 |
| 014633098587 | PlayStation 3 | Electronic Arts | Alice: Madness Returns PS3 | $16.50 | 60 |
| 010086690682 | PlayStation 3 | Sega | Aliens Colonial Marines (Portuguese) PS3 | $47.50 | 100+ |
| 010086690675 | PlayStation 3 | Sega | Aliens Colonial Marines (Spanish) PS3 | $47.50 | 100+ |
| 010086690637 | PlayStation 3 | Sega | Aliens Colonial Marines Collector's Edition PS3 | $76.00 | 9 |
| 010086690170 | PlayStation 3 | Sega | Aliens Colonial Marines PS3 | $50.00 | 92 |
| 010086690194 | PlayStation 3 | Sega | Alpha Protocol PS3 | $14.00 | 14 |
| 047875843479 | PlayStation 3 | Activision | Amazing Spider-Man PS3 | $39.00 | 100+ |
| 010086690545 | PlayStation 3 | Sega | Anarchy Reigns PS3 | $24.00 | 100+ |
| 722674110525 | PlayStation 3 | Namco Bandai | Armored Core V PS3 | $23.00 | 100+ |
| 014633157147 | PlayStation 3 | Electronic Arts | Army of Two: The 40th Day PS3 | $16.00 | 61 |
| 008888345343 | PlayStation 3 | Ubisoft | Assassin's Creed II PS3 | $15.00 | 100+ |
| 008888397717 | PlayStation 3 | Ubisoft | Assassin's Creed III Limited Edition PS3 | $116.00 | 4 |
| 008888347231 | PlayStation 3 | Ubisoft | Assassin's Creed III PS3 | $47.50 | 100+ |
| 008888343394 | PlayStation 3 | Ubisoft | Assassin's Creed PS3 | $14.00 | 100+ |
| 008888346258 | PlayStation 3 | Ubisoft | Assassin's Creed: Brotherhood PS3 | $16.00 | 100+ |
| 008888356844 | PlayStation 3 | Ubisoft | Assassin's Creed: Revelations PS3 | $22.50 | 100+ |
| 013388340446 | PlayStation 3 | Capcom | Asura's Wrath PS3 | $16.00 | 55 |

008888345435 -

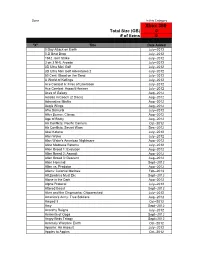

Xbox 360 Total Size (GB) 0 # of Items 0

In this Category: Xbox 360. Total Size (GB): 0. # of items: 0.

| Done ("X") | Title | Date Added |
|---|---|---|
| | 0 Day Attack on Earth | July--2012 |
| | 0-D Beat Drop | July--2012 |
| | 1942 Joint Strike | July--2012 |
| | 3 on 3 NHL Arcade | July--2012 |
| | 3D Ultra Mini Golf | July--2012 |
| | 3D Ultra Mini Golf Adventures 2 | July--2012 |
| | 50 Cent: Blood on the Sand | July--2012 |
| | A World of Keflings | July--2012 |
| | Ace Combat 6: Fires of Liberation | July--2012 |
| | Ace Combat: Assault Horizon | July--2012 |
| | Aces of Galaxy | Aug--2012 |
| | Adidas miCoach (2 Discs) | Aug--2012 |
| | Adrenaline Misfits | Aug--2012 |
| | Aegis Wings | Aug--2012 |
| | Afro Samurai | July--2012 |
| | After Burner: Climax | Aug--2012 |
| | Age of Booty | Aug--2012 |
| | Air Conflicts: Pacific Carriers | Oct--2012 |
| | Air Conflicts: Secret Wars | Dec--2012 |
| | Akai Katana | July--2012 |
| | Alan Wake | July--2012 |
| | Alan Wake's American Nightmare | Aug--2012 |
| | Alice Madness Returns | July--2012 |
| | Alien Breed 1: Evolution | Aug--2012 |
| | Alien Breed 2: Assault | Aug--2012 |
| | Alien Breed 3: Descent | Aug--2012 |
| | Alien Hominid | Sept--2012 |
| | Alien vs. Predator | Aug--2012 |
| | Aliens: Colonial Marines | Feb--2013 |
| | All Zombies Must Die | Sept--2012 |
| | Alone in the Dark | Aug--2012 |
| | Alpha Protocol | July--2012 |
| | Altered Beast | Sept--2012 |
| | Alvin and the Chipmunks: Chipwrecked | July--2012 |
| | America's Army: True Soldiers | Aug--2012 |
| | Amped 3 | Oct--2012 |
| | Amy | Sept--2012 |
| | Anarchy Reigns | July--2012 |
| | Ancients of Ooga | Sept--2012 |
| | Angry Birds Trilogy | Sept--2012 |
| | Anomaly Warzone Earth | Oct--2012 |
| | Apache: Air Assault | July--2012 |
| | Apples to Apples | Oct--2012 |
| | Aqua | Oct--2012 |
| | Arcana Heart 3 | July--2012 |
| | Arcania Gothica | July--2012 |
| | Are You Smarter Than a 5th Grader | July--2012 |
| | Arkadian Warriors | Oct--2012 |

Arkanoid Live -

Ubisoft to Co-Publish Oblivion™ on Playstation®3 and Playstation® Portable Systems in Europe and Australia

Paris, FRANCE – September 28, 2006 – Today Ubisoft, one of the world’s largest video game publishers, announced that it has reached an agreement with Bethesda Softworks® to co-publish the blockbuster role-playing game The Elder Scrolls® IV: Oblivion™ on PLAYSTATION®3 and The Elder Scrolls® Travels: Oblivion™ for PlayStation®Portable (PSP™) systems in Europe and Australia. Developed by Bethesda Game Studios, Oblivion is the latest chapter in the epic and highly successful Elder Scrolls series and utilizes next-generation video game hardware to fully immerse players in an experience unlike any other. Oblivion is scheduled to ship for the PLAYSTATION®3 European launch in March 2007 and in Spring 2007 on PSP®.

“We are pleased to partner with Bethesda Softworks to bring this landmark role-playing game to all PlayStation fans,” said Alain Corre, EMEA Executive Director. “No doubt that this brand new version will continue the great Oblivion success story.”

The Elder Scrolls® IV: Oblivion has an average review score of 93.6%¹ and has sold over 1.7 million units worldwide since its launch in March 2006. It has become a standard on Windows and the Xbox 360™ entertainment system thanks to its graphics, outstanding freeform role-playing, and its hundreds of hours' worth of gameplay.

¹ Source: gamerankings.com

About Ubisoft

Ubisoft is a leading producer, publisher and distributor of interactive entertainment products worldwide and has grown considerably through its strong and diversified lineup of products and partnerships. -

Buy Painkiller Black Edition (PC) Reviews,Painkiller Black Edition (PC) Best Buy Amazon

#1 Find the Cheapest on Painkiller Black Edition (PC) at Cheap Painkiller Black Edition (PC) US Store. Best Seller discount Model Painkiller Black Edition (PC) are rated by Consumers. This US Store show discount price already from a huge selection including Reviews. Purchase any Cheap Painkiller Black Edition (PC) items transferred directly secure and trusted checkout on Amazon.

Painkiller Black Edition (PC) Price

List Price: See price in Amazon. Today's Price: See price in Amazon. In Stock. Best sale price expires Today's Weird and wonderful FPS.

I've just finished the massive demo of this game and I'm very impressed with everything about it, so much so that I've ordered it through Amazon. The gameplay is smooth and every detail is taken care of. No need for annoying torches that run on batteries; tanks, aeroplanes, monsters and the environment are all lit and look perfect. Weird weapons fire stakes. Revolving blades makes minceme...Read full review --By Gary Brown

Painkiller Black Edition (PC) Description

1 x DVD-ROM, 12 Page Manual ... See all Product Description

Painkiller Black Ed reviewed

If you like FPS games you will love this one: great story-driven action, superb graphics and a pounding soundtrack. The guns are weird and wacky but very effective. The Black Edition has the main game plus the first expansion game. Very highly recommended. --By Greysword

Painkiller Black Edition (PC) Details

Amazon Bestsellers Rank: 17,932 in PC & Video Games (See Top 100 in PC & Video Games). Average Customer Review: 4.2 out of 5 stars. Delivery Destinations: Visit the Delivery Destinations Help page to see where this item can be delivered. -

360Zine Issue 17

FREE! Issue 16 | March 2008

360Zine: Free Magazine For Xbox 360 Gamers. Read it, Print it, Send it to your mates…

On the cover: Dark Sector (MASSIVE REVIEW: "Get ready for the glaive!"); AceyBongos (INTERVIEWS!); Street Fighter IV and Legendary: The Box (FIRST LOOKS!); Rainbow Six: Vegas 2 (REVIEW: "Surefire sequel?"); Army of Two (REVIEW: "Co-op combat!"); Lego: Indiana Jones (PREVIEW: "Swashbuckling good fun"); Bourne Conspiracy.

QUICK FINDER (Every game's just a click away!): Street Fighter IV, Kung Fu Panda, Legendary: The Box, Race Driver: GRID, Far Cry 2, 1942: Joint Strike, Lego: Indiana Jones, Bourne Conspiracy, Alone In The Dark, NBA Ballers: Chosen One, Dark Sector, Rainbow Six: Vegas 2, Monster Jam, Enemy Territory: Quake Wars, Army of Two, XBLA.

Don’t miss! This month’s top highlights: Rainbow Six: Vegas 2, "Shoot from the strip" (page 21); Dark Sector, massive review (page 18); Army Of Two, "Bring a buddy" (page 24).

We have a trio of new shooters up for review this month with Army of Two, Rainbow Six: Vegas 2 and Dark Sector all gunning for a coveted place on your Xbox 360. See how our panel of experts have rated them before you lock and load...

In addition we have a fistful of new games coming through: headed up by none other than the much awaited Street Fighter IV you can also get the latest on Far Cry 2, Lego: Indiana Jones and Bourne Conspiracy.

We also have two great interviews... First up we’ve been talking with Alone In The Dark producer, Nour Polloni - see what frighteners await in Central Park on page 15; and last but not least we’ve had a sit down with Community Manager of Xbox EMEA Graham Boyd (AKA AceyBongos) - you can listen to the full interview on page 29. -

Emerging Leaders 2011 Team G Selection & Purchasing

Selection

When selecting video games for a library collection, consider the following criteria:

- Type of collection (e.g. circulating vs. non-circulating or programming collection).
- Audience for collection (Is the collection for entertainment purposes or academic study? Will games be collected for children, teens, adults, or a combination of these?).
- Curriculum support.
- Game ratings. The Entertainment Software Rating Board (ESRB) gives each game a rating based on age appropriateness. Ratings include EC (Early Childhood), E (Everyone), E10+ (Everyone Ten and Older), T (Teen), and M (Mature). More information can be found on the ESRB website: http://www.esrb.org.
- Game platforms. Games are available on many different platforms (e.g. Wii, PlayStation, etc.). Librarians will need to consider patron needs and budget constraints when considering which platform(s) to purchase for. As newer platforms are developed, consider whether to collect for these new platforms and whether it is necessary to keep games that are playable on older platforms. Conversely, platforms affect collection weeding; the Collection Maintenance section provides further details.
- Collection of gaming items in addition to actual games (e.g. game consoles and accessories, game guides, gaming periodicals, and texts on the subject of games and gaming).
- Reviews and recommendations for which games to purchase.
- Qualities that make a game culturally significant. (See What Makes a Good Game.)

The following resources -

P3zine Issue 12

FREE! Issue 12 | March 2008

P3Zine: Free Magazine For PlayStation 3 Gamers. Read it, Print it, Send it to your mates…

On the cover: GTA IV (MASSIVE PREVIEW: "All the latest videos inside"); Far Cry 2 ("Jungle japes"); Army of Two ("Come together"); C&C Tiberium ("Squady style"); TNA iMPACT (INTERVIEW!); PLUS... Rock Band • Devil May Cry 4 • Persona 3 • Top 20 2008.

REVIEWED! Devil May Cry 4: "Bigger, badder and better than ever before?" Persona 3: "70 hours of RPG loveliness". Rock Band: "Take to the stage with your mates".

QUICK FINDER (Every game’s just a click away) and DON’T MISS! (This month’s highlights... the latest & greatest titles on PS3): C&C: Tiberium, GTA IV, Shin Megami Tensei: Persona 3, Far Cry 2, Army of Two, Disgaea 3, MX vs ATV Untamed, Mirror’s Edge, TNA iMPACT, Battlefield: BC, Devil May Cry 4, Highlander, Rock Band, Final Fantasy XIII, Team ICO, The Club.

Welcome to P3Zine

Rockstar have done it again. No sooner as the tumbleweed had threatened to roll through the streets of Liberty City and the developers with marketing nous release not one, but five, video clips to whet the world’s GTA appetite. Along with a website to boot. You can see all five cameo clips in our cover feature preview kicking off on page 8.

Other cool stuff this issue? Check out Army of Two, have a gander at TNA iMPACT, and strut your stuff with Rock Band ahead of its imminent arrival this side of the pond. -

List of Search Engines

A blog network is a group of blogs that are connected to each other in a network. A blog network can either be a group of loosely connected blogs, or a group of blogs that are owned by the same company. The purpose of such a network is usually to promote the other blogs in the same network and therefore increase the advertising revenue generated from online advertising on the blogs.[1]

List of search engines

From Wikipedia, the free encyclopedia

For popular web search engines, see Most popular Internet search engines.

This is a list of search engines, including web search engines, selection-based search engines, metasearch engines, desktop search tools, and web portals and vertical market websites that have a search facility for online databases.

Contents

- 1 By content/topic
  - 1.1 General
  - 1.2 P2P search engines
  - 1.3 Metasearch engines
  - 1.4 Geographically limited scope
  - 1.5 Semantic
  - 1.6 Accountancy
  - 1.7 Business
  - 1.8 Computers
  - 1.9 Enterprise
  - 1.10 Fashion
  - 1.11 Food/Recipes
  - 1.12 Genealogy
  - 1.13 Mobile/Handheld
  - 1.14 Job
  - 1.15 Legal
  - 1.16 Medical
  - 1.17 News
  - 1.18 People
  - 1.19 Real estate / property
  - 1.20 Television
  - 1.21 Video Games
- 2 By information type
  - 2.1 Forum
  - 2.2 Blog
  - 2.3 Multimedia
  - 2.4 Source code
  - 2.5 BitTorrent
  - 2.6 Email
  - 2.7 Maps
  - 2.8 Price
  - 2.9 Question and answer . -

List of Teen Zone Games

Gaming is Available When the Teen Zone is Staffed Must be Ages 13-19 & Have a Valid Library Card in Good Standing to Play Battlefield 1 Assassin’s Creed IV: Black Flag Battlefield 4 Battlefield 4 Gears of War 4 Call of Duty: Ghosts Halo 5 Call of Duty: Black Ops III Call of Duty: Infinite Warfare Army of Two Battalion Wars 2 Dark Souls III Assassin’s Creed Boom Blox Batman: Arkham Asylum & City Cabela’s Big Game Hunter 2010 Deus Ex Battlefield: 3 & Bad Company Cooking Mama Cookoff Fallout 4 BioShock DDR Hottest Party 2 Mortal Kombat X Burnout Paradise Dancing with the Stars Ace Combat 6 Call of Duty: Modern Warfare 1, 2 & 3 Deca Sports Avatar: The Game Star Wars Battlefront Call of Duty: World at War Geometry Wars Galaxies Batman: Arkham Asylum The Witcher Wild Hunt Call of Duty: Black Ops 1 & 2 Glee Karaoke Crackdown Uncharted 4: A Thief’s End Condemned 2: Bloodshot Just Dance 2, 3 & 4 Dead or Alive 4 Dead Space Legend of Zelda Twilight Princess DeadRising Devil May Cry 4 Lego: Batman 1, 2 & Star Wars Earth Defense Force 2017 Fallout 3 Mario & Sonic at the Olympic Games F.E.A.R. 2 FIFA 08 Mario Party 8 Gears of War: 1, 2 & 3 Fight Night Round 3 Mario Strikers Charged Halo: 3, 4, ODST & Wars Ghost Recon 2 MarioKart Left for Dead: 1 & 2 Dance Dance Revolution Guitar Hero: 3, 5, Aerosmith, Metallica Medal of Honor Heroes 2 Lost Planet God of War II & World Tour Metroid Prime Guitar Hero 2 Madden NFL: 09, 10 & 12 Madden NFL 09 New Carnival Games NBA 2K8 Madden 08 Metal Gear Solid 4 No More Heroes NCAA 08 Football NCAA Football 08 Mortal Kombat vs. -

EA Reports Fourth Quarter and Fiscal Year 2008 Results

Record GAAP Net Revenue of $3.665 Billion and Non-GAAP Net Revenue of $4.020 Billion in Fiscal 2008

More Than Fifteen New Games Scheduled for Release in Fiscal 2009

REDWOOD CITY, CA – May 13, 2008 – Electronic Arts (NASDAQ: ERTS) today announced preliminary financial results for its fiscal fourth quarter and fiscal year ended March 31, 2008.

Full Year Results

Net revenue for the fiscal year ended March 31, 2008 was $3.665 billion, up 19 percent as compared with $3.091 billion for the prior year. Beginning in fiscal 2008, EA no longer charges for its service related to certain online-enabled packaged goods games. As a result, the Company recognizes revenue from the sale of these games over the estimated service period. The Company ended the year with $387 million in deferred net revenue related to its service for certain online-enabled packaged goods games, which will be recognized in future periods.

Non-GAAP net revenue* was $4.020 billion, up 30 percent as compared with $3.091 billion for the prior year. EA had 27 titles that sold more than one million copies in the year as compared with 24 titles in the prior year.

Net loss for the year was $454 million as compared with net income of $76 million for the prior year. Diluted loss per share was $1.45 as compared with diluted earnings per share of $0.24 for the prior year. Non-GAAP net income* was $339 million as compared with $247 million a year ago, up 37 percent year-over-year. -

Copyrighted Material

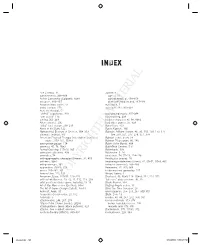

Index

- Ace Combat, 11
- achievements, 368–369
- Action Cartooning (Caldwell), 84n1
- actuators, 166–167
- Advance Wars series, 11
- aerial combat, 270
- Aero the Acrobat, 52
- “ah-ha!” experience, 349
- “aim assist,” 173
- aiming, 263–264
- Aliens (movie), 206
- “alley” level design, 218–219
- Alone in the Dark, 122
- Alphabetical Bestiary of Choices, 304–313
- alternate endings, 376
- American Physical Therapy Association exercises/advice, 159–161, 159n3
- ammunition gauge, 174
- amnesia, 47, 76, 76n9
- Animal Crossing, 117n10, 385
- animated cutscenes, 408
- animatics, 74
- anthropomorphic characters/themes, 92, 459
- anti-hero, 86n2
- anti-power-ups, 361
- appendixes (GDD), 458
- armor, 259–261, 361
- Army of Two, 112, 113
- Arnenson, Dave, 217n13, 223–229
- artificial intelligence, 13, 42, 71, 112, 113, 293
- artist positions (video game industry), 13–14
- Art of Star Wars series (Del Rey), 84n1
- The Art of Game Design (Schell), 15n14
- Ashcraft, Andy, 50
- Assassin’s Creed series, 21, 172
- Asteroids, 5
- attack matrix, 248, 267, 279
- Attack of the Clones (movie), 202n6
- attack patterns (boss attack patterns), 323
- attacks.
- audience
  - age of, 29
  - control needs of, 158–159
  - pitch presentation and, 417–421
- Auto Race, 7
- autosave, 192, 363–364
- background music, 397–398
- backtracking, 228
- badass character, 86–88, 86n2
- bad video games, 25, 429
- Band Hero, 403
- Banjo-Kazooie, 190
- Batman: Arkham Asylum, 46, 50, 110, 150, 173, 177, 184, 250, 251, 277, 278, 351, 374
- Batman comic book, 54
- Batman TV program, 86, 146
- Battle of the Bands, 404
- BattleTech Centers, 5–6
- Battletoads, 338
- Battlezone, 5, 14
- beat chart, 74, 77–79, 214–216
- Beetlejuice (movie), 18
- beginning/middle/end (stories), 41, 50–51, 50n9, 435
- behavior (enemies), 284–289
- Bejeweled, 41, 350, 368
- bi-dimensional gameplay, 127
- Bilson, Danny, 3
- Bioshock, 46, 46n9, 118, 208n9, 212, 214, 372
- “bite-size” play sessions, 46, 79
- Blade Runner, 397
- Blazing Angels series, 11
- Blinx: the Time Sweeper, 26
- blocks/parries, 258–259, 287, 300–301
- Bluth, Don, 13
- bombs, 47–48, 247, 272, 358, 381
- bonus materials, 373–376
  - bonus materials screen, 195
  - rewards and, 373–376

-

Examining the Dynamics of the US Video Game Console Market

Can Nintendo Get its Crown Back? Examining the Dynamics of the U.S. Video Game Console Market

by Samuel W. Chow

B.S. Electrical Engineering, Kettering University, 1997
A.L.M. Extension Studies in Information Technology, Harvard University, 2004

Submitted to the System Design and Management Program, the Technology and Policy Program, and the Engineering Systems Division on May 11, 2007 in Partial Fulfillment of the Requirements for the Degrees of Master of Science in Engineering and Management and Master of Science in Technology and Policy at the Massachusetts Institute of Technology, June 2007

© 2007 Samuel W. Chow. All rights reserved.

The author hereby grants to MIT permission to reproduce and to distribute publicly paper and electronic copies of this thesis document in whole or in part in any medium now known and hereafter created.

Signature of Author: Samuel W. Chow, System Design and Management Program, and Technology and Policy Program, May 11, 2007

Certified by: James M. Utterback, David J. McGrath jr (1959) Professor of Management and Innovation, Thesis Supervisor

Accepted by: Pat Hale, Senior Lecturer in Engineering Systems, Director, System Design and Management Program

Accepted by: Dava J. Newman, Professor of Aeronautics and Astronautics and Engineering Systems, Director, Technology and Policy Program

Abstract

Several generations of video game consoles have competed in the market since 1972.