Prediction Models for Soccer Sports Analytics

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

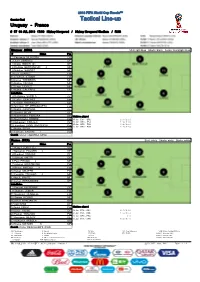

Tactical Line-Up Uruguay - France # 57 06 JUL 2018 17:00 Nizhny Novgorod / Nizhny Novgorod Stadium / RUS

2018 FIFA World Cup Russia™ Quarter-final Tactical Line-up Uruguay - France # 57 06 JUL 2018 17:00 Nizhny Novgorod / Nizhny Novgorod Stadium / RUS Uruguay (URU) Shirt: light blue Shorts: black Socks: black/light blue # Name Pos 1 Fernando MUSLERA GK 2 Jose GIMENEZ DF 3 Diego GODIN (C) DF 6 Rodrigo BENTANCUR X MF 8 Nahitan NANDEZ MF 9 Luis SUAREZ FW 11 Cristhian STUANI FW 14 Lucas TORREIRA MF 15 Matias VECINO MF 17 Diego LAXALT MF 22 Martin CACERES DF Substitutes 4 Guillermo VARELA DF 5 Carlos SANCHEZ MF 7 Cristian RODRIGUEZ MF 10 Giorgian DE ARRASCAETA FW 12 Martin CAMPANA GK 13 Gaston SILVA DF 16 Maximiliano PEREIRA DF Matches played 18 Maximiliano GOMEZ FW 15 Jun EGY - URU 0 : 1 ( 0 : 0 ) 19 Sebastian COATES DF 20 Jun URU - KSA 1 : 0 ( 1 : 0 ) 25 Jun URU - RUS 3 : 0 ( 2 : 0 ) 20 Jonathan URRETAVISCAYA FW 30 Jun URU - POR 2 : 1 ( 1 : 0 ) 23 Martin SILVA GK 21 Edinson CAVANI I FW Coach Oscar TABAREZ (URU) France (FRA) Shirt: white Shorts: white Socks: white # Name Pos 1 Hugo LLORIS (C) GK 2 Benjamin PAVARD X DF 4 Raphael VARANE DF 5 Samuel UMTITI DF 6 Paul POGBA X MF 7 Antoine GRIEZMANN FW 9 Olivier GIROUD X FW 10 Kylian MBAPPE FW 12 Corentin TOLISSO X MF 13 Ngolo KANTE MF 21 Lucas HERNANDEZ DF Substitutes 3 Presnel KIMPEMBE DF 8 Thomas LEMAR FW 11 Ousmane DEMBELE FW 15 Steven NZONZI MF 16 Steve MANDANDA GK 17 Adil RAMI DF 18 Nabil FEKIR FW Matches played 19 Djibril SIDIBE DF 16 Jun FRA - AUS 2 : 1 ( 0 : 0 ) 20 Florian THAUVIN FW 21 Jun FRA - PER 1 : 0 ( 1 : 0 ) 26 Jun DEN - FRA 0 : 0 22 Benjamin MENDY DF 30 Jun FRA - ARG 4 : 3 ( 1 : 1 ) 23 Alphonse AREOLA GK 14 Blaise MATUIDI N MF Coach Didier DESCHAMPS (FRA) GK: Goalkeeper A: Absent W: Win GD: Goal difference VAR: Video Assistant Referee DF: Defender N: Not eligible to play D: Drawn Pts: Points AVAR 1: Assistant VAR MF: Midfielder I: Injured L: Lost AVAR 2: Offside VAR FW: Forward X: Misses next match if booked GF: Goals for AVAR 3: Support VAR C: Captain MP: Matches played GA: Goals against FRI 06 JUL 2018 15:08 CET / 16:08 Local time - Version 1 22°C / 71°F Hum.: 53% Page 1 / 1. -

21.00CET Full Time Report Italy Spain

Match 49 #ITAESP Full Time Report Semi-finals - Tuesday 6 July 2021 Wembley Stadium - London Italy Spain Italy win 4 - 2 on penalties (0) (4) 21.00CET (2) (0) 1 Half-time Penalties Penalties Half-time 1 21 Gianluigi Donnarumma GK 23 Unai Simón GK 2 Giovanni Di Lorenzo 2 César Azpilicueta 3 Giorgio Chiellini C 5 Sergio Busquets C 6 Marco Verratti 8 Koke 8 Jorginho 11 Ferran Torres 10 Lorenzo Insigne 12 Eric García 13 Emerson 18 Jordi Alba 14 Federico Chiesa 19 Dani Olmo 17 Ciro Immobile 21 Mikel Oyarzabal 18 Nicolò Barella 24 Aymeric Laporte 19 Leonardo Bonucci 26 Pedri 1 Salvatore Sirigu GK 1 David de Gea GK 26 Alex Meret GK 13 Robert Sánchez GK 5 Manuel Locatelli 3 Diego Llorente 9 Andrea Belotti 4 Pau Torres 11 Domenico Berardi 6 Marcos Llorente 12 Matteo Pessina 7 Álvaro Morata 15 Francesco Acerbi 9 Gerard Moreno 16 Bryan Cristante 10 Thiago Alcántara 20 Federico Bernardeschi 14 José Gayà 23 Alessandro Bastoni 16 Rodri 24 Alessandro Florenzi 17 Fabián Ruiz 25 Rafael Tolói 20 Adama Traoré Coach: Coach: Roberto Mancini Luis Enrique Referee: VAR: Felix Brych (GER) Marco Fritz (GER) Assistant referees: Assistant VAR: Mark Borsch (GER) Christian Dingert (GER) Stefan Lupp (GER) Christian Gittelmann (GER) Fourth official: Bastian Dankert (GER) Sergei Karasev (RUS) Reserve Assistant Referee: Attendance: 57,811 Maksim Gavrilin (RUS) 1 2 23:43:28CET Goal Y Booked R Sent off Substitution P Penalty O Own goal C Captain GK Goalkeeper Star of the match * Misses next match if booked 06 Jul 2021 Match 49 #ITAESP Full Time Report Semi-finals - Tuesday 6 July 2021 Wembley Stadium - London Italy Spain Italy win 4 - 2 on penalties (4) Extra time (2) 1 Penalties Penalties 1 Penalties 5 Manuel Locatelli X X 19 Dani Olmo 9 Andrea Belotti 9 Gerard Moreno 19 Leonardo Bonucci 10 Thiago Alcántara 20 Federico Bernardeschi X 7 Álvaro Morata 8 Jorginho Attendance: 57,811 2 2 23:43:28CET Goal Y Booked R Sent off Substitution P Penalty O Own goal C Captain GK Goalkeeper Star of the match * Misses next match if booked 06 Jul 2021. -

Max Eberl Profi-Votum Wiederwahl

SONDERHEFT 2016 VDV-MANAGER VDV 11 VDV-TREFFEN Max Eberl Profi-Votum Wiederwahl „Fohlenelf“ Aubameyang, Christoph Metzelder galoppiert Weigl und Tuchel bleibt weiter durch Europa ganz vorne Vizepräsident www.spielergewerkschaft.de DAS ECKIGE MUSS INS ECKIGE. Das offizielle Bundesliga Magazin der DFL – jetzt auch als E-Paper für Smartphones und Tablets. Download kostenlos im App Store oder bei Google Play. DFL_Bundesliga_DasEckige_210x297_VDS_Sportjournalist_39L300.indd 1 11.03.16 09:39 WIR PROFIS DAS MAGAZIN DER VDV SONDERHEFT 2016 VDV-ANSTOSS 3 Liebe Mitglieder, liebe Fußballfreunde, warme Sonnenstrahlen, volle mung zur Bundesliga-Auswahl Profisaison gehen. Häufig werden Stadien, stimmungsvolle Fan- VDV 11 gleich drei BVB-Akteure unsere VDV-Teambetreuer von gesänge und der Geruch nach herausragten. So wurde Pierre- den jungen Spielern gefragt, wel- frisch gemähtem Gras: Es geht Emerick Aubameyang von den che Vorteile eine VDV-Mitglied- wieder los und die Vorfreude Profis zum VDV-Spieler der Saison schaft für sie hätte. Die Antwort auf die neue Saison ist riesen- gewählt, während sich Julian Wei- darauf ist einfach: Ihr könnt mit- groß. gl den Titel des stärksten Newco- reden und mitentscheiden, wenn mers sicherte und Thomas Tuchel es um Eure Arbeitsbedingungen zum besten Trainer gekürt wurde. geht. Zudem erhaltet Ihr hervorra- Ihnen sowie allen Spielern der genden und weitgehend kosten- VDV 11 und den Aufsteigern in die losen Service in fast allen für Fuß- Bundesliga, die 2. Bundesliga, die ballprofis relevanten Fragen – wie 3. Liga -

Big-5 Weekly Post

CIES Football Observatory Issue n°120 - 28/09/2015 Big-5 Weekly Post Best players since the start of the season Premier League Liga Position All players U21 Position All players U21 Laurent Koscielny Chancel Mbemba Iñigo Martínez Carlos Vigaray CB CB Arsenal FC Newcastle United FC Real Sociedad de Fútbol Getafe CF Cédric Soares Jordan Amavi Marcelo Vieira João Cancelo FB FB Southampton FC Aston Villa FC Real Madrid CF Valencia CF Santi Cazorla Emre Can Luka Modrić Víctor Camarasa DM DM Arsenal FC Liverpool FC Real Madrid CF Levante UD Mesut Özil Nathan Redmond Fabián Orellana Alen Halilović AM AM Arsenal FC Norwich FC RC Celta de Vigo Real Sporting de Gijón Riyad Mahrez Raheem Sterling Lionel Messi Isaac Success FW FW Leicester City FC Manchester City FC FC Barcelona Granada CF Ligue 1 Bundesliga Position All players U21 Position All players U21 Thiago Silva Olivier Boscagli Jérôme Boateng Jonathan Tah CB CB Paris St-Germain FC OGC Nice FC Bayern München Bayer 04 Leverkusen Serge Aurier Benjamin Mendy Wendell Nascimento Ulisses Garcia FB FB Paris St-Germain FC Olympique de Marseille Bayer 04 Leverkusen SV Werder Bremen Lassana Diarra Vincent Koziello Ilkay Gündoğan Julian Weigl DM DM Olympique de Marseille OGC Nice BV 09 Borussia Dortmund BV 09 Borussia Dortmund Wahbi Khazri Bernardo Silva Douglas Costa Hakan Çalhanoğlu AM AM FC Girondins de Bordeaux AS Monaco FC Bayern München Bayer 04 Leverkusen Michy Batshuayi Corentin Jean Robert Lewandowski Michael Gregoritsch FW FW Olympique de Marseille ES Troyes Aube Champagne FC Bayern München -

15 14 15 Spurs Go Top of Premier League

Established 1961 Sport MONDAY, DECEMBER 7, 2020 Pandya powers India to thrilling Mbappe brings up century Barcelona suffer fourth Liga 14T20 series victory over Australia 15 as PSG win at Montpellier 15 defeat, go down 2-1 to Cadiz Spurs go top of Premier League Vardy lifts Leicester into third, Palace punish 10-man West Brom LONDON: Tottenham Hotspur’s French goalkeeper Hugo Lloris punches away the ball during the English Premier League football match between Tottenham Hotspur and Arsenal yesterday. — AFP LONDON: Son Heung-min and Harry Kane fired the earlier favor to tee up Kane to smash in from Clubs in tier-three areas of England, with tougher Wilder’s men hit back immediately for only their Tottenham back to the top of the Premier League close range. Son and Kane have now combined for coronavirus restrictions, still have to play behind fifth league goal of the season when Oli yesterday after Jamie Vardy struck in the final 31 Premier League goals, the second most of any closed doors, while tier-two zones such as London McBurnie showed good strength to head home minute to lift Leicester to third in the table. Jose pair after Chelsea’s Didier Drogba and Frank and Liverpool can have crowds of up to 2,000. from a corner. Mourinho’s men beat faltering Arsenal 2-0 in front Lampard (36). Tottenham who have not lost a Sheffield United have just one point from 11 games The hosts then held out more comfortably in of 2,000 vocal fans at Tottenham Hotspur Stadium league match since the opening day of the season, and were punished for a lack of ruthlessness as the second period until they lost possession to leapfrog Chelsea. -

Carteret Analytics

Carteret Analytics – 24 June 2021 UEFA Euro 2020 Group Stage Analysis Report James Powell - CEO, Carteret Group Matthew Belford - Director, Carteret Group Molly Crawford - Corporate Analyst, Carteret Group ______________________________________________________________________________________________________________________________________________________________________________ UEFA Euro 2020 Championship Group Stage Analysis Report City A.M. James Powell1, Matthew Belford2, Molly Crawford3 Strictly Private and Confidential 24 June 2021 1James Powell, Chief Executive Officer, Carteret Group, London. T: +44 20 3876 2414. E: [email protected] 2Matthew Belford, Director, Carteret Group, London. T: +44 20 3876 2414. E: [email protected] 3Molly Crawford, Corporate Analyst, Carteret Group, London. T: +44 20 3876 2414. E: [email protected] This document is strictly private and confidential, subject to contract and for general information purposes only. No warranty is provided with regard to its content, and Carteret Analytics assumes no liability whatsoever for its content. Nothing in this document constitutes an offer or an invitation or a solicitation to enter into any transaction. The reader should always seek independent and professional advice on any corporate transaction. Carteret Analytics Limited, 85 Gresham Street, London, EC2V 7NQ. T: +44 20 3876 2414. W: www.carteret.group Carteret Analytics - UEFA Euro 2020 Group Stage Analysis Report - Strictly Private and Confidential 24 June 2021 ___________________________________________________________________________________________________________________________________________________________ Key terms are defined in the Glossary Terms highlighted in yellow in this Report are key terms related to the proprietary quantitative analysis utilised by Carteret Analytics to produce the various insights on the players. Each key term will be highlighted in yellow when it is first used in the Report, and each is defined in more detail in the Glossary at Section 5 of this Report (Page 7). -

Dos Locos Y Un Balón

MANCHESTER CITY 2019-2020* DESCIFRANDO EL JUEGO DE PEP Albert Quera & Jordi Pérez Dos locos y un balón Valoración de los jugadores Antes de empezar con el análisis de los 44 partidos de la temporada 19/20 del Manchester City de Josep Guardiola vamos a hacer, en modo introducción, la valoración de los jugadores durante todos estos encuentros. Desde Ederson y Claudio Bravo hasta Agüero. Pasando por Rodri, Bernardo Silva y Gündogan. Vamos a ello: Ederson: (7,5), uno de los jugadores más importantes de la plantilla. Su juego de pies es oro para el Manchester City. Claudio Bravo: (5,5), cumplidor. No ha contado con muchos minutos pero ha dejado buenas actuaciones bajo palos. Cancelo: (5,5), no ha contado con muchos minutos. Pero cuando ha jugado, su profundidad ha sido clave. Walker: (6,5), formando la línea de tres atrás ha sido una figura esencial. No es el mejor lateral del mundo, para nada. Pero ha sido clave para Pep, y su posicionamiento interior ha sido importante para la presión tras pérdida y segundas jugadas. Otamendi: (5,5), su rendimiento tras la lesión de Laporte ha sido muy bajo. Imprecisiones, errores de posicionamiento y mala lectura del juego a nivel defensivo. Fernandinho: (6,5), cumplidor. Fuera de su posición, siendo central, ha dejado grandes actuaciones. Defensivamente muy sólido. Stones: (5), pocos minutos. Central que ha dejado mucho que desear a nivel defensivo. No ha contado lo suficiente para Pep. Laporte: (8), el segundo mejor jugador de la plantilla. Y sino es el mejor, el más importante. Una figura capital en defensa, sin ir más lejos, el bajo rendimiento del City en defensa viene tras su lesión. -

2018/19 UEFA Europa League Technical Report

Technical report 2018/19 CONTENTS OVERVIEW 4 6 16 Introduction Road The final to Baku 22 24 The winning Results coach ANALYSIS 28 32 34 Talking Goals of Squad of points the season the season STATISTICS 36 40 56 Number Team Roll of crunching profiles honour INTRODUCTION Group A Group B Bayer 04 FC Zürich AEK PFC Ludogorets FC Salzburg Celtic FC RB Leipzig Rosenborg BK Leverkusen (FCZ) Larnaca FC 1945 (SBG) (CEL) (LEI) (RBK) (BL) (LAR) (LUD) Group C Group D FC Zenit SK Slavia Praha FC Girondins FC København GNK Dinamo Fenerbahçe SK FC Spartak RSC Anderlecht (ZEN) (SLP) de Bordeaux (FCK) Zagreb (FEN) Trnava (AND) (BOR) (DZ) (TRN) Group E Group F (From left): Savvas Constantinou, Ghenadie Scurtul, Jarmo Matikainen, Stefan Majewski, Thomas Schaaf and Milenko Ačimovič from the UEFA Technical Observers Group, and Frank Ludolph, UEFA’s head of football education services, at the final Arsenal FC Sporting FC Vorskla Qarabağ FK Real Betis Olympiacos FC AC Milan F91 Dudelange (ARS) Clube de Portugal Poltava (QAR) Balompié (O LY ) (ACM) (DUD) (SCP) (VOR) (BTS) Association (CFA) and a member of the Jira Group G Group H Panel, provided detailed insight into how Chelsea and Arsenal had made it an all- OBSERVING English final, and Stefan Majewski, a former Poland international and current sport director of the Polish Football Association (PZPN), provided some equally illuminating Villarreal CF SK Rapid Wien Rangers FC FC Spartak Eintracht SS Lazio Apollon Olympique THE TACTICAL (VLR) (RPD) (RAN) Moskva Frankfurt (LAZ) Limassol FC de Marseille analysis of Slavia Praha’s captivating THE TACTICAL (SPM) (EIN) (APO) (OM) charge to the quarter-finals. -

The Player Trading Game 2017

The Player Trading Game 2017 footballbenchmark.com What is KPMG Football Benchmark? Consolidated and verified database of football clubs' financial and operational performance. Business intelligence tool enabling relevant comparisons with competitors. An ever-growing platform that includes data from over 150 European football clubs. A tool offering insights into many aspects of football clubs' operations, including, but not limited to, revenue generators, expense categories, profitability indicators, balance sheet items and stadium statistics. footballbenchmark.com Credits: Paris Saint-Germain FC © 2017 KPMG Advisory Ltd., a Hungarian limited liability company and a member firm of the KPMG network of independent member firms affiliated with KPMG International Cooperative (“KPMG International”), a Swiss entity. All rights reserved. Table of contents Foreword 4 How we calculate player trading balance for the purposes of this report 7 The European Top 20 8 Where are the “big fish”? 13 Basis of preparation and limiting conditions 15 © 2017 KPMG Advisory Ltd., a Hungarian limited liability company and a member firm of the KPMG network of independent member firms affiliated with KPMG International Cooperative (“KPMG International”), a Swiss entity. All rights reserved. 4 The Player Trading Game Foreword Only one year ago, the whole media and fans, it is noticeable that football world was stunned when the ratio between the fee paid for Manchester United FC broke record transfers and the operating the transfer record by signing revenues of the acquiring club has Frenchman Paul Pogba for EUR 105 remained stable at approximately million. Despite being considered 23% in the last 10 years. In view of by many as a disproportionate and that, Neymar’s acquisition by Paris unsustainable trend, this summer Saint-Germain FC (at 42%) could we have witnessed a further pull be considered as an exception, of the financial muscle exercised and more aligned to the ratio at the by clubs. -

Big-5 Weekly Post

CIES Football Observatory Issue n°99- 17/02/2015 Big-5 Weekly Post Experience capital: most promising players, by age category and position Experience capital: number of matches played in adult championships weighted by league level and club results. For more information, see CIES Football Observatory Monthly Report 2. Goalkeepers Big-5 Other leagues2 U201 Mouez Hassen, Nice (FRA) 12.1 Georgi Kitanov, Cherno More Varna (BUL) 23.8 U21 Stefanos Kapino, Mainz (GER) 33.1 Yvon Mvogo, Young Boys (SUI) 25.6 U22 Jan Oblak, Atlético Madrid (ESP) 57.2 Oliver Zelenika, Lokomotiva Zagreb (CRO) 26.4 U23 Thibaut Courtois, Chelsea (ENG) 200.6 Mathew Ryan, Club Brugge (BEL) 83.1 Full backs Big-5 Other leagues2 U20 Luke Shaw, Manchester United (ENG) 65.8 Eduard Sobol, Metalurg Donetsk (UKR) 29.4 U21 Benjamin Mendy, Marseille (FRA) 60.8 Jetro Willems, PSV (NED) 94.5 U22 Juan Bernat, Bayern München (GER) 75.3 Jonas Svensson, Rosenborg (NOR) 62.9 U23 David Alaba, Bayern München (GER) 155.2 Laurens De Bock, Club Brugge (BEL) 82.4 Centre backs Big-5 Other leagues2 U20 Niklas Süle, Hoffenheim (GER) 36.4 Milan Škriniar, MŠK Žilina (SVK) 21.8 U21 Marquinhos Aoás, PSG (FRA) 66.8 Karim Rekik, PSV (NED) 52.2 U22 Raphaël Varane, Real Madrid (ESP) 84.8 Emil Bergström, Djurgårdens (SWE) 46.7 U23 Phil Jones, Manchester United (ENG) 143.0 Bruno Martins Indi, Porto (POR) 115.7 Defensive/Central midfielders Big-5 Other leagues2 U20 Carlos Gruezo, Stuttgart (GER) 51.0 Tonny Vilhena, Feyenoord (NED) 76.8 U21 Mateo Kovačić, Internazionale (ITA) 81.5 Kyle Ebecilio, Twente (NED) -

2020-21 Panini Impeccable Hobby Soccer Checklist

2020/21 Impeccable Premier League Soccer Card Totals 292 Players with Cards TOTAL TOTAL Auto Auto Gold Team Relic Metal Base CARDS HITS Only Relic Silver Aaron Connolly 33 33 33 Aaron Cresswell 171 0 171 Aaron Ramsdale 204 33 33 171 Aaron Wan-Bissaka 1145 1145 475 350 320 Adam Lallana 171 0 171 Adam Webster 483 483 483 Adama Traore 665 585 300 33 252 80 Ademola Lookman 346 175 175 171 Aleksandar Mitrovic 254 3 3 80 171 Alex McCarthy 253 82 82 171 Alex Telles 549 549 374 175 Alexandre Lacazette 240 160 160 80 Alexis Mac Allister 80 0 80 Alisson 136 136 26 33 77 Allan 33 33 33 Allan Saint-Maximin 204 33 33 171 Alphonse Areola 204 33 33 171 Andre Schurrle 175 175 175 Andreas Christensen 94 94 94 Andre-Frank Zambo Anguissa 204 33 33 171 Andros Townsend 109 109 33 76 Andy Robertson 47 47 47 Anthony Gordon 80 0 80 Anthony Martial 189 109 33 76 80 Antonee Robinson 204 33 33 171 Ashley Barnes 169 89 89 80 Ashley Westwood 204 33 33 171 Asmir Begovic 350 350 350 Aymeric Laporte 493 493 493 Ayoze Perez 157 77 77 80 Ben Chilwell 431 431 398 33 Ben Davies 134 134 134 Ben Mee 562 562 485 77 Ben White 171 0 171 Bernardo Silva 811 811 461 350 Bernd Leno 113 33 33 80 Billy Sharp 489 489 489 Bobby De Cordova-Reid 171 0 171 Brandon Williams 604 524 524 80 Bruno Fernandes 530 530 497 33 Bukayo Saka 320 320 287 33 Caglar Soyuncu 833 833 833 Callum Hudson-Odoi 77 77 77 Callum Robinson 204 33 33 171 GroupBreakChecklists.com 2019-20 Impeccable Premier League Soccer Card Totals TOTAL TOTAL Auto Auto Gold Team Relic Metal Base CARDS HITS Only Relic Silver Callum -

Pre-Season Tour 2018

MANCHESTER CITY PRE-SEASON TOUR 2018 MANCHESTER CITY PRE-SEASON TOUR 2017 1 THROUGHOUT ITS PROUD HISTORY, OUR FOOTBALL CLUB HAS BUILT A DEEP, LASTING KINSHIP WITH COMMUNITIES IN MANCHESTER AND IN CITIES FURTHER AFIELD. THE FANS SHOW IT IN THEIR UNWAVERING PASSION FOR THE CLUB; WE SHOW IT THROUGH OUR DEDICATION TO BUILDING, FOR THEM, THE SUCCESSFUL AND SUSTAINABLE FOOTBALL CLUB FOR THE FUTURE. IT IS A RESPONSIBILITY THAT THE CLUB IS HONOURED TO SHOULDER. CONTACTS COMMUNICATIONS DEPARTMENT CHIEF EXECUTIVE OFFICER CHIEF COMMUNICATIONS SIMON HEGGIE CONTACTS FOR USA FERRAN SORIANO OFFICER HEAD OF MEDIA RELATIONS SIMON HEGGIE VICKY KLOSS E: [email protected] STEPH TOMAN DIRECTOR OF FOOTBALL T: +44 161 438 7738 TOBY CRAIG TXIKI BEGIRISTAIN CHIEF FINANCIAL OFFICER M: +44 7791 857 452 ANDY YOUNG CONTACTS FOR FIRST TEAM MANAGER ALEX ROWEN COMMUNITY SHIELD MEDIA RELATIONS MANAGER PEP GUARDIOLA CHIEF INFRASTRUCTURE ALEX ROWEN OFFICER E: [email protected] CARLOS VICENTE HEAD OF ELITE JON STEMP M: +44 7885 268 047 DEVELOPMENT SQUAD PAUL HARSLEY CLUB AMBASSADOR CARLOS VICENTE MIKE SUMMERBEE INTERNATIONAL MEDIA HEAD OF RELATIONS MANAGER GLOBAL FOOTBALL LIFE PRESIDENT E: [email protected] BRIAN MARWOOD BERNARD HALFORD M: +44 7850 096 527 TOBY CRAIG DIRECTOR OF CORPORATE AND COMMERCIAL COMMUNICATIONS E: [email protected] T: +44 20 7874 5519 M: +44 7710 380 248 STEPHANIE TOMAN HEAD OF MARKETING COMMUNICATIONS E: [email protected] T: +44 161 438 7989 M: +44 7736 464 316 All information correct at time of