Aural (Re)Positioning and the Aesthetics of Realism in First-Person Shooter Games

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Media Violence Do Children Have Too Much Access to Violent Content?

Published by CQ Press, an Imprint of SAGE Publications, Inc. www.cqresearcher.com. Media Violence: Do children have too much access to violent content? Recent accounts of mass school shootings and other violence have intensified the debate about whether pervasive violence in movies, television and video games negatively influences young people’s behavior. Over the past century, the question has led the entertainment media to voluntarily create viewing guidelines and launch public awareness campaigns to help parents and other consumers make appropriate choices. But lawmakers’ attempts to restrict or ban content have been unsuccessful because courts repeatedly have upheld the industry’s right to free speech. In the wake of a 2011 Supreme Court ruling that said a direct causal link between media violence, particularly video games, and real violence has not been proved, the Obama administration has called for more research into the question. Media and video game executives say the cause of mass shootings is multifaceted and cannot be blamed on the entertainment industry, but many researchers and lawmakers say the industry should shoulder some responsibility. Photo caption: The ultraviolent video game “Grand Theft Auto V” grossed more than $1 billion in its first three days on the market. Young players know it’s fantasy, some experts say, but others warn the game can negatively influence youths’ behavior. Inside this report: The Issues; Background; Chronology; Current Situation; At Issue; Outlook; Bibliography; The Next Step. CQ Researcher • Feb. 14, 2014 • www.cqresearcher.com • Volume 24, Number 7 • Pages 145-168. Recipient of Society of Professional Journalists Award for Excellence; American Bar Association Silver Gavel Award.

Bachelor Thesis

BACHELOR THESIS “How does Sound Design Impact the Player's Perception of the Level of Difficulty in a First Person Shooter Game?” Sebastian Darth 2015 Bachelor of Arts Audio Engineering Luleå University of Technology Department of Arts, Communication and Education. Abstract: This research paper explores whether a game's sound design has an impact on the game's perceived difficulty from a player's point of view. The game industry produces many game titles that are similar in terms of mechanics and controls, for example, first person shooter games. This thesis investigates whether the differences in sound design matter. Although many first person shooter games are very similar in terms of gameplay, controls and sound design, their sound designs are similar but not identical. With new information on how players use sound when they play games, interesting new game styles, mechanics and kinds of gameplay could be explored. This study found that players use the sounds of their allies in the game more than any other sound when presented with different tasks in games. It was also shown that players use the sound of weapon fire to know where their targets are and when they need to take cover to avoid defeat. Finally, it was suggested that loud weapons might affect the perceived difficulty, as they might cover the sounds of the various voices.

UPC Platform Publisher Title Price Available 730865001347

| UPC | Platform | Publisher | Title | Price | Available |
|---|---|---|---|---|---|
| 730865001347 | PlayStation 3 | Atlus | 3D Dot Game Heroes PS3 | $16.00 | 52 |
| 722674110402 | PlayStation 3 | Namco Bandai | Ace Combat: Assault Horizon PS3 | $21.00 | 2 |
| 853490002678 | PlayStation 3 | Other Publishers | Air Conflicts: Secret Wars PS3 | $14.00 | 37 |
| 014633098587 | PlayStation 3 | Electronic Arts | Alice: Madness Returns PS3 | $16.50 | 60 |
| 010086690682 | PlayStation 3 | Sega | Aliens Colonial Marines (Portuguese) PS3 | $47.50 | 100+ |
| 010086690675 | PlayStation 3 | Sega | Aliens Colonial Marines (Spanish) PS3 | $47.50 | 100+ |
| 010086690637 | PlayStation 3 | Sega | Aliens Colonial Marines Collector's Edition PS3 | $76.00 | 9 |
| 010086690170 | PlayStation 3 | Sega | Aliens Colonial Marines PS3 | $50.00 | 92 |
| 010086690194 | PlayStation 3 | Sega | Alpha Protocol PS3 | $14.00 | 14 |
| 047875843479 | PlayStation 3 | Activision | Amazing Spider-Man PS3 | $39.00 | 100+ |
| 010086690545 | PlayStation 3 | Sega | Anarchy Reigns PS3 | $24.00 | 100+ |
| 722674110525 | PlayStation 3 | Namco Bandai | Armored Core V PS3 | $23.00 | 100+ |
| 014633157147 | PlayStation 3 | Electronic Arts | Army of Two: The 40th Day PS3 | $16.00 | 61 |
| 008888345343 | PlayStation 3 | Ubisoft | Assassin's Creed II PS3 | $15.00 | 100+ |
| 008888397717 | PlayStation 3 | Ubisoft | Assassin's Creed III Limited Edition PS3 | $116.00 | 4 |
| 008888347231 | PlayStation 3 | Ubisoft | Assassin's Creed III PS3 | $47.50 | 100+ |
| 008888343394 | PlayStation 3 | Ubisoft | Assassin's Creed PS3 | $14.00 | 100+ |
| 008888346258 | PlayStation 3 | Ubisoft | Assassin's Creed: Brotherhood PS3 | $16.00 | 100+ |
| 008888356844 | PlayStation 3 | Ubisoft | Assassin's Creed: Revelations PS3 | $22.50 | 100+ |
| 013388340446 | PlayStation 3 | Capcom | Asura's Wrath PS3 | $16.00 | 55 |
| 008888345435 | ... | | | | |

Nicole Vickery Thesis (PDF 10MB)

ENGAGING IN ACTIVITIES: THE FLOW EXPERIENCE OF GAMEPLAY Nicole E. M. Vickery Bachelor of Games and Interactive Entertainment, Bachelor of Information Technology (First Class Honours) Submitted in fulfilment of the requirements for the degree of Doctor of Philosophy School of Electrical Engineering and Computer Science Science and Engineering Faculty Queensland University of Technology 2019 Keywords: Videogames; Challenge; Activities; Gameplay; Play; Activity Theory; Dynamics; Flow; Immersion; Game design; Conflict; Narrative. Abstract: Creating better player experience (PX) is dependent upon understanding the act of gameplay. The aim of this thesis is to develop an understanding of enjoyment in dynamic actions in-game. There are few descriptive works that capture the actions of players while engaging in playing videogames. Emerging from this gap is our concept of activities, which aims to help describe how players engage in both game-directed (ludic), and playful (paidic) actions in videogames. Enjoyment is central to the player experience of videogames. This thesis utilises flow as a model of enjoyment in games. Flow has been used previously to explore enjoyment in videogames. However, flow has rarely been used to explore enjoyment of nuanced gameplay behaviours that emerge from in-game activities. Most research that investigates flow in videogames is quantitative; there is little qualitative research that describes ‘how’ or ‘why’ players experience flow. This thesis aims to fill this gap by firstly understanding how players engage in activities in games, and then investigating how flow is experienced based on these activities. In order to address these broad objectives, this thesis includes three qualitative studies that were designed to create an understanding of the relationship between activities and flow in games.

Life Cycle Patterns of Cognitive Performance Over the Long

Life cycle patterns of cognitive performance over the long run. Anthony Strittmatter (a), Uwe Sunde (b), and Dainis Zegners (c). (a) Center for Research in Economics and Statistics (CREST)/École nationale de la statistique et de l’administration économique Paris (ENSAE), Institut Polytechnique Paris, 91764 Palaiseau Cedex, France; (b) Economics Department, Ludwig-Maximilians-Universität München, 80539 München, Germany; and (c) Rotterdam School of Management, Erasmus University, 3062 PA Rotterdam, The Netherlands. Edited by Robert Moffitt, Johns Hopkins University, Baltimore, MD, and accepted by Editorial Board Member Jose A. Scheinkman September 21, 2020 (received for review April 8, 2020).

Abstract: Little is known about how the age pattern in individual performance in cognitively demanding tasks changed over the past century. The main difficulty for measuring such life cycle performance patterns and their dynamics over time is related to the construction of a reliable measure that is comparable across individuals and over time and not affected by changes in technology or other environmental factors. This study presents evidence for the dynamics of life cycle patterns of cognitive performance over the past 125 y based on an analysis of data from professional chess tournaments.

Excerpt from the main text: ... demanding tasks, however, and are limited in terms of comparability, technological work environment, labor market institutions, and demand factors, which all exhibit variation over time and across skill groups (1, 19). Investigations that account for changes in skill demand have found evidence for a peak in performance potential around ages of 35 to 44 y (20) but are limited to short observation periods that prevent an analysis of the dynamics of the age–performance profile over time and across cohorts. An additional problem is related to measuring productivity or ...

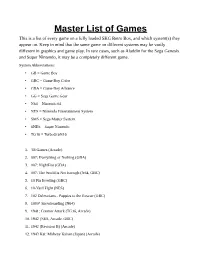

Master List of Games: This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(s) They Appear On

Master List of Games

This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game.

System Abbreviations:
• GB = Game Boy
• GBC = Game Boy Color
• GBA = Game Boy Advance
• GG = Sega Game Gear
• N64 = Nintendo 64
• NES = Nintendo Entertainment System
• SMS = Sega Master System
• SNES = Super Nintendo
• TG16 = TurboGrafx16

1. '88 Games (Arcade)
2. 007: Everything or Nothing (GBA)
3. 007: NightFire (GBA)
4. 007: The World Is Not Enough (N64, GBC)
5. 10 Pin Bowling (GBC)
6. 10-Yard Fight (NES)
7. 102 Dalmatians - Puppies to the Rescue (GBC)
8. 1080° Snowboarding (N64)
9. 1941: Counter Attack (TG16, Arcade)
10. 1942 (NES, Arcade, GBC)
11. 1942 (Revision B) (Arcade)
12. 1943 Kai: Midway Kaisen (Japan) (Arcade)
13. 1943: Kai (TG16)
14. 1943: The Battle of Midway (NES, Arcade)
15. 1944: The Loop Master (Arcade)
16. 1999: Hore, Mitakotoka! Seikimatsu (NES)
17. 19XX: The War Against Destiny (Arcade)
18. 2 on 2 Open Ice Challenge (Arcade)
19. 2010: The Graphic Action Game (Colecovision)
20. 2020 Super Baseball (SNES, Arcade)
21. 21-Emon (TG16)
22. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB)
23. 3 Count Bout (Arcade)
24. 3 Ninjas Kick Back (SNES, Genesis, Sega CD)
25. 3-D Tic-Tac-Toe (Atari 2600)
26. 3-D Ultra Pinball: Thrillride (GBC)
27. ...

Printing Trends in Board & Card Games

Printing Trends in Board & Card Games. Jessica Lee Riddell. Graphic Communication Department | College of Liberal Arts | California Polytechnic State University. June 2013.

Abstract: The board and card game industry is facing growing pressure from digital games, as video and social media games become more prevalent. Emerging print and media technologies, namely printed electronics and augmented reality, could provide a board and card gaming experience that would draw in gamers who typically play digital games. The expected outcomes of the literature research, industry and market surveys, and subsequent paper are an understanding of the history of games, the current state of the game manufacturing and publishing industry, and attitudes of gamers who would be playing games embedded with the emerging technologies.

Table of Contents: Abstract; Table of Contents; Chapter 1: Purpose of the Study; Significance of the Study; Interest in the Study; Chapter 2: Literature Review; History of Game Art and Production; History of Game Technology; Current Game Art and Production; Current Game Technology; Chapter 3: Research Methodology; Objectives; Samples Studied; Data; Analysis; Chapter 4: Results; Industry Survey; Publishers; Manufacturers; Developers; Print and Substrate Analysis; Chapter 5: Conclusion; References; Appendices; Appendix 1: Survey questions; Appendix 2: Publisher Response; Appendix 3: Publisher Response (Document); Appendix 4: Game Developer Response; Appendix 5: Overview of Industry Survey Results; Appendix 6: Print Analysis Overview; Appendix 7: Gamer Survey Response.

Purpose of the Study: Design, production, and technology have a circular relationship.

Lizengland Resume

Liz England, Toronto, ON. Systems Designer. [email protected]

SKILLS: Almost 15 years of professional design experience in the game industry, and in a large variety of roles. I work best when wrangling huge complex game systems at the high level, and then also implementing and tuning the detail work needed to support that system at the low level. Some more specific skills and areas of design I am looking to explore on my next project(s):
● systems driven games
● procedural generation
● high player agency & expression
● emergent narrative systems
● simulations
● new/experimental tech (i.e. machine learning)
● AI (non-combat)

EXPERIENCE

TEAM LEAD GAME DESIGNER, Ubisoft Toronto, 2016-2021. Watch Dogs Legion / 2020 / PC & Consoles. Design lead on procedural generation and simulation systems involved in the "Play as Anyone" pillar, including: narrative tools and technology development (writing, audio, cinematic, and localization support for procgen characters and dynamic dialogue) and procedurally generated AI (assembling assets/data to generate coherent characters, narrative backstories, relationships, persistent schedules, recruitment systems, and more).

DESIGNER, Insomniac Games, 2010-2016. The Edge of Nowhere and Feral Rites / 2016 / PC & Oculus Rift VR. General design tasks (scripting, level design, story development, puzzle design). Created development guidelines (level design, camera, UI, fx, art) to mitigate simulation sickness. Left prior to shipping. Sunset Overdrive / 2014 / Xbox One. Focused mainly on integrating many large and small systems together to make a smooth open world experience, such as downtime between missions, shared spaces (quest/mission hubs), roll out of content, abilities, and rewards (macro progression), and miscellaneous open world systems such as collectibles, vendors, fast travel, maps, checkpoints/respawn, and player vanity.

Battlefield 3 Psp Iso Download

Battlefield 3 Psp Iso Download. Download Battlefield 3 PSP (.iso) Gaming Rom Free For Android (Mobiles & Tablets) ... @rawatanuj307 can't download it, bro. 0 replies ... Battlefield 3 is a first-person shooter game which has been released in ISO format full version. Please ignore highly compressed due to the ... Download Battlefield 3 PSP iso game on your Android Phones & Tablets and get Ready to Fight in a Epic Battle. Battlefield 3 is a first-person ... Battlefield 3 Psp Iso Download Game (Ppsspp) DLC Xbox360 Battlefield 3 DLC PACK 4PLAYERs Games Direct. battlefield 3 psp iso download ... Battlefield 3, free and safe download. Battlefield 3 latest version: Awesome next generation first-person shooter. download game apk, apk downloader, apk games, coc mod, coc mod apk download, download apk games, battlefield 3 psp iso game apk ... Teen Patti Gold 2.16 Mod Hack Apk Download - weliketoplaygames. ... gta vice city android apk+obb - weliketoplaygames. ... Silent Hill Shattered Memories PSP iso Android (Eng) Game - weliketoplaygames. Those who are loyal fans of the first-person shooter genre will choose Battlefield 3 as one of the super products of this game genre. The game was ... Battlefield 3 (EUR+DLC) PS3 ISO Download for the Sony PlayStation 3/PS3/RPCS3. Game description, information and ISO download page.

Module 2 Roleplaying Games

Module 3: Media Perspectives through Computer Games
Staffan Björk

Module 3 Learning Objectives
■ Describe digital and electronic games using academic game terms
■ Analyze how games are defined by technological affordances and constraints
■ Make use of and combine theoretical concepts of time, space, genre, aesthetics, fiction and gender

Focuses for Module 3
■ Computer Games
■ Effect on gameplay and experience due to the medium used to mediate the game
■ Noticeable things not focused upon
■ Boundaries of games
■ Other uses of games and gameplay
■ Experimental game genres

First: schedule change
■ Lecture moved from Monday to Friday
■ Since literature is presented in it

Literature
■ Arsenault, Dominic and Audrey Larochelle. From Euclidean Space to Albertian Gaze: Traditions of Visual Representation in Games Beyond the Surface. Proceedings of DiGRA 2013: DeFragging Game Studies. 2014. http://www.digra.org/digital-library/publications/from-euclidean-space-to-albertian-gaze-traditions-of-visual-representation-in-games-beyond-the-surface/
■ Gazzard, Alison. Unlocking the Gameworld: The Rewards of Space and Time in Videogames. Game Studies, Volume 11 Issue 1 2011. http://gamestudies.org/1101/articles/gazzard_alison
■ Linderoth, J. (2012). The Effort of Being in a Fictional World: Upkeyings and Laminated Frames in MMORPGs. Symbolic Interaction, 35(4), 474-492.
■ MacCallum-Stewart, Esther. “Take That, Bitches!” Refiguring Lara Croft in Feminist Game Narratives. Game Studies, Volume 14 Issue 2 2014. http://gamestudies.org/1402/articles/maccallumstewart
■ Nitsche, M. (2008). Combining Interaction and Narrative, chapter 5 in Video Game Spaces: Image, Play, and Structure in 3D Worlds, MIT Press, 2008. ProQuest Ebook Central. https://chalmers.instructure.com/files/738674
■ Vella, Daniel. Modelling the Semiotic Structure of Game Characters. ...

Reviews of Selected Books and Articles on Gaming and Simulation. INSTITUTION Rand Corp., Santa Monica, Calif

DOCUMENT RESUME
ED 077 221, EM 011 125
AUTHOR: Shubik, Martin; Brewer, Garry D.
TITLE: Reviews of Selected Books and Articles on Gaming and Simulation.
INSTITUTION: Rand Corp., Santa Monica, Calif.
SPONS AGENCY: Department of Defense, Washington, D.C. Advanced Research Projects Agency.
REPORT NO: R-732-ARPA
PUB DATE: Jun 72
NOTE: 55p.; ARPA Order No.: 189-1
EDRS PRICE: MF-$0.65 HC-$3.29
DESCRIPTORS: *Annotated Bibliographies; Educational Games; *Games; *Game Theory; *Models; *Simulation
IDENTIFIERS: War Games
ABSTRACT: This annotated bibliography represents the initial step taken by the authors to apply standards of excellence to the evaluation of literature in the fields of gaming, simulation, and model-building. It aims at helping persons interested in these subjects deal with the flood of literature on these topics by making value judgments, based on the professional competence of the reviewers, about individual works. Forty-eight books and articles are reviewed, covering the years 1898-1971, although most of the works were published in the past 15 years. The majority of these deal with one of the following topics: 1) general game theory; 2) instructional games and simulation; 3) board and table games, such as chess and dice games; 4) war games; 5) the interpersonal and psychological aspects of gaming; 6) gaming and the behavioral sciences; 7) crisis and conflict; and 8) the application of game theory to political and social policy-making. The reviews range in length from a few sentences to several pages, depending upon the individual article or book.
ARPA ORDER NO.: 189-1. 3D20 Human Resources. R-732-ARPA, June 1972. Reviews of Selected Books and Articles on Gaming and Simulation. Martin Shubik and Garry D. ...

Computer Games as a Reservoir of Tactics and a Tool for Recruiting Virtual Warriors: Armed Assault, ACE, VBS2

COMPUTER GAMES AS A RESERVOIR OF TACTICS AND A TOOL FOR RECRUITING VIRTUAL WARRIORS: ARMED ASSAULT, ACE, VBS2. Dinko Štimac * UDC: 004:793/794 793/794:004 004:355 355:004. Received: 23 October 2013. Accepted: 18 February 2014. Abstract: The text deals with the phenomenon of FPS (first person shooter) games and their use as simulation tools for training soldiers. It concentrates in particular on the game Armed Assault and its mod ACE. It also seeks to develop a preliminary insight into the role of mods in creating ever more precise simulators. The military has recognized the potential of commercial FPS games for precisely simulating the battlefield, especially in terms of using that potential for training units at the platoon level. The text questions certain views according to which playing games makes players blasé and creates an impression of war as bloodless, clean entertainment. It also points to the potential of simulations, in the context of contemporary armed forces of the AVF (all volunteer force) type, to develop a better understanding of the military among at least part of the civilian population, and in that way to increase the oversight that the civilian sector can establish over the professional military sector. Keywords: first person shooter, Virtual Battlespace 2, all volunteer force, simulation, virtual reality, military. INTRODUCTION: FPS (first person shooter) is a genre of computer games, known in its beginnings through games such as Wolfenstein 3D (1992) and Doom (1993), followed by the increasingly advanced and networked Unreal Tournament (1999), Quake III Arena (1999) and the latest current incarnations of the Battlefield and Call of Duty series. Examples of this genre are characterized by an emphasis on the player's predominantly infantry combat engagement against entirely fictional, historical or contemporary opponents, all of it presented through the “eyes of the character” (i.e.