Nerf-Enabled Battlebot with Automated Target Detection Using Multiple Sensing

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Management of Large Sets of Image Data Capture, Databases, Image Processing, Storage, Visualization Karol Kozak

Management of Large Sets of Image Data: Capture, Databases, Image Processing, Storage, Visualization. Karol Kozak. 1st edition. © 2014 Karol Kozak & bookboon.com. ISBN 978-87-403-0726-9. Contents: 1 Digital image; 2 History of digital imaging; 3 Amount of produced images – is it danger?; 4 Digital image and privacy; 5 Digital cameras; 5.1 Methods of image capture; 6 Image formats; 7 Image Metadata – data about data; 8 Interactive visualization (IV); 9 Basic of image processing; 10 Image Processing software; 11 Image management and image databases; 12 Operating system (os) and images; 13 Graphics processing unit (GPU); 14 Storage and archive; 15 Images in different disciplines; 15.1 Microscopy; 15.2 Medical imaging; 15.3 Astronomical images; 15.4 Industrial imaging; 16 Selection of best digital images; References.

First Order Stormtrooper Blaster

First Order Stormtrooper Blaster Review, Nerf, Reddit. First Order Blaster Rifle, Fortnite Wiki, Fandom. What blaster does the First Order use? Star Wars Nerf First Order Stormtrooper Deluxe Blaster. NERF Rival Star Wars Stormtrooper Blaster. The Nerf Rival Star Wars Stormtrooper blaster is a bolt-action, spring-powered Rival blaster. It comes with 7 exclusive First Order colored Rival rounds and a 7. First Order Stormtroopers are regularly put through mental indoctrination and propaganda programs. They are F-11D Blaster Rifles that serve as the standard weapon of the First Order Stormtroopers in the Star Wars sequel trilogy. They can be found at Stormtrooper TIE Fighter Crash Sites. When the AI stormtroopers are killed, they drop the weapon and some Mini Shield Potions. Amazon.co.jp: Rival Nerf Star Wars First Order Stormtrooper. Motorized clip-fed blaster fires 12 darts. GlowStrike technology for light effects and glow-in-the-dark darts. Laser blast sound effects. Includes 12-dart clip an.

Hasbro's NERF Brand Dominates Teen Sports in 2010 with Introduction of Advanced N-STRIKE Blasters and More

January 25, 2010. Hasbro's NERF Brand Dominates Teen Sports in 2010 with Introduction of Advanced N-STRIKE Blasters and More. Special Edition NERF N-STRIKE Blasters Clear the Way for Much-Anticipated N-STRIKE Blaster Launch on 9.9.10. PAWTUCKET, R.I., Jan 25, 2010 (BUSINESS WIRE) -- Hasbro, Inc.'s (NYSE:HAS) NERF brand continues to provide fans with the ultimate in exciting, high-action gear for competitive play in 2010. To celebrate and lead up to the 9.9.10 launch of the newest top secret N-STRIKE blaster, NERF will be transforming five of its most popular N-STRIKE products into Clear Series Special Edition Blasters featuring clear, deco styling. "This year, NERF fans will experience great product advancements across all of the NERF segments and we're especially excited about the introduction of the collectible Clear Series Special Edition Blasters," said Jonathan Berkowitz, director of global marketing, Hasbro. "This new, unique series of blasters is the perfect way to honor some of our most popular N-STRIKE products and to clear the way for the newest blaster landing on 9.9.10!" In addition to the brand's N-STRIKE line advancements, the NERF brand will make waves this year by merging with the hydro-powered action of Hasbro's SUPER SOAKER line to create NERF SUPER SOAKER water blasters. By combining top elements of both brands' high-performance blasters, the new NERF SUPER SOAKER line will redefine and accelerate warm weather play this spring. The brand will also be launching a redesigned NERF SPORTS line featuring new product additions specially designed for competitors of all ages, providing all the intensity, performance, and innovation that the NERF brand is known for.

Worlds Largest Online Retailer Returns - MODESTO - April 5

09/23/21 12:24:24

Worlds Largest Online Retailer Returns - MODESTO - April 5

Auction Opens: Fri, Mar 30 1:42pm PT
Auction Closes: Thu, Apr 5 6:30pm PT

| Lot | Title | Lot | Title |
|---|---|---|---|
| MX0612 | Girls Dress | MX0645 | Samsung Smart things Multipurpose Sensor |
| MX0613 | Mopie Powerstation XXL | MX0646 | Philips Wake up with Light |
| MX0614 | Item | MX0647 | Magnetic Air Vent Mount |
| MX0615 | Bulbs | MX0648 | Shirt |
| MX0616 | New Balance Underwear | MX0649 | Magnetic Window/Dash Mount |
| MX0617 | Loftek Nova Mini Floodlight | MX0650 | Trenta G String |
| MX0618 | Travel Mug | MX0651 | String Skimpie |
| MX0619 | Amazon basic | MX0652 | Willow Tree Statue |
| MX0620 | Lutron Digital Fade Dimmer | MX0653 | Ladies Underwear |
| MX0621 | tp-link Smart Wi Fi Plug | MX0654 | Solar-5 Solar charging |
| MX0622 | Hot Water Bottle | MX0655 | Hanging decor |
| MX0623 | Women's Two Ocean Tunic Shirt | MX0656 | Irwin Drill Press Vise |
| MX0624 | Cable | MX0657 | Chanvi |
| MX0625 | Vivitar Camcorder | MX0658 | Calvin Klein Briefs |
| MX0626 | Otter Defender Series | MX0659 | Lenox Holiday Bath towel |
| MX0627 | Integrated USB Recharging Charger | MX0660 | Aroma Rice Cooker |
| MX0628 | Samsung smart things Multipurpose Sensor | MX0661 | Medical Shoe |
| MX0629 | Lenrue | MX0662 | Outdoor Wall Light |
| MX0630 | Portable Fan Mini Fan | MX0663 | Coffee Mug |
| MX0631 | Kimitech WiFi Smart Outlet | MX0664 | Kids Clothing |
| MX0632 | Acu Rite Weather Forecaster | MX0665 | Baby Bed Item |
| MX0633 | 3M Cool Flow respirator | MX0666 | Westinghouse 1 Light Adjustable Mini Pendant |
| MX0634 | Tascam | MX0667 | Box Lot Various Items |
| MX0635 | Speak Out Game | MX0668 | Item |
| MX0636 | Precious Moments | MX0669 | Mailbox Mounting Bracket |
| MX0637 | Hyper Biotics | MX0670 | Blouse |
| MX0638 | Bag? | MX0671 | Womens Wear |

Sun Mon Tue Wed Thu Fri

[Monthly program calendar, Sunday to Saturday, days 1 to 31; the day-by-day grid did not survive extraction.]

Exhibit, Feb. 11th to March 20th: The interactive exhibit uses games, activities and a fun storyline to help children understand what money is, its function in society, money choices, and money values, such as fairness, responsibility and charitableness. Look for the black outlined programs that will be themed along with the exhibit!

Programs listed: Baby Lapsit, Toddler Storytime, Preschool Storytime, Coding Class, Teen Space: Art Shop, Banking Basics, Beyblades Battle, Family Game Night, Duct Tape Wallets, Reality Store, Teen D&D, Teen DIY: Upcycling, *Homeschool Book Club, *Kids Book Club, LEGO Club, Magic the Gathering, Tween D&D, *KidStir, Making Paper, *Fortnite IRL, STEAM Lab, *LibCrate, Teen D&D Meetup, Giant Board.

Age-range color code: Babies, Toddler, Preschool, Ages 5-8, Ages 9-13, Teen, All Ages, Youth or Family.

Please register for the *starred* programs.

Road Lane Detection for Android

MASARYK UNIVERSITY, FACULTY OF INFORMATICS. Road lane detection for Android. BACHELOR THESIS. Jakub Medvecký-Heretik. Brno, spring 2014.

Declaration: I do hereby declare, that this paper is my original authorial work, which I have worked on by my own. All sources, references and literature used or excerpted during elaboration of this work are properly cited and listed in complete reference to the due source. Jakub Medvecký-Heretik. Advisor: Mgr. Dušan Klinec.

Acknowledgement: I would like to thank my supervisor, Mgr. Dušan Klinec, for his continual support and advice throughout this work. I would also like to thank my family and friends for their support.

Abstract: The aim of this bachelor thesis was to analyze the possibilities of image processing techniques on the Android mobile platform for the purposes of a computer vision application focusing on the problem of road lane detection. Utilizing the results of the analysis, an application was created which detects lines and circles in an image in real time using various approaches to segmentation and different implementations of the Hough transform. The application makes use of the OpenCV library, and the results achieved with its help are compared to the results achieved with my own implementation.

Keywords: computer vision, road lane detection, circle detection, image processing, segmentation, Android, OpenCV, Hough transform.

Contents: 1 Introduction; 2 Computer vision; 2.1 Image processing; 2.2 Techniques; 2.3 Existing libraries; 2.3.1 OpenCV; 2.3.2 VXL; 2.3.3 ImageJ
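The abstract above mentions detecting lines with the Hough transform. As a rough illustration of the voting idea only, here is a minimal pure-Python sketch. It is not the thesis's implementation or OpenCV's; the function name `hough_lines`, the 1-degree angle step, and the integer rounding of rho are all assumptions made for this example.

```python
import math
from collections import Counter


def hough_lines(points, theta_steps=180, top_k=1):
    """Vote in (rho, theta-index) space for a set of (x, y) edge points.

    Each point votes for every line rho = x*cos(theta) + y*sin(theta)
    that passes through it; collinear points pile votes into one cell.
    Hypothetical helper for illustration, not taken from the thesis.
    """
    votes = Counter()
    for x, y in points:
        for t in range(theta_steps):
            theta = math.pi * t / theta_steps  # angle of the line's normal
            rho = round(x * math.cos(theta) + y * math.sin(theta))
            votes[(rho, t)] += 1
    return votes.most_common(top_k)


# Ten edge points on the vertical line x = 5.
points = [(5, y) for y in range(0, 100, 10)]
best = hough_lines(points)
print(best)  # → [((5, 0), 10)]
```

The winning cell `(5, 0)` means rho = 5 at theta index 0 (a normal along the x-axis), which is the line x = 5, supported by all ten points. A real-time application would typically run this on the output of an edge detector and use a finer, thresholded accumulator.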

Pkgid CATEGORYDESC DESCRIPTION QTY Weight

| PkgID | CATEGORYDESC | DESCRIPTION | QTY | Weight | Retail Price |
|---|---|---|---|---|---|
| Pallet 1 | All Other | Shepperton Design Studios Original Stormtrooper Battle Spec Full Armour | 1 | 6659.99 gr | € 599.00 |
| Pallet 1 | Outdoor & Sports Toys | Toimsa 732 EN71 12-Inch "Cars" Bicycle | 1 | 9380.28 gr | € 136.35 |
| Pallet 1 | | Caretero Bugies Babyschaukel Babywippe Schaukelwippe drehbarer Sitz Timer elektronisches Mobile mit Lichern | 1 | 11039.99 gr | € 126.26 |
| Pallet 1 | Games & Puzzles | HUDORA Big Wheel Scooter Air 230 - Roller Luftreifen, 14031 | 1 | 6000 gr | € 115.97 |
| Pallet 1 | | KidKraft Wooden Dolls house Savannah | 1 | 17779.99 gr | € 110.00 |
| Pallet 1 | Outdoor & Sports Toys | Dino Bikes Frozen-Fahrrad, 12 Zoll, 126RL-FZ | 1 | 6799.93 gr | € 109.98 |
| Pallet 1 | Plein Air | Smoby - 311304 - Tefal Cuisine Super Chef deluxe - Jeu d'Imitation - Multi-Fonctions - Module Electronique - + 46 Accessoires - Rouge | 1 | 10039.99 gr | € 99.38 |
| Pallet 1 | Building Sets | LEGO Star Wars - Juego de construcción BB-8 (75187) | 1 | 1460 gr | € 84.57 |
| Pallet 1 | Outdoor & Sports Toys | Goki Ride-On-tractor with Trailer | 1 | 6699.93 gr | € 83.58 |
| Pallet 1 | Dolls | Mattel Barbie DLY32 - Barbie 3 Etagen Stadthaus | 1 | 5080 gr | € 79.98 |
| Pallet 1 | Construction Et Maquettes | LEGO - 75179 - Star Wars - Jeu de construction - Kylo Ren’s TIE Fighter | 1 | 1080 gr | € 61.99 |
| Pallet 1 | Déguisements Et Imitations | Cesar - F450-002 - Déguisement Ninja - 5/7 Ans | 1 | 1220 gr | € 58.10 |
| Pallet 1 | 1er Age | Smoby - 110211 - Cotoons Cosy Seat - Rose | 1 | 1620 gr | € 49.91 |
| Pallet 1 | All Other | Simba 109251003 - Feuerwehrmann Sam Bergrettung mit Figur | 1 | 1740 gr | € 47.44 |
| Pallet 1 | Vehicles | Revell - Kit per modellismo “HMCS | | | |

André Vilas Boas da Costa. RetScan: Efficient Fovea and Optic Disc Detection in Retinographies

Universidade do Minho, Escola de Engenharia, Departamento de Informática. André Vilas Boas da Costa. RetScan: Efficient Fovea and Optic Disc Detection in Retinographies. Master's dissertation, Master's in Informatics. Work carried out under the supervision of Alberto José Proença. October 2011.

Abstract: The Fovea and Optic Disc are relevant anatomical eye structures to diagnose various diseases. Their automatic detection can provide both a cost reduction when analysing large populations and improve the effectiveness of ophthalmologists and optometrists. This dissertation describes a methodology to automatically detect these structures and analyses a CPU-only MATLAB implementation of this methodology. RetScan is a port of this methodology to a freeware environment; its functionality and performance are evaluated and compared to the original. The results of both evaluations lead to a discussion on possible improvements in the methodology that influence the functionality and performance. The resulting improvements are implemented and integrated in RetScan. To further improve performance, a parallelization of RetScan to take advantage of a multi-core architecture or a CUDA-enabled accelerator was designed, coded and evaluated. This evaluation reveals that RetScan achieves its best throughput efficiency using a multi-core architecture only and analysing several images at once. For one image usage, using multi-core only is also the best solution, but with a small speed-up. The usage of CUDA-enabled accelerators is not recommended for this scope as the images are small and the cost of the data transfer to and from the accelerator has a severe impact on performance.

Hasbro 2021 Investor Event Transcript

REFINITIV STREETEVENTS EDITED TRANSCRIPT
HAS.OQ - Hasbro Inc Investor Event 2021
EVENT DATE/TIME: FEBRUARY 25, 2021 / 3:00PM GMT

©2021 Refinitiv. All rights reserved. Republication or redistribution of Refinitiv content, including by framing or similar means, is prohibited without the prior written consent of Refinitiv. 'Refinitiv' and the Refinitiv logo are registered trademarks of Refinitiv and its affiliated companies.

CORPORATE PARTICIPANTS
Brian D. Goldner, Hasbro, Inc. - Chairman & CEO
Casey Collins, Hasbro, Inc. - Senior VP & GM of Consumer Products
Chris Cocks, Wizards of the Coast LLC - President
Darren Dennis Throop, Entertainment One Ltd. - CEO, President & Director
Debbie Hancock, Hasbro, Inc. - SVP of IR
Deborah M. Thomas, Hasbro, Inc. - Executive VP & CFO
Eric C. Nyman, Hasbro, Inc. - Chief Consumer Officer
Kathrin Belliveau, Hasbro, Inc. - Senior VP & Chief Purpose Officer
Kim Boyd, Hasbro, Inc. - Senior Vice President & General Manager of Global Brands
Olivier Dumont, Entertainment One Ltd. - President of Family Brands
Steve Bertram, Entertainment One Ltd. - President of Film & Television

CONFERENCE CALL PARTICIPANTS
Arpine Kocharyan, UBS Investment Bank, Research Division - Director and Analyst
David James Beckel, Joh. Berenberg, Gossler & Co. KG, Research Division - Analyst
Eric Owen Handler, MKM Partners LLC, Research Division - MD, Sector Head & Senior Analyst
Frederick Charles Wightman, Wolfe Research, LLC

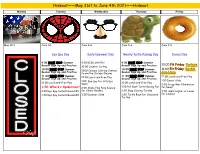

Hideout: May 31st to June 4th, 2021

Hideout, May 31st to June 4th, 2021 (Monday May 31st, Tuesday June 1st, Wednesday June 2nd, Thursday June 3rd, Friday June 4th). [Weekly schedule grid; the day-by-day column layout did not survive extraction.]

Themes: Eye Spy Day, Early Eyewear Day, Nearly Turtle Racing Day, Donut Day.

Activities listed:
9:00 Summer Season Sign Up and Practice
9:00 RISK with Phil
10:00 Summer Season Sign Up and Practice
10:00 Fit Friday: Pushups
10:00 Counter Curling
11:00 Summer Season Sign Up and Practice
11:00 Fit Friday: Double Arm Hang
11:00 Cyclops Coloring Contest to win the Cyclops Glasses
12:00 Lunch and Free Play
1:00 Eye Spy for Silly Eye Wear
1:00 Donut Slide
1:00 Where’s Spiderman?
1:00 Pull Back Turtle Racing
2:00 Group War Elimination Fun for Donut
2:00 Slinky Ping Pong Bounce for Slinky Glasses
2:00 Eye Spy Contest Round #1
2:00 Soap Carving Turtles
3:00 Giant Higher or Lower for a Donut
3:00 Eye Spy Contest Round #2
3:00 Eyewear Slide
3:00 Turtle Race for Chocolate Turtles

Hideout, June 7th to June 11th, 2021 (Monday June 7th, Tuesday June 8th, Wednesday June 9th, Thursday June 10th, Friday June 11th). Week of WWE, every day.

Activities listed:
9:00, 10:00 and 11:00 Tournament for Cadets
9:00, 10:00 and 11:00 Tournament for Minors
9:00, 10:00 and 11:00 Tournament for Juniors
9:00, 10:00 and 11:00 Tournament for Intermediates and Seniors
10:00 Fit Friday: Pushups
11:00 Fit Friday: Double Arm Hang
12:00 Lunch and Free Play
1:00 Come and watch WWE Wrestling in the Hideout.

Hasbro's Iconic NERF Brand Celebrates Its 40th Anniversary

February 13, 2009. It's NERF or Nothin'! Hasbro's Iconic NERF Brand Celebrates Its 40th Anniversary. NERF to be a Sponsor of the 2009 Dew Tour and Activate NERF DART TAG World Championship in Festival Village. PAWTUCKET, R.I.--(BUSINESS WIRE)--Feb. 13, 2009-- Whether it’s partnering with some of the most notable athletes of the day or bringing a no-holds-barred blaster experience to the video game environment, Hasbro, Inc.’s (NYSE: HAS) NERF brand continues to redefine both the sports and toy world. In 2009, the NERF brand will celebrate 40 years of incredible growth; from simple beginnings as an orange foam ball, NERF now encompasses an intense product lineup and impressive athletic alliances. The NERF brand continues looking forward by signing on as a sponsor of the 2009 Dew Tour, the world’s premier season-long action sports tour. As part of the Dew Tour’s award-winning Festival Village, the first NERF DART TAG World Championship will offer fans and consumers an alternative competitive sporting environment unique to NERF. More details to come on how Dew Tour and NERF fans can enter and compete in the NERF DART TAG World Championship. “After 40 incredible years, we’re extremely proud to call NERF one of the most iconic sports brands in history and the #1 toy brand for boys ages 8-12,” said Jonathan Berkowitz, Global Brand Director for NERF at Hasbro. “As we enter our 40th year, we see the NERF DART TAG World Championship and our sponsorship of the Dew Tour as a great milestone in the brand’s history, strengthening our presence in both the classic and next-generation sports arenas as well as encouraging players and enthusiasts to raise awareness of the sport of DART TAG.” The NERF brand joins a blue-chip roster of sponsors that include Mountain Dew, Wendy’s, Toyota, PlayStation, and others for the 2009 Dew Tour season.

POW! Is Pleased to Announce the December 2013 Release of NERF: The Ultimate Blaster Book by Nathaniel Marunas

November 20, 2013 POW! is pleased to announce the December 2013 release of NERF: The Ultimate Blaster Book by Nathaniel Marunas Celebrating generations of foam power and propulsion systems, POW!, an imprint of powerHouse Books, is delighted to announce NERF: The Ultimate Blaster Book. The first-ever official NERF blaster book produced under license from Hasbro, Inc.'s epic NERF franchise, this is a stunning visual guide to the iconic blasters and a brand synonymous with action and fun. Pore over these pages to discover the amazing spectrum of NERF blaster designs and accessories, from the very first NERF product, a simple, orange foam ball developed nearly a half-century ago, to the birth of the blaster in 1989, to increasingly sophisticated models like the innovative N-Strike Elite series, which began in 2012, and ending with a sneak peek at the blasters of the future! NERF: The Ultimate Blaster Book is a comprehensive and exhilarating tour of NERF blasters, the popular action toys. Jam-packed with striking photos and intriguing facts, this hardcover, full-color collector's encyclopedia is heavily detailed with high-energy spreads, an array of technical specifications (range, capacity, propulsion type, and more), and callouts explaining special features, as well as the in-depth history behind each blaster evolution. Taking this lavishly illustrated book to the next level, six special edition, collectible darts are included in the front cover of every book. For kid and adult enthusiasts alike, this is the ultimate NERF must-have! NERF: The Ultimate Blaster Book will be available to purchase beginning November 19.