Polytopes Course Notes

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Geometry of Generalized Permutohedra

Geometry of Generalized Permutohedra, by Jeffrey Samuel Doker. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Mathematics in the Graduate Division of the University of California, Berkeley. Committee in charge: Federico Ardila, Co-chair; Lior Pachter, Co-chair; Matthias Beck; Bernd Sturmfels; Lauren Williams; Satish Rao. Fall 2011. Copyright 2011 by Jeffrey Samuel Doker.

Abstract. We study generalized permutohedra and some of the geometric properties they exhibit. We decompose matroid polytopes (and several related polytopes) into signed Minkowski sums of simplices and compute their volumes. We define the associahedron and multiplihedron in terms of trees and show them to be generalized permutohedra. We also generalize the multiplihedron to a broader class of generalized permutohedra, and describe their face lattices, vertices, and volumes. A family of interesting polynomials that we call composition polynomials arises from the study of multiplihedra, and we analyze several of their surprising properties. Finally, we look at generalized permutohedra of different root systems and study the Minkowski sums of faces of the crosspolytope.

To Joe and Sue.

Contents. List of Figures. 1 Introduction. 2 Matroid polytopes and their volumes: 2.1 Introduction; 2.2 Matroid polytopes are generalized permutohedra; 2.3 The volume of a matroid polytope; 2.4 Independent set polytopes; 2.5 Truncation flag matroids. 3 Geometry and generalizations of multiplihedra: 3.1 Introduction

Lecture 3 1 Geometry of Linear Programs

ORIE 6300 Mathematical Programming I, September 2, 2014. Lecture 3. Lecturer: David P. Williamson. Scribe: Divya Singhvi.

Last time we discussed how to take the dual of an LP in two different ways. Today we will talk about the geometry of linear programs.

1 Geometry of Linear Programs

First we need some definitions.

Definition 1. A set $S \subseteq \mathbb{R}^n$ is convex if $\forall x, y \in S$, $\lambda x + (1 - \lambda) y \in S$, $\forall \lambda \in [0, 1]$.

Figure 1: Examples of convex and non convex sets.

Given a set of inequalities we define the feasible region as $P = \{x \in \mathbb{R}^n : Ax \le b\}$. We say that $P$ is a polyhedron. Which points on this figure can have the optimal value? Our intuition from last time is that optimal solutions to linear programming problems occur at "corners" of the feasible region. What we'd like to do now is to consider formal definitions of the "corners" of the feasible region.

Figure 2: Example of a polyhedron. "Circled" corners are feasible and "squared" are non feasible.

One idea is that a point in the polyhedron is a corner if there is some objective function that is minimized there uniquely.

Definition 2. $x \in P$ is a vertex of $P$ if $\exists c \in \mathbb{R}^n$ with $c^T x < c^T y$, $\forall y \ne x$, $y \in P$.

Another idea is that a point $x \in P$ is a corner if there are no small perturbations of $x$ that are in $P$.

Definition 3. Let $P$ be a convex set in $\mathbb{R}^n$. Then $x \in P$ is an extreme point of $P$ if $x$ cannot be written as $\lambda y + (1 - \lambda) z$ for $y, z \in P$, $y, z \ne x$, $0 \le \lambda \le 1$.
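The definitions in this excerpt can be tried out on a small example. The following is a minimal sketch, assuming the unit square written as a polyhedron {x : Ax ≤ b}; the matrix `A`, vector `b`, the helper names and the objective `c` are illustrative choices and do not come from the lecture notes. It checks that convex combinations of two feasible points stay feasible (Definition 1), and that one objective has a unique minimizer among the four corners, which is only a sanity check of Definition 2 rather than a proof.

```python
from itertools import product


def in_polyhedron(A, b, x, tol=1e-9):
    """Return True if A x <= b holds row by row (up to a small tolerance)."""
    return all(
        sum(a_ij * x_j for a_ij, x_j in zip(row, x)) <= b_i + tol
        for row, b_i in zip(A, b)
    )


def convex_combination(x, y, lam):
    """Return lam * x + (1 - lam) * y for 0 <= lam <= 1."""
    return [lam * xi + (1 - lam) * yi for xi, yi in zip(x, y)]


# Hypothetical example: the unit square 0 <= x1 <= 1, 0 <= x2 <= 1.
A = [[1, 0], [-1, 0], [0, 1], [0, -1]]
b = [1, 0, 1, 0]

# Definition 1: every sampled point on the segment between two
# feasible points is again feasible.
x, y = [0.0, 0.0], [1.0, 1.0]
segment = [convex_combination(x, y, k / 10) for k in range(11)]
all_inside = all(in_polyhedron(A, b, p) for p in segment)

# Definition 2 (sanity check on corners only): the objective c = (1, 1)
# attains its smallest value at exactly one corner, namely (0, 0).
c = (1, 1)
corners = list(product([0, 1], repeat=2))
values = {v: c[0] * v[0] + c[1] * v[1] for v in corners}
best = min(values.values())
minimizers = [v for v, val in values.items() if val == best]
```

Sampling a segment cannot prove convexity; it only illustrates what the definition asks for.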

Two Poset Polytopes

Discrete Comput Geom 1:9-23 (1986). Discrete & Computational Geometry, © 1986 Springer-Verlag New York Inc. Two Poset Polytopes. Richard P. Stanley*, Department of Mathematics, Massachusetts Institute of Technology, Cambridge, MA 02139.

Abstract. Two convex polytopes, called the order polytope $\mathcal{O}(P)$ and chain polytope $\mathcal{C}(P)$, are associated with a finite poset $P$. There is a close interplay between the combinatorial structure of $P$ and the geometric structure of $\mathcal{O}(P)$. For instance, the order polynomial $\Omega(P, m)$ of $P$ and Ehrhart polynomial $i(\mathcal{O}(P), m)$ of $\mathcal{O}(P)$ are related by $\Omega(P, m+1) = i(\mathcal{O}(P), m)$. A "transfer map" then allows us to transfer properties of $\mathcal{O}(P)$ to $\mathcal{C}(P)$. In particular, we transfer known inequalities involving linear extensions of $P$ to some new inequalities.

1. The Order Polytope

Our aim is to investigate two convex polytopes associated with a finite partially ordered set (poset) $P$. The first of these, which we call the "order polytope" and denote by $\mathcal{O}(P)$, has been the subject of considerable scrutiny, both explicit and implicit. Much of what we say about the order polytope will be essentially a review of well-known results, albeit ones scattered throughout the literature, sometimes in a rather obscure form. The second polytope, called the "chain polytope" and denoted $\mathcal{C}(P)$, seems never to have been previously considered per se. It is a special case of the vertex-packing polytope of a graph (see Section 2) but has many special properties not in general valid or meaningful for graphs.
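The relation between the order polynomial and the Ehrhart polynomial stated in the abstract can be checked by brute force on a small poset. This is a minimal sketch under stated assumptions: it uses the standard descriptions of the order polytope (points f in [0, 1]^P with f(a) ≤ f(b) whenever a ≤ b in P) and of the order polynomial (the number of order-preserving maps from P into {1, ..., m}), neither of which is spelled out in the excerpt. The three-element poset and all function names are hypothetical.

```python
from itertools import product


def is_order_preserving(f, relations):
    """f is a tuple of values indexed by poset element; relations lists pairs a < b."""
    return all(f[a] <= f[b] for a, b in relations)


def order_polynomial(n, relations, m):
    """Number of order-preserving maps from an n-element poset into {1, ..., m}."""
    return sum(
        is_order_preserving(f, relations)
        for f in product(range(1, m + 1), repeat=n)
    )


def ehrhart_count(n, relations, m):
    """Number of integer points in the m-th dilate of the order polytope:
    integer vectors f with 0 <= f <= m and f(a) <= f(b) for a < b."""
    return sum(
        is_order_preserving(f, relations)
        for f in product(range(0, m + 1), repeat=n)
    )


# Hypothetical poset on {0, 1, 2} with 0 < 1 and 0 < 2 (a "V" shape).
n = 3
relations = [(0, 1), (0, 2)]

# Compare Omega(P, m + 1) with i(O(P), m) for a few small m.
checks = [
    (order_polynomial(n, relations, m + 1), ehrhart_count(n, relations, m))
    for m in range(5)
]
```

Both counts enumerate the same maps up to a shift of the values by one, which is why the pairs in `checks` agree.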

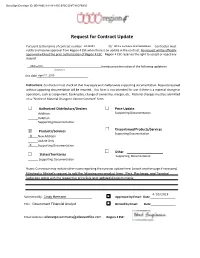

Request for Contract Update

DocuSign Envelope ID: 0E48A6C9-4184-4355-B75C-D9F791DFB905. Request for Contract Update. Pursuant to the terms of contract number R142201 for Office Furniture and Installation, Contractor must notify and receive approval from Region 4 ESC when there is an update in the contract. No request will be officially approved without the prior authorization of Region 4 ESC. Region 4 ESC reserves the right to accept or reject any request. Allsteel Inc. (Contractor) hereby provides notice of the following update on this date: April 17, 2019. Instructions: Contractor must check all that may apply and shall provide supporting documentation. Requests received without supporting documentation will be returned. This form is not intended for use if there is a material change in operations, such as assignment, bankruptcy, change of ownership, merger, etc. Material changes must be submitted on a “Notice of Material Change to Vendor Contract” form. Authorized Distributors/Dealers Price Update Addition Supporting Documentation Deletion Supporting Documentation Discontinued Products/Services X Products/Services Supporting Documentation X New Addition Update Only X Supporting Documentation Other States/Territories Supporting Documentation Supporting Documentation Notes: Contractor may include other notes regarding the contract update here (attach another page if necessary). Attached is Allsteel's request to add the following new product lines: Park, Recharge, and Townhall Collection along with the respective price

On the Circuit Diameter Conjecture

On the Circuit Diameter Conjecture. Steffen Borgwardt (University of Colorado Denver), Tamon Stephen and Timothy Yusun (Simon Fraser University, {tamon,tyusun}@sfu.ca).

Abstract. From the point of view of optimization, a critical issue is relating the combinatorial diameter of a polyhedron to its number of facets f and dimension d. In the seminal paper of Klee and Walkup [KW67], the Hirsch conjecture of an upper bound of f − d was shown to be equivalent to several seemingly simpler statements, and was disproved for unbounded polyhedra through the construction of a particular 4-dimensional polyhedron U4 with 8 facets. The Hirsch bound for bounded polyhedra was only recently disproved by Santos [San12]. We consider analogous properties for a variant of the combinatorial diameter called the circuit diameter. In this variant, the walks are built from the circuit directions of the polyhedron, which are the minimal non-trivial solutions to the system defining the polyhedron. We are able to prove that circuit variants of the so-called non-revisiting conjecture and d-step conjecture both imply the circuit analogue of the Hirsch conjecture. For the equivalences in [KW67], the wedge construction was a fundamental proof technique. We exhibit why it is not available in the circuit setting, and what the implications are of losing it as a tool. Further, we show the circuit analogue of the non-revisiting conjecture implies a linear bound on the circuit diameter of all unbounded polyhedra, in contrast to what is known for the combinatorial diameter. Finally, we give two proofs of a circuit version of the 4-step conjecture.

7 LATTICE POINTS and LATTICE POLYTOPES Alexander Barvinok

7 LATTICE POINTS AND LATTICE POLYTOPES. Alexander Barvinok

INTRODUCTION

Lattice polytopes arise naturally in algebraic geometry, analysis, combinatorics, computer science, number theory, optimization, probability and representation theory. They possess a rich structure arising from the interaction of algebraic, convex, analytic, and combinatorial properties. In this chapter, we concentrate on the theory of lattice polytopes and only sketch their numerous applications. We briefly discuss their role in optimization and polyhedral combinatorics (Section 7.1). In Section 7.2 we discuss the decision problem, the problem of finding whether a given polytope contains a lattice point. In Section 7.3 we address the counting problem, the problem of counting all lattice points in a given polytope. The asymptotic problem (Section 7.4) explores the behavior of the number of lattice points in a varying polytope (for example, if a dilation is applied to the polytope). Finally, in Section 7.5 we discuss problems with quantifiers. These problems are natural generalizations of the decision and counting problems. Whenever appropriate we address algorithmic issues. For general references in the area of computational complexity/algorithms see [AB09]. We summarize the computational complexity status of our problems in Table 7.0.1.

TABLE 7.0.1 Computational complexity of basic problems.

| PROBLEM NAME | BOUNDED DIMENSION | UNBOUNDED DIMENSION |
| --- | --- | --- |
| Decision problem | polynomial | NP-hard |
| Counting problem | polynomial | #P-hard |
| Asymptotic problem | polynomial | #P-hard∗ |
| Problems with quantifiers | unknown; polynomial for ∀∃ ∗∗ | NP-hard |

∗ in bounded codimension, reduces polynomially to volume computation

∗∗ with no quantifier alternation, polynomial time

7.1 INTEGRAL POLYTOPES IN POLYHEDRAL COMBINATORICS

We describe some combinatorial and computational properties of integral polytopes.
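The counting problem and the effect of a dilation can be illustrated on a very small case. The sketch below is an assumption-laden toy, not an algorithm from the chapter: it counts lattice points by brute force in the t-th dilate of the triangle with vertices (0, 0), (1, 0), (0, 1), and compares the result with the closed form (t + 1)(t + 2)/2 for that triangle. The function names are hypothetical.

```python
def count_lattice_points(t):
    """Count integer points (x, y) with x >= 0, y >= 0 and x + y <= t,
    i.e. the lattice points of the t-th dilate of the standard triangle."""
    return sum(
        1
        for x in range(t + 1)
        for y in range(t + 1)
        if x + y <= t
    )


def closed_form(t):
    """Known count for this particular triangle: (t + 1)(t + 2) / 2."""
    return (t + 1) * (t + 2) // 2


# Tabulate the brute-force count against the closed form for small dilations.
table = [(t, count_lattice_points(t), closed_form(t)) for t in range(6)]
```

Brute force takes time proportional to the number of candidate points, so it says nothing about the polynomial-time results summarized in the table; it only makes the objects being counted concrete.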

Computational Design Framework for 3D Graphic Statics

Computational Design Framework for 3D Graphic Statics. Juney Lee. ETH Zurich, PhD Dissertation No. 25526 (Diss. ETH No. 25526). DOI: 10.3929/ethz-b-000331210.

A thesis submitted to attain the degree of Doctor of Sciences of ETH Zurich (Dr. sc. ETH Zurich), presented by Juney Lee, 2015 ITA Architecture & Technology Fellow. Supervisor: Prof. Dr. Philippe Block. Technical supervisor: Dr. Tom Van Mele. Co-advisors: Hon. D.Sc. William F. Baker, Prof. Allan McRobie. PhD defended on October 10th, 2018. Degree confirmed at the Department Conference on December 5th, 2018. Printed in June, 2019.

For my parents who made me, for Dahmi who raised me, and for Seung-Jin who completed me.

Acknowledgements. I am forever indebted to the Block Research Group, which is truly greater than the sum of its diverse and talented individuals. The camaraderie, respect and support that every member of the group has for one another were paramount to the completion of this dissertation. I sincerely thank the current and former members of the group who accompanied me through this journey from close and afar. I will cherish the friendships I have made within the group for the rest of my life. I am tremendously thankful to the two leaders of the Block Research Group, Prof. Dr. Philippe Block and Dr. Tom Van Mele. This dissertation would not have been possible without my advisor Prof. Block and his relentless enthusiasm, creative vision and inspiring mentorship.


The Geometry of Nim arXiv:1109.6712v1 [math.CO] 30

The Geometry of Nim. Kevin Gibbons.

Abstract. We relate the Sierpinski triangle and the game of Nim. We begin by defining both a new high-dimensional analog of the Sierpinski triangle and a natural geometric interpretation of the losing positions in Nim, and then, in a new result, show that these are equivalent in each finite dimension.

0 Introduction

The Sierpinski triangle (fig. 1) is one of the most recognizable figures in mathematics, and with good reason. It appears in everything from Pascal's Triangle to Conway's Game of Life. In fact, it has already been seen to be connected with the game of Nim, albeit in a very different manner than the one presented here [3]. A number of analogs have been discovered, such as the Menger sponge (fig. 2) and a three-dimensional version called a tetrix. We present, in the first section, a generalization in higher dimensions differing from the more typical simplex generalization. Rather, we define a discrete Sierpinski demihypercube, which in three dimensions coincides with the simplex generalization. In the second section, we briefly review Nim and the theory behind optimal play. As in all impartial games (games in which the possible moves depend only on the state of the game, and not on which of the two players is moving), all possible positions can be divided into two classes: those in which the next player to move can force a win, called N-positions, and those in which regardless of what the next player does the other player can force a win, called P-positions.
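The split into N-positions and P-positions can be computed directly from the recursive description in the excerpt. This is a minimal sketch, assuming normal play (the player who takes the last object wins) and positions encoded as sorted tuples of heap sizes; both choices and all names are illustrative. It then compares the result with the classical criterion, due to Bouton, that a Nim position is a P-position exactly when the bitwise XOR of the heap sizes is zero.

```python
from functools import lru_cache, reduce
from itertools import product
from operator import xor


@lru_cache(maxsize=None)
def is_p_position(heaps):
    """heaps is a sorted tuple of heap sizes.

    A position is a P-position when every available move leads to an
    N-position; the empty position (no moves left) is therefore a P-position.
    """
    for i, h in enumerate(heaps):
        for smaller in range(h):  # shrink heap i to any strictly smaller size
            nxt = tuple(sorted(heaps[:i] + (smaller,) + heaps[i + 1:]))
            if is_p_position(nxt):
                return False  # a move to a P-position exists, so this is an N-position
    return True


def nim_sum(heaps):
    """Bitwise XOR of all heap sizes."""
    return reduce(xor, heaps, 0)


# Compare the recursive classification with the XOR criterion
# on all three-heap positions with heap sizes up to 5.
agree = all(
    is_p_position(tuple(sorted(h))) == (nim_sum(h) == 0)
    for h in product(range(6), repeat=3)
)
```

For example, (1, 2, 3) has XOR zero and is a P-position, while (1, 2, 4) is an N-position.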

1 Lifts of Polytopes

Lecture 5: Lifts of polytopes and non-negative rank. CSE 599S: Entropy optimality, Winter 2016. Instructor: James R. Lee. Last updated: January 24, 2016.

1 Lifts of polytopes

1.1 Polytopes and inequalities

Recall that the convex hull of a subset $X \subseteq \mathbb{R}^n$ is defined by

$$\mathrm{conv}(X) = \{\lambda x + (1 - \lambda) x' : x, x' \in X, \ \lambda \in [0, 1]\}.$$

A d-dimensional convex polytope $P \subseteq \mathbb{R}^d$ is the convex hull of a finite set of points in $\mathbb{R}^d$:

$$P = \mathrm{conv}(\{x_1, \ldots, x_k\})$$

for some $x_1, \ldots, x_k \in \mathbb{R}^d$.

Every polytope has a dual representation: It is a closed and bounded set defined by a family of linear inequalities

$$P = \{x \in \mathbb{R}^d : Ax \le b\}$$

for some matrix $A \in \mathbb{R}^{m \times d}$.

Let us define a measure of complexity for $P$: Define $\gamma(P)$ to be the smallest number $m$ such that for some $C \in \mathbb{R}^{s \times d}$, $y \in \mathbb{R}^s$, $A \in \mathbb{R}^{m \times d}$, $b \in \mathbb{R}^m$, we have

$$P = \{x \in \mathbb{R}^d : Cx = y \text{ and } Ax \le b\}.$$

In other words, this is the minimum number of inequalities needed to describe $P$. If $P$ is full-dimensional, then this is precisely the number of facets of $P$ (a facet is a maximal proper face of $P$). Thinking of $\gamma(P)$ as a measure of complexity makes sense from the point of view of optimization: Interior point methods can efficiently optimize linear functions over $P$ (to arbitrary accuracy) in time that is polynomial in $\gamma(P)$.

1.2 Lifts of polytopes

Many simple polytopes require a large number of inequalities to describe.
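One way to see how the two representations of a polytope can differ in size is the cross-polytope, the unit ball of the ℓ1 norm. The choice of this example is an assumption made here for illustration and is not taken from the excerpt. The sketch below builds its 2d vertices and its 2^d facet inequalities (one inequality s · x ≤ 1 for each sign vector s) and checks on a grid that the inequalities describe exactly the points with |x_1| + ... + |x_d| ≤ 1. All function names are hypothetical.

```python
from itertools import product


def cross_polytope_vertices(d):
    """The 2d points +e_i and -e_i whose convex hull is the cross-polytope."""
    vertices = []
    for i in range(d):
        for sign in (1, -1):
            v = [0] * d
            v[i] = sign
            vertices.append(v)
    return vertices


def cross_polytope_inequalities(d):
    """One row s per sign vector in {-1, 1}^d, encoding s . x <= 1."""
    A = [list(signs) for signs in product((-1, 1), repeat=d)]
    b = [1] * len(A)
    return A, b


def satisfies(A, b, x, tol=1e-12):
    """Return True if A x <= b holds row by row."""
    return all(
        sum(a * xi for a, xi in zip(row, x)) <= bi + tol
        for row, bi in zip(A, b)
    )


d = 3
A, b = cross_polytope_inequalities(d)
vertices = cross_polytope_vertices(d)

# Grid check: the inequality description agrees with the l1-norm test.
grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
matches_l1_ball = all(
    satisfies(A, b, x) == (sum(abs(xi) for xi in x) <= 1)
    for x in product(grid, repeat=d)
)
```

Here the vertex description grows linearly in d while the inequality description grows exponentially, which is the kind of gap that motivates measuring complexity by the number of inequalities.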

Convex and Linear Orientations of Polytopal Graphs

Discrete Comput Geom OF1–OF15 (2000). DOI: 10.1007/s004540010046. Discrete & Computational Geometry, © 2000 Springer-Verlag New York Inc. Convex and Linear Orientations of Polytopal Graphs. J. Mihalisin and V. Klee, Department of Mathematics, University of Washington, Box 354350, Seattle, WA 98195-4350, USA. {mihalisi,klee}@math.washington.edu

Abstract. This paper examines directed graphs related to convex polytopes. For each fixed d-polytope and any acyclic orientation of its graph, we prove there exist both convex and concave functions that induce the given orientation. For each combinatorial class of 3-polytopes, we provide a good characterization of the orientations that are induced by an affine function acting on some member of the class.

Introduction

A graph is d-polytopal if it is isomorphic to the graph G(P) formed by the vertices and edges of some (convex) d-polytope P. As the term is used here, a digraph is d-polytopal if it is isomorphic to a digraph that results when the graph G(P) of some d-polytope P is oriented by means of some affine function on P. 3-Polytopes and their graphs have been objects of research since the time of Euler. The most important result concerning 3-polytopal graphs is the theorem of Steinitz [SR], [Gr1], asserting that a graph is 3-polytopal if and only if it is planar and 3-connected. Also important is the related fact that the combinatorial type (i.e., the entire face-lattice) of a 3-polytope P is determined by the graph G(P). Steinitz’s theorem has been very useful in studying the combinatorial structure of 3-polytopes because it makes it easy to recognize the 3-polytopality of a graph and to construct graphs that represent 3-polytopes without producing an explicit geometric realization.

Understanding the Formation of PbSe Honeycomb Superstructures by Dynamics Simulations

PHYSICAL REVIEW X 9, 021015 (2019). Understanding the Formation of PbSe Honeycomb Superstructures by Dynamics Simulations. Giuseppe Soligno* and Daniel Vanmaekelbergh, Condensed Matter and Interfaces, Debye Institute for Nanomaterials Science, Utrecht University, Princetonplein 1, Utrecht 3584 CC, Netherlands. (Received 28 October 2018; revised manuscript received 28 January 2019; published 23 April 2019)

Using a coarse-grained molecular dynamics model, we simulate the self-assembly of PbSe nanocrystals (NCs) adsorbed at a flat fluid-fluid interface. The model includes all key forces involved: NC-NC short-range facet-specific attractive and repulsive interactions, entropic effects, and forces due to the NC adsorption at fluid-fluid interfaces. Realistic values are used for the input parameters regulating the various forces. The interface-adsorption parameters are estimated using a recently introduced sharp-interface numerical method which includes capillary deformation effects. We find that the final structure in which the NCs self-assemble is drastically affected by the input values of the parameters of our coarse-grained model. In particular, by slightly tuning just a few parameters of the model, we can induce NC self-assembly into either silicene-honeycomb superstructures, where all NCs have a {111} facet parallel to the fluid-fluid interface plane, or square superstructures, where all NCs have a {100} facet parallel to the interface plane. Both of these nanostructures have been observed experimentally. However, their formation mechanism is still not clear, and in particular it is not known which factors direct the NC self-assembly into one structure or the other. In this work, we identify and quantify such factors, showing illustrative assembled-phase diagrams obtained from our simulations.

Archimedean Solids

University of Nebraska - Lincoln, DigitalCommons@University of Nebraska - Lincoln. MAT Exam Expository Papers, Math in the Middle Institute Partnership, 7-2008. Archimedean Solids. Anna Anderson, University of Nebraska-Lincoln. Follow this and additional works at: https://digitalcommons.unl.edu/mathmidexppap. Part of the Science and Mathematics Education Commons. Anderson, Anna, "Archimedean Solids" (2008). MAT Exam Expository Papers. 4. https://digitalcommons.unl.edu/mathmidexppap/4

Archimedean Solids. Anna Anderson. In partial fulfillment of the requirements for the Master of Arts in Teaching with a Specialization in the Teaching of Middle Level Mathematics in the Department of Mathematics. Jim Lewis, Advisor. July 2008.

A polygon is a simple, closed, planar figure with sides formed by joining line segments, where each line segment intersects exactly two others. If all of the sides have the same length and all of the angles are congruent, the polygon is called regular. The sum of the angles of a regular polygon with n sides, where n is 3 or more, is 180° × (n − 2). If a regular polygon were connected with other regular polygons in three-dimensional space, a polyhedron could be created. In geometry, a polyhedron is a three-dimensional solid which consists of a collection of polygons joined at their edges. The word polyhedron is derived from the Greek word poly (many) and the Indo-European term hedron (seat).