Efficient Algorithms for Personalized Pagerank

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Received Citations As a Main SEO Factor of Google Scholar Results Ranking

RECEIVED CITATIONS AS A MAIN SEO FACTOR OF GOOGLE SCHOLAR RESULTS RANKING Las citas recibidas como principal factor de posicionamiento SEO en la ordenación de resultados de Google Scholar Cristòfol Rovira, Frederic Guerrero-Solé and Lluís Codina Note: This article can be read in Spanish at: http://www.elprofesionaldelainformacion.com/contenidos/2018/may/09_esp.pdf Cristòfol Rovira, associate professor at Pompeu Fabra University (UPF), teaches in the Departments of Journalism and Advertising. He is director of the master’s degree in Digital Documentation (UPF) and the master’s degree in Search Engines (UPF). He has a degree in Educational Sciences, as well as in Library and Information Science. He is an engineer in Computer Science and has a master’s degree in Free Software. He is conducting research in web positioning (SEO), usability, search engine marketing and conceptual maps with eyetracking techniques. https://orcid.org/0000-0002-6463-3216 [email protected] Frederic Guerrero-Solé has a bachelor’s in Physics from the University of Barcelona (UB) and a PhD in Public Communication obtained at Universitat Pompeu Fabra (UPF). He has been teaching at the Faculty of Communication at the UPF since 2008, where he is a lecturer in Sociology of Communication. He is a member of the research group Audiovisual Communication Research Unit (Unica). https://orcid.org/0000-0001-8145-8707 [email protected] Lluís Codina is an associate professor in the Department of Communication at the School of Communication, Universitat Pompeu Fabra (UPF), Barcelona, Spain, where he has taught information science courses in the areas of Journalism and Media Studies for more than 25 years.

Analysis of the Youtube Channel Recommendation Network

CS 224W Project Milestone Analysis of the YouTube Channel Recommendation Network Ian Torres [itorres] Jacob Conrad Trinidad [j3nidad] December 8th, 2015 I. Introduction With over a billion users, YouTube is one of the largest online communities on the World Wide Web. To upload a video to YouTube, a user creates a channel. A channel serves as the home page for that account, displaying the account's name, description, and the public videos that have been uploaded to YouTube. In addition to this content, channels can recommend other channels. This can be done in two ways: the user can choose to feature a channel, or YouTube can recommend a channel whose content is similar to the current channel. YouTube displays these two types of recommendations in separate sidebars on the user's channel. We are interested in analyzing the structure of this potential network. We have crawled the YouTube site and obtained a dataset totaling 228575 distinct user channels, 400249 user recommendations, and 400249 YouTube recommendations. In this paper, we present a systematic and in-depth analysis of the structure of this network. With this data, we have created detailed visualizations, analyzed different centrality measures on the network, compared their community structures, and performed motif analysis. II. Literature Review As YouTube has risen in popularity since its creation in 2005, a body of research has examined YouTube and the structure behind its network, much of it analyzing YouTube as a social network. Cheng looks at videos as nodes and recommendations to other videos as links [1].

Human Computation Luis Von Ahn

Human Computation Luis von Ahn CMU-CS-05-193 December 7, 2005 School of Computer Science Carnegie Mellon University Pittsburgh, PA 15213 Thesis Committee: Manuel Blum, Chair Takeo Kanade Michael Reiter Josh Benaloh, Microsoft Research Jitendra Malik, University of California, Berkeley Copyright © 2005 by Luis von Ahn This work was partially supported by the National Science Foundation (NSF) grants CCR-0122581 and CCR-0085982 (The Aladdin Center), by a Microsoft Research Fellowship, and by generous gifts from Google, Inc. The views and conclusions contained in this document are those of the author and should not be interpreted as representing official policies, either expressed or implied, of any sponsoring institution, the U.S. government or any other entity. Keywords: CAPTCHA, the ESP Game, Peekaboom, Verbosity, Phetch, human computation, automated Turing tests, games with a purpose. Abstract Tasks like image recognition are trivial for humans, but continue to challenge even the most sophisticated computer programs. This thesis introduces a paradigm for utilizing human processing power to solve problems that computers cannot yet solve. Traditional approaches to solving such problems focus on improving software. I advocate a novel approach: constructively channel human brainpower using computer games. For example, the ESP Game, introduced in this thesis, is an enjoyable online game (many people play over 40 hours a week), and when people play, they help label images on the Web with descriptive keywords. These keywords can be used to significantly improve the accuracy of image search. People play the game not because they want to help, but because they enjoy it. I introduce three other examples of “games with a purpose”: Peekaboom, which helps determine the location of objects in images, Phetch, which collects paragraph descriptions of arbitrary images to help accessibility of the Web, and Verbosity, which collects “common-sense” knowledge.

Pagerank Best Practices Neus Ferré Aug08

Search Engine Optimisation. PageRank best Practices Graduand: Supervisor: Neus Ferré Viñes Florian Heinemann [email protected] [email protected] UPC Barcelona RWTH Aachen RWTH Aachen Business Sciences for Engineers Business Sciences for Engineers and Natural Scientists and Natural Scientists July 2008 Abstract Since the explosion of the Internet age, the need to search for information online has grown at the speed of light. As a consequence, new marketing disciplines have arisen in the digital world. This thesis describes, within the search engine marketing framework, how the ranking in the search engine results page (SERP) can be influenced. Wikipedia describes search engine marketing (SEM) as a form of Internet marketing that seeks to promote websites by increasing their visibility in search engine result pages (SERPs). The importance of being searchable and visible to users therefore reveals a need for improvement on the part of website designers. Different factors are used to produce search rankings. One of them is PageRank. The present thesis focuses on how Google's PageRank makes use of the linking structure of the Web in order to maximise the relevance of the results in a web search. PageRank used to be a puzzle for webmasters because of the secrecy that surrounded it. The formula behind PageRank enabled the founders of Google to convert a PhD into one of the most successful companies ever. The uniqueness of PageRank in contrast to other Web search engines consists in providing the user with the greatest relevance of the results for a specific query, thus providing the most satisfactory user experience.

The Pagerank Algorithm and Application on Searching of Academic Papers

The PageRank algorithm and application on searching of academic papers Ping Yeh Google, Inc. 2009/12/9 Department of Physics, NTU Disclaimer (legal) The content of this talk is the speaker's personal opinion and is not the opinion or policy of his employer. Disclaimer (content) You will not hear physics. You will not see differential equations. You will: ● get a review of PageRank, the algorithm used in Google's web search. It has been applied to evaluate journal status and influence of nodes in a graph by researchers, ● see some linear algebra and Markov chains associated with it, and ● see some results of applying it to journal status. Outline Introduction Google and Google search PageRank algorithm for ranking web pages Using MapReduce to calculate PageRank for billions of pages Impact factor of journals and PageRank Conclusion Google The name: homophone to the word “Googol” which means 10^100. The company: ● founded by Larry Page and Sergey Brin in 1998, ● ~20,000 employees as of 2009, ● spread in 68 offices around the world (23 in N. America, 3 in Latin America, 14 in Asia Pacific, 23 in Europe, 5 in Middle East and Africa). The mission: “to organize the world's information and make it universally accessible and useful.” Google Services Sky YouTube iGoogle web search talk book search Chrome calendar scholar translate blogger.com Android product news search maps picasaweb video groups Gmail desktop reader Earth Photo by mr.hero on panoramio (http://www.panoramio.com/photo/1127015) Google Search http://www.google.com/ or http://www.google.com.tw/ The abundance problem Quote Langville and Meyer's nice book “Google's PageRank and beyond: the science of search engine rankings”: The men in Jorge Luis Borges’ 1941 short story, “The Library of Babel”, which describes an imaginary, infinite library.

The Pagerank Algorithm Is One Way of Ranking the Nodes in a Graph by Importance

13 The PageRank Algorithm Lab Objective: Many real-world systems (the internet, transportation grids, social media, and so on) can be represented as graphs (networks). The PageRank algorithm is one way of ranking the nodes in a graph by importance. Though it is a relatively simple algorithm, the idea gave birth to the Google search engine in 1998 and has shaped much of the information age since then. In this lab we implement the PageRank algorithm with a few different approaches, then use it to rank the nodes of a few different networks. The PageRank Model The internet is a collection of webpages, each of which may have a hyperlink to any other page. One possible model for a set of n webpages is a directed graph, where each node represents a page and node j points to node i if page j links to page i. The corresponding adjacency matrix A satisfies A_ij = 1 if node j links to node i and A_ij = 0 otherwise. With rows and columns ordered a, b, c, d: A = [0 0 0 0; 1 0 1 0; 1 0 0 1; 1 0 1 0]. Figure 13.1: A directed unweighted graph with four nodes, together with its adjacency matrix. Note that the column for node b is all zeros, indicating that b is a sink, a node that doesn't point to any other node. If n users start on random pages in the network and click on a link every 5 minutes, which page in the network will have the most views after an hour? Which will have the fewest? The goal of the PageRank algorithm is to solve this problem in general, therefore determining how important each webpage is.
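
The model in the excerpt above can be made concrete with a short sketch. It runs a damped power iteration on the four-node adjacency matrix from Figure 13.1. The damping factor of 0.85, the uniform redistribution of rank held by sinks, and the names `pagerank`, `NODES`, and `ranking` are assumptions made for illustration; the excerpt itself does not fix any of them.

```python
# Illustrative sketch only: damped power iteration on the four-node example.
# A[i][j] = 1 if node j links to node i, so column j lists the out-links of j.
A = [
    [0, 0, 0, 0],  # a
    [1, 0, 1, 0],  # b
    [1, 0, 0, 1],  # c
    [1, 0, 1, 0],  # d
]
NODES = ["a", "b", "c", "d"]


def pagerank(A, damping=0.85, tol=1e-12, max_iter=1000):
    """Return a list of PageRank scores that sums to 1 (assumed conventions)."""
    n = len(A)
    out_degree = [sum(A[i][j] for i in range(n)) for j in range(n)]
    p = [1.0 / n] * n
    for _ in range(max_iter):
        # Assumption: rank sitting on a sink is spread uniformly over all nodes.
        sink_mass = sum(p[j] for j in range(n) if out_degree[j] == 0)
        new_p = []
        for i in range(n):
            link_mass = sum(
                A[i][j] * p[j] / out_degree[j]
                for j in range(n)
                if out_degree[j] > 0
            )
            new_p.append((1 - damping) / n + damping * (link_mass + sink_mass / n))
        converged = sum(abs(x - y) for x, y in zip(new_p, p)) < tol
        p = new_p
        if converged:
            break
    return p


ranks = pagerank(A)
ranking = sorted(zip(NODES, ranks), key=lambda pair: -pair[1])
```

Because no page links to a, it ends up with the lowest score, and b and d tie because their rows in A are identical.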

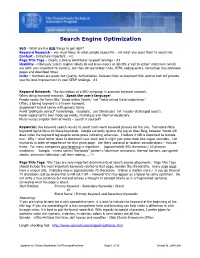

SEO - What Are the BIG Things to Get Right? Keyword Research – You Must Focus on What People Search for - Not What You Want Them to Search For

Search Engine Optimization SEO - What are the BIG things to get right? Keyword Research – you must focus on what people search for - not what you want them to search for. Content – Extremely important - #2. Page Title Tags – Clearly a strong contributor to good rankings - #3 Usability – Obviously search engine robots do not know colors or identify a ‘call to action’ statement (which are both very important to visitors), but they do see broken links, HTML coding errors, contextual links between pages and download times. Links – Numbers are good; but Quality, Authoritative, Relevant links on Keyword-Rich anchor text will provide you the best improvement in your SERP rankings. #1 Keyword Research: The foundation of an SEO campaign is accurate keyword research. When doing keyword research: Speak the user's language! People search for terms like "cheap airline tickets," not "value-priced travel experience." Often, a boring keyword is a known keyword. Supplement brand names with generic terms. Avoid "politically correct" terminology. (example: use ‘blind users’ not ‘visually challenged users’). Favor legacy terms over made-up words, marketese and internal vocabulary. Plural versus singular form of words – search it yourself! Keywords: Use keyword search results to select multi-word keyword phrases for the site. Formulate the Meta Keyword tag to focus on these keywords. Google currently ignores the tag, as does Bing; however, Yahoo still does index the keyword tag despite some press indicating otherwise. I believe it is still important to include one. Why? What better place to document your work, and it might just come back into vogue someday. List keywords in order of importance for that given page.

Personalized Pagerank Estimation and Search: a Bidirectional Approach

Personalized PageRank Estimation and Search: A Bidirectional Approach Peter Lofgren (Department of CS, Stanford University, [email protected]), Siddhartha Banerjee (School of ORIE, Cornell University, [email protected]), Ashish Goel (Department of MS&E, Stanford University, [email protected]) ABSTRACT We present new algorithms for Personalized PageRank estimation and Personalized PageRank search. First, for the problem of estimating Personalized PageRank (PPR) from a source distribution to a target node, we present a new bidirectional estimator with simple yet strong guarantees on correctness and performance, and 3x to 8x speedup over existing estimators in experiments on a diverse set of networks. Moreover, it has a clean algebraic structure which enables it to be used as a primitive for the Personalized PageRank Search problem: Given a network like Facebook, a query like "people named John," and a searching user, return the top nodes in the network ranked by PPR from the perspective of the searching user. 1. INTRODUCTION On social networks, personalization is necessary for returning relevant results for a query. For example, if a user searches for a common name like John on a social network like Facebook, the results should depend on who is doing the search and who their friends are. A good personalized model for measuring the importance of a node t to a searcher s is Personalized PageRank π_s(t) [20, 13, 12]; this motivates a natural Personalized PageRank Search Problem: Given • a network with nodes V (each associated with a set of keywords) and edges E (possibly weighted and directed), • a keyword inducing a set of targets: T = {t ∈ V : t is relevant to the keyword}
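
To make the search problem in the excerpt above concrete, here is a minimal sketch that computes π_s(t) for every node by plain power iteration and then ranks a target set T by that score. This is a baseline, not the bidirectional estimator the paper proposes. The toy graph, the teleport probability `alpha = 0.2`, the dead-end convention, and all function and node names are hypothetical.

```python
# Baseline sketch: Personalized PageRank by power iteration (NOT the paper's
# bidirectional estimator). Graph, alpha and names are illustrative assumptions.

def personalized_pagerank(graph, source, alpha=0.2, tol=1e-12, max_iter=10_000):
    """Return {node: pi_s(node)} for a random walk that restarts at `source`.

    `graph` maps each node to the list of nodes it points to.
    """
    pi = {v: 0.0 for v in graph}
    pi[source] = 1.0
    for _ in range(max_iter):
        nxt = {v: 0.0 for v in graph}
        nxt[source] += alpha  # restart mass; the total mass of pi stays 1
        for u, neighbors in graph.items():
            if not neighbors:
                # Assumed convention: a dead end sends its walk back to the source.
                nxt[source] += (1 - alpha) * pi[u]
                continue
            share = (1 - alpha) * pi[u] / len(neighbors)
            for v in neighbors:
                nxt[v] += share
        converged = sum(abs(nxt[v] - pi[v]) for v in graph) < tol
        pi = nxt
        if converged:
            break
    return pi


# Hypothetical social network: two users named John, only one near searcher "s".
graph = {
    "s": ["friend"],
    "friend": ["s", "john_near"],
    "john_near": ["friend"],
    "stranger": ["john_far"],
    "john_far": ["stranger"],
}
targets = ["john_far", "john_near"]  # T: nodes relevant to the keyword "John"

scores = personalized_pagerank(graph, "s")
ranked_targets = sorted(targets, key=lambda t: -scores[t])
```

The John who is reachable from the searcher ranks first, while the unreachable one scores zero. Computing the full vector this way touches every edge on every iteration, which is the kind of cost that estimators for a single source-target pair aim to avoid.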

Centrality Metrics

Centrality Metrics Dr. Natarajan Meghanathan Professor of Computer Science Jackson State University E-mail: [email protected] Centrality • Tells us which nodes are important in a network based on the topological structure of the network (instead of using the offline information about the nodes, e.g., popularity of nodes): how influential a person is within a social network; which genes play a crucial role in regulating systems and processes; in infrastructure networks, whether removing the node would critically impede the functioning of the network. [Figure: example graph with nodes X, Y, Z] Nodes X and Z have higher degree. Node Y is more central from the point of view of betweenness (to reach from one end to the other) and closeness (can reach every other vertex in the fewest number of hops). Centrality Metrics • Degree-based Centrality Metrics: Degree Centrality (measure of the number of vertices adjacent to a vertex, i.e., its degree); Eigenvector Centrality (measure of the degree of the vertex as well as the degree of its neighbors) • Shortest-path based Centrality Metrics: Betweenness Centrality (measure of the number of shortest paths a node is part of); Closeness Centrality (measure of how close a vertex is to the other vertices [sum of the shortest path distances]); Farness Centrality (captures the variation of the shortest path distances of a vertex to every other vertex) Degree Centrality Time Complexity: Θ(V^2) Weakness: Very likely that more than one vertex has the same degree, so it is not possible to uniquely rank the vertices Eigenvector Power Iteration Method
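
The contrast between degree and closeness described in the slides above can be checked on a small example. The graph below is hypothetical (the original figure is not recoverable): two hubs X and Z, each with three leaf neighbours, joined only through Y. Closeness is represented here by the sum of shortest-path distances, where a smaller sum means a more central node; the function names are illustrative.

```python
from collections import deque

# Hypothetical undirected graph: hubs X and Z are bridged only by Y.
graph = {
    "X": ["x1", "x2", "x3", "Y"],
    "Z": ["z1", "z2", "z3", "Y"],
    "Y": ["X", "Z"],
    "x1": ["X"], "x2": ["X"], "x3": ["X"],
    "z1": ["Z"], "z2": ["Z"], "z3": ["Z"],
}


def degree_centrality(graph):
    """Number of vertices adjacent to each vertex."""
    return {v: len(neighbors) for v, neighbors in graph.items()}


def distance_sums(graph):
    """Sum of shortest-path (hop) distances from each vertex, via BFS.

    Assumes the graph is connected; a smaller sum means a closer vertex.
    """
    totals = {}
    for start in graph:
        dist = {start: 0}
        queue = deque([start])
        while queue:
            u = queue.popleft()
            for v in graph[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        totals[start] = sum(dist.values())
    return totals


degree = degree_centrality(graph)
farness = distance_sums(graph)
```

Here X and Z have degree 4 against 2 for Y, yet Y has the smallest distance sum (14 versus 15 for X and Z), which matches the slide's point that the highest-degree nodes need not be the closest to everyone else.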

Local Approximation of Pagerank and Reverse Pagerank

Local Approximation of PageRank and Reverse PageRank Ziv Bar-Yossef (Department of Electrical Engineering, Technion, Haifa, Israel and Google Haifa Engineering Center, Haifa, Israel, [email protected]), Li-Tal Mashiach (Department of Computer Science, Technion, Haifa, Israel, [email protected]) ABSTRACT We consider the problem of approximating the PageRank of a target node using only local information provided by a link server. This problem was originally studied by Chen, Gan, and Suel (CIKM 2004), who presented an algorithm for tackling it. We prove that local approximation of PageRank, even to within modest approximation factors, is infeasible in the worst-case, as it requires probing the link server for Ω(n) nodes, where n is the size of the graph. The difficulty emanates from nodes of high in-degree and/or from slow convergence of the PageRank random walk. We show that when the graph has bounded in-degree and admits … Over the past decade PageRank [27] has become one of the most popular methods for ranking nodes by their “prominence” in a network. PageRank’s underlying idea is simple but powerful: a prominent node is one that is “supported” (linked to) by other prominent nodes. PageRank was originally introduced as means for ranking web pages in search results. Since then it has found uses in many other domains, such as measuring centrality in social networks [20], evaluating the importance of scientific publications, prioritizing pages in a crawler’s frontier [10], personalizing search results [8], combating spam [17], measuring trust, selecting pages for indexing, and more.

The Pagerank Citation Ranking: Bringing Order to The

The PageRank Citation Ranking: Bringing Order to the Web January 29, 1998 Abstract The importance of a Web page is an inherently subjective matter, which depends on the readers interests, knowledge and attitudes. But there is still much that can be said objectively about the relative importance of Web pages. This paper describes PageRank, a method for rating Web pages objectively and mechanically, effectively measuring the human interest and attention devoted to them. We compare PageRank to an idealized random Web surfer. We show how to efficiently compute PageRank for large numbers of pages. And, we show how to apply PageRank to search and to user navigation. 1 Introduction and Motivation The World Wide Web creates many new challenges for information retrieval. It is very large and heterogeneous. Current estimates are that there are over 150 million web pages with a doubling life of less than one year. More importantly, the web pages are extremely diverse, ranging from "What is Joe having for lunch today?" to journals about information retrieval. In addition to these major challenges, search engines on the Web must also contend with inexperienced users and pages engineered to manipulate search engine ranking functions. However, unlike "flat" document collections, the World Wide Web is hypertext and provides considerable auxiliary information on top of the text of the web pages, such as link structure and link text. In this paper, we take advantage of the link structure of the Web to produce a global "importance" ranking of every web page.

Twitter-Driven Youtube Views: Beyond Individual Influencers

Twitter-driven YouTube Views: Beyond Individual Influencers Honglin Yu, Lexing Xie, Scott Sanner The Australian National University and NICTA, Canberra, Australia [email protected], [email protected], [email protected] ABSTRACT This paper proposes a novel method to predict increases in YouTube viewcount driven from the Twitter social network. Specifically, we aim to predict two types of viewcount increases: a sudden increase in viewcount (named as JUMP), and the viewcount shortly after the upload of a new video (named as EARLY). Experiments on hundreds of thousands of videos and millions of tweets show that Twitter-derived features alone can predict whether a video will be in the top 5% for EARLY popularity with 0.7 Precision@100. Furthermore, our results reveal that while individual influence is indeed important for predicting how Twitter drives YouTube views, it is a diversity of interest from the most active to the least active Twit-… This work is related to several areas in online video and social media popularity. Early studies included large-scale profiling of content [5] and their social networks [7]. Subsequent measurements provided statistics on video popularity [6] and its geographic distribution [4]. A number of recent works focused on forecasting popularity: YouTube video views are found to be predictable from recent viewcount history [13, 15], aggregate video sharing behavior is found to be related to geographical, social and temporal sharing trends [16], and the lifecycle of trending topics in the news are found to generalize from historically similar topics across multiple information platforms [2]. More recently, researchers started to examine video sharing behavior on microblogs.