MSc Thesis: Communication-Centric Debugging of Systems on Chip Using Networks on Chip


Recommended publications

-

Shoe List and Stretching

Marin Foot and Ankle 7 North Knoll Road, Suite 3, Mill Valley, CA 94941 Phone: 415-388-2777 Fax: 415-388-2778 www.marinfootandankle.com Amazing Calf Stretch 1. Always stretch with your shoes on. Start by standing parallel with the wall, approximately an arm’s length away, feet together. WALL Arm’s Length Away 2. Make an “L” shape with your feet and bend both knees. If necessary, steady yourself by placing one hand on the wall, but do not lean against the wall. 3. Straighten and lock your knee- trying to get your heel as close to baseboard as you can. Now straighten and lock your other knee. You should be able to feel the stretch in the calf closest to the wall. Hold the stretch for 20 seconds. Bad knees? Bad back? Stand on Preferred method – heel against wall. edge of step, hold railings, and let heel dangle over the edge. 4. Turn and repeat using the opposite side. WALL Arm’s Length Away 5. Two reps each morning and two reps each night. Repeat as often as you can during the day especially before and after athletic activities. Marin Foot and Ankle 7 North Knoll Road, Suite 3, Mill Valley, CA 94941 Phone: 415-388-2777 Fax: 415-388-2778 www.marinfootandankle.com Exercise Walking/Running Dress Shoes - Women Aetrex Edge Aetrex, Aravon, Ariat, Beautifeel, Blend, Cole Haan, Dansko, Finn Comfort, Kumfs, Munro, Asics Gel Kayano, GT 2000 Naot, Rockport, Salamander, Sanita, Selby Brooks Beast*, Ariel*, Addiction, Adrenaline, Transcend Sudini, Taryn Rose, Theresia, Hoka Gaviota, Bondi, Conquest, Stinson, Akasa Samuel Hubbard Mizuno Wave Alchemy -

MOLTEN USA, INC. 1170 Trademark Drive, Suite 109 Reno, NV 89521

MOLTEN USA, INC. 1170 Trademark Drive, Suite 109 Reno, NV 89521 (775)353-4000 · Fax (775)358-9407 Toll Free (800)477-1994 MOLTENUSA.COM CONTENTS VOLLEYBALL 5–17 • Elite Competition (7–11) • Competition (11–13) • Training (13) • Recreation & Novelty (14–17) • Custom (15,16) BEACH VOLLEYBALL 18–22 • Elite Competition (19–21) • Recreation & Novelty (22) SOCCER 23–27 • Elite Competition (24–25) • Competition (25–27) • Novelty (27) BASKETBALL 28–32 CHILDREN Products are intended and recommended for children 12 years of age and younger • Elite Competition (29–30) • Competition (31) Products are intended and recommended for indoor use • Recreation (32) Products are intended and recommended for outdoor use • Training & Novelty (32) Trophy products are recommended for awards, gifts or autographs and not intended for competition HANDBALL 33–34 Training products are recommended exclusively for skill development and not intended for competition Please contact Molten for more information about our custom products SPORTS EQUIPMENT 35–43 MINI Mini replica versions of larger Molten ball designs • Whistles (36–37) • Ball Carts (38) VOLLEYBALL Products specified for volleyball usage • Backpacks (39) SOCCER Products specified for soccer usage • Ball Bags (39–40) • Coaching Accessories (41– 42) BASKETBALL Products specified for basketball usage PRODUCT SIZING AFFILIATIONS FIRST TOUCH VOLLEYBALL V70 (2.5 oz.) - Recommended for players age 6 and younger V140 (5 oz.) - Recommended for players age 8 and younger CCAA.CA FIBA.COM V210 (7.5 oz.) - Recommended for -

Australia/New Zealand June 1, 2013

Australia & New Zealand Monthly sponsorship industry analysis report June 2013 AUSTRALIA & NEW ZEALAND International Marketing Reports Ltd 33 Chapel Street Buckfastleigh TQ11 0AB UK Tel +44 (0) 1364 642224 [email protected] www.imrsponsorship.com ISSN 2050-4888 eISSN 2050-4896 Copyright ©2012 by International Marketing Reports Ltd All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, photocopying or otherwise, without the prior permission of the publisher and copyright owner. While every effort has been made to ensure accuracy of the information, advice and comment in this publication, the publisher cannot accept responsibility for any errors or actions taken as a result of information provided. 2 Sponsorship Today methodology Sponsorship Today reports are created through the collection of data from news feeds, web searches, industry and news publications. Where sponsorship deals have not been reported, the Sponsorship Today team actively seeks data through web searches, annual financial reports and contacting sponsors, agencies and rights holders. Most sponsorship deals are not reported and, of those that are, the majority do not provide accurate fee or duration data. IMR estimates unreported fee values through comparisons with similar deals, contacts with industry insiders and through its long experience of creating sponsorship analysis reports. There is no guarantee of accuracy of estimates. The sponsorship industry is also known to overstate sponsorship fee values. Such reports are frequently based on the maximum potential value of a deal and might include the total should all incentive clauses (such as sporting success) be met and no morality clauses invoked. -

Notice City Commission Regular Meeting

Notice City Commission Regular Meeting 7:00 pm Monday, June 4, 2018 Commission Chambers, Governmental Center 400 Boardman A venue Traverse City, Michigan 49684 Posted and Published: 05-31-18 Meeting informational packet is available for public inspection at the Traverse Area District Library, City Police Station, City Manager's Office and City Clerk's Office. The City of Traverse City does not discriminate on the basis of disability in the admission to, access to, treatment in, or employment in, its programs or activities. Penny Hill, Assistant City Manager, 400 Boardman Avenue, Traverse City, Michigan 49684, phone 231-922-4440, TDD/TTY 231-922-4412, VRS 231- 421-7008, has been designated to coordinate compliance with the non discrimination requirements contained in Section 3 5 .107 of the Department of Justice regulations. Information concerning the provisions of the Americans with Disabilities Act, and the rights provided thereunder, are available from the ADA Coordinator. If you are planning to attend and you have a disability requiring any special assistance at the meeting and/or if you have any concerns, please immediately notify the ADA Coordinator. City Commission: c/o Benjamin Marentette, MMC, City Clerk (231) 922-4480 Email: [email protected] Web: www.traversecitymi.gov 400 Boardman A venue Traverse City, MI 49684 The mission of the Traverse City City Commission is to guide the preservation and development of the City's infrastructure, services, and planning based on extensive participation by its citizens coupled with the expertise of the city's staff. The Commission will both lead and serve Traverse City in developing a vision for sustainability and the future that is rooted in the hopes and input of its citizens and organizations, as well as cooperation from surrounding units ofgovernment. -

Mechanic Street Historic District

Figure 6.2-2. High Style Italianate, 306 North Van Buren Street Figure 6.2-3. Italianate House, 1201 Center Avenue Figure 6.2-4. Italianate House, 615 North Grant Street Figure 6.2-5. Italianate House, 901 Fifth Street Figure 6.2-6. Italianate House, 1415 Fifth Street Figure 6.2-7. High Style Queen Anne House, 1817 Center Avenue Figure 6.2-8. High Style Queen Anne House, 1315 McKinley Avenue featuring an irregular roof form and slightly off-center two-story tower with conical roof on the front elevation. The single-story porch has an off-center entry accented with a shallow pediment. Eastlake details like spindles, a turned balustrade, and turned posts adorn the porch, which extends across the full front elevation and wraps around one corner. The house at 1315 McKinley Avenue also displays a wraparound porch, spindle detailing, steep roof, fish scale wall shingles, and cut-away bay on the front elevation. An umbrage porch on the second floor and multi-level gables on the primary façade add to the asymmetrical character of the house. More typical examples of Queen Anne houses in the district display a variety of these stylistic features. Examples of more common Queen Anne residences in Bay City include 1214 Fifth Street, 600 North Monroe Street, and 1516 Sixth Street (Figures 6.2-9, 6.2-10, and 6.2-11). In general, these buildings have irregular footprints and roof forms. Hipped roofs with cross-gabled bays are common, as are hip-on-gable or jerkinhead details. Porch styles vary but typically extend across the full or partial length of the front elevation and wrap around the building corner. -

Complete List of Footwear

Evaluation of Slip Resistant Footwear Complete List of Footwear Casual/Work M/W Brand Name Style # MAA casual M Baffin Zone Soft SOFT-M006 casual M Baffin Logan 62000377 casual M Camo Hunting 087-3354-4 casual M CAT Drover Ice + WP TX P721731 casual M CAT Stiction Hiker Ice P720863 casual M Columbia Bugaboot Plus III Omni Cold- 1626251 Weather Boot casual M Columbia Redmond Waterproof 1553592231 MidHiking Shoe casual M Hi-tec Trail OX Winter 200 I WP 58030 casual M Huntshield Northern Tracker II 1870009 casual M Hush puppies Pender Spy Ice+ Black WP HMP1556-001 Leather casual M Kamik Greenbay 4 Cold Weather XNK0199S Boot casual M Kamik Supreme 872580 casual M Kamik Quest – Third best seller 870394 casual M Keen Koven Polar Raven / Tawney 1013309 Olive casual M Kodiak Blue Renegade 310082BLK Page 1 of 5 November 27, 2017 casual M Kodiak ProClassic 310088 casual M Merrell Capra Glacial Ice + Mid WP J35799 casual M Merrell Coldpack Ice + Mid Polar WP J91841 casual M Merrell Coldpack Ice + Mid WP J49819 casual M Merrell Jungle MOC Ice J37829 casual M Merrell MOAB FST Ice + Thermo J35791 casual M Merrell Overlook 6 Ice + LTR WP J598431 casual M Merrell Overlook 6 Ice + WP J36941 casual M Merrell Thermo Adventure Ice + 6” J06097 WP casual M Nike Son of Force Mid Winter 807242-009 Casual Shoes casual M OTB Artic BT 1870910 casual M OTB Blizzard 1871042 casual M OTB Glacier 0916 1870916 casual M OTB Snowguard 1870968 casual M Red frog boots Celtin Black Celtin Black casual M Reebok Royal Reamaze 2 M V69715 casual M Rockport Elkhart Snow Boot -

Police Crime Report- September 24

PPoolliiccee CCrriimmee BBuulllleettiinn Crime Prevention Bureau 26000 Evergreen Road, Southfield, Michigan (248) 796-5500 September 24, 2018 – September 30, 2018 Acting Chiefs of Police Brian Bassett & Nick Loussia Prepared by Mark Malott Neighborhood Watch Coordinator 248-796-5415 Commercial Burglaries: Date/Time Address (block range) Method of Entry Description/Suspect Information 09/29/2018 25000 Telegraph Rd. Exterior glass door Officers responded to business in the 25000 Block of 7:03am (Discount Store) was broken out. Telegraph Rd. reference a B&E alarm. Upon arrival, Officers observed that the glass on the front door had been broken out. Investigation revealed that the suspect stole cigarettes from behind the counter. Surveillance footage showed the suspect to be a B/M, 5’8”-5’11”, medium complexion and medium build with a goatee and mustache. The suspect was wearing an orange “Baby Phat” hoodie over a white tee-shirt, grey pants, and black shoes with white trim around the bottom. From: 09/26/2018 28000 Telegraph Rd. No forced entry. Victim states sometime between 12:30am- 12:50am 12:30am (Commercial Self- Storage) Manager believes someone forced entry into his storage unit located in To: 09/26/2018 suspects have an the 28000 Block of Telegraph Rd. and took 3- Flat 12:50am access code. Screen TV’s and a Home Stereo System. Manager states surveillance video shows 2- males breaking into the unit and entering the building around 12:40am. The video does not show the faces of the suspects. Manager advises this is an ongoing problem at this location and that she is trying to determine how the suspects are gaining entry to the building. -

GVHUSA-Genetic Indicator Sires-01112021

GENETIC INDICATOR SIRE LISTING EPD Run Date 010521 To qualify for this list, sires must adhere to these criteria: 1) Achieve Active Sire status. This means they have produced a calf born between January 1, 2018 and January 11, 2021. 2) Achieve accuracy on WW EPD between 0.46 and 0.65. 3) Hold an AMGV prefix 4) Be owned by an active AGA member (junior, regular or lifetime) or in partnership with one of the same. 1324 sire met the qualification for inclusion on this listing GENETIC INDICATOR SIRE LISTING AMERICAN GELBVIEH ASSOCIATION Name of Bull Sire Owners Name CED BW WW YW MK TM CEM HP PG30 ST DOC SC DMI YG CW REA MB FT ADG RFI $Cow FPI EPI Birthdate Reg No Maternal Grandsire Location ---------------- Accuracy ---------------- ---------------- Accuracy ---------------- Prefix Tattoo Color HPS %GV AI Qual Enhanced? Prog Dau ---------------- Percentile ---------------- ---------------- Percentile ---------------- ----- Percentile ----- 01E TWISTER 451B KICKING HORSE RANCH 16 -0.4 80 117 26 66 7 5.94 -1.88 15 8 1.10 -0.115 -0.23 46 0.87 0.25 -0.04 -0.052 -0.149 104.07 85.31 97.48 02/04/2017 1389622 47R OILMONT MT 0.50 0.58 0.54 0.54 0.18 0.29 0.26 0.17 0.06 0.28 0.28 0.22 0.16 0.35 0.47 0.45 0.37 0.31 0.25 0.20 KHR 01E 1 P PB94 Yes 20 0 15 40 10 15 15 10 35 45 90 50 90 15 10 60 10 30 35 45 60 3 55 10 25 03F CORNHUSKER RED 524C KICKING HORSE RANCH 17 -1.3 71 102 22 57 10 10.91 -0.34 18 11 1.23 -0.16 30 0.49 0.23 -0.04 79.85 02/16/2018 1424415 25A OILMONT MT 0.46 0.55 0.51 0.51 0.17 0.28 0.23 0.08 0.05 0.26 0.31 0.16 0.35 0.46 0.42 0.35 0.34 -

Week of April 11, 2021

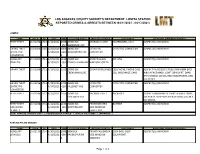

LOS ANGELES COUNTY SHERIFF'S DEPARTMENT- LOMITA STATION REPORTED CRIMES & ARRESTS BETWEEN (04/11/2021 - 04/17/2021) LOMITA: CRIME FILE # RD DATE TIME LOCATION-PUBLIC METHOD OF ENTRY LOSS-PUBLIC ADDITIONAL INFORMATION-PUBLIC PETTY THEFT 21-01194 1713 4/12/2021 0600 25000 BLK STORAGE CLOSET SKATEBOARD, LOCK SUSPECT(S) UNKNOWN -171 NARBONNE AVE GRAND THEFT 21-01203 1710 3/22/2021- 0000 24000 BLK CATALYTIC CATALYTIC CONVERTER SUSPECT(S) UNKNOWN (CATALYTIC 4/13/2021 -000 WOODWARD AVE CONVERTER CONVERTER) 0 BURGLARY 21-01205 1711 3/27/2021- 1100 25000 BLK BROKEN GLASS NO LOSS SUSPECT(S) UNKNOWN (TRAILER) 4/13/2021 -093 PENNSYLVANIA AVE WINDOW SCREEN 0 GRAND THEFT 21-01206 1711 4/13/2021 1225 24000 BLK OPEN FOR BUSINESS CELLPHONE, PHONE CASE, SUSPECT FH/60/503/170/BLK HAIR/BRN EYES CRENSHAW BLVD CDL, INSURANCE CARD WRG A FACE MASK, LIGHT GRN SHIRT, DARK GRY HOODED JACKET, RED SWEATPANTS, AND BLK SHOES. GRAND THEFT 21-01216 1713 4/13/2021- 1700 26000 BLK CATALYTIC CATALYTIC CONVERTER SUSPECT(S) UNKNOWN (CATALYTIC 4/14/2021 -030 HILLCREST AVE CONVERTER CONVERTER) 0 PETTY THEFT 21-01246 1712 4/16/2021 1555 25000 BLK PACKAGE THEFT PACKAGES SUSPECT MH/HEAVY SET WRG A WHITE SHIRT, ESHELMAN AVE BLK PANTS. SUSP WAS SEEN LEAVING LOC IN A BLK SEDAN. PETTY THEFT 21-01268 1751 4/11/2021- 1100 26000 BLK FRONT DRIVER'S BATTERY SUSPECT(S) UNKNOWN (UNLOCKED 4/12/2021 -110 WESTERN AVE SIDE WINDOW VEHICLE) 0 SHATTERED TOTAL ARRESTS: IDENTITY THEFT - 2, POSSESSION OF A BATON - 1, VEHICLE VIOLATIONS - 1, WARRANTS - 6 RANCHO PALOS VERDES: CRIME FILE # RD DATE TIME -

The City of Rapid City Bill List by Vendor - Detail

The City of Rapid City Bill List by Vendor - Detail Vendor # Invoice # PO # Vendor Name GL Account Line Item Description Line Item Amount 5 02/05/19 1ST NATIONAL BANK IN SIOUX 10700124-442000 2018 SALES TAX REV BOND 343,568.51 FALLS PYMT 02/25/19 1ST NATIONAL BANK IN SIOUX 60400833-442000 2011B WASTEWATER BOND 86,691.25 FALLS PYMT 03/01/19 1ST NATIONAL BANK IN SIOUX 60200932-442000 2009 WTR REV BOND PYMT 249,435.42 FALLS 1ST NATIONAL BANK IN SIOUX 679,695.18 FALLS Total: 37 IN584514 123300 A & B BUSINESS EQUIPMENT INC 60407072-426100 TOSHIBA COPIER STAPLES 92.90 IN582658 122737 A & B BUSINESS EQUIPMENT INC 60407072-422500 FEB 2019, COPIER 167.99 MAINTENANCE IN585872 123513 A & B BUSINESS EQUIPMENT INC 60207013-426100 TOSHIBA COPIER/FAX MAINT 69.71 02051 IN585872 123513 A & B BUSINESS EQUIPMENT INC 60207014-425300 TOSHIBA COPIER/FAX MAINT 161.36 02051 IN582999 123015 A & B BUSINESS EQUIPMENT INC 10100201-424400 COPIER LEASES 1,679.99 A & B BUSINESS EQUIPMENT INC 2,171.95 Total: 42 287261158408 123163 A T & T MOBILITY 61507103-428100 PHONE 46.44 X012319 A T & T MOBILITY Total: 46.44 44 12471012119 123081 A TO Z SHREDDING 60207012-422500 SHREDDING OF OLD OFFICE 15.10 PAPERW 12471012119 123081 A TO Z SHREDDING 60407071-422500 SHREDDING OF OLD OFFICE 9.06 PAPERW 12471012119 123081 A TO Z SHREDDING 60907401-422500 SHREDDING OF OLD OFFICE 6.04 PAPERW 12515012319 122705 A TO Z SHREDDING 10100106-426100 Shredding removal 31.00 A TO Z SHREDDING Total: 61.20 46 00985957 122587 A&B WELDING SUPPLY CO INC 10100305-426900 WELDING SUPPLIES 289.05 00986054 -

Aerial Imagery Catalog Collection at [email protected]

Aerial Imagery Catalog collection at USDA - Farm Production and Conservation Business Center Geospatial Enterprise Operations Division (GEO) 125 So. State Street Suite 6416 Salt Lake City, UT 84138 (801) 844-2922 - Customer Service Section (855) 415-2014 - Fax [email protected] http://www.fsa.usda.gov/programs-and-services/aerial-photography This catalog listing indicates the various imagery coverage used by the U.S. Department of Agriculture (USDA) from 1955 to the present. It shows imagery used primarily by the U.S Forest Service (USFS). For imagery prior to 1955, please contact the National Archives & Records Administration: Cartographic & Architectural Reference (NWCS-Cartographic) Aerial Photographs Team [email protected] Coverage is listed alphabetically by county. A Federal Information Processing Standard (FIPS) numerical code was assigned to each county after July 1, 1971. The prior years of imagery were assigned an alpha code, shown in parenthesis. Visit the FIPS Index at: http://www.fsa.usda.gov/Internet/FSA_File/alphafips.pdf Some form of an index (i.e. digital, line, photo, spot) is available for most imagery projects. The number of index sheets required for each project is shown on this listing. Current aerial imagery is now obtainable in digital format on CD, DVD, flash or hard drive media. Scanning aerial imagery film on repository at GEO (1955 - present) can also produce digital imagery products. Contact our Customer Service Section for ordering information, instructions, and further details on available products at: http://www.fsa.usda.gov/programs-and-services/aerial-photography/imagery-programs/naip- -

Northwestern State Men's Basketball

NORTHWESTERN STATE MEN’S BASKETBALL Southland Conference Champions: 2004-05 • 2005-06 Southland Conference Tournament Champions: 2001, 2006, 2013 NCAA Tournament Appearances: 2001 • 2006 • 2013 CIT Appearance: 2015 Jason Pugh, Assistant AD for Media Relations • Office: 318-357-6467 • Cell: 318-663-5701 • [email protected] • @JasonSPugh NSUDemons.com • /forkemdemons • @NSUDemons (Department) • @NSUDemonsMBB (Men’s Basketball) • Press Row: 318-357-4544 at No. 1 Gonzaga Tuesday, Dec. 22, 2020 • 8 p.m. (CST) 10 Spokane, Wash. • McCarthey Athletic Center (6,000) 2020-21 SCHEDULE MATCHUP November Northwestern State No. 1 Gonzaga 25 at No. 14 Texas Tech L, 101-58 Demons Zags 28 #vs. UT Arlington L, 80-71 2020-21 Record: 1-8 2020-21 Record: 5-0 29 at Louisiana Tech L, 91-77 Head Coach: Mike McConathy Head Coach: Mark Few Career Record: 663-503 (38th season) Career Record: 604-124 (22nd season) December Northwestern State Record: 311-344 (22nd season) Gonzaga Record: 604-124 (22nd season) 3 at TCU L, 74-68 Record vs. Gonzaga: 0-1 6 ULM L, 92-83 (OT) 12 CHAMPION CHRISTIAN W, 77-44 RADIO 18 at Tulsa L, 82-55 Demon Sports Network (95.9 Kix Country, NSUDemons.com) 19 at Missouri State L, 94-67 21 at No. 1 Gonzaga L, 95-57 PxP: Patrick Netherton 22 at No. 1 Gonzaga 8 p.m. 23 at Washington State 4 p.m. TIPOFF • The Northwestern State men’s basketball team caps a two-game series against the top-ranked team in the January 2 *at Houston Baptist 7 p.m.