Chips with Everything: Coding As Performance and the Aesthetics of Constraint

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


INSIDE Six to Run for ND Student Body President Three to Vie for SMC

Careers Unlimited - INSIDE. VOL. XXI, NO. 85, THURSDAY, FEBRUARY 5, 1987. The independent student newspaper serving Notre Dame and Saint Mary's. Six to run for ND student body president. By BETH CORNWELL, Staff Reporter. Six potential student body presidential tickets and at least one candidate from each student senate district were present at a mandatory meeting for prospective candidates Wednesday night. The prospective presidential tickets include Theodore's manager Vince Willis and junior class president Cathy Nonnenkamp, juniors Todd Graves and Brian Moffitt, Grace Giorgio and Bill Sammon, Willie Franklin and Jim Mangan, Black Student Union President Martin Rodgers and L.B. Eckelkamp. Sophomore Class President Pat Cooke and J.P.W. Commissioner Laurie Bink, as well as sophomores Raul Gonzales and either Ray Lopez or Chuck Neidhoefer also declared their ... Gonzales must declare his running mate by 1 p.m. today, according to Ombudsman Election Committee Chairman Dan Gamache. Cooke and Bink, who have declared their candidacies but have not yet specified which will be the presidential nominee, must also register their decision with the Ombudsman committee by 1 p.m. Thursday. Incumbent senator Brian Holst is the only candidate running in senate district one, which includes St. Edward's, Lewis, Holy Cross, Carroll, Sorin, Walsh, Alumni, and Old College halls. Sophomore Sean Hoffman registered three minutes before the 7:30 p.m. deadline to declare an unopposed candidacy for senate district two, which includes Stanford, Keenan, Zahm, Cavanaugh, ... [Photo caption:] Student Body President and Vice President meeting for candidates last night. -

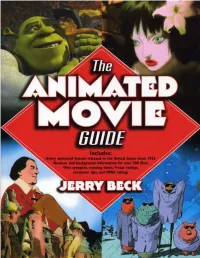

The Animated Movie Guide

THE ANIMATED MOVIE GUIDE Jerry Beck Contributing Writers Martin Goodman Andrew Leal W. R. Miller Fred Patten An A Cappella Book Library of Congress Cataloging-in-Publication Data Beck, Jerry. The animated movie guide / Jerry Beck.— 1st ed. p. cm. “An A Cappella book.” Includes index. ISBN 1-55652-591-5 1. Animated films—Catalogs. I. Title. NC1765.B367 2005 016.79143’75—dc22 2005008629 Front cover design: Leslie Cabarga Interior design: Rattray Design All images courtesy of Cartoon Research Inc. Front cover images (clockwise from top left): Photograph from the motion picture Shrek ™ & © 2001 DreamWorks L.L.C. and PDI, reprinted with permission by DreamWorks Animation; Photograph from the motion picture Ghost in the Shell 2 ™ & © 2004 DreamWorks L.L.C. and PDI, reprinted with permission by DreamWorks Animation; Mutant Aliens © Bill Plympton; Gulliver’s Travels. Back cover images (left to right): Johnny the Giant Killer, Gulliver’s Travels, The Snow Queen © 2005 by Jerry Beck All rights reserved First edition Published by A Cappella Books An Imprint of Chicago Review Press, Incorporated 814 North Franklin Street Chicago, Illinois 60610 ISBN 1-55652-591-5 Printed in the United States of America 5 4 3 2 1 For Marea Contents Acknowledgments vii Introduction ix About the Author and Contributors’ Biographies xiii Chronological List of Animated Features xv Alphabetical Entries 1 Appendix 1: Limited Release Animated Features 325 Appendix 2: Top 60 Animated Features Never Theatrically Released in the United States 327 Appendix 3: Top 20 Live-Action Films Featuring Great Animation 333 Index 335 Acknowledgments This book would not be as complete, as accurate, or as fun without the help of my dedicated friends and enthusiastic colleagues. -

Keyboard July 1981 by Bob Doerschuk Remembering the Man

Remembering the Man Behind "Cast Your Fate to the Wind" ONE NIGHT NOT long ago, a group of friends met at the home of Jean Gleason in Berkeley, California. They came from different parts of the Bay Area and different walks of life. Over the dinner table, with the lights of San Francisco and the Bay Bridge as a backdrop through the western windows, the conversation was light and literate, touching on the arts and issues that crop up in gatherings like this throughout the country. But after a while the mood turned mellow. Slowly the guests filed into Jean's living room, arranged themselves on couches and cushions, and waited as the lights dimmed to darkness and a motion picture projector came to life. The film was Anatomy Of A Hit, produced by Jean's late husband, the noted jazz critic Ralph Gleason, for public television in 1963. For the next few hours, everyone watched silently as images of a short stocky man with thick-rimmed glasses and an improbable mustache flickered across the screen. He joked with acquaintances in the shadows of the past, bustled into late-night bistros long since forgotten by today's young San Franciscans, scribbled messages to himself on a basement pipe, clambered playfully onto an empty storage shelf in the warehouse at Fantasy Records, and above all, he played the piano. In recording studios, in North Beach nightclubs, in his own house, where he kept a battered spinet and a baby grand only a few yards from one another in case inspiration hit too suddenly to move, Vince Guaraldi was filmed behind the keys. -

There Are Three Composers That I Have at One Time Or Another Tried to Play

Love Will Come – new long web notes: “There are three composers that I have at one time or another tried to play all of their songs: New Orleans R&B pianist Professor Longhair, The Doors, and Vince Guaraldi...Vince Guaraldi and his music are so much a part of the deep heart and soul of San Francisco, and of the experience of childhood, and beyond.” — George Winston In 1996, George released his first album of Guaraldi compositions, entitled LINUS AND LUCY - THE MUSIC OF VINCE GUARALDI. It nicely mixed well-known standards like the title track and Cast Your Fate to the Wind with less well-known material, including some music cues that had only been heard in the soundtracks. George’s second album of Guaraldi music delves even deeper into the repertoire, uncovering some hidden gems. Like many musicians of his generation, George Winston first heard the music of San Francisco-based jazz pianist Vince Guaraldi on his 1962 hit Cast Your Fate to the Wind, and on the 1965 televised Peanuts® animation special A Charlie Brown Christmas. First aired on the CBS network in 1965, the television special was created by the Peanuts® comic strip creator Charles Schulz (1922-2000), writer/producer Lee Mendelson (1933-2019), and former Warner Brothers animator Bill Melendez (1916-2008). Vince Guaraldi was hired to compose the jazz soundtrack, a bold step for network television at that time. Vince completed the diamond that was the Dream Team. At first, some executives at CBS weren’t fully on board about using jazz for the music soundtrack. -

Musicophilia Les-Bibliothc3a9caires A-Library-Music-Collection Booklet.Pdf

Les Bibliothécaires A Library Music Collection For music obsessives, discoveries usually come one or two at a time--a new artist here and again, maybe a new corner of a subgenre now and then. But discovering Library Music amplifies that many times over: it’s not really a genre, not a scene, but rather a whole mirror world, familiar and yet unknown, reminiscent of the one we spend our lives in, but shifted and skewed in incredible ways. Most of the ingredients are recognizable from the funk, jazz, rock, disco, pop and folk musics we love--bass guitar, drums, bongos, piano, organs, brass instruments, synthesizers--but the proportions are different, the emphasis a bit unusual, the production a little alien. The beats are punchier, the bass more emphatically bouncing, the guitars often secondary, as if from a reality where harpsichords were one of the more popular garage band instruments. And then there are the strings, sometimes orchestral, often at a chamber scale, soaring above and around in a dance with the drums and the bass, not just reemphasizing the chord changes like we expect when they show up in pop music. If you love ‘Melody Nelson,’ or “Apache,” or golden-age Hip-Hop breakbeat samples, you’ve had a glimpse of this world’s sounds echoing into the regular music world, and you’re in for a treat. Library Music, and Library musicians in the late 60s, the 70s, and the early 80s seem to have collectively decided they weren’t going to take sides in a world of ever-increasing possibilities: they were often classically trained, and many clearly spent their formative years in the jazz world, and yet rather than being snobs about it, they jumped head-first into funk and psychedelic rock and pop and crazy tape music and new electronic gadgets. -

WEA in European CD Push

A Billboard Publication. The International Newsweekly Of Music & Home Entertainment. April 14, 1984. $3 (U.S.). WEA In European CD Push: Lower Player Cost Seen Luring Youth. By IRV LICHTMAN. NEW YORK - Eleven WEA companies in Europe have launched a broad-based, long-running marketing campaign for Compact Disc software to take advantage of dramatically lower hardware prices (Billboard, March 24). Tagged "Adventures In Modern Sounds: WEA Stars On CD," the ... CD buyer of "increasingly younger age, with the market now stretching from adolescents to audiophiles and classical enthusiasts," according to Jurgen Otterstein, WEA Europe marketing director. The emergence of younger CD fans, Otterstein notes, largely results from "the continued downward trend in hardware prices," thus producing a CD market that is changing rapidly. ... national levels; four-color posters, and p-o-p material. In addition, more than a million four-color booklets have been printed for insertion in all new CD releases and use as in-store giveaways. The campaign involves WEA companies in England, Germany, France, Belgium, Spain, Holland, Italy, Switzerland, Austria, Sweden and Denmark. BIG FIRST MONTH: Music Dealers Key K-tel Computer Arm. By STEVEN DUPLER. NEW YORK - K-tel Software Inc., a newly formed computer software division of K-tel International Inc., is making a direct bid for a presence in major record/tape stores. In its first month, the new division says it has shipped $1 million worth ... ducing the software into such mass market outlets as Pathmark supermarkets. The software is marketed under three brand names: Xonex cartridge games, K-Tek budget software, and K-tel medium priced personal and ... -

To Help Flood Victims

JULY 8, 1972. $1.25. A BILLBOARD PUBLICATION. SEVENTY-EIGHTH YEAR. The International Music-Record-Tape Newsweekly. TAPE/AUDIO/VIDEO PAGE 28. HOT 100 PAGE 56. TOP LP'S PAGES 58, 60. FCC's Ray To Clear Payola Air At Forum. LOS ANGELES - William B. Ray, chief of the complaints and compliances division of the Federal Communications Commission, will clear the air on the topic of payola as a speaker of the fifth annual Billboard Radio Programming Forum which will be held here Aug. 17-19 at the Century Plaza Hotel. Ray will be luncheon speaker on Aug. 18. Seven other new speakers, including new commissioner Ben Hooks of the FCC, have been slated for the three-day Forum, the largest educational radio programming meeting of its kind. Also speaking will be Paul Drew, programming consultant from Washington, D.C.; Pat O'Day, general manager of KJR, Seattle; Sonny ... NARM Spearheads Drive To Help Flood Victims. By PAUL ACKERMAN. NEW YORK - A massive all-industry drive to aid record and tape retailers whose businesses have been partially or totally wiped out by the recent floods is being spearheaded by NARM executive director Jules Malamud, NARM president Dave Press and the organization's board of directors. ... ing closely with the record manufacturers, vendors of fixtures and accessories, even pressing plants and we are drawing up a plan whereby damaged stock and fixtures may be replenished at cost. We are also hopeful that the victimized businesses will be ... working out these plans in conjunction with representatives of all industry segments. He indicated that final decisions would be up to branches, local distributors, rack-jobbers, rather than a central group. Overall Plan ... -

Harris Is Elektra VP

Super Vision: The A/V Challenge (See Editorial) ... Hits Turn Eye Toward Moderate Policy Thinking ... Chappell & Famous Add Five Years To Tie ... Harry Fox Agcy Cuts Commis'n Fees ... RCA & Kirshner Mass Efforts On Dante ... Harris Is Elektra VP ... MCA Links Force With Compo Of Canada To Form New Label ... GRAND FUNK RAILROAD: EXPRESS-IVE. INT'L SECTION BEGINS ON PAGE 53. July 18, 1970. $1.00. "America, Communicate With Me" is Ray Stevens' new single. ZS7 2016 Barnaby Records Distributed by Columbia Records. THE INTERNATIONAL MUSIC-RECORD WEEKLY. VOL. XXXI - Number 50 / July 18, 1970. Publication Office / 1780 Broadway, New York, New York 10019 / Telephone: JUdson 6-2640 / Cable Address: Cash Box, N. Y. GEORGE ALBERT, President and Publisher. MARTY OSTROW, Vice President. IRV LICHTMAN, Editor in Chief. EDITORIAL: MARV GOODMAN, Assoc. Editor; JOHN KLEIN; ED KELLEHER; FRED HOLMAN; ERIC VAN LUSTBADER. EDITORIAL ASSISTANTS: MIKE MARTUCCI, ANTHONY LANZETTA. ADVERTISING: BERNIE BLAKE, Director of Advertising. ACCOUNT EXECUTIVES: STAN SOIFER, New York; HARVEY GELLER, Hollywood. WOODY HARDING, Art Director. Super-Vision. Though one picture is rumored to be worth a thousand words, inflation and the increasing consumer favor of visual media are destined to alter that estimate. ... film soundtrack highlights are ready-made shows with hit songs accompanied by footage from hit movies. Beyond the public package, the a-v ... -

Ed Bogas and Désirée Goyette

HAPPINESS IS... THE PERFECT EQUATION: ED BOGAS AND DÉSIRÉE GOYETTE. By Alexandra Fee. Photos by Laura Reoch, September-Days Photography. In the words of Charlie Brown, happiness is…Ed Bogas and Désirée Goyette…a perfect equation of happiness built on a foundation of love, music, passion and community. For 70 years, Snoopy, Charlie Brown and the rest of the Peanuts gang have been entertaining millions of people of all ages worldwide. ... to therefore introduce you to Ed Bogas and Désirée Goyette. For 40 years, this extraordinary Mill Valley duo have composed and performed some of our favorite, timeless tunes from Peanuts, Garfield and countless other television series, specials and feature films. For starters, Ed Bogas is a brilliant mathematician and musician. A San Francisco native, his Russian immigrant parents moved west from the east coast when he was a baby. At age 16, Ed entered a math contest earning him a full-ride scholarship to attend Stanford University followed up by a full scholarship to UC Berkeley’s graduate program. They say math and music stem from the same area of the brain as is certainly the case with Ed. He got his start in music with the viola and at 10 years old, he played in a semi-professional string quartet, later switching to the violin. His older brother, Roy, was a budding piano prodigy so his father steered Ed towards a career in math. “All you need,” his father would say, “is a pencil and paper.” Ed’s mathematical prowess led him to work for Project S.E.E.D. (Special Elementary Education for the Disadvantaged) where he taught math to children ... -

“Rotoscopia Y Captura De Movimiento. Una Aproximación General a Través

UNIVERSITAT POLITÈCNICA DE VALÈNCIA. Escola Politècnica Superior de Gandia. Master's in Digital Postproduction. “Rotoscoping and motion capture. A general approach through their techniques and processes in postproduction.” Master's Final Project. Author: Vicente Aranda Ferrer. Director: Antonio Forés López. Gandia, September 2013. “How to dub humor? Particularities of the genre and strategies for the audiovisual translation of comedy.” Master's Final Project. Author: Sara Mérida Mejías. Director: Elisa March Leuba. Gandia, September 2013. ABSTRACT. The research presented here is a Final Project for the Master's in Digital Postproduction at the Gandia Campus of the Universidad Politécnica de Valencia. Its aim is to deepen knowledge of the techniques of rotoscoping and motion capture. Given the claims of various authors that motion capture is an evolution of rotoscoping, the analysis seeks to show whether one process can be considered an advance on the other or whether, on the contrary, they are entirely distinct methods. To that end, both techniques are studied in order to understand their evolution, the purpose for which they were created, and their adaptation to new technologies.
The research yields the characteristics that define each technique, which makes it possible to compare them and thus test the stated hypothesis.