Intel® Atom™ Processor Core Made FPGA-Synthesizable

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Wind River VxWorks Platforms 3.8

Wind River VxWorks Platforms 3.8. The market for secure, intelligent, connected devices is constantly expanding. Embedded devices are becoming more complex to meet market demands. Internet connectivity allows new levels of remote management but also calls for increased levels of security. More powerful processors are being considered to drive intelligence and higher functionality into devices. Because real-time and performance requirements are nonnegotiable, manufacturers are cautious about incorporating new technologies into proven systems. To ... Table of Contents: Platforms Available in VxWorks Edition; New in VxWorks Platforms 3.8; VxWorks Platforms Features; VxWorks Real-Time Operating System; Compatibility; State-of-the-Art Memory Protection; VxBus Framework; Core Dump File Generation and Analysis; ... Build System; Command-Line Project and Build System; Workbench Debugger; VxWorks Simulator; Workbench VxWorks Source Build Configuration; VxWorks 6.x Kernel Configurator; Host Shell; Kernel Shell; Run-Time Analysis Tools; System Viewer -

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference 319433-037 MAY 2019 Intel technologies features and benefits depend on system configuration and may require enabled hardware, software, or service activation. Learn more at intel.com, or from the OEM or retailer. No computer system can be absolutely secure. Intel does not assume any liability for lost or stolen data or systems or any damages resulting from such losses. You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. This document contains information on products, services and/or processes in development. All information provided here is subject to change without notice. Intel does not guarantee the availability of these interfaces in any future product. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps. Copies of documents which have an order number and are referenced in this document, or other Intel literature, may be obtained by calling 1-800-548-4725, or by visiting http://www.intel.com/design/literature.htm. Intel, the Intel logo, Intel Deep Learning Boost, Intel DL Boost, Intel Atom, Intel Core, Intel SpeedStep, MMX, Pentium, VTune, and Xeon are trademarks of Intel Corporation in the U.S. -

Instruction-Level Distributed Processing

COVER FEATURE. Instruction-Level Distributed Processing. Shifts in hardware and software technology will soon force designers to look at microarchitectures that process instruction streams in a highly distributed fashion. James E. Smith, University of Wisconsin-Madison. For nearly 20 years, microarchitecture research has emphasized instruction-level parallelism, which improves performance by increasing the number of instructions per cycle. In striving for such parallelism, researchers have taken microarchitectures from pipelining to superscalar processing, pushing toward increasingly parallel processors. They have concentrated on wider instruction fetch, higher instruction issue rates, larger instruction windows, and increasing use of prediction and speculation. In short, researchers have exploited advances in chip technology to develop complex, hardware-intensive processors. Benefiting from ever-increasing transistor budgets ... In short, the current focus on instruction-level parallelism will shift to instruction-level distributed processing (ILDP), emphasizing interinstruction communication with dynamic optimization and a tight interaction between hardware and low-level software. TECHNOLOGY SHIFTS. During the next two or three technology generations, processor architects will face several major challenges. On-chip wire delays are becoming critical, and power considerations will temper the availability of billions of transistors. Many important applications will be object-oriented and multithreaded and will consist of numerous separately -

Configurable RISC-V Softcore Processor for FPGA Implementation

Configurable RISC-V softcore processor for FPGA implementation. João Filipe Monteiro Rodrigues, Instituto Superior Técnico, Universidade de Lisboa. Abstract: Over the past years, the processor market has been dominated by proprietary architectures that implement instruction sets that require licensing and the payment of fees to receive permission so they can be used. ARM is an example of one of those companies that sell its microarchitectures to the manufacturers so they can implement them into their own products, and it does not allow the use of its instruction set (ISA) in other implementations without licensing. The RISC-V instruction set appeared proposing the hardware and software development without costs, through the creation of an open-source ISA. This way, it is possible that any project that implements the RISC-V ISA can be made available open-source or even implemented in commercial products. However, the RISC-V solutions that have been developed do not present the needed requirements so they can be included in projects, especially the research projects, because they offer poor documentation, and their performances are not suitable. ... and development of several programming tools. The RISC-V Foundation controls the RISC-V evolution, and its members are responsible for promoting the adoption of RISC-V and participating in the development of the new ISA. In the list of members are big companies like Google, NVIDIA, Western Digital, Samsung, or Qualcomm. The main goal of this work is the development of a RISC-V softcore processor to be implemented in an FPGA, using a non-RISC-V core as the base of this architecture. The proposed solution is focused on solving the problems and limitations identified in the other RISC-V cores that were analyzed in this thesis, especially in terms of the adaptability and flexibility, allowing future modifications according to the -

Intel® Atom™ Processor E6x5C Series-Based Platform for Embedded Computing

Platform Brief. Intel® Atom™ Processor E6x5C Series, Embedded Computing. Intel® Atom™ Processor E6x5C Series-Based Platform for Embedded Computing. Platform Overview: The Intel® Atom™ processor E6x5C series delivers, in a single package, the benefits of the Intel® Atom™ processor E6xx combined with a Field-Programmable Gate Array (FPGA) from Altera. This series offers exceptional flexibility to incorporate a wide range of standard and user-defined I/O interfaces, high-speed connectivity, memory interfaces, and process acceleration to meet the requirements of a variety of embedded applications in industrial, medical, communication, vision systems, voice over Internet protocol (VoIP), military, high-performance programmable logic controllers (PLCs) and embedded computers. The Intel Atom processor E6x5C series is a multi-chip, single-package device that reduces board footprint, lowers component count, and simplifies inventory control and manufacturing. This compact design offers single-vendor support while providing Intel Atom processors for ... Available with industrial and commercial temperature ranges, this processor series provides embedded lifecycle support and is supported by the broad Intel® architecture ecosystem as well as standard Altera development tools. Additionally, a compatible, dedicated Power Management Integrated Circuit (PMIC) solution may be obtained from leading PMIC suppliers to help minimize platform part count and reduce bill of material costs and design complexity. Options include separate PMIC and clock generator chips (available from ROHM Co., Ltd.) or a single-chip solution that integrates the voltage regulator and clock generator (available from Dialog Semiconductor). Product Highlights • Single-package: A compact 37.5 x 37.5 mm, 0.8 mm ball pitch, multi-chip device internally connects the Intel Atom processor E6xx with a user-programmable FPGA. -

Intel Atom® Processor C2000 Product Family for Microservers

Intel® Atom™ Processor C2000 Product Family for Microservers. PRODUCT BRIEF. Maximize Efficiency for Your Lightweight Scale-Out Workloads. Extreme Density and Energy-Efficiency for Low-End, Scale-Out Workloads. With a need to rapidly deliver new services, cope with massive data growth, and contain costs, cloud service providers and hosters seek increasingly efficient ways to handle the demands on their infrastructure. Today’s servers based on Intel® Xeon® processors provide leadership performance and performance per watt with the flexibility to handle a wide range of workloads and peak demands. However, certain lightweight, scale-out workloads, such as basic dedicated hosting, low-end static web serving, and simple content delivery, can sometimes be hosted more efficiently on larger numbers of smaller servers built for extreme power efficiency. To address this need, Intel worked with a broad ecosystem of leading server manufacturers to develop and deliver a variety of extreme low-power systems to support an emerging server category: microservers. With up to 1,000 nodes per rack and shared power, cooling, and networking resources, microservers can help you improve data center efficiency by right-sizing infrastructure for relatively light processing requirements. ... giving you the flexibility to right-size your infrastructure without limiting software mobility and interoperability as your applications evolve. Optimized Platform Support: Intel provides complete microserver platform solutions that simplify implementation, improve overall efficiency and enable higher node density. New innovations include: ... Sidebar: 2nd generation 64-bit Intel® Atom™ Processor C2000 Product Family for microservers • Up to 7x higher performance, up to 6x better performance/watt • 6-20 watt TDP • Up to 1000+ server -

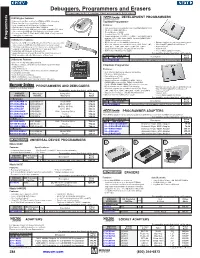

Debuggers, Programmers and Erasers

Debuggers, Programmers and Erasers. Products may be RoHS compliant. Check mouser.com for RoHS status. USB2Wiggler Features: • Universal Serial Bus interface for JTAG and BDM debugging • Faster than the classic parallel port Wiggler • Fully compatible with all Macraigor Systems software • Operates up to Hi-Speed USB rates (480Mb/s) • One side interfaces to USB port of host IBM compatible PC, other side connects to OCD (On-Chip Debug) port of target system • Port may be JTAG, E-JTAG, OnCE, COP, BDM, or any of several other types of connections. USB2Demon Features: • Interfaces to USB 1.1 or USB 2.0 port of host PC on one side, other side connects to OCD (On-Chip Debug) port on target system • Simultaneously debugs up to 255 devices on a single scan chain • Supports configurable JTAG/BDM clock rates up to 20MHz • Compatible with Windows and Linux hosts • Supported versions of Linux: Red Hat 7.2-9 and Fedora Core 2. DEVELOPMENT PROGRAMMERS. TopMaxII Programmer Features: • High speed device programmer and TTL/LOGIC/DRAM tester • PC driven: USB 2.0 interface • Device libraries > 18000 • Standard 48-pin DIL ZIF socket • Supported devices: EPROM, EEPROM, FLASH (NOR/NAND) memory, PLD, FPGA, Serial PROM, Parallel PROM, CMOS PROM, PIC and microcontrollers • B/P/V takes 65 sec. for 64Mbit flash memory • External start key for auto programming mode • All socket adapters are available in stock (USA): PLCC, SOP, SOIC, QFP, TSOP, SON, BGA, TSSOP, and TSOPII • Win 95/98/NT/2000/XP/Vista/Windows 7 • Approved by CE • Supported low-voltages: 1.8/2.0/2.7/3.0/3.3/5.0 volt • Made in USA • Built-in AC (110-240) power supply • Free software update for lifetime. For quantities greater than listed, call for quote. -

Clock Gating for Power Optimization in ASIC Design Cycle: Theory & Practice

Clock Gating for Power Optimization in ASIC Design Cycle: Theory & Practice. Jairam S, Madhusudan Rao, Jithendra Srinivas, Parimala Vishwanath, Udayakumar H, Jagdish Rao, SoC Center of Excellence, Texas Instruments, India (sjairam, bgm-rao, jithendra, pari, uday, j-rao) @ti.com. JS/BGM – ISLPED08. AGENDA • Introduction • Combinational Clock Gating – State of the art – Open problems • Sequential Clock Gating – State of the art – Open problems • Clock Power Analysis and Estimation • Clock Gating In Design Flows. Clock Gating Overview • System level gating: Turn off entire block disabling all functionality. • Conditions for disabling identified by the designer • Suspend clocks selectively • No change to functionality • Specific to circuit structure • Possible to automate gating at RTL or gate-level. Clock Network Power • Clock network power consists of – Clock Tree Buffer Power -

Intel® Industrial IoT Workshop: Security for Industrial Platforms

Intel® Industrial IoT workshop. Security for industrial platforms. Gopi K. Agrawal, Security Architect, IOTG Technical Sales & Marketing, Intel Corporation. Legal. © 2018 Intel Corporation. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from course of performance, course of dealing, or usage in trade. This document contains information on products, services and/or processes in development. All information provided here is subject to change without notice. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps. Intel technologies' features and benefits depend on system configuration and may require enabled hardware, software or service activation. Performance varies depending on system configuration. No computer system can be absolutely secure. Check with your system manufacturer or retailer or learn more at www.intel.com. Intel and the Intel logo are trademarks of Intel Corporation in the U.S. and/or other countries. *Other names and brands may be claimed as the property of others. -

FPGA Architecture: Survey and Challenges

Full text available at: http://dx.doi.org/10.1561/1000000005 FPGA Architecture: Survey and Challenges. Ian Kuon, University of Toronto, Toronto, ON, Canada; Russell Tessier, University of Massachusetts, Amherst, MA, USA; Jonathan Rose, University of Toronto, Toronto, ON, Canada. Boston – Delft. Foundations and Trends® in Electronic Design Automation. Published, sold and distributed by: now Publishers Inc., PO Box 1024, Hanover, MA 02339, USA, Tel. +1-781-985-4510, www.nowpublishers.com. Outside North America: now Publishers Inc., PO Box 179, 2600 AD Delft, The Netherlands, Tel. +31-6-51115274. The preferred citation for this publication is I. Kuon, R. Tessier and J. Rose, FPGA Architecture: Survey and Challenges, Foundations and Trends® in Electronic Design Automation, vol 2, no 2, pp 135–253, 2007. ISBN: 978-1-60198-126-4. © 2008 I. Kuon, R. Tessier and J. Rose. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, mechanical, photocopying, recording or otherwise, without prior written permission of the publishers. Photocopying. In the USA: This journal is registered at the Copyright Clearance Center, Inc., 222 Rosewood Drive, Danvers, MA 01923. Authorization to photocopy items for internal or personal use, or the internal or personal use of specific clients, is granted by now Publishers Inc for users registered with the Copyright Clearance Center (CCC). -

Saber Eletrônica: Editorial, Digital Freemium Edition

Editorial: Digital Freemium Edition. In this issue we celebrate the fantastic figure of 258,395 downloads of digital issue 460 in PDF during its first 50 days in circulation. At this rate we expect to reach half a million in six months. During last year's test phase, with the free digital edition that we call the “Digital Freemium (Free + Premium) Edition”, we already reached that mark and even surpassed it. Our thanks go to everyone who heeded our appeal to download our issues only through the link on the Saber Eletrônica portal, because we need to prove these numbers to advertisers and thereby obtain sponsorship to keep the digital edition free. We also take this opportunity to inform our readers in Portugal, about 6,000 people, that unfortunately the cost of shipping the printed magazines has been high and, at our distributor's request, we will no longer send printed copies for distribution in the Portuguese market and the former colonies in Africa. In June we will publish this semester's special issue, whose main subject is embedded electronics (“eletrônica embutida”), or, as the Spanish and Portuguese say, “electrónica embebida”. As a milestone, the Transamérica Expo Center in São Paulo will also host the 2nd edition of ESC Brazil 2012 and the 1st MD&M; the largest technology event for the electronic design market is being promoted this year by UBM together with the first event for the medical/dental sector (MD&M Brazil). Masthead: Editora Saber Ltda. Director: Hélio Fittipaldi. www.sabereletronica.com.br, twitter.com/editora_saber. Editor and Responsible Director: Hélio Fittipaldi. Editorial Board: João Antonio Zuffo. Editorial staff: Hélio Fittipaldi, Augusto Heiss. Technical review: Eutíquio Lopez. Designers: Carlos C. Tartaglioni, Diego M. Gomes. Advertising: Caroline Ferreira, Nikole Barros. Contributors: Alexandre Capelli, Bruno Venâncio, César Cassiolato, Dante J. S. Conti, Edriano C. de Araújo, Eutíquio Lopez, Tsunehiro Yamabe. To advertise: (11) 2095-5339. -

Intel Atom® Processor C3000 Series for Embedded and IoT Applications: Product Brief

Product brief. Internet of Things. Intel Atom® Processor C3000 Series. Expanding Intelligence and Flexibility at the Edge. Scalable, dense-compute SoC for demanding IoT workloads. From the factory floor to the energy grid, airplanes to supply chains, the sensors, controls, gateways, and other connected devices of the Internet of Things (IoT) are driving the next industrial revolution. As IoT continues its explosive growth, the need for intelligent devices for more specialized applications is also growing exponentially. Industrial, energy, aerospace, robotics, public sector, and other customers with demanding IoT workloads want new ways to easily extract value from their data, reduce their time to market, and innovate connected technologies quickly and efficiently. Moreover, they increasingly require reliable IoT solutions that bring maximum performance and greater capabilities to an ever-expanding array of challenging locations and operating conditions. The Intel Atom® processor C3000 series extends low-power Intel® architecture into new segments and accelerates IoT innovation across a wide range of demanding environments and use cases. With high performance per watt, low thermal design power (TDP) of 9.5W, up to 20 configurable high-speed input/output (HSIO) lanes, and pin-to-pin compatibility, this new system-on-a-chip (SoC) family delivers next-generation, multicore performance and scalability for a broad variety of low-power, high-density, and fanless designs. Multicore scalability: With the Intel Atom processor C3000 series, customers are able to scale performance and achieve workload consolidation in situations and use cases that require very low power, high density, and high I/O integration. Designed in an FCBGA 34mm x 28mm compact form factor, this SoC-based CPU is manufactured on Intel’s optimized 14nm process technology, available from 2 to 12 cores from 1.6 to 2.0 GHz, and includes up to 256 GB DDR4 2133 MHz ECC (SODIMM, UDIMM, or RDIMM) of addressable memory. Sidebar: Up to 2.3X better performance