FastFlow: Combining Pattern-Level Abstraction and Efficiency in GPGPUs

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications


Curriculum Vitae

CURRICULUM VITAE - ANNA DE GRASSI PERSONAL INFORMATION Surname Name De Grassi Anna Date of birth 03-02-1976 Department Bioscienze, Biotecnologie e Biofarmaceutica Institution Università degli Studi di Bari “Aldo Moro” Street Address Via Orabona 4, 70125 Bari - Italy. Phone/Fax +39 080 5443614/ +39 080 5442770 E-mail address [email protected] Researcher Orcid Identifier https://orcid.org/0000-0001-7273-4263 EDUCATION 2007 PhD in Genetics and Molecular Evolution, University of Bari – Italy 2004 Master Diploma in Bioinformatics, University of Turin – Italy, 110/110 2003 Qualification to practice the profession of Biologist 2002 University Diploma in Biological Sciences, University of Bari – Italy, 110/110 cum laude 1994 Secondary School Diploma, Liceo Classico Q. Orazio Flacco, Bari – Italy, 60/60 RESEARCH ACTIVITY IN ITALY From October 2012 Assistant Professor (BIO/13), Department of Biosciences, Biotechnology and Biopharmaceutics, University of Bari, Computational Genomics applied to Cancer and Rare Diseases 2007-2011 Post-Doc and Staff Scientist, Department of Experimental Oncology, European Institute of Oncology, Milan, Deep-Sequencing and Evolution of Cancer Genomes 2004-2007 PhD student, CNR – Institute of Biomedical Technologies (ITB), Bari, Comparative Genomics of gene families in vertebrates and Microarray Analysis of Human Cancer Cell Models 2003-2004 Master Diploma Student, Department of Clinical and Biological Sciences, University of Turin, Detection and Analysis of Genes Involved in Breast Tumor Progression in the her/neut Mouse Model Using Expression Microarrays 2003 Graduate Student, Cavalieri Ottolenghi Foundation, Turin, Actin-Tubulin Interaction in D. melanogaster Embryonic Cell Lines 2001-2002 Undergraduate Student, Department of Genetics, University of Bari, Evolution of Stellate-like and Crystal-like Sequences in the Genus Drosophila RESEARCH ACTIVITY ABROAD Dec. -

Laboratorio Di Ontologia

Università degli Studi di Torino, Laboratorio di Ontologia, estroverso.com, Società Italiana di Filosofia Teoretica. INTERNATIONAL CHAIR OF PHILOSOPHY JACQUES DERRIDA / LAW AND CULTURE, FOURTH EDITION. POST-TRUTH, NEW REALISM, DEMOCRACY

October 23rd, morning session: 9.20 - 10.00 Greetings: Gianmaria Ajani (Rector, University of Turin), Renato Grimaldi (Director of the Department of Philosophy and Educational Sciences, University of Turin), Pier Franco Quaglieni (Director of Centro Pannunzio, Turin), Tiziana Andina (Director of LabOnt, University of Turin); 10.00 - 10.20 Prize Award Ceremony: Maurizio Ferraris (President of LabOnt, University of Turin); 10.20 - 11.10 Lectio Magistralis by Günter Figal (University of Freiburg), On Freedom; 11.10 - 11.30 Coffee break; 11.30 - 12.00 Angela Condello (University of Roma Tre, University of Turin), Post-truth and Socio-legal Studies; 12.00 - 12.30 Maurizio Ferraris (President of LabOnt, University of Turin), Deconstructing Post-truth. Afternoon session: 15.00 - 15.30 Luca Illetterati (University of Padua), Post-truth and Authority. -

October 24th, morning session: 10.00 - 10.30 Sanja Bojanic (University of Rijeka), What Mocumentaries Tell Us about Truth?; 10.30 - 11.00 Sebastiano Maffettone (LUISS Guido Carli University), Derrida and the Political Culture of Post-colonialism; 11.00 - 11.30 Coffee break; 11.30 - 12.00 Elisabetta Galeotti (University of Eastern Piedmont), Types of Political Deception. Afternoon session: 14.30 - 15.00 Jacopo Domenicucci (École Normale Supérieure, Paris), The Far We(b)st. Making Sense of Trust in Digital Contexts; 15.00 - 15.30 Davide Pala (University of Turin), Citizens, Post-truth and Justice; 15.30 - 16.00 Break; 16.00 - 16.30 Sara Guindani (Fondation Maison des sciences de l’homme, Paris), Derrida and the Impossible Transparency - Aesthetics and Politics.

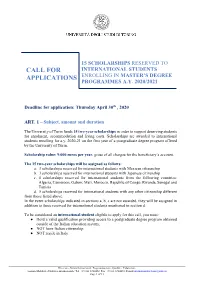

International Students Applications Enrolling in Master’s Degree Programmes A.Y

CALL FOR APPLICATIONS: 15 SCHOLARSHIPS RESERVED TO INTERNATIONAL STUDENTS ENROLLING IN MASTER’S DEGREE PROGRAMMES A.Y. 2020/2021 Deadline for application: Thursday April 30th, 2020 ART. 1 – Subject, amount and duration The University of Turin funds 15 two-year scholarships in order to support deserving students for enrolment, accommodation and living costs. Scholarships are awarded to international students enrolling for a.y. 2020-21 on the first year of a postgraduate degree program offered by the University of Turin. Scholarship value: 9,000 euros per year, gross of all charges for the beneficiary’s account. The 15 two-year scholarships will be assigned as follows: a. 3 scholarships reserved for international students with Mexican citizenship b. 3 scholarships reserved for international students with Japanese citizenship c. 4 scholarships reserved for international students from the following countries: Algeria, Cameroon, Gabon, Mali, Morocco, Republic of Congo, Rwanda, Senegal and Tunisia d. 5 scholarships reserved for international students with any other citizenship different from those listed above. In the event scholarships indicated in sections a, b, c are not awarded, they will be assigned in addition to those reserved for international students mentioned in section d. To be considered an international student eligible to apply for this call, you must: ● Hold a valid qualification providing access to a postgraduate degree program obtained outside of the Italian education system; ● NOT have Italian citizenship; ● NOT reside in Italy. Direzione Attività Istituzionali, Programmazione, Qualità e Valutazione Sezione Mobilità e Didattica internazionale; Tel. +39 011 6704452; Fax +39 011 6704494; E-mail [email protected] ART. -

Print Special Issue Flyer

Special Issue: Anaerobic Digestion Processes. An Open Access Journal by MDPI. Impact Factor 2.847, CiteScore 2.4 (Scopus). Guest Editors: Dr. Pietro Bartocci, CRB Italian Biomass Research Centre, University of Perugia, 06100 Perugia, Italy, [email protected]; Prof. Qing Yang, John A. Paulson School of Engineering and Applied Sciences, Harvard University, Cambridge, MA 02138, USA, [email protected]; Prof. Francesco Fantozzi, Department of Industrial Engineering, University of Perugia, Via G. Duranti 67, 06125 Perugia, Italy, [email protected]. Deadline for manuscript submissions: 30 November 2021. mdpi.com/si/30853 Message from the Guest Editors: This Special Issue on "Anaerobic Digestion Processes" aims to curate novel advances in biogas production and use, focusing both on modeling and experimental campaigns. Topics include, but are not limited to, the following: Modeling of anaerobic digestion and biogas production; Organic substrate characterization and pre-treatment; Biogas purification and biomethane production and use; Biogas combustion in engines and turbines. Editor-in-Chief: Prof. Dr. Giancarlo Cravotto, Department of Drug Science and Technology, University of Turin, Via P. Giuria 9, 10125 Turin, Italy. Message from the Editor-in-Chief: Processes (ISSN 2227-9717) provides an advanced forum for process/system-related research in chemistry, biology, material, energy, environment, food, pharmaceutical, manufacturing and allied engineering fields. The journal publishes regular research papers, communications, letters, short notes and reviews. Our aim is to encourage researchers to publish their experimental, theoretical and computational results in as much detail as necessary. There is no restriction on paper length or number of figures and tables. -

Elisabetta Listorti

ELISABETTA LISTORTI Via Parma 24/C, Torino, 10152, Italy | 0039 3398779760 | [email protected] Date - place of birth: November 21st, 1990 - Lanciano (Ch) – Italy WORK EXPERIENCE From 1st October 2019: Centre for Research on Health and Social Care Management, Bocconi University, Milan, Italy. From 1st May 2018 to 30th September 2019: Epidemiology Unit ASL TO3 (S.C. A D.U. Servizio sovrazonale di epidemiologia), Grugliasco, Italy. From 1st April 2019 to 30th September 2019: Scholarship for the Department of Clinical and Biological Sciences, University of Turin, Italy. Competences acquired: Management of research projects: identification and development of potential areas of research; literature review; data analysis; production, communication and presentation of research outputs; coordination of a research group. EDUCATION From January 2019: Master in Epidemiology, University of Turin, Italy. Courses of Cohort Studies, Case Control Studies, Clinical Trials, Systematic Reviews, Survival Analysis, Geo statistical methods. November 2014 – October 2018: Ph.D. cum laude in Management, Production and Design, Politecnico di Torino, Italy. PhD thesis “Hospital procedures concentration: how to combine quality and patient choice. A managerial use of the volume-outcome association” Research topic: Planning problems in health care systems. Development of operational research models for volume allocation; use of econometric models for patient choice; creation of managerial algorithms for integrated approaches. October 2012- Engineering and Management (Postgraduate -

Monica Bucciarelli Date of Birth: April the 19th 1968 Nationality: Italian

MONICA BUCCIARELLI CURRICULUM VITAE Personal Information Name: Monica Bucciarelli Date of birth: April the 19th 1968 Nationality: Italian Current/past positions 1997-2000 Researcher in General Psychology Department of Psychology and Faculty of Psychology, University of Turin 2000-2006 Associate Professor in General Psychology Department of Psychology and Faculty of Sciences of Education and Training, University of Turin 2006- Full Professor of General Psychology Department of Psychology, University of Turin Education 1990 Degree in Pedagogy from the University of Florence 1994 Ph.D in Developmental Psychology at the University of Florence 1995-1997 Post-Doctoral Research Fellowship at the University of Turin 1997 Degree in Psychology from the University of Turin 2005 Master in Cognitive Psychotherapy Italian Society for Behavior and Cognitive Therapy Teaching A.Y. 2002-2003 / 2012-2013 lecturer in The Psychology of Learning (Second-level degree in Education, Faculty of Education and Training, University of Turin) – 54 hours per year Since A.Y. 2000-2001 lecturer in General Psychology (Three-year degree course in Education, Faculty of Education and Training, University of Turin) – 54 hours per year Since A.Y. 2013-2014 lecturer in The Psychology of Reasoning (Second-level degree in Psychology, Department of Psychology) – 60 hours per year Since A.Y. 2000 Member of the teaching body of the Doctoral School in Neurosciences, University of Turin Research collaborations • Collaboration with the Department of Psychology of Florence University since 1987. • Working at the Department of Psychology and the Center for Cognitive Science of Turin University since 1990. 1 • Working on joint research projects between Italy and the U.S.A and, as part of this collaboration, Visiting Research Fellow - laboratory of Prof. -

Shroud of Turin, Catania Scholars Dispute the Statistics Published In

The dating of the Holy Shroud is to be redone: the measurements made at the end of the 1980s with radiocarbon and published by the prestigious journal Nature turned out to be unreliable, in light of the current availability of the raw data of those measurements and new and more accurate statistical analysis tools. This is what statisticians, historians, physicists and mathematicians claim in the article recently published by the journal Archaeometry (2019) "Radiocarbon dating of the Turin Shroud: new evidence from raw data", presented this morning during a conference held in the great hall of the University of Catania. Opening the proceedings, the rector Francesco Basile underlined the importance of hosting a debate in Catania that fascinates and attracts scholars from all over the world, through the contribution of internationally renowned scholars who are inspired by the availability of new acquisitions. The director of the Department of Economics and Business Michela Cavallaro pointed out, in particular, the interdisciplinary nature of this research project and the potential impact in the scientific world. The mayor Salvo Pogliese, on the other hand, underlined the contribution of the University, with its scientific and educational excellences, to the relaunching of the city of Catania, afflicted at this particular historical moment by the scourge of instability. "There is no conclusive evidence that the Shroud is medieval," Professor Benedetto Torrisi, associate professor of Economic Statistics at the University, said. Many researchers, in the last thirty years, had requested, in vain, the publication of the raw data of the three laboratories (Oxford, Arizona, Zurich) that carried out the analyses in 1988, and finally, in 2017, Dr. -

Curriculum Vitae

Douglas Mark Ponton Via Cava Gucciardo Pirato 1d1 97015 Modica (RG) Italy Tel: 338 5786999 Email: [email protected], [email protected] Curriculum Vitae Education Phd University of Catania, Italy. Major: English and American Studies (2007) Masters (MSc) 2008 Aston University, Birmingham, England. Major: TESOL (2008) B.A. (hons) Sidney Sussex College, Cambridge University. Major: English Literature, Minor: French. (1980). M.A. (hons) Sidney Sussex College, Cambridge University. Major: English Literature, Minor: French. (1987). Certificate Trinity College, London. Major: TESOL (1997). Diploma Trinity College, London.Major: TESOL (2002). Secondary school (1969- 1976): St. Albans School, Abbey Gateway, St. Albans, Herts. Oxford & Cambridge Examination Board: 3 A Levels (English (grade A), History (grade A), Economics (grade D). Italian national abilitation for English teaching in schools: Passed national competition (mark 80/80) (2001). Italian National Abilitation for Associate Professor (2014) Work Experience Associate Professor, University of Catania 2017 - the present Researcher /Assistant Professor at the University of Catania. 2009 - 2017 - Academic research and participation in conferences. Planning courses and giving lessons at undergraduate, masters and phd levels, administration of written and oral exams, student counselling, overseeing theses, attending degree course meetings, participating in final exam commissions. - Co-ordinator for languages in the department, liaising with lecturers in English, French and German. Head of exam commissions for language skills exams. - Member of academic staff for degree courses in Science of Administration and Organisation (first level degree) and Internationalization of Commercial Relations, and Global Politics and Euro-Mediterranean Relations (Masters level). Teaching in the following degree courses: - 2009 - present. Scienze dell’Amministrazione (Bachelors’). -

SCIENTIFIC COMMITTEE Giancarlo Cravotto Department of Drug

GENP 2018 III Edition “Green Extraction of Natural Products” University Aldo Moro, Bari, Italy, November 12-13, 2018 SCIENTIFIC COMMITTEE Giancarlo Cravotto Department of Drug Science and Technology, University of Turin, Italy, PhD Farid Chemat University of Avignon, GREEN, France, PhD Jochen Strube Institute for Separation and Process Technology, Clausthal University of Technology, Germany, PhD Francisco Barba Department of Food Science, University of Copenhagen, Denmark, PhD Ivana Radojčić Redovniković Department of Biochemical Engineering University of Zagreb Patrizia Perego Department of Civil, Chemical and Environmental Engineering, University of Genova Italy Filomena Corbo Department of Pharmacy – Drug Science, University Aldo Moro Bari, Italy PhD Maria Lisa Clodoveo Interdisciplinary Department of Medicine University Aldo Moro Bari, Italy Carlo Franchini Department of Pharmacy – Drug Science, University Aldo Moro Bari, Italy PhD Riccardo Amirante Department of Mechanics, Mathematics and Management, Polytechnic of Bari, Italy PhD Program November 12th 9.00 Registration and Welcome 10.00 Opening Ceremony and meeting presentation Antonio Felice Uricchio - Rector of University Aldo Moro Bari (UNIBA) Eugenio Di Sciascio - Rector of Polytechnic of Bari Angela Agostiano - President SCI (Italian Chemical Society) Gianluca Farinola - President of EuCheMs Organic Chemistry Division Francesco Leonetti - Director of Department of Pharmacy-Drug Science, UNIBA Carlo Sabbà - Director of Interdisciplinary Department of Medicine UNIBA Topic Innovative extraction -

Table of Contents

Table of Contents. Preface. Acknowledgment. Section 1: Managing Global Luxury Brands in the Era of Digitalisation. Chapter 1: The Evolution of Distribution in the Luxury Sector: From Single to Omni-Channel. Fabrizio Mosca, University of Turin, Italy; Elisa Giacosa, University of Turin, Italy; Luca Matteo Zagni, University of Turin, Italy. Chapter 2: Omnichannel Shopping Experiences for Fast Fashion and Luxury Brands: An Exploratory Study. Cesare Amatulli, University of Bari, Italy; Matteo De Angelis, LUISS University, Italy; Andrea Sestino, University of Bari, Italy; Gianluigi Guido, University of Salento, Italy. Chapter 3: Examining the Integration of Virtual and Physical Platforms From Luxury Brand Managers’ Perspectives. Paola Peretti, The IULM University, Italy; Valentina Chiaudano, University of Turin, Italy; Mohanbir Sawhney, Kellogg School of Management, Northwestern University, USA. Chapter 4: Managing Integrated Brand Communication Strategies in the Online Era: New Marketing Frontiers for Luxury Goods -

Riccardo Scalenghe

SHORT CV riccardo scalenghe > field of teaching for the past 4 years: pedology, soil science > research topics: periurban environment, anthropogenic changes, soil bio-geocycling, soil pollution, land evaluation. I was born in Turin in 1965, married, in our family we are four. I am researcher in pedology (former SSD AGR/14) at the University of Palermo since the year 2000. In 1989, I graduated in Agricultural Sciences at the University of Turin, the same year I obtained the professional qualification to practice as agronomist. In the past, I was part of the technical/scientific staff of the Einstein Committee ('Project Ecology', Rome, 1985 and 'The Breath of the City', Turin, 1986). Later, I was in charge for the Hydrometric Center of the ‘Coutenza Canali Cavour’ (Consorzio Irriguo Est Sesia, Novara, 1989-91) and environmental agronomist in land use planning (Consulagri, Turin, 1992-94). In 1996, I obtained the research doctorate in Soil Chemistry from the University of Pisa. I lectured Chemistry of Materials (Turin University, ay 1997/98), Soil Chemistry and Applied Pedology (Genoa University, ay 1997/98), Chemistry (University of Turin, ay 1998/99), Applied Soil Science (Turin University, ay 1999/00); Classification and Soil Mapping, Soil Geography, Applied Pedology, Techniques of Land Evaluation (Palermo University, since ay 2000/01). I was the co-scientific director of the Master in Sustainability Interuniversity Consortium among Bocconi University, Chieti, L'Aquila, Teramo and Turin Universities (ay 1997/99). I was a member of the academic board of the PhD in Soil Science (ay 2000/04) and in Hydraulics (ay 2005/07) at the University of Palermo. -

European Papers

European Papers A Journal on Law and Integration Vol. 4, 2019, N0 2 www.europeanpapers.eu European Papers – A Journal on Law and Integration (www.europeanpapers.eu) Editors Ségolène Barbou des Places (University Paris 1 Panthéon-Sorbonne); Enzo Cannizzaro (University of Rome “La Sapienza”); Gareth Davies (VU University Amsterdam); Christophe Hillion (Universities of Leiden, Gothenburg and Oslo); Adam Lazowski (University of Westminster, London); Valérie Michel (University Paul Cézanne Aix-Marseille III); Juan Santos Vara (University of Salamanca); Ramses A. Wessel (University of Twente). Associate Editor Nicola Napoletano (University of Rome “Unitelma Sapienza”). European Forum Editors Charlotte Beaucillon (University of Lille); Stephen Coutts (University College Cork); Daniele Gallo (Luiss “Guido Carli” University, Rome); Paula García Andrade (Comillas Pontifical University, Madrid); Theodore Konstatinides (University of Essex); Stefano Montaldo (University of Turin); Benedikt Pirker (University of Fribourg); Oana Stefan (King’s College London); Charikleia Vlachou (University of Orléans); Pieter Van Cleynenbreugel (University of Liège). Scientific Board M. Eugenia Bartoloni (University of Campania “Luigi Vanvitelli”); Uladzislau Belavusau (University of Amsterdam); Marco Benvenuti (University of Rome “La Sapienza”); Francesco Bestagno (Catholic University of the Sacred Heart, Milan); Giacomo Biagioni (University of Cagliari); Marco Borraccetti (University of Bologna); Susanna Maria Cafaro (University of Salento); Roberta Calvano (University