Democracy Under Threat: Risks and Solutions in the Era of Disinformation and Data Monopoly

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Debates of the House of Commons

43rd PARLIAMENT, 2nd SESSION House of Commons Debates Official Report (Hansard) Volume 150 No. 008 Friday, October 2, 2020 Speaker: The Honourable Anthony Rota CONTENTS (Table of Contents appears at back of this issue.) 467 HOUSE OF COMMONS Friday, October 2, 2020 The House met at 10 a.m. dence that the judge in their case will enforce sexual assault laws fairly and accurately, as Parliament intended. Prayer [English] It has never been more critical that all of us who serve the public GOVERNMENT ORDERS are equipped with the right tools and understanding to ensure that everyone is treated with the respect and dignity that they deserve, ● (1005) no matter what their background or their experiences. This would [English] enhance the confidence of survivors of sexual assault and the Cana‐ dian public, more broadly, in our justice system. There is no room JUDGES ACT in our courts for harmful myths or stereotypes. Hon. David Lametti (Minister of Justice, Lib.) moved that Bill C-3, An Act to amend the Judges Act and the Criminal Code, be read the second time and referred to a committee. I know that our government's determination to tackle this prob‐ lem is shared by parliamentarians from across Canada and of all He said: Mr. Speaker, I am pleased to stand in support of Bill political persuasions. The bill before us today will help ensure that C-3, an act to amend the Judges Act and the Criminal Code, which those appointed to a superior court would undertake to participate is identical to former Bill C-5. -

Evidence of the Special Committee on the COVID

43rd PARLIAMENT, 1st SESSION Special Committee on the COVID-19 Pandemic EVIDENCE NUMBER 019 Tuesday, June 9, 2020 Chair: The Honourable Anthony Rota 1 Special Committee on the COVID-19 Pandemic Tuesday, June 9, 2020 ● (1200) Mr. Paul Manly (Nanaimo—Ladysmith, GP): Thank you, [Translation] Madam Chair. The Acting Chair (Mrs. Alexandra Mendès (Brossard— It's an honour to present a petition for the residents and con‐ Saint-Lambert, Lib.)): I now call this meeting to order. stituents of Nanaimo—Ladysmith. Welcome to the 19th meeting of the Special Committee on the Yesterday was World Oceans Day. This petition calls upon the COVID-19 Pandemic. House of Commons to establish a permanent ban on crude oil [English] tankers on the west coast of Canada to protect B.C.'s fisheries, tourism, coastal communities and the natural ecosystems forever. I remind all members that in order to avoid issues with sound, members participating in person should not also be connected to the Thank you. video conference. For those of you who are joining via video con‐ ference, I would like to remind you that when speaking you should The Acting Chair (Mrs. Alexandra Mendès): Thank you very be on the same channel as the language you are speaking. much. [Translation] We now go to Mrs. Jansen. As usual, please address your remarks to the chair, and I will re‐ Mrs. Tamara Jansen (Cloverdale—Langley City, CPC): mind everyone that today's proceedings are televised. Thank you, Madam Chair. We will now proceed to ministerial announcements. I'm pleased to rise today to table a petition concerning con‐ [English] science rights for palliative care providers, organizations and all health care professionals. -

NDP Response - Canadian Paediatric Society (CPS)

NDP Response - Canadian Paediatric Society (CPS) Establishing Child-Friendly Pharmacare 1. What will your party do to ensure that all children and youth have equitable access to safe, effective and affordable prescription drugs? An NDP government will implement a comprehensive, public universal pharmacare system so that every Canadian, including children, has access to the medication they need at no cost. 2. What measures will your party take to ensure that Canada has a national drug formulary and compounding registry that is appropriate for the unique needs of paediatric patients? An NDP government would ensure that decisions over what drugs to cover in the national formulary would be made by an arm’s-length body that would negotiate with drug companies. This would ensure that the needs of Canadians – from paediatric to geriatric – are considered. The NDP will work with paediatricians, health professionals, and other stakeholders to ensure that the formulary meets the needs of Canadians and that children and youth have access to effective and safe medication that will work for them. 3. How will your party strengthen Canada’s regulatory system to increase the availability and accessibility of safe paediatric medications and child- friendly formulations? The best way to increase the availability and accessibility of safe paediatric medications and child-friendly formulations is by establishing a comprehensive, national public pharmacare system because it gives Canada the strongest negotiating power with pharmaceutical companies. An NDP government will work with patients, caregivers, health professionals, and pharmaceutical companies to ensure that the best interest of Canadians will not continue to be adversely impacted by the long-awaited need for universal pharmacare. -

PRISM::Advent3b2 9.00

CANADA House of Commons Debates VOLUME 141 ● NUMBER 061 ● 1st SESSION ● 39th PARLIAMENT OFFICIAL REPORT (HANSARD) Friday, October 6, 2006 Speaker: The Honourable Peter Milliken CONTENTS (Table of Contents appears at back of this issue.) Also available on the Parliament of Canada Web Site at the following address: http://www.parl.gc.ca 3747 HOUSE OF COMMONS Friday, October 6, 2006 The House met at 10 a.m. Being from a Scottish background I would think of what my grandmother would say now. She would talk about Such A Parcel Of Rogues In A Nation: Prayers What force or guile could not subdue, Thro' many warlike ages, Is wrought now by a coward few, For hireling traitor's wages. GOVERNMENT ORDERS We're bought and sold for English gold- Such a parcel of rogues in a nation! ● (1005) [English] There is a fundamental difference between the parcel of rogues who sold out Scotland and the parcel of rogues that are selling out SOFTWOOD LUMBER PRODUCTS EXPORT CHARGE our resource industry right now. At least the chieftains who sold out ACT, 2006 their own people in Scotland got some money for it. The House resumed from September 25 consideration of the We are being asked in Parliament to pay money, so that we can motion that Bill C-24, An Act to impose a charge on the export of sell ourselves out. I think that is an unprecedented situation. We are certain softwood lumber products to the United States and a charge seeing that the communities I represent no longer matter to the on refunds of certain duty deposits paid to the United States, to government. -

PRISM::Advent3b2 8.25

HOUSE OF COMMONS OF CANADA CHAMBRE DES COMMUNES DU CANADA 39th PARLIAMENT, 1st SESSION 39e LÉGISLATURE, 1re SESSION Journals Journaux No. 1 No 1 Monday, April 3, 2006 Le lundi 3 avril 2006 11:00 a.m. 11 heures Today being the first day of the meeting of the First Session of Le Parlement se réunit aujourd'hui pour la première fois de la the 39th Parliament for the dispatch of business, Ms. Audrey première session de la 39e législature, pour l'expédition des O'Brien, Clerk of the House of Commons, Mr. Marc Bosc, Deputy affaires. Mme Audrey O'Brien, greffière de la Chambre des Clerk of the House of Commons, Mr. R. R. Walsh, Law Clerk and communes, M. Marc Bosc, sous-greffier de la Chambre des Parliamentary Counsel of the House of Commons, and Ms. Marie- communes, M. R. R. Walsh, légiste et conseiller parlementaire de Andrée Lajoie, Clerk Assistant of the House of Commons, la Chambre des communes, et Mme Marie-Andrée Lajoie, greffier Commissioners appointed per dedimus potestatem for the adjoint de la Chambre des communes, commissaires nommés en purpose of administering the oath to Members of the House of vertu d'une ordonnance, dedimus potestatem, pour faire prêter Commons, attending according to their duty, Ms. Audrey O'Brien serment aux députés de la Chambre des communes, sont présents laid upon the Table a list of the Members returned to serve in this dans l'exercice de leurs fonctions. Mme Audrey O'Brien dépose sur Parliament received by her as Clerk of the House of Commons le Bureau la liste des députés qui ont été proclamés élus au from and certified under the hand of Mr. -

The Cambridge Analytica Files

For more than a year we’ve been investigating Cambridge Analytica and its links to the Brexit Leave campaign in the UK and Team Trump in the US presidential election. Now, 28-year-old Christopher Wylie goes on the record to discuss his role in hijacking the profiles of millions of Facebook users in order to target the US electorate. By Carole Cadwalladr, Sun 18 Mar 2018 06:44 EDT. The first time I met Christopher Wylie, he didn’t yet have pink hair. That comes later. As does his mission to rewind time. To put the genie back in the bottle. By the time I met him in person [www.theguardian.com/uk-news/video/2018/mar/17/cambridge-analytica-whistleblower-we-spent-1m-harvesting-millions-of-facebook-profiles-video], I’d already been talking to him on a daily basis for hours at a time. On the phone, he was clever, funny, bitchy, profound, intellectually ravenous, compelling. A master storyteller. A politicker. A data science nerd. Two months later, when he arrived in London from Canada, he was all those things in the flesh. And yet the flesh was impossibly young. He was 27 then (he’s 28 now), a fact that has always seemed glaringly at odds with what he has done. He may have played a pivotal role in the momentous political upheavals of 2016. At the very least, he played a consequential role. At 24, he came up with an idea that led to the foundation of a company called Cambridge Analytica, a data analytics firm that went on to claim a major role in the Leave campaign for Britain’s EU membership referendum, and later became a key figure in digital operations during Donald Trump’s election [www.theguardian.com/us-news/2016/nov/09/how-did-donald-trump-win-analysis] campaign. From www.theguardian.com/news/2018/mar/17/data-war-whistleblower-christopher-wylie-faceook-nix-bannon-trump, 20 March 2018. -

A Layman's Guide to the Palestinian-Israeli Conflict

CJPME’s Vote 2019 Elections Guide « Vote 2019 » Guide électoral de CJPMO A Guide to Canadian Federal Parties’ Positions on the Middle East Guide sur la position des partis fédéraux canadiens à propos du Moyen-Orient Assembled by Canadians for Justice and Peace in the Middle East Préparé par Canadiens pour la justice et la paix au Moyen-Orient September, 2019 / septembre 2019 © Canadians for Justice and Peace in the Middle East Preface Préface Canadians for Justice and Peace in the Middle East Canadiens pour la paix et la justice au Moyen-Orient (CJPME) is pleased to provide the present guide on (CJPMO) est heureuse de vous présenter ce guide Canadian Federal parties’ positions on the Middle électoral portant sur les positions adoptées par les East. While much has happened since the last partis fédéraux canadiens sur le Moyen-Orient. Canadian Federal elections in 2015, CJPME has Beaucoup d’eau a coulé sous les ponts depuis les élections fédérales de 2015, ce qui n’a pas empêché done its best to evaluate and qualify each party’s CJPMO d’établir 13 enjeux clés relativement au response to thirteen core Middle East issues. Moyen-Orient et d’évaluer les positions prônées par chacun des partis vis-à-vis de ceux-ci. CJPME is a grassroots, secular, non-partisan organization working to empower Canadians of all CJPMO est une organisation de terrain non-partisane backgrounds to promote justice, development and et séculière visant à donner aux Canadiens de tous peace in the Middle East. We provide this horizons les moyens de promouvoir la justice, le document so that you – a Canadian citizen or développement et la paix au Moyen-Orient. -

Core 1..174 Hansard (PRISM::Advent3b2 17.25)

House of Commons Debates VOLUME 148 ● NUMBER 006 ● 1st SESSION ● 42nd PARLIAMENT OFFICIAL REPORT (HANSARD) Thursday, December 10, 2015 Speaker: The Honourable Geoff Regan CONTENTS (Table of Contents appears at back of this issue.) 203 HOUSE OF COMMONS Thursday, December 10, 2015 The House met at 10 a.m. CRIMINAL CODE Mr. Jim Eglinski (Yellowhead, CPC) moved for leave to Prayer introduce Bill C-206, An Act to amend the Criminal Code (abuse of vulnerable persons). ROUTINE PROCEEDINGS He said: Mr. Speaker, as this is the first time I rise in the 42nd Parliament, I would like to congratulate all of my fellow members of ● (1000) Parliament from across Canada, and you, sir, for being elected as our [Translation] Speaker. I would like to thank the constituents of my great riding for putting their support behind me to be their representative in Ottawa. OFFICE OF THE PRIVACY COMMISSIONER The Speaker: Pursuant to section 38 of the Privacy Act, I have the honour to lay upon the table the annual report of the Privacy I am pleased to stand in the House today to table my first private Commissioner for the fiscal year ending March 31, 2015. Pursuant to member's bill, an act to amend the Criminal Code on abuse of Standing Order 108(3)(h), this report is deemed permanently referred vulnerable persons. The bill would amend section 718.2 of the to the Standing Committee on Access to Information, Privacy and Criminal Code by making tougher penalties for an offender who Ethics. knows or reasonably should know that a person is an elder or other vulnerable person, and wilfully exploits or takes advantage of that *** person through financial, physical, sexual, or emotional abuse. -

Investigation Into the Use of Data Analytics in Political Campaigns

Information Commissioner’ Investigation into the use of data analytics in political campaigns. Investigation update, 11 July 2018.
Contents
Executive summary
1. Introduction
2. The investigation
3. Regulatory enforcement action and criminal offences
3.1 Failure to properly comply with the Data Protection Principles;
3.2 Failure to properly comply with the Privacy and Electronic Communications Regulations (PECR);
3.3 Section 55 offences under the Data Protection Act 1998
4. Interim update
4.1 Political parties
4.2 Social media platforms -

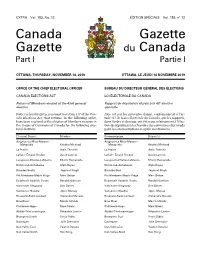

Canada Gazette, Part I

EXTRA Vol. 153, No. 12 ÉDITION SPÉCIALE Vol. 153, no 12 Canada Gazette Gazette du Canada Part I Partie I OTTAWA, THURSDAY, NOVEMBER 14, 2019 OTTAWA, LE JEUDI 14 NOVEMBRE 2019 OFFICE OF THE CHIEF ELECTORAL OFFICER BUREAU DU DIRECTEUR GÉNÉRAL DES ÉLECTIONS CANADA ELECTIONS ACT LOI ÉLECTORALE DU CANADA Return of Members elected at the 43rd general Rapport de député(e)s élu(e)s à la 43e élection election générale Notice is hereby given, pursuant to section 317 of the Can- Avis est par les présentes donné, conformément à l’ar- ada Elections Act, that returns, in the following order, ticle 317 de la Loi électorale du Canada, que les rapports, have been received of the election of Members to serve in dans l’ordre ci-dessous, ont été reçus relativement à l’élec- the House of Commons of Canada for the following elec- tion de député(e)s à la Chambre des communes du Canada toral districts: pour les circonscriptions ci-après mentionnées : Electoral District Member Circonscription Député(e) Avignon–La Mitis–Matane– Avignon–La Mitis–Matane– Matapédia Kristina Michaud Matapédia Kristina Michaud La Prairie Alain Therrien La Prairie Alain Therrien LaSalle–Émard–Verdun David Lametti LaSalle–Émard–Verdun David Lametti Longueuil–Charles-LeMoyne Sherry Romanado Longueuil–Charles-LeMoyne Sherry Romanado Richmond–Arthabaska Alain Rayes Richmond–Arthabaska Alain Rayes Burnaby South Jagmeet Singh Burnaby-Sud Jagmeet Singh Pitt Meadows–Maple Ridge Marc Dalton Pitt Meadows–Maple Ridge Marc Dalton Esquimalt–Saanich–Sooke Randall Garrison Esquimalt–Saanich–Sooke -

Amended Complaint

Case 3:18-md-02843-VC Document 257 Filed 02/22/19 Page 1 of 424 Lesley E. Weaver (SBN 191305) Derek W. Loeser (admitted pro hac vice) BLEICHMAR FONTI & AULD LLP KELLER ROHRBACK L.L.P. 555 12th Street, Suite 1600 1201 Third Avenue, Suite 3200 Oakland, CA 94607 Seattle, WA 98101 Tel.: (415) 445-4003 Tel.: (206) 623-1900 Fax: (415) 445-4020 Fax: (206) 623-3384 [email protected] [email protected] Plaintiffs’ Co-Lead Counsel Additional counsel listed on signature page UNITED STATES DISTRICT COURT NORTHERN DISTRICT OF CALIFORNIA IN RE: FACEBOOK, INC. CONSUMER MDL No. 2843 PRIVACY USER PROFILE LITIGATION Case No. 18-md-02843-VC This document relates to: FIRST AMENDED CONSOLIDATED COMPLAINT ALL ACTIONS Judge: Hon. Vince Chhabria
TABLE OF CONTENTS
I. INTRODUCTION
II. JURISDICTION, VENUE, AND CHOICE OF LAW
III. PARTIES
A. Plaintiffs
B. Defendants and Co-Conspirators
1. Prioritized Defendant and Doe Defendants: -

The Pennsylvania State University Schreyer Honors College

THE PENNSYLVANIA STATE UNIVERSITY SCHREYER HONORS COLLEGE DEPARTMENT OF ENGLISH FEAR OF WORKING-CLASS AGENCY IN THE VICTORIAN INDUSTRIAL NOVEL ADAM BIVENS SPRING 2018 A thesis submitted in partial fulfillment of the requirements for a baccalaureate degree in English with honors in English Reviewed and approved* by the following: Elizabeth Womack Assistant Professor of English Thesis Supervisor Paul deGategno Professor of English Honors Adviser * Signatures are on file in the Schreyer Honors College. i ABSTRACT This work examines the Victorian Industrial novel as a genre of literature that reflects the middle-class biases of influential authors like Charles Dickens and Elizabeth Gaskell, who cater to middle-class readers by simultaneously sympathizing with the poor and admonishing any efforts of the working class to express political agency that challenge the social order. As such, the Victorian Industrial novel routinely depicts trade unionism in a negative light as an ineffective means to secure socioeconomic gains that is often led by charismatic demagogues who manipulate naïve working people to engage in violent practices with the purpose of intimidating workers. The Victorian Industrial novel also acts as an agent of reactionary politics, reinforcing fears of mob violence and the looming threat of revolutionary uprising in England as had occurred throughout Europe in 1848. The novels display a stubborn refusal to link social ills to their material causes, opting instead to endorse temporary and idealist solutions like paternalism, liberal reformism, and marriage between class members as panaceæ for class antagonisms, thereby decontextualizing the root of the problem through the implication that all poor relations between the worker and employer, the proletariat and the bourgeoisie, can be attributed to a breakdown in communication and understanding.