Elections Performance Index Methodology

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Guide to Provisional Ballots FALL 2020

Guide to Provisional Ballots FALL 2020 Before the Polls Open on Election Day, Review Supplemental List and Mark the Poll Book Poll books are printed 2 weeks before the election The Friday before Election Day, SURE reports are created; these supplemental lists show voters who requested or returned a ballot through the mail o The supplemental list may be someone who applies for a mail-in ballot but shows up to vote in person (“I’ve applied for but not yet received my mail-in ballot.”) Review the supplemental list of names and place a RED DOT next to the voter’s name in the poll book if their name appears on the supplemental list They have to either vote provisionally or get a replacement ballot 1 Guide to Provisional Ballots – FALL 2020 Determine Voter Eligibility Look at your poll book for a name with a red dot and review the supplemental list to find out if voter is: a) registered to vote and b) trying to vote at the correct location If voter is registered in another district, direct voter to vote in that district If voter is unwilling or unable to go to the proper polling place, a provisional ballot may be issued o Pennsylvania counties are prohibited from counting a provisional ballot cast by a voter registered in another county DO NOT allow voter to sign the poll book or Numbered List of Voters If the voter’s name does not appear in your poll book or supplemental list and you can’t confirm voter registration status by looking up the voter’s registration on https://www.votespa.com, call the Election Office at 610-891-4659 to find out whether the person is registered or not and in which district the person is registered 2 Guide to Provisional Ballots – FALL 2020 When to Issue a Provisional Ballot (When in doubt, fill one out!) Voter has requested (but not returned) If the voter wants to vote by a mail-in or absentee ballot regular ballot, they must surrender the mail-in or o In this instance, the voter may ONLY absentee ballot they previously vote provisionally in the polling place received. -

Black Box Voting Ballot Tampering in the 21St Century

This free internet version is available at www.BlackBoxVoting.org Black Box Voting — © 2004 Bev Harris Rights reserved to Talion Publishing/ Black Box Voting ISBN 1-890916-90-0. You can purchase copies of this book at www.Amazon.com. Black Box Voting Ballot Tampering in the 21st Century By Bev Harris Talion Publishing / Black Box Voting This free internet version is available at www.BlackBoxVoting.org Contents © 2004 by Bev Harris ISBN 1-890916-90-0 Jan. 2004 All rights reserved. No part of this book may be reproduced in any form whatsoever except as provided for by U.S. copyright law. For information on this book and the investigation into the voting machine industry, please go to: www.blackboxvoting.org Black Box Voting 330 SW 43rd St PMB K-547 • Renton, WA • 98055 Fax: 425-228-3965 • [email protected] • Tel. 425-228-7131 This free internet version is available at www.BlackBoxVoting.org Black Box Voting © 2004 Bev Harris • ISBN 1-890916-90-0 Dedication First of all, thank you Lord. I dedicate this work to my husband, Sonny, my rock and my mentor, who tolerated being ignored and bored and galled by this thing every day for a year, and without fail, stood fast with affection and support and encouragement. He must be nuts. And to my father, who fought and took a hit in Germany, who lived through Hitler and saw first-hand what can happen when a country gets suckered out of democracy. And to my sweet mother, whose an- cestors hosted a stop on the Underground Railroad, who gets that disapproving look on her face when people don’t do the right thing. -

Millions to the Polls

Millions to the Polls PRACTICAL POLICIES TO FULFILL THE FREEDOM TO VOTE FOR ALL AMERICANS PROVISIONAL BALLOTING j. mijin cha & liz kennedy PROVISIONAL BALLOTING • Provisional ballots are not counted as regular ballots and should be used in only very limited situations. • Provisional ballots cast solely because an eligible voter voted in the wrong precinct or polling place should be counted as a regular ballot for any office for which the voter was eligible to vote. • Adopting Same Day Registration would substantially decrease the need for provisional ballots because eligible voters can simply re-register if there are registration issues. he scenario occurs regularly on Election Day: a voter will show up at the polling place only to find that his or her name is not on the voting rolls. Sometimes an incomplete registration form is to blame. Other times, people have moved since registering and Tmay show up at the wrong polling place. But in many cases, processing errors by election administrators, overly aggressive purging procedures, or other mistakes outside of the voter’s control result in the voter being mistakenly left off the voting rolls. Under the Help America Vote Act of 2002 (HAVA), voters whose names cannot be found on the voter rolls on Election Day or whose eligibility is challenged must be provided a provisional ballot.1 These provisional votes are subsequently counted if local election officials are able to verify, by a set deadline, that the individual is a legitimate voter under state law.2 The Presidential Commission on Election Administra- tion found that “high rates of provisional balloting can . -

Vote Center Training

8/17/2021 Vote Center Training 1 Game Plan for This Section • Component is Missing • Paper Jam • Problems with the V-Drive • Voter Status • Signature Mismatch • Fleeing Voters • Handling Emergencies 2 1 8/17/2021 Component Is Missing • When the machine boots up in the morning, you might find that one of the components is Missing. • Most likely, this is a connectivity problem between the Verity equipment and the Oki printer. • Shut down the machine. • Before you turn it back on, make sure that everything is plugged in. • Confirm that the Oki printer is full of ballot stock and not in sleep mode. • Once everything looks connected and stocked, turn the Verity equipment back on. 3 Paper Jam • Verify whether the ballot was counted—if it was, the screen will show an American flag. If not, you will see an error message. • Call the Elections Office—you will have to break the Red Sticker Seal on the front door of the black ballot box. • Release the Scanner and then tilt it up so that the voter can see the underside of the machine. Do not look at or touch the ballot unless the voter asks you to do so. • Ask the voter to carefully remove the ballot from the bottom of the Scanner, using both hands. • If the ballot was not counted, ask the voter to check for any rips or tears on the ballot. If there are none, then the voter may re-scan the ballot. • If the ballot is damaged beyond repair, spoil that ballot and re-issue the voter a new ballot. -

NOTICE to PROVISIONAL VOTER (For Provisional Voter That Did Not (1) Present an Acceptable Form of Photo ID and (2) Complete a Reasonable Impediment Declaration)

7‐15c Prescribed by Secretary of State Sections 63.001(g), Texas Election Code 1/2018 NOTICE TO PROVISIONAL VOTER (For provisional voter that did not (1) present an acceptable form of photo ID and (2) complete a reasonable impediment declaration) A determination whether your ballot will be counted will be made by the early voting ballot board after the election. A notice will be mailed to you within 30 days of the election at the address you provided on your affidavit to vote a provisional ballot indicating either (1) that your ballot was counted or (2) if it was not counted, the reason your ballot was not counted. Voter must appear before Voter Registrar If you are voting in the correct precinct, in order to have your provisional ballot by: accepted, you will be required to visit your local county voter registrar’s office (information below) within six days of the date of the election to either present one of the below forms of photo ID OR if you do not possess and cannot reasonably obtain one of the below forms of photo ID, execute a Reasonable Impediment Declaration and present one of the below forms of supporting ID OR submit one of the temporary __________________________________ forms addressed below (e.g., religious objection or natural disaster exemption) in the Date presence of the county voter registrar OR submit the paperwork required to obtain a permanent disability exemption. The process can be expedited by taking this notice with you to the county voter registrar at the time you present your acceptable form of photo identification (or if you do not possess and cannot reasonably obtain one of the below acceptable forms of photo ID, execute your Reasonable Impediment Declaration and present one of the below forms of supporting ID, or execute your temporary affidavit or provide your paperwork for your permanent exemption); however, taking this notice is not a requirement. -

Fresh Perspectives NCDOT, State Parks to Coordinate on Pedestrian, Bike Bridge For

Starts Tonight Poems Galore •SCHS opens softball play- offs with lop-sided victory Today’s issue includes over Red Springs. •Hornets the winners and win- sweep Jiggs Powers Tour- ning poems of the A.R. nament baseball, softball Ammons Poetry Con- championships. test. See page 1-C. Sports See page 3-A See page 1-B. ThePublished News since 1890 every Monday and Thursday Reporterfor the County of Columbus and her people. Thursday, May 12, 2016 Fresh perspectives County school Volume 125, Number 91 consolidation, Whiteville, North Carolina 75 Cents district merger talks emerge at Inside county meeting 3-A By NICOLE CARTRETTE News Editor •Top teacher pro- motes reading, paren- Columbus County school officials are ex- tal involvement. pected to ask Columbus County Commission- ers Monday to endorse a $70 million plan to consolidate seven schools into three. 4-A The comprehensive study drafted by Szotak •Long-delayed Design of Chapel Hill was among top discus- murder trial sions at the Columbus County Board of Com- set to begin here missioners annual planning session held at Southeastern Community College Tuesday Monday. night. While jobs and economic development, implementation of an additional phase of a Next Issue county salary study, wellness and recreation talks and expansion of natural gas, water and sewer were among topics discussed, the board spent a good portion of the four-hour session talking about school construction. No plans The commissioners tentatively agreed that they had no plans to take action on the propos- al Monday night and hinted at wanting more details about coming to an agreement with Photo by GRANT MERRITT the school board about funding the proposal. -

Bill Analysis Lynda J

Ohio Legislative Service Commission Bill Analysis Lynda J. Jacobsen Am. Sub. S.B. 148 129th General Assembly (As Passed by the Senate) Sens. Wagoner, Hite, Bacon, Beagle, Coley, Daniels, Faber, Jones, Jordan, Lehner, Manning, Niehaus, Widener TABLE OF CONTENTS Election administration ............................................................................................................... 3 Documentation for voters with a former address on their ID ................................................... 3 Contracts for the provision of election services ...................................................................... 3 Bulk purchase of election supplies ......................................................................................... 3 Bid threshold for ballots and election supplies ........................................................................ 3 Out-of-state ballot printing ...................................................................................................... 4 Use of a voter's full Social Security number instead of last four digits..................................... 4 Number of precinct officials at a special election .................................................................... 4 Polling place accessibility ....................................................................................................... 4 Journalist access to polling places ......................................................................................... 5 Qualifications to circulate an election petition -

Provisional Ballot Processing System

PROVISIONAL BALLOT PROCESSING SYSTEM Maricopa County Elections Department Pew GeekNet MN July 14th, 2012 Maricopa County Profile • 1,869,666 Active Voters (2,094,176 with Inactives) • 38% Republican • 34% Party Not Designated • 28% Democrat • (Less than 1% Green & Libertarian) • Voting Rights Act Coverage: • Section 203: Spanish & Tohono O’odham • Section 4f4: Spanish • Section 5 Preclearance • Conduct elections for all jurisdictions with exception of the City of Phoenix. • Blended system of optical scan & DREs • >½ Voters on Permanent Early Voting Why so many in AZ?? Volume of provisional ballots has been called the “canary in the coalmine” – but what is being signaled? Administrative issues? Lack of list maintenance? Insufficient or inconvenient registration methods? Definition of what is a “provisional”? Or, just maybe, human nature to procrastinate &/or not read instructions? Background to Provisional Ballots In Arizona we have had a form of provisional voting for years—going back to before we were a state. Previously called “Ballots To Be Verified” and “Question Ballots”, voters whose name did not appear in the Signature Roster were still able to cast their vote and have it count after it was determined that they were eligible. Historically the most common reason this occurs is due to voters not maintaining a current voter registration when they move. Changes in Trends With the implementation of a Permanent Early Voting List (PEVL) we have seen a shift in Provisional voting. Previously on average 1% of the voters who had requested an early ballot went to the polls and voted a provisional. After PEVL, that has tripled to 3% (in some cases quadrupling the actual number). -

Absentee Voting and Vote by Mail

CHapteR 7 ABSENTEE VOTING AND VOTE BY MAIL Introduction some States the request is valid for one or more years. In other States, an application must be com- Ballots are cast by mail in every State, however, the pleted and submitted for each election. management of absentee voting and vote by mail varies throughout the nation, based on State law. Vote by Mail—all votes are cast by mail. Currently, There are many similarities between the two since Oregon is the only vote by mail State; however, both involve transmitting paper ballots to voters and several States allow all-mail ballot voting options receiving voted ballots at a central election office for ballot initiatives. by a specified date. Many of the internal procedures for preparation and mailing of ballots, ballot recep- Ballot Preparation and Mailing tion, ballot tabulation and security are similar when One of the first steps in preparing to issue ballots applied to all ballots cast by mail. by mail is to determine personnel and facility and The differences relate to State laws, rules and supply needs. regulations that control which voters can request a ballot by mail and specific procedures that must be Facility Needs: followed to request a ballot by mail. Rules for when Adequate secure space for packaging the outgoing ballot requests must be received, when ballots are ballot envelopes should be reviewed prior to every mailed to voters, and when voted ballots must be election, based on the expected quantity of ballots returned to the election official—all defer according to be processed. Depending upon the number of ballot to State law. -

Report on Provisional Ballots and American Elections

REPORT ON PROVISIONAL BALLOTS AND AMERICAN ELECTIONS Prepared by Professor Daron Shaw University of Texas at Austin Professor Vincent Hutchings University of Michigan For the Presidential Commission on Election Administration June 21, 2013 Overview Both empirical and anecdotal data indicate that the use of provisional ballots in U.S. elections is a mixed bag. On the one hand, providing voters whose eligibility is unclear with an opportunity to cast a provisional ballot might prevent many voters from being disenfranchised. Indeed, the evidence from several states (for example, in California provisional ballots were estimated to be 5.8% of all ballots cast in 2008) indicates that the incidence of disputed eligibility can be quite substantial, and that provisional balloting options are substantively important. On the other hand, those states with provisional balloting systems may be less likely to seek to improve their registration, voter list, and election administration procedures, as provisional ballots provide a “fail-safe” option. We assume that the goal here is to (a) reduce incidences in which voter eligibility is at issue, and (b) provide an opportunity for all eligible voters to participate. In light of these goals, we recommend the best practices identified in the U.S. Election Assistance Commission’s 2010 report on provisional voting: improving voter outreach/ communication, adding to staff and poll worker training, encouraging more consistent and comprehensive Election Day management procedures, and strengthening procedures for offering and counting provisional ballots as well as upgrading post-election statistical 1 systems. These recommendations respect differences between and amongst the states, but provide a pathway for achieving consistency within states and improving voting procedures across the board. -

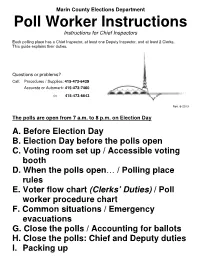

Poll Worker Instructions Instructions for Chief Inspectors

Marin County Elections Department Poll Worker Instructions Instructions for Chief Inspectors Each polling place has a Chief Inspector, at least one Deputy Inspector, and at least 2 Clerks. This guide explains their duties. Questions or problems? Call: Procedures / Supplies: 415-473-6439 Accuvote or Automark: 415-473-7460 Or 415-473-6643 Rev. 6-2013 The polls are open from 7 a.m. to 8 p.m. on Election Day A. Before Election Day B. Election Day before the polls open C. Voting room set up / Accessible voting booth D. When the polls open … / Polling place rules E. Voter flow chart (Clerks’ Duties) / Poll worker procedure chart F. Common situations / Emergency evacuations G. Close the polls / Accounting for ballots H. Close the polls: Chief and Deputy duties I. Packing up A. Before Election Day – Chief Inspector Duties ♣ Pick up a red bag at the training class. Do not open the sealed This bag contains: a black Accuvote bag, a polling place portion of the Accuvote accessibility supply bag, a Vote by Mail Ballot Box, and other bag until Election Day. supplies you will need. ♣ Use the inventory list in the red bag to make sure your red bag has all the supplies you need. ♣ Call your polling place contact (listed on your supply receipt) to make sure you can get into the polling place on Election Day by 6:30 a.m., or earlier. Important! Take this person’s contact info with you on Election Day in case you have any problems getting in. ♣ Call your Deputy Inspector(s) to tell them what time to meet you at the polling place on Election Day. -

Vietnamese Glossary of Election Terms.Pdf

U.S. ELECTION AssISTANCE COMMIssION ỦY BAN TRợ GIÚP TUYểN Cử HOA Kỳ 2008 GLOSSARY OF KEY ELECTION TERMINOLOGY Vietnamese BảN CHÚ GIảI CÁC THUậT Ngữ CHÍNH Về TUYểN Cử Tiếng Việt U.S. ELECTION AssISTANCE COMMIssION ỦY BAN TRợ GIÚP TUYểN Cử HOA Kỳ 2008 GLOSSARY OF KEY ELECTION TERMINOLOGY Vietnamese Bản CHÚ GIải CÁC THUậT Ngữ CHÍNH về Tuyển Cử Tiếng Việt Published 2008 U.S. Election Assistance Commission 1225 New York Avenue, NW Suite 1100 Washington, DC 20005 Glossary of key election terminology / Bản CHÚ GIải CÁC THUậT Ngữ CHÍNH về TUyển Cử Contents Background.............................................................1 Process.................................................................2 How to use this glossary ..................................................3 Pronunciation Guide for Key Terms ........................................3 Comments..............................................................4 About EAC .............................................................4 English to Vietnamese ....................................................9 Vietnamese to English ...................................................82 Contents Bối Cảnh ...............................................................5 Quá Trình ..............................................................6 Cách Dùng Cẩm Nang Giải Thuật Ngữ Này...................................7 Các Lời Bình Luận .......................................................7 Về Eac .................................................................7 Tiếng Anh – Tiếng Việt ...................................................9